Author: Denis Avetisyan

Researchers have developed a system where a dual-arm robot, guided by artificial intelligence, can accurately match fabric textures without any real-world training data.

This work presents a Transformer-based visual servoing and dual-arm impedance control framework for robust, zero-shot fabric texture alignment achieved through sim-to-real transfer.

Achieving robust and adaptable fabric manipulation remains a challenge for robotic systems despite advances in vision and control. This paper introduces ‘Transformer Driven Visual Servoing and Dual Arm Impedance Control for Fabric Texture Matching’, a novel approach to aligning fabric pieces using a dual-arm manipulator and a Transformer-based visual servoing system. By leveraging synthetic data for training, the system achieves zero-shot texture-based alignment in real-world scenarios, simultaneously controlling fabric pose and applying tension for flatness. Could this sim-to-real transfer strategy unlock more versatile and autonomous textile handling capabilities in industrial and domestic settings?

The Challenge of Material Variability

Robotic systems designed to handle fabrics encounter significant obstacles due to the inherent variability of these materials and the environments in which they operate. Unlike rigid objects with predictable properties, fabrics exhibit complex behaviors – draping, stretching, and deforming in response to even slight forces – and their appearance changes dramatically with shifts in lighting and texture. This inconsistency presents a major challenge for computer vision systems attempting to identify grasping points or track fabric movements, leading to unreliable performance. Traditional robotic control strategies, often reliant on precise pre-programmed movements or assumptions about material uniformity, struggle to adapt to these unpredictable conditions, effectively hindering the automation of tasks like folding, sorting, or garment assembly. Consequently, achieving robust and reliable fabric manipulation requires overcoming these sensitivities to both material characteristics and external environmental factors.

Reliable manipulation of fabrics by robotic systems hinges on the convergence of sophisticated visual perception and adaptable control strategies. Current approaches often falter because fabrics lack rigid structure, presenting an infinite number of possible configurations and requiring systems to ‘understand’ material deformation in real-time. Consequently, researchers are developing vision systems capable of discerning subtle textural cues and accurately estimating fabric pose, even under varying lighting and complex backgrounds. These perceptual inputs then drive control algorithms that move beyond pre-programmed motions, instead employing techniques like reinforcement learning and model predictive control to dynamically adjust grasping and positioning actions. This allows robots to respond to unpredictable fabric behavior, compensate for inaccuracies, and ultimately achieve the dexterity needed for tasks like folding, draping, and sewing – pushing the boundaries of automated textile handling.

Many existing robotic fabric manipulation systems are hampered by a reliance on painstakingly defined, pre-programmed movements or the need for frequent and extensive recalibration. This approach proves problematic as even slight variations in fabric type, drape, or lighting conditions can throw off the robot’s precision, demanding constant human intervention to adjust the system. Consequently, these methods struggle with scalability – adapting to different fabrics or production volumes requires significant re-engineering and setup time. The inflexibility limits their practical application in dynamic environments, such as clothing manufacturing or automated laundry, where material properties and configurations are rarely consistent, and a truly adaptable robotic solution remains a considerable challenge.

Successfully manipulating fabrics with robotic systems is especially difficult when the materials must be aligned by texture. Unlike rigid objects with defined shapes, fabrics lack consistent geometric features, so systems must move beyond simple shape recognition. Accurate alignment requires discerning subtle visual cues, such as variations in weave, thread density, and surface patterns, and these cues are easily distorted by lighting changes or fabric deformation. This calls for algorithms capable of robust feature extraction and control strategies that can compensate for the material's inherent pliability. The precision needed extends beyond simply matching patterns. Robotic systems must also account for the fabric's tendency to stretch, wrinkle, and slip, which requires a delicate balance of force and control to achieve reliable and repeatable manipulation.
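This work does not use FFT phase correlation. It is shown here only as a point of comparison: a classical way to estimate the in-plane shift between two views of the same texture. The minimal NumPy sketch below assumes a pure, periodic translation with no rotation, stretch, or lighting change. Real fabric violates exactly those assumptions, which is what motivates a learned model. The function name `estimate_shift` is hypothetical.

```python
import numpy as np


def estimate_shift(reference: np.ndarray, moved: np.ndarray) -> tuple[int, int]:
    """Estimate the integer (row, col) shift such that
    moved ~= np.roll(reference, shift, axis=(0, 1)), via phase correlation."""
    f_ref = np.fft.fft2(reference)
    f_mov = np.fft.fft2(moved)
    # Normalized cross-power spectrum: keep only the phase, discard magnitude,
    # so the correlation peak is sharp regardless of texture contrast.
    cross = f_mov * np.conj(f_ref)
    cross /= np.abs(cross) + 1e-12
    corr = np.fft.ifft2(cross).real
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    # Convert wrap-around peak indices into signed shifts.
    shift = [int(p) - n if p > n // 2 else int(p) for p, n in zip(peak, corr.shape)]
    return shift[0], shift[1]


rng = np.random.default_rng(0)
texture = rng.random((64, 64))                  # synthetic stand-in for a fabric texture
shifted = np.roll(texture, (5, -7), axis=(0, 1))
print(estimate_shift(texture, shifted))
```

The method recovers the shift exactly for an ideal periodic translation. Its brittleness under deformation and illumination change is the gap that the learned approach described below is meant to close.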

Vision-Guided Control: A Dynamic Response to Complexity

Visual servoing is a closed-loop control technique where visual feedback is used to guide a robot’s motion. Unlike traditional methods relying on precise kinematic models, visual servoing directly uses image features extracted from a camera to calculate control commands. The system continuously analyzes the visual input, calculates the error between the current and desired pose based on these features, and adjusts the robot’s actuators accordingly. This approach allows for adaptation to uncertainties in the robot’s calibration, environment, and object pose, making it suitable for tasks requiring high precision and robustness where accurate modeling is difficult or impractical. The control loop continues until the visual error is minimized, achieving the desired pose.
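The loop described above can be sketched as a proportional, position-based servo. At each step a vision model reports the planar offset between the current and target fabric pose. The robot then moves a fraction of that offset, repeating until the residual falls below a tolerance. In this minimal Python sketch, `estimate_offset` is a hypothetical stand-in for the learned estimator: it simply computes the true offset plus optional noise. The gain, the tolerance, and the (x, y, θ) pose parameterization are assumptions, not values from the paper.

```python
import numpy as np


def estimate_offset(pose, target, rng=None, noise_std=0.0):
    """Hypothetical stand-in for the vision model: planar offset
    (dx, dy, dtheta) from the current pose to the target pose."""
    err = np.asarray(target, dtype=float) - np.asarray(pose, dtype=float)
    err[2] = (err[2] + np.pi) % (2 * np.pi) - np.pi  # wrap angle to [-pi, pi)
    if rng is not None and noise_std > 0:
        err = err + rng.normal(0.0, noise_std, size=3)  # simulated perception noise
    return err


def visual_servo(pose, target, gain=0.5, tol=1e-4, max_iters=200,
                 noise_std=0.0, seed=0):
    """Proportional servo loop: command = gain * visual error.
    Returns (final_pose, iterations_used)."""
    rng = np.random.default_rng(seed)
    pose = np.asarray(pose, dtype=float).copy()
    for i in range(max_iters):
        err = estimate_offset(pose, target, rng, noise_std)
        if np.linalg.norm(err) < tol:      # visual error minimized: stop
            return pose, i
        pose += gain * err                 # move a fraction of the way each step
    return pose, max_iters


final_pose, iters = visual_servo([10.0, -5.0, 0.3], [0.0, 0.0, 0.0])
print(np.round(final_pose, 4), iters)
```

With a gain of 0.5 the error halves on every iteration. With simulated noise (`noise_std > 0`), the tolerance has to be loosened to roughly the noise level, or the loop simply runs to `max_iters`.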

The visual servoing system utilizes a Transformer architecture, a neural network design that departs from traditional convolutional approaches by employing self-attention mechanisms. This allows the network to weigh the importance of different image regions when processing visual data, effectively capturing long-range dependencies that are crucial for understanding complex scenes. Unlike convolutional networks which primarily focus on local features, Transformers can model relationships between distant pixels, improving robustness to occlusion and variations in appearance. The self-attention layers compute a weighted sum of the input features, where the weights are determined by the similarity between different parts of the image. This process enables the network to robustly interpret visual data and accurately estimate the robot’s pose relative to the target fabric.
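The weighted-sum computation described above is standard scaled dot-product self-attention. The NumPy sketch below works in four steps:

- It splits a grayscale image into non-overlapping patches, one token per patch, as in a Vision Transformer.
- It projects the tokens with random matrices.
- It computes pairwise patch similarities.
- It lets every patch aggregate information from every other patch.

The patch size, embedding width, and random weights are illustrative assumptions. The paper's actual architecture, positional encoding, and multi-head configuration are not reproduced.

```python
import numpy as np


def patchify(image, patch):
    """Split an (H, W) image into flattened, non-overlapping patch tokens."""
    h, w = image.shape
    assert h % patch == 0 and w % patch == 0, "image must tile evenly"
    return (image.reshape(h // patch, patch, w // patch, patch)
                 .transpose(0, 2, 1, 3)
                 .reshape(-1, patch * patch))


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.
    Returns (outputs, attention_weights)."""
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])  # pairwise similarity of all patches
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ v, weights              # weighted sum of value vectors


rng = np.random.default_rng(0)
image = rng.random((32, 32))                 # stand-in for a fabric image
tokens = patchify(image, patch=8)            # 16 tokens of length 64
d_model = 16
w_q, w_k, w_v = (rng.normal(0, 0.1, (64, d_model)) for _ in range(3))
out, attn = self_attention(tokens, w_q, w_k, w_v)
print(tokens.shape, out.shape, attn.shape)   # (16, 64) (16, 16) (16, 16)
```

Because `attn` is a full 16 × 16 matrix, a patch in one corner can draw directly on a patch in the opposite corner. This is the long-range interaction that a small convolution kernel cannot capture in a single layer.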

The visual servoing network is trained exclusively with synthetically generated data to maximize robustness and generalization capability. This training data is produced through physics-based simulation, allowing for precise control over variables such as fabric texture, color, weave density, and illumination conditions. The simulation environment generates a diverse dataset encompassing a wide range of fabric properties and lighting scenarios, including variations in specular and diffuse reflectance. By training on this synthetic data, the network learns to extract robust visual features independent of specific environmental conditions or fabric characteristics, ultimately improving performance in real-world deployments with previously unseen materials and lighting.
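Generating a dataset like this typically amounts to domain randomization. Each synthetic scene draws its appearance and lighting parameters at random, together with a known fabric offset that serves as the training label. The Python sketch below covers only the sampling step, and the rendering and cloth-physics stages are omitted. The parameter names, the ranges, and the `SceneParams` structure are all hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneParams:
    """Hypothetical parameters for one randomized synthetic scene."""
    texture_id: int
    weave_density: float       # threads per mm (assumed range)
    hue_shift_deg: float
    light_intensity: float     # relative to a nominal light
    light_azimuth_deg: float
    specular_weight: float     # 0 = purely diffuse, 1 = purely specular
    offset: tuple              # label: (dx_mm, dy_mm, dtheta_deg)


def sample_scene(rng: random.Random, texture_pool: list) -> SceneParams:
    """Draw one scene; every field is independently randomized."""
    return SceneParams(
        texture_id=rng.choice(texture_pool),
        weave_density=rng.uniform(1.0, 6.0),
        hue_shift_deg=rng.uniform(-180.0, 180.0),
        light_intensity=rng.uniform(0.3, 1.5),
        light_azimuth_deg=rng.uniform(0.0, 360.0),
        specular_weight=rng.uniform(0.0, 0.6),
        offset=(rng.uniform(-5.0, 5.0), rng.uniform(-5.0, 5.0),
                rng.uniform(-10.0, 10.0)),
    )


# A seeded generator makes the dataset reproducible.
rng = random.Random(42)
dataset = [sample_scene(rng, texture_pool=list(range(100))) for _ in range(1000)]
```

The design choice is deliberate: the ranges are intentionally wider than any single real setting. The network then sees real-world conditions as just another sample from the training distribution.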

The system achieves zero-shot generalization by training only on a comprehensive synthetic dataset that spans diverse fabric characteristics and illumination scenarios. As a result, no re-training is needed when it encounters novel fabric types. Evaluated on previously unseen fabrics, it reaches a mean positioning error of 0.106 mm. This accuracy indicates that the network transfers its learned visual features to new materials without material-specific adaptation.
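One way to check a zero-shot claim of this kind in simulation is to split the data by texture rather than by image. That way, no texture used in evaluation ever appears during training. The sketch below is a hypothetical illustration of that protocol; the function names and the 20% held-out fraction are assumptions. In the work described here, the unseen fabrics are evaluated in the real world.

```python
import random


def texture_level_split(texture_ids, held_out_fraction=0.2, seed=0):
    """Partition distinct texture IDs into disjoint (train, held_out) sets."""
    ids = sorted(set(texture_ids))
    rng = random.Random(seed)
    rng.shuffle(ids)
    n_test = max(1, round(len(ids) * held_out_fraction))
    return set(ids[n_test:]), set(ids[:n_test])


def filter_samples(samples, allowed_ids):
    """Keep (texture_id, payload) samples whose texture is in allowed_ids."""
    return [s for s in samples if s[0] in allowed_ids]


train_ids, test_ids = texture_level_split(range(100))
assert train_ids.isdisjoint(test_ids)  # no texture leaks into evaluation
```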

A Dual-Arm Platform: Coordinated Manipulation for Precision

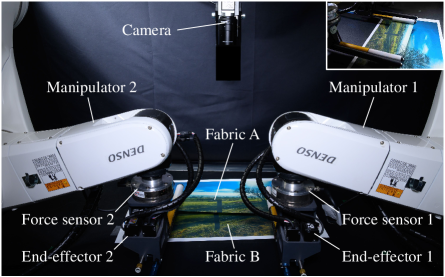

The robotic system employs a dual-arm manipulator featuring a specifically designed roll-up end-effector. This end-effector is engineered to reliably grasp and roll fabric edges, facilitating automated handling processes. The dual-arm configuration allows for coordinated manipulation, enabling complex maneuvers and improved efficiency compared to single-arm systems. The end-effector’s design prioritizes a secure grip while minimizing potential damage to the fabric, achieved through optimized contact geometry and material selection. This approach ensures consistent and repeatable performance across a range of fabric types and thicknesses.

The robotic platform incorporates force sensors directly into each end-effector to provide real-time feedback during fabric manipulation. These sensors measure the interaction forces between the end-effector and the material, enabling a closed-loop control system that dynamically adjusts grasping and rolling actions. This precise force control is crucial for handling delicate fabrics, preventing tearing or deformation that could occur with fixed-force or open-loop systems. The sensor data allows for consistent application of appropriate forces, maintaining secure grip while minimizing the risk of damage, and facilitating reliable handling of materials with varying textures and fragility.

Dual-Arm Impedance Control actively regulates the contact forces the manipulators exert during fabric handling. Rather than tracking position rigidly, this strategy prescribes a desired relationship between force and position. The system can therefore yield to external disturbances while maintaining consistent contact with the fabric. Tuning the stiffness and damping parameters sets the trade-off between tracking the desired trajectory and complying with the material. This keeps applied forces within safe limits, avoiding unwanted deformation or damage, while still applying enough tension to keep the fabric flat. The result is stable manipulation despite variations in fabric thickness, texture, and pose, with consistent flatness and reliable performance throughout the process.
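The force-position relationship described above can be illustrated with a one-axis impedance controller. The arm behaves like a virtual mass-spring-damper, pulling against fabric modeled as a linear spring that only resists stretching. The Python sketch below makes several simplifying assumptions that do not come from the paper:

- One arm moves while the other holds the fabric still.
- All gains and the fabric stiffness are invented values.
- Dynamics are integrated with semi-implicit Euler.

The sketch also shows how the setpoint can be placed past the fabric's slack length so that the steady-state tension equals a chosen value.

```python
def setpoint_for_tension(tension, K, k_fabric, x_slack=0.0):
    """Impedance setpoint giving the desired steady-state tension.
    At rest K*(x_d - x) = k_fabric*(x - x_slack), which solves to this x_d."""
    return x_slack + tension * (K + k_fabric) / (K * k_fabric)


def simulate_impedance(x_d, K=500.0, D=60.0, M=2.0, k_fabric=2000.0,
                       x_slack=0.0, dt=1e-3, steps=5000):
    """Simulate one arm under impedance control pulling on a fabric spring.
    Returns (final_position_m, final_tension_N)."""
    x, v = 0.0, 0.0
    for _ in range(steps):
        stretch = max(0.0, x - x_slack)      # slack fabric exerts no force
        f_fabric = k_fabric * stretch        # fabric pulls back on the arm
        # Impedance law: virtual spring K toward x_d, virtual damper D on velocity.
        a = (K * (x_d - x) - D * v - f_fabric) / M
        v += a * dt                          # semi-implicit Euler integration
        x += v * dt
    return x, k_fabric * max(0.0, x - x_slack)


x_d = setpoint_for_tension(10.0, K=500.0, k_fabric=2000.0)  # target 10 N
x_final, tension = simulate_impedance(x_d)
print(f"setpoint {x_d * 1000:.1f} mm -> tension {tension:.2f} N")
```

Raising `K` makes the arm hold its setpoint more stiffly. Lowering it makes the arm more compliant, so reaching the same tension requires a larger setpoint offset. A symmetric dual-arm version would give each arm its own outward setpoint.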

Alignment accuracy is quantified using an image-based cost function that measures the discrepancy between the current and target visual states of the fabric. Across 30 experimental trials with fabrics of varied textures, the system achieved a mean positioning error of 0.106 mm with a standard deviation of 0.043 mm. This indicates consistent performance across the tested material set and supports the robustness of the image-based alignment method.
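The exact cost function is not reproduced here. As a hedged illustration, one simple image-based cost is the mean squared difference between the current and target images after each is normalized to zero mean and unit variance. This normalization makes the cost insensitive to uniform brightness and contrast changes. The sketch below pairs that hypothetical `image_cost` with a helper that summarizes per-trial errors the way results like the above are reported: sample mean, sample standard deviation, and maximum. The error values in the example are made up for illustration and are not the paper's data.

```python
import numpy as np


def image_cost(current, target, eps=1e-8):
    """Hypothetical alignment cost: MSE between standardized grayscale images.
    Near zero when the images match up to a brightness/contrast change."""
    def standardize(img):
        img = np.asarray(img, dtype=float)
        return (img - img.mean()) / (img.std() + eps)
    return float(np.mean((standardize(current) - standardize(target)) ** 2))


def summarize(errors_mm):
    """Summary statistics for per-trial positioning errors in millimetres."""
    e = np.asarray(errors_mm, dtype=float)
    return {"n": int(e.size), "mean": float(e.mean()),
            "std": float(e.std(ddof=1)), "max": float(e.max())}


rng = np.random.default_rng(0)
target = rng.random((32, 32))
print(image_cost(2.0 * target + 0.1, target))          # near 0: lighting change only
print(image_cost(np.roll(target, 3, axis=1), target))  # large: texture misaligned
print(summarize([0.08, 0.11, 0.15, 0.09]))             # illustrative values only
```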

Implications and Future Trajectories in Automated Textile Handling

The development represents a substantial leap forward in robotic fabric manipulation, poised to reshape automation strategies within industries reliant on textiles. Current methods often struggle with the inherent complexity and variability of fabrics, necessitating laborious human intervention and limiting production speed. This new approach circumvents these limitations through a system capable of reliably grasping, positioning, and manipulating diverse fabric types without the need for extensive, task-specific recalibration. The implications extend beyond simply accelerating existing processes; it unlocks the potential for fully automated garment assembly, precision textile cutting, and adaptive manufacturing workflows, promising increased efficiency, reduced labor costs, and greater responsiveness to evolving market demands in apparel and beyond.

A key benefit of this robotic fabric manipulation system is its adaptability, which eliminates the need for laborious recalibration when switching between materials. Traditional robotic systems often require extensive adjustments to accommodate variations in texture, stiffness, and weight, leading to significant downtime and reduced productivity. This approach instead relies on learned visual features and force feedback to adjust its grip and manipulation strategies autonomously, moving between fabrics like silk, denim, and polyester without human intervention. The resulting gain in operational efficiency lowers manufacturing costs and enables more flexible, responsive production. This is particularly valuable in industries that demand rapid prototyping and customized products.

The system exhibits a remarkable capacity for zero-shot generalization, meaning it can adapt to previously unseen fabrics and environmental conditions without requiring any additional training or recalibration. This capability dramatically simplifies deployment in real-world scenarios, which are often characterized by unpredictable variations in lighting, fabric texture, and even the presence of wrinkles or folds. Unlike traditional robotic systems that demand extensive, task-specific programming for each new material or setting, this approach allows for seamless integration into dynamic environments, promising increased efficiency and reduced operational costs across industries like apparel manufacturing and automated textile handling. The ability to function effectively ‘out of the box’ represents a significant step toward truly adaptable and versatile robotic systems.

Across the experiments, the maximum positioning error was only 0.450 mm, showing reliable performance even with difficult, deformable fabrics. This level of precision is a substantial step towards automating complex textile handling tasks. Future work aims to extend the system to more intricate fabric shapes and layering, and to integrate it into broader robotic workflows. The goal is to move beyond isolated manipulation towards fully automated processes, such as garment assembly, in which the system coordinates with other robotic tools to achieve complex manufacturing goals.

The presented research embodies a commitment to systemic understanding, mirroring the belief that structure dictates behavior. This work demonstrates how a carefully designed architecture – a Transformer network coupled with dual-arm impedance control – enables robust fabric manipulation. As Barbara Liskov aptly stated, “It’s one of the dangers of having good ideas that you don’t know when to stop.” This sentiment resonates with the iterative process of refining the visual servoing system; the team didn’t merely address texture alignment but considered the entire interaction loop, achieving successful sim-to-real transfer. The focus on a holistic system, rather than isolated components, is paramount to the project’s success, highlighting the interconnectedness of perception and action.

The Loom and the Algorithm

The demonstrated capacity to transfer fabric manipulation skills from simulation to reality, mediated by a Transformer network, is not merely a technical achievement. It exposes a fundamental tension. Each successful grasp, each aligned edge, is predicated on a meticulously constructed digital proxy – a world simplified to the point of usability. The elegance of this system lies in its abstraction, yet every abstraction incurs a cost. The apparent freedom from the need for real-world training data is, in truth, a dependence on the fidelity of that synthetic representation. Every new dependency is the hidden cost of freedom.

Future work must address the inevitable discrepancies between the simulated and physical worlds. Focus should shift beyond purely visual servoing towards incorporating haptic feedback, not as an addendum, but as an integral element of the control architecture. The system’s current reliance on pre-defined texture features also limits its adaptability. A more robust approach would involve learning texture representations directly from raw sensory data, allowing the manipulator to generalize to novel materials and patterns.

Ultimately, the challenge lies in creating a system that doesn’t simply react to visual input, but understands the material properties of fabric – its drape, its stretch, its inherent instability. Such understanding requires a move away from treating manipulation as a purely geometric problem and towards acknowledging the complex interplay of forces and constraints that govern the behavior of soft materials. The loom, after all, is more than just a mechanism; it’s a conversation between force, material, and design.

Original article: https://arxiv.org/pdf/2511.21203.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2025-11-29 19:49