Author: Denis Avetisyan

Researchers are developing new methods to help artificial intelligence generate clearer, more logically structured explanations of procedural skills, moving beyond simple step-by-step instructions.

A novel symbolic-LLM hybrid architecture improves the causal reasoning and pedagogical alignment of AI-generated explanations for procedural skills using constrained generation and TMK models.

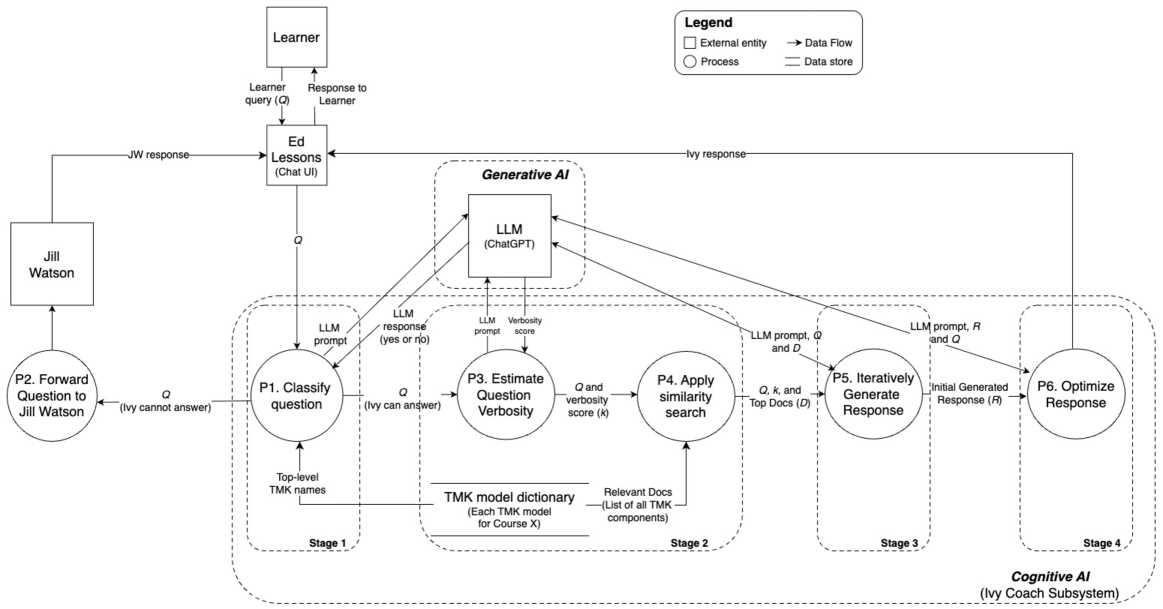

While large language models excel at fluent text generation, they often struggle to convey the underlying causal and compositional logic crucial for effective procedural skill learning. This limitation motivates the research presented in ‘Improving Procedural Skill Explanations via Constrained Generation: A Symbolic-LLM Hybrid Architecture’, which introduces Ivy, a novel AI coaching system. By integrating symbolic Task-Method-Knowledge (TMK) models with a constrained generative LLM, Ivy delivers structured explanations demonstrating improved inferential quality across dimensions of causal reasoning and problem decomposition. Could this hybrid approach unlock more pedagogically sound and scalable AI for educational applications beyond procedural skill instruction?

The Illusion of Understanding: Beyond Pattern Matching

Large language models demonstrate remarkable proficiency in identifying and replicating patterns within data, enabling them to generate text, translate languages, and even compose different kinds of creative content. However, this strength in pattern recognition doesn’t necessarily translate to genuine understanding or the ability to perform robust, step-by-step reasoning. These models frequently falter when confronted with tasks demanding causal inference – determining why something happens, not just that it happens – or complex problem-solving that requires breaking down a challenge into logical, sequential steps. While scaling up model size can improve performance to a degree, it often amplifies existing biases and doesn’t address the fundamental limitation of lacking an internal framework for representing and manipulating knowledge in a truly reasoned manner. This suggests that simply processing more data isn’t enough; a new architectural approach is needed to imbue these models with the capacity for reliable, explainable, and adaptable reasoning.

Current large language models, despite their impressive capabilities, frequently encounter limitations when tackling problems requiring systematic reasoning and understanding of cause-and-effect relationships. Simply increasing the size of these models – a strategy known as scaling – yields diminishing returns because it doesn’t address the fundamental issue: a lack of structured knowledge representation. Researchers posit that true progress demands a departure from purely statistical pattern matching towards architectures that incorporate explicit, symbolic representations of concepts and their relationships. This shift necessitates moving beyond models that primarily associate information to systems that can actively manipulate and reason with knowledge, mirroring the way humans build mental models to solve complex problems and enabling a more robust and generalizable approach to artificial intelligence.

Knowledge as Architecture: The TMK Framework

Task-Method-Knowledge (TMK) models represent skills by structuring them into three core components: tasks, methods, and knowledge. A task defines a specific goal to be achieved. A method outlines the sequence of steps, or sub-tasks, required to accomplish that goal. Finally, knowledge encompasses the information and resources necessary to execute each step within a method. This decomposition allows complex skills to be broken down into manageable, discrete units, facilitating both representation and automated execution. By explicitly defining the relationship between goals, procedures, and information, TMK provides a formalized framework for skill representation that goes beyond simple procedural lists.
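The three-part decomposition above can be sketched as a small data structure. This is an illustrative schema only: the class names, fields, the `missing_knowledge` helper, and the tea-brewing example are all assumptions made for this sketch, not the format used in the paper.

```python
from dataclasses import dataclass, field

# Hypothetical schema: every name here is invented for illustration.

@dataclass
class Knowledge:
    name: str
    description: str

@dataclass
class Method:
    name: str
    steps: list[str]                                    # ordered sub-task names
    requires: list[str] = field(default_factory=list)   # names of Knowledge items

@dataclass
class Task:
    goal: str
    methods: list[Method] = field(default_factory=list)

def missing_knowledge(task: Task, known: dict[str, Knowledge]) -> set[str]:
    """Return knowledge names that the task's methods reference but nobody defined."""
    return {k for m in task.methods for k in m.requires if k not in known}

knowledge = {
    "boiling_point": Knowledge("boiling_point", "Water boils at 100 C at sea level."),
}
brew = Task(
    goal="brew tea",
    methods=[
        Method(
            name="steep",
            steps=["boil water", "add leaves", "wait"],
            requires=["boiling_point", "steep_time"],
        )
    ],
)

print(missing_knowledge(brew, knowledge))  # {'steep_time'}
```

Keeping goals, procedures, and information in separate records is what makes a check like `missing_knowledge` possible; a flat list of instructions would offer nothing to validate against.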

Early Task-Method-Knowledge (TMK) implementations, designated TMK-Basic, focused on representing the desired outcomes of actions (goals) and the methods used to achieve them. However, these initial models were limited in their ability to articulate the reasoning process connecting a method to a goal. Specifically, TMK-Basic lacked a mechanism to formally represent the inferential steps, preconditions, and causal relationships that explain how a method reliably produces a given outcome. This meant that while the system could identify what needed to be done, it couldn’t represent the underlying logic or justify the chosen course of action beyond simply stating the goal-method association.

TMK-Structured builds upon initial Task-Method-Knowledge (TMK) models by incorporating three key enhancements to improve reasoning capabilities. Explicit causal transitions define the relationships between actions and their expected outcomes, allowing the system to understand why a method achieves a task. Goal hierarchies enable the representation of complex tasks as nested sub-goals, facilitating planning at multiple levels of abstraction. Finally, hierarchical decomposition allows methods to be broken down into successively simpler sub-methods, improving both efficiency and the ability to adapt to changing circumstances. These combined features enable more reliable and flexible action planning compared to earlier TMK implementations.
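A minimal sketch of how a goal hierarchy, hierarchical decomposition, and causal transitions might fit together. The `Goal` class, its `effect` field, and the example goals are hypothetical and chosen only to make the three ideas concrete; the paper's actual representation may differ.

```python
from dataclasses import dataclass, field

# Hypothetical representation, for illustration only.

@dataclass
class Goal:
    name: str
    effect: str = ""                                   # what becomes true once achieved
    subgoals: list["Goal"] = field(default_factory=list)

def leaves(goal: Goal) -> list[Goal]:
    """Hierarchical decomposition: expand a goal into primitive sub-goals, in order."""
    if not goal.subgoals:
        return [goal]
    return [leaf for sub in goal.subgoals for leaf in leaves(sub)]

def explain(goal: Goal) -> list[str]:
    """Causal transitions: pair each primitive step with the effect it produces."""
    return [f"{g.name} -> {g.effect}" for g in leaves(goal)]

tea = Goal("make tea", subgoals=[
    Goal("prepare water", subgoals=[
        Goal("fill kettle", "kettle has water"),
        Goal("boil", "water is hot"),
    ]),
    Goal("steep leaves", "tea is brewed"),
])

for line in explain(tea):
    print(line)
```

Because each primitive step carries its expected effect, the same tree can answer both "what do I do next?" and "why does this step matter?".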

The Skeleton of Logic: Modeling Procedure

Finite State Machines (FSMs) are a computational model consisting of a finite number of states and transitions between those states, triggered by specific inputs or conditions. Each state represents a distinct stage in a process, and transitions define the allowable movements from one stage to another. This structure is fundamental to representing sequential procedures because it explicitly defines all possible system behaviors and the conditions governing them. TMK-Structured utilizes the principles of FSMs by representing procedural logic as a network of interconnected steps – analogous to states – and defined conditions – analogous to transitions – that dictate the execution flow. This direct correspondence to FSMs allows for formal verification and analysis of the procedural logic implemented within TMK-Structured, ensuring predictable and controllable task execution.

TMK-Structured employs a formal representation of procedural logic where each step is explicitly defined by its current state and the conditions governing transitions to subsequent steps. This is achieved through the definition of state variables and conditional logic – typically expressed as boolean evaluations – that determine the next step executed. Consequently, the execution path of a task can be fully traced and verified through static analysis, allowing for deterministic prediction of behavior and identification of potential errors or inconsistencies. This explicit representation contrasts with implicit state management found in many conventional systems, enabling rigorous validation and facilitating the development of reliable, predictable procedural sequences.
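The state-and-transition view described above can be sketched as a tiny guarded state machine. The states, variable names, and thresholds are invented for illustration, and the state variables are held fixed during the trace, which is a simplification of any real executor.

```python
# Each transition is (source_state, guard, target_state). Guards are boolean
# functions over the state variables. All names and values are hypothetical.
TRANSITIONS = [
    ("start", lambda v: v["water_ml"] > 0, "heating"),
    ("heating", lambda v: v["temp_c"] >= 100, "steeping"),
    ("steeping", lambda v: v["minutes"] >= 3, "done"),
]

def trace(state: str, variables: dict, max_steps: int = 10) -> list[str]:
    """Follow the first enabled transition from each state and record the path."""
    path = [state]
    for _ in range(max_steps):
        nxt = next(
            (target for source, guard, target in TRANSITIONS
             if source == state and guard(variables)),
            None,
        )
        if nxt is None:       # no guard holds: execution stops here
            break
        state = nxt
        path.append(state)
    return path

print(trace("start", {"water_ml": 250, "temp_c": 100, "minutes": 3}))
# ['start', 'heating', 'steeping', 'done']
print(trace("start", {"water_ml": 250, "temp_c": 80, "minutes": 3}))
# ['start', 'heating']
```

The second call shows why explicit guards help with explanation: the trace stops at `heating`, and the unmet condition (`temp_c >= 100`) is available as the reason.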

Traditional AI approaches often prioritize achieving a goal without detailing the specific sequence of actions used. TMK-Structured diverges from this by explicitly modeling the procedural logic – the how – of task completion. This means the system doesn’t simply indicate that a task is done, but provides a traceable, step-by-step account of exactly how the result was achieved. This granular representation facilitates mechanistic explanations, allowing for analysis of the causal relationships between actions and outcomes, and enabling verification of the system’s reasoning process. The focus shifts from observable results to the underlying process, crucial for debugging, refinement, and establishing trust in the system’s behavior.

Constrained Genesis: Bridging the Symbolic and the Statistical

A constrained generation architecture separates symbolic control, implemented through a Task-Method-Knowledge (TMK) layer, from the generative capabilities of a Large Language Model (LLM) layer. The TMK layer manages the reasoning process and enforces constraints, operating on a symbolic level to ensure logical consistency and adherence to specified rules. This symbolic output then serves as input to the LLM layer, which is responsible for translating the structured information into natural language. This separation allows for precise control over the generated content while leveraging the LLM’s ability to produce fluent and coherent text, effectively bridging the gap between structured reasoning and natural language expression.
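One way to picture this separation is a symbolic plan that both builds the prompt and checks the result. The sketch below is an assumption-heavy illustration: `build_prompt`, `follows_plan`, `explain`, and `stub_llm` are hypothetical names, the stub stands in for a real model call, and the substring check is far cruder than any constraint mechanism a production system would use.

```python
from typing import Callable, Optional

def build_prompt(steps: list[str]) -> str:
    """Symbolic layer: turn an ordered plan into an instruction for the generator."""
    numbered = "\n".join(f"{i}. {s}" for i, s in enumerate(steps, 1))
    return "Explain these steps in order, without adding or removing any:\n" + numbered

def follows_plan(text: str, steps: list[str]) -> bool:
    """True if every planned step appears in the text, in the planned order."""
    lowered, pos = text.lower(), 0
    for step in steps:
        idx = lowered.find(step.lower(), pos)
        if idx == -1:
            return False
        pos = idx + len(step)
    return True

def explain(steps: list[str], llm: Callable[[str], str], retries: int = 2) -> Optional[str]:
    """Generate, then accept the text only if it respects the symbolic plan."""
    prompt = build_prompt(steps)
    for _ in range(retries + 1):
        text = llm(prompt)
        if follows_plan(text, steps):
            return text
    return None

def stub_llm(prompt: str) -> str:
    # Placeholder for a real model call: rewrites the numbered steps as a sentence.
    steps = [line.split(". ", 1)[1] for line in prompt.splitlines()[1:]]
    return "First, " + ", then ".join(steps) + "."

plan = ["fill kettle", "boil water", "steep leaves"]
print(explain(plan, stub_llm))
```

The generator never decides which steps exist or in what order; it only phrases them, and text that drifts from the plan is rejected.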

The integration of knowledge graphs and structured-output Retrieval-Augmented Generation (RAG) significantly improves the robustness of the constrained generation architecture. Knowledge graphs provide a structured representation of facts and relationships, enabling more precise knowledge retrieval than traditional text-based methods. Structured-output RAG then formats this retrieved knowledge into a machine-readable format, aligning it with the symbolic constraints imposed by the TMK layer. This structured data facilitates improved reasoning and reduces the likelihood of generating factually incorrect or irrelevant outputs, offering a reliable foundation for both knowledge retrieval and subsequent text generation by the Large Language Model (LLM).
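As a rough illustration of retrieval from a knowledge graph into a machine-readable context, consider the sketch below. The triples, relation names, and the `retrieve` and `to_context` helpers are assumptions for this example; a real system would use a graph store and a richer schema.

```python
import json

# A toy knowledge graph as (subject, relation, object) triples. Hypothetical data.
TRIPLES = [
    ("boil water", "causes", "water is hot"),
    ("water is hot", "enables", "steep leaves"),
    ("steep leaves", "causes", "tea is brewed"),
]

def retrieve(entity: str) -> list[dict]:
    """Return every fact in which the entity appears as subject or object."""
    return [
        {"subject": s, "relation": r, "object": o}
        for s, r, o in TRIPLES
        if entity in (s, o)
    ]

def to_context(entity: str) -> str:
    """Structured output: package the retrieved facts as JSON for the generator."""
    return json.dumps({"entity": entity, "facts": retrieve(entity)}, indent=2)

print(to_context("water is hot"))
```

Passing typed facts instead of free-text passages means a downstream check can verify that an explanation only asserts relations that were actually retrieved.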

Domain grammars function as a set of formalized rules that govern the structure and content of generated text within a specific field. These grammars define permissible syntactic structures, vocabulary usage, and semantic relationships relevant to the target domain, such as legal contracts, medical reports, or financial summaries. Implementation typically involves defining constraints on the output space of the Large Language Model (LLM), guiding it to produce text that conforms to these pre-defined rules. This ensures outputs are not only grammatically correct but also logically consistent and factually accurate within the specified domain, improving reliability and reducing the incidence of hallucination or irrelevant information in generated content.
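A domain grammar can be approximated, very loosely, by a pattern that each output line must satisfy. The sketch below validates text after generation instead of constraining decoding token by token, which is a deliberate simplification; the pattern, the allowed verbs, and the `conforms` helper are hypothetical.

```python
import re

# Hypothetical grammar: "Step N: <Verb> <object> because <reason>."
# Only a closed set of verbs is allowed, and steps must be numbered 1, 2, 3, ...
STEP = re.compile(r"^Step (\d+): (Fill|Boil|Steep|Pour) [a-z ]+ because [a-z ]+\.$")

def conforms(text: str) -> bool:
    """Check that every non-empty line matches the grammar and numbering is sequential."""
    lines = [ln.strip() for ln in text.strip().splitlines() if ln.strip()]
    numbers = []
    for ln in lines:
        match = STEP.match(ln)
        if not match:
            return False
        numbers.append(int(match.group(1)))
    return bool(lines) and numbers == list(range(1, len(lines) + 1))

good = (
    "Step 1: Boil the water because hot water extracts flavor.\n"
    "Step 2: Steep the leaves because time releases tannins."
)
bad = "Step 1: Microwave the water because it is fast."

print(conforms(good))  # True
print(conforms(bad))   # False
```

Requiring a `because` clause on every step is one simple way a grammar can force an explanation to state its causal justification instead of only listing actions.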

Beyond Correlation: Towards Genuine Expertise

The capacity for expert-level problem-solving arises when reasoning processes are firmly anchored in explicit causal models and structured knowledge. This approach moves beyond simple pattern recognition, enabling systems to understand why things happen, not just that they happen. By representing knowledge as interconnected causal relationships, the system can simulate potential outcomes, identify root causes, and formulate effective solutions with a depth mirroring human expertise. This contrasts with methods relying on statistical correlations alone, which are prone to errors when faced with novel situations or incomplete data. Consequently, reasoning grounded in causal models demonstrates robustness and adaptability, facilitating performance on complex tasks requiring nuanced understanding and predictive capabilities, and ultimately bridging the gap between artificial intelligence and genuine cognitive skill.

The ability to tackle intricate problems often hinges on decomposing them into manageable components, and recent advancements demonstrate a pathway to achieve this through compositional skill representations. Utilizing TMK-Structured, a system designed to foster this decomposition, complex tasks are not approached as monolithic challenges, but rather as sequences of smaller, masterable skills. This modularity allows for incremental learning; as each sub-skill is acquired, the system’s overall proficiency increases, ultimately enabling successful completion of the larger task. This contrasts with traditional approaches where models attempt to solve problems holistically, often struggling with nuanced reasoning or unforeseen circumstances; instead, TMK-Structured facilitates a building-block approach to intelligence, mirroring how humans learn and adapt to complex environments.

Recent evaluations reveal a substantial performance advantage for Ivy+TMK-Structured over established models in complex reasoning tasks. The system achieved ratings of 1.6 for causal understanding, 1.333 for teleological reasoning (the ability to grasp purpose and consequence), and 1.5 for compositional skill, reflecting proficiency in breaking down and mastering intricate problems. Critically, the F1 score for assessing the validity of knowledge mappings reached 0.708, indicating robust and accurate connections between concepts, and overall correctness landed at 0.65. These results sharply contrast with the performance of RAG-GPT, which achieved a correctness score of 0.35, and Standard GPT, which scored only 0.15, underscoring the efficacy of the TMK-Structured approach in facilitating advanced reasoning capabilities.

The pursuit of clarifying procedural skill explanations, as detailed in this work, echoes a fundamental truth about complex systems. This research, with its focus on constrained generation and TMK models to enhance inferential structure, isn’t about building understanding, but rather cultivating its emergence. As John McCarthy observed, “Every architectural choice is a prophecy of future failure.” The very act of designing an architecture for explanation – even one aiming for pedagogical alignment and improved causal reasoning – acknowledges the inherent unpredictability of how knowledge takes root. It isn’t a question of achieving perfect instruction, but of building systems robust enough to survive the inevitable challenges to comprehension, much like order itself is merely a temporary reprieve from the encroaching chaos.

The Horizon of Explanation

This work, in attempting to sculpt explanations from the unruly clay of large language models, reveals a familiar truth: control is an illusion. Constraining generation, anchoring it to symbolic representations of procedural knowledge, offers a temporary reprieve from the stochastic wilderness. Yet, each constraint is a prophecy of future brittleness. The gains in causal reasoning and problem decomposition are not endpoints, but merely demonstrate how easily these systems appear to reason – a convincing performance, perhaps, but one built on a foundation of increasingly complex, and therefore increasingly opaque, architecture. Scalability is just the word used to justify complexity.

The pursuit of ‘pedagogical alignment’ highlights a deeper issue. Education is not simply the transmission of information, but the cultivation of adaptability. Every optimized explanation, every perfectly structured breakdown of a skill, diminishes the learner’s capacity to forge their own understanding. Everything optimized will someday lose flexibility. The architecture, no matter how meticulously crafted, will inevitably become a bottleneck, a rigid framework incapable of accommodating novel situations or unforeseen challenges.

The perfect architecture is a myth we tell ourselves to stay sane. The next step isn’t to build better explanations, but to cultivate systems that can learn alongside the learner, embracing ambiguity and fostering genuine understanding. The challenge lies not in controlling the explanation, but in relinquishing control: allowing the system to evolve, to surprise, and, ultimately, to become something other than a sophisticated instruction manual.

Original article: https://arxiv.org/pdf/2511.20942.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2025-11-27 20:40