Author: Denis Avetisyan

Researchers are applying techniques from natural language processing to reveal and control how physics foundation models represent fundamental concepts.

This work demonstrates interpretable and causally controllable concept vectors within a physics foundation model, enabling targeted manipulation of spatiotemporal data representations.

Recent advances in mechanistic interpretability have revealed surprisingly human-understandable concepts within large language models, yet whether this capacity extends beyond models trained on language remains an open question. In ‘Physics Steering: Causal Control of Cross-Domain Concepts in a Physics Foundation Model’, we demonstrate that a physics-focused foundation model also develops interpretable internal representations corresponding to physical principles like vorticity and diffusion. By identifying and manipulating “concept directions” within the model’s activation space, we achieve causal control over its predictions, effectively steering simulated physical behaviours. This suggests that scientific foundation models learn generalized representations, not merely superficial correlations, which raises the possibility of AI-enabled scientific discovery and control. But how can we best leverage these interpretable models to accelerate scientific progress?

The Limits of Simulation: A Necessary Rebellion

Achieving truly realistic physical simulations demands immense computational resources, largely due to the need to resolve details across a vast range of scales. Consider fluid dynamics; accurately modeling turbulence, for example, requires capturing eddies of varying sizes, from large-scale weather patterns down to microscopic dissipations of energy. The number of calculations needed grows steeply with the desired fidelity – doubling the resolution of a three-dimensional grid multiplies the number of cells by eight, and the cost rises further when the time step must shrink along with the grid spacing. This creates a significant bottleneck, rendering high-fidelity simulations impractical for real-time applications like interactive video games, rapid design iterations in engineering, or immediate forecasting scenarios. Consequently, researchers are actively exploring techniques – such as reduced-order modeling and machine learning – to approximate these complex phenomena, trading some accuracy for a substantial gain in computational efficiency and enabling simulations to run within feasible timeframes.
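The eight-fold figure follows from simple counting on a three-dimensional grid. The sketch below is a back-of-the-envelope illustration, assuming that cost is proportional to the number of grid cells times the number of time steps. The function name `relative_cost` and the `cfl_limited` flag are hypothetical, and the assumption that the time step shrinks in proportion to the grid spacing applies to explicit, advection-dominated solvers rather than to every method.

```python
def relative_cost(refinement: float, spatial_dims: int = 3, cfl_limited: bool = False) -> float:
    """Cost multiplier when the grid is refined by `refinement` along every axis.

    Assumes cost ~ (number of cells) x (number of time steps).
    With cfl_limited=True the time step is assumed to shrink by the same
    factor as the grid spacing, adding one more power of `refinement`.
    """
    if refinement <= 0:
        raise ValueError("refinement must be positive")
    exponent = spatial_dims + (1 if cfl_limited else 0)
    return refinement ** exponent


for r in (2, 4, 8):
    cells_only = relative_cost(r)
    with_time_step = relative_cost(r, cfl_limited=True)
    print(f"refine x{r}: cells x{cells_only}, cells and steps x{with_time_step}")
```

Under these assumptions the growth is polynomial in the refinement factor, which is still enough to make each further doubling of resolution far more expensive than the last.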

Capturing the intricacies of complex systems like fluid flows and reaction-diffusion processes presents a significant challenge for conventional simulation techniques. These systems are characterized by non-linear interactions occurring across multiple scales, from macroscopic turbulence to microscopic molecular interactions. Traditional methods, often reliant on discretizing space and time, struggle to resolve these fine-scale details without incurring prohibitive computational costs. For example, accurately modeling turbulent flows requires capturing a vast range of eddy sizes, while simulating reaction-diffusion systems necessitates tracking the concentration of reactants and products as they evolve in both space and time. The inherent limitations of these approaches often lead to simplified models that sacrifice accuracy for computational feasibility, hindering a complete understanding of the underlying physical and chemical phenomena. Consequently, researchers are actively exploring novel techniques, such as machine learning and multi-scale modeling, to overcome these hurdles and achieve more realistic and efficient simulations.

The ambitious goals of modern scientific inquiry are increasingly constrained by computational limits, particularly in fields reliant on complex simulations. Accurate weather forecasting, for example, demands modeling atmospheric interactions at scales far exceeding current processing capabilities, leading to persistent uncertainties in prediction. Similarly, the design of novel materials – from lightweight alloys to superconductors – necessitates simulating atomic interactions and emergent properties, a task often intractable for all but the simplest compositions. Drug discovery faces comparable hurdles; predicting how a potential therapeutic molecule will interact with biological systems requires simulating complex chemical reactions and protein folding, processes that strain even the most powerful supercomputers. Consequently, progress in these critical areas is not solely limited by theoretical understanding, but also by the ability to effectively translate that understanding into actionable simulations and predictions.

Walrus: A Foundation Model for Decoding Reality

Walrus is a large transformer-based foundation model for surrogate modelling of spatiotemporal data originating from physical systems described by partial differential equations (PDEs). Its transformer architecture enables it to process and learn complex relationships within spatiotemporal fields. Specifically, Walrus is designed to approximate the solutions of PDEs without directly solving them, offering a computationally efficient alternative to traditional numerical methods like finite element analysis or computational fluid dynamics. The model takes spatiotemporal data as input and predicts future states of the physical system, effectively learning a mapping from initial conditions and parameters to the resulting dynamics. This approach allows for rapid prediction and analysis of physical phenomena governed by PDEs across various domains.
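To make the "predict future states from past frames" idea concrete, here is a minimal sketch of an autoregressive rollout. It does not use Walrus itself: `toy_surrogate` is a hypothetical stand-in that merely smooths the latest frame, and `rollout` is an illustrative helper, not part of any published API. A trained surrogate would take the place of `toy_surrogate`.

```python
import numpy as np


def toy_surrogate(history: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for a trained model.

    Maps a (T, H, W) window of past frames to the next (H, W) frame.
    Here it only smooths the latest frame with periodic neighbours,
    which mimics a single diffusion-like step.
    """
    u = history[-1]
    neighbours = (np.roll(u, 1, axis=0) + np.roll(u, -1, axis=0)
                  + np.roll(u, 1, axis=1) + np.roll(u, -1, axis=1))
    return 0.5 * u + 0.125 * neighbours


def rollout(step_fn, history: np.ndarray, n_steps: int) -> np.ndarray:
    """Feed each prediction back in as input to produce n_steps future frames."""
    frames = list(history)
    context = len(history)
    for _ in range(n_steps):
        window = np.stack(frames[-context:])  # most recent `context` frames
        frames.append(step_fn(window))
    return np.stack(frames[context:])


rng = np.random.default_rng(0)
initial = rng.normal(size=(4, 16, 16))  # four past frames on a 16x16 grid
predicted = rollout(toy_surrogate, initial, n_steps=10)
print(predicted.shape)
```

The point of the loop is that no PDE solver is called at prediction time: each new frame costs one forward pass of the surrogate.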

Walrus’s training regimen utilizes a dataset comprising simulations across a range of physical phenomena, including fluid dynamics, heat transfer, and wave propagation. This diversity is achieved through the inclusion of data generated from multiple simulation environments and parameter settings, exposing the model to a broad spectrum of possible states and behaviors. The dataset incorporates variations in boundary conditions, initial conditions, and governing equation parameters to promote generalization capability. Specifically, the training data includes simulations with differing Reynolds numbers, Prandtl numbers, and material properties, enabling Walrus to approximate solutions for previously unseen physical scenarios without requiring retraining or fine-tuning for each new problem.

Walrus offers a significant computational advantage over traditional numerical simulations for solving partial differential equations (PDEs). Conventional methods, such as finite element or finite difference schemes, require substantial computational resources, particularly as the complexity or resolution of the simulation increases. Walrus, as a deep learning-based surrogate model, is trained to approximate the solutions of these PDEs, enabling predictions with significantly reduced computational cost. Once trained, Walrus can generate predictions much faster than running a full numerical simulation, often achieving speed-ups of several orders of magnitude. This efficiency stems from the model’s ability to learn the underlying mapping between input conditions and solution fields directly from data, bypassing the iterative solving process inherent in numerical methods. The resulting computational savings allow for increased simulation throughput, exploration of a wider parameter space, and real-time applications that are impractical with traditional approaches.

Concept Steering: Unearthing the Language of Physics

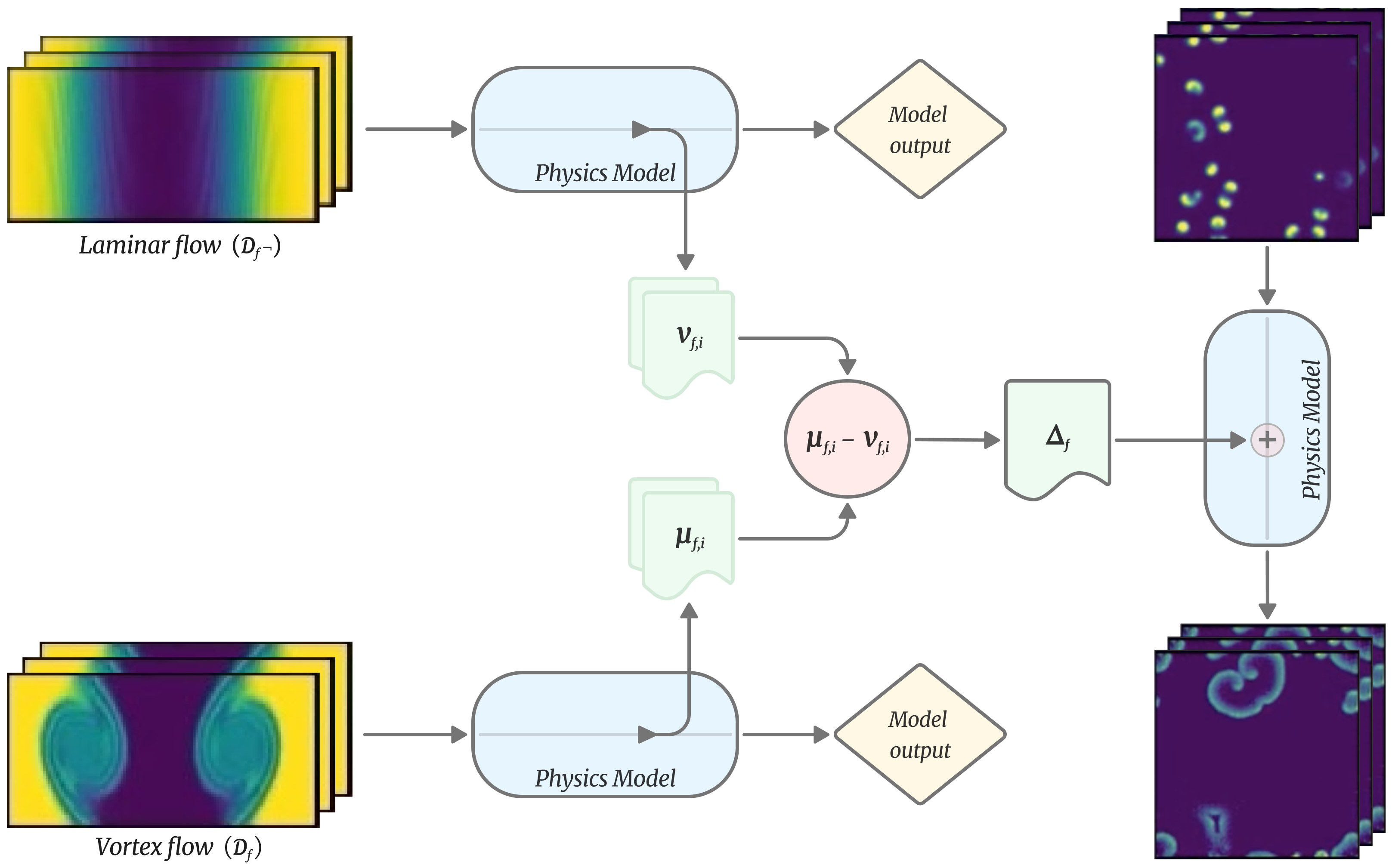

The researchers use ‘concept deltas’ to map interpretable physical properties – including vorticity, diffusion rates, and simulation speed – to specific directions within Walrus’s high-dimensional activation space. Activations are recorded during forward passes over simulations from two contrasting physical regimes, for example flows with and without vortices, and the difference between the two sets of activations gives the delta. This delta serves as the direction in activation space associated with the target concept. By identifying these steerable directions, the method establishes a quantifiable relationship between abstract concepts and the underlying neural network representation, allowing for targeted manipulation of physical phenomena within the simulation. The technique effectively turns qualitative physical characteristics into vectors within the model’s latent space.
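A common recipe for obtaining a concept direction in activation-steering work is the difference between mean activations collected from two contrasting regimes. The sketch below illustrates that recipe on synthetic data only. It is not the paper's code: the activations are random vectors with a planted direction, and the names `concept_delta` and `true_direction` are hypothetical.

```python
import numpy as np


def concept_delta(acts_with: np.ndarray, acts_without: np.ndarray) -> np.ndarray:
    """Difference of mean activations between two regimes.

    Both inputs have shape (n_samples, d); the result has shape (d,).
    """
    return acts_with.mean(axis=0) - acts_without.mean(axis=0)


rng = np.random.default_rng(0)
d = 64

# Synthetic assumption: the concept shifts activations along one fixed direction.
true_direction = np.zeros(d)
true_direction[3] = 1.0

baseline = rng.normal(size=(500, d))                      # regime without the concept
with_concept = rng.normal(size=(500, d)) + 2.0 * true_direction

delta = concept_delta(with_concept, baseline)
unit = delta / np.linalg.norm(delta)
cosine = float(unit @ true_direction)
print(f"cosine similarity with planted direction: {cosine:.3f}")
```

Averaging over many samples is what suppresses the sample-specific noise, so the recovered delta points close to the planted direction.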

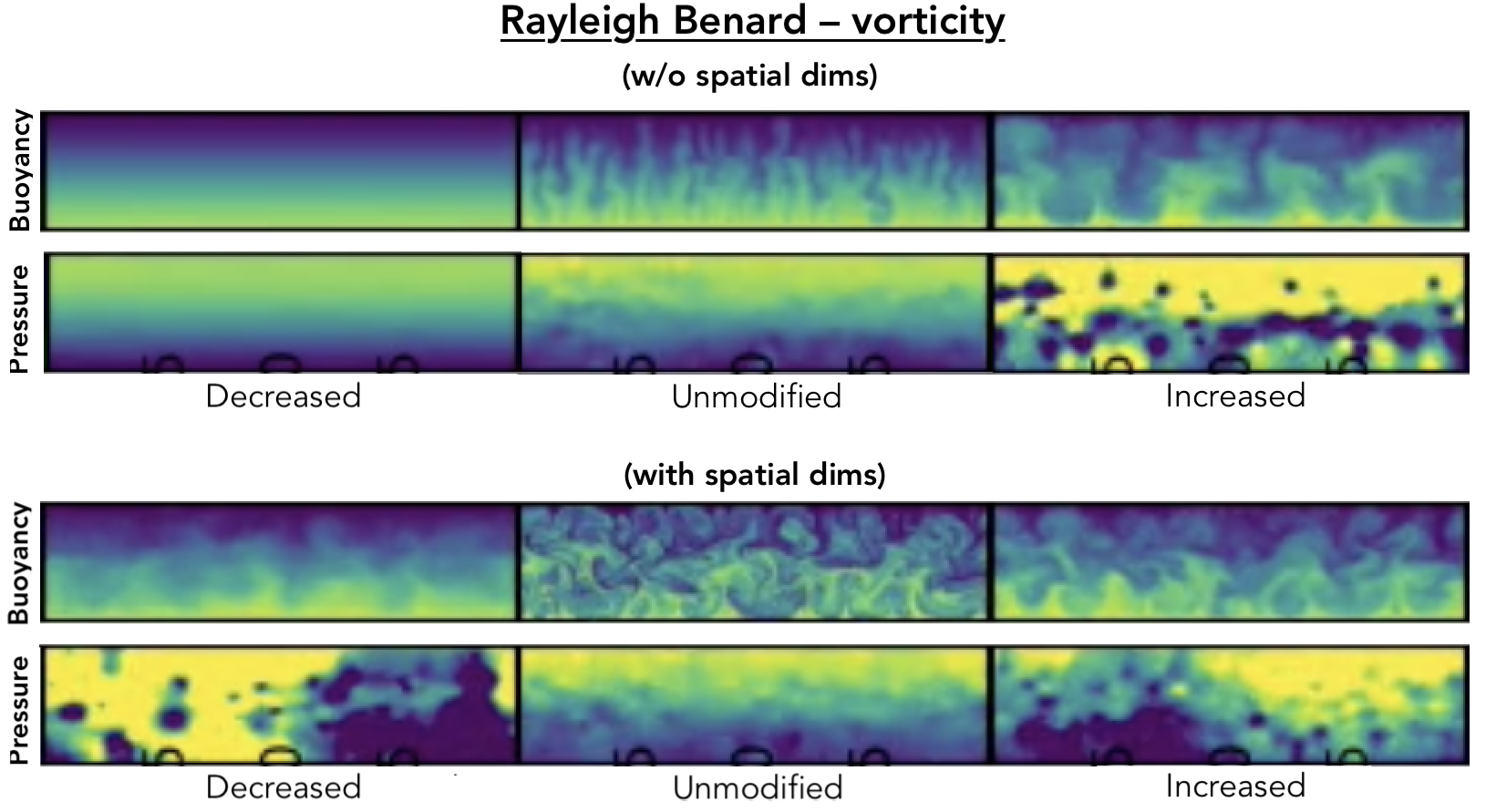

Activation Steering, within the Walrus framework, facilitates the targeted modification of simulated physical systems by directly influencing the network’s internal activations. This process leverages identified ‘steerable directions’ – representing physical phenomena like vorticity or diffusion – to alter simulation outcomes. By applying perturbations along these directions in activation space, the system’s behavior is effectively ‘edited’, allowing for interventions such as increasing or decreasing the intensity of a specific characteristic without retraining the underlying model. The magnitude of the perturbation directly correlates with the degree of behavioral change observed in the simulation, providing a quantifiable control mechanism over the physical process.
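As a rough illustration of what "applying perturbations along these directions" means, the sketch below adds a scaled direction to the hidden activations of a tiny two-layer toy network. Everything here is an assumption made for illustration: the network, the weights `W1` and `W2`, and the `forward` function are hypothetical and unrelated to the Walrus architecture.

```python
import numpy as np

rng = np.random.default_rng(1)
d_in, d_hidden = 8, 32
W1 = rng.normal(size=(d_in, d_hidden)) / np.sqrt(d_in)
W2 = rng.normal(size=(d_hidden, d_in)) / np.sqrt(d_hidden)


def forward(x, direction=None, alpha=0.0):
    """Toy forward pass with an optional steering injection at the hidden layer."""
    hidden = np.tanh(x @ W1)
    if direction is not None:
        hidden = hidden + alpha * direction  # steer: shift activations along the direction
    return hidden @ W2


x = rng.normal(size=(4, d_in))
direction = rng.normal(size=d_hidden)
direction /= np.linalg.norm(direction)  # unit-length concept direction (random here)

base = forward(x)
for alpha in (0.0, 1.0, 2.0):
    shift = forward(x, direction, alpha) - base
    print(alpha, np.linalg.norm(shift, axis=1).round(3))
```

In this toy the layer after the injection is linear, so the output shift is exactly proportional to `alpha`. In a deep network the later layers are nonlinear, so the response to larger perturbations need not stay proportional.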

Single Direction Steering within the Walrus model enables isolated manipulation of specific physical characteristics during simulation. Interventions have been successfully demonstrated across multiple systems, including Rayleigh-Bénard convection, where control over convective roll patterns was achieved; Euler equations, allowing for directed modification of vortex structures; and the Gray-Scott reaction-diffusion system, where localized pattern formation and dissipation could be influenced. This functionality is achieved by identifying a singular direction within the model’s activation space corresponding to the target physical characteristic, and then applying a targeted intervention along that direction without significantly impacting other simulated behaviors.

Beyond the Black Box: Towards Legible Intelligence

Recent investigations into the AI model Walrus demonstrate a compelling alignment with the Linear Representation Hypothesis, an influential idea in interpretability research. This hypothesis posits that distinct features – such as the characteristics of physical objects – aren’t scattered randomly within a model’s internal representation, but rather are encoded along specific directions in its activation space. The ability to ‘steer’ concepts within Walrus – meaning that smoothly moving activations along a specific direction predictably changes the corresponding feature – provides empirical support for this idea. Essentially, manipulating an activation vector along a given direction reliably adjusts the presence or intensity of a particular concept, akin to tuning a dial. This suggests a highly organized internal structure, where concepts aren’t represented by complex, non-linear combinations of neurons, but by relatively simple, directional encodings, offering a pathway toward deciphering and ultimately understanding the model’s reasoning process.
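The "dial" picture can be written down directly: if a concept lives along a unit direction, its strength is the projection of the activation onto that direction, and steering changes that projection while leaving the orthogonal remainder alone. The sketch below shows this with random vectors; `split_along` is a hypothetical helper and the direction is not taken from any real model.

```python
import numpy as np


def split_along(activation: np.ndarray, unit_direction: np.ndarray):
    """Split an activation into its coefficient along a unit direction and the remainder."""
    coeff = float(activation @ unit_direction)
    residual = activation - coeff * unit_direction
    return coeff, residual


rng = np.random.default_rng(2)
unit_direction = rng.normal(size=16)
unit_direction /= np.linalg.norm(unit_direction)
activation = rng.normal(size=16)

before, rest_before = split_along(activation, unit_direction)
steered = activation + 1.5 * unit_direction  # turn the dial by 1.5
after, rest_after = split_along(steered, unit_direction)

print(f"dial moved by {after - before:.3f}")
print("remainder unchanged:", np.allclose(rest_before, rest_after))
```

This is only the geometric statement of the hypothesis. Whether a given model actually behaves this way is the empirical question that steering experiments probe.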

Recent research into the inner workings of AI models like Walrus also bears on ‘polysemanticity’ – the observation that individual neurons often respond to a multitude of different features simultaneously. Rather than being tied to single neurons, the evidence suggests that features are represented by specific directions within the model’s activation space, each of which can span many neurons. This shifts the unit of analysis from the neuron to the direction, and opens up promising avenues for improving interpretability. If features consistently align with identifiable directions, it becomes significantly easier to trace the model’s reasoning, understand its decision-making process, and ultimately build AI systems that are not simply accurate, but also transparent and trustworthy – a critical step for reliable application in scientific domains.

The development of trustworthy artificial intelligence for scientific endeavors hinges on deciphering how these systems internally represent physical concepts. Current AI, often described as a ‘black box’, can achieve remarkable results without revealing the underlying reasoning. However, for applications demanding reliability – such as drug discovery, materials science, or climate modeling – this opacity is unacceptable. A deeper understanding of conceptual representation within AI models, like Walrus, allows for verification of the model’s logic, identification of potential biases, and ultimately, increased confidence in its predictions. This knowledge unlocks the potential for AI to not merely simulate scientific insight, but to genuinely contribute to it, by revealing previously unseen relationships and accelerating the pace of discovery.

Generalization and the Future of Scientific Discovery

The artificial intelligence known as Walrus exhibits a remarkable capacity for generalization, with learned concepts carrying over from one physical domain to another. Concept directions extracted from fluid dynamics data – which describes how liquids and gases flow – were successfully applied to the seemingly disparate field of reaction-diffusion systems, which model how substances spread and interact. This transfer wasn’t limited to these two areas; the same concepts also influenced the model’s predictions for other complex physical systems. This suggests that Walrus doesn’t simply memorize solutions, but rather internalizes fundamental principles governing these systems, hinting at a powerful new approach to artificial intelligence and scientific problem-solving.

The demonstrated capacity of Walrus to extrapolate learned principles represents a significant leap toward automating and accelerating scientific progress. By successfully applying insights gained from the study of fluid dynamics – specifically, concepts derived from shear flow – to the distinctly different realm of reaction-diffusion systems, the model showcases a form of analogical reasoning previously uncommon in artificial intelligence. This transfer of knowledge isn’t simply pattern recognition; it’s the ability to identify underlying principles and apply them to novel contexts, potentially circumventing the need for exhaustive, system-specific training. Consequently, researchers envision applications ranging from the rapid prototyping of new materials with desired properties to the development of more efficient algorithms for tackling complex engineering problems, effectively allowing scientists and engineers to explore a broader solution space with greater speed and precision.

The versatility of Walrus extends beyond its initial design parameters, as concepts originating from the study of shear flow – a fundamental principle in fluid dynamics – have proven remarkably adaptable to disparate physical systems. Researchers successfully applied insights derived from modeling fluid behavior to reaction-diffusion processes, and even further afield, revealing an unexpected interconnectedness between seemingly unrelated phenomena. This transfer of knowledge isn’t merely analogical; the underlying mathematical structures governing shear flow provided predictive power in these new contexts, suggesting a universal principle at play. The success of this cross-disciplinary application highlights Walrus’ potential as a powerful tool for identifying hidden connections and accelerating innovation across a broad spectrum of scientific and engineering challenges, offering a pathway to leverage knowledge gained in one domain to unlock breakthroughs in another.

The pursuit detailed within this research echoes a fundamental tenet of understanding any complex system: disassembly and re-evaluation. The work showcases how activation steering – essentially probing and nudging internal representations – reveals the ‘code’ governing a physics foundation model’s understanding of concepts like vorticity and diffusion. This aligns with Tim Berners-Lee’s vision: “The Web is more a social creation than a technical one.” Just as the Web’s structure became apparent through exploration, so too does the internal logic of these models yield to careful, causal interrogation. The researchers don’t simply observe the model’s knowledge; they actively test its boundaries, confirming – or correcting – its internal representation of physical laws. It’s an acknowledgement that reality, like a well-designed system, is open source: we just haven’t fully read the code yet.

What Lies Beyond?

The application of interpretability techniques, borrowed from the realm of large language models, to physics foundation models reveals a predictable truth: understanding isn’t about observing correlation, but about establishing dominion over causation. This work demonstrates a nascent ability to nudge internal representations – to steer concepts like vorticity – but the truly interesting questions remain unaddressed. If a model ‘understands’ diffusion, can it predict novel scenarios outside its training data, or is it merely a sophisticated mimic? More pointedly, what constitutes a meaningful ‘concept’ within a purely mathematical structure?

The current approach relies on activation steering, a technique akin to prodding a black box with a stick. Future investigations must move beyond simply identifying and manipulating these internal levers. A more rigorous approach would involve developing formal methods to verify causal relationships within the model – to prove, not just demonstrate, that a specific activation corresponds to a specific physical principle.

Ultimately, the challenge isn’t building models that resemble intelligence, but dismantling them to reveal the underlying logic. If one can’t break the model – if its ‘understanding’ remains opaque to analytical probing – then the claim of genuine comprehension remains, at best, premature. The real work begins when the foundations themselves are subjected to stress testing.

Original article: https://arxiv.org/pdf/2511.20798.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2025-11-27 07:15