Author: Denis Avetisyan

Researchers demonstrate that large language models can intelligently suggest the most informative experiments, accelerating the search for novel materials.

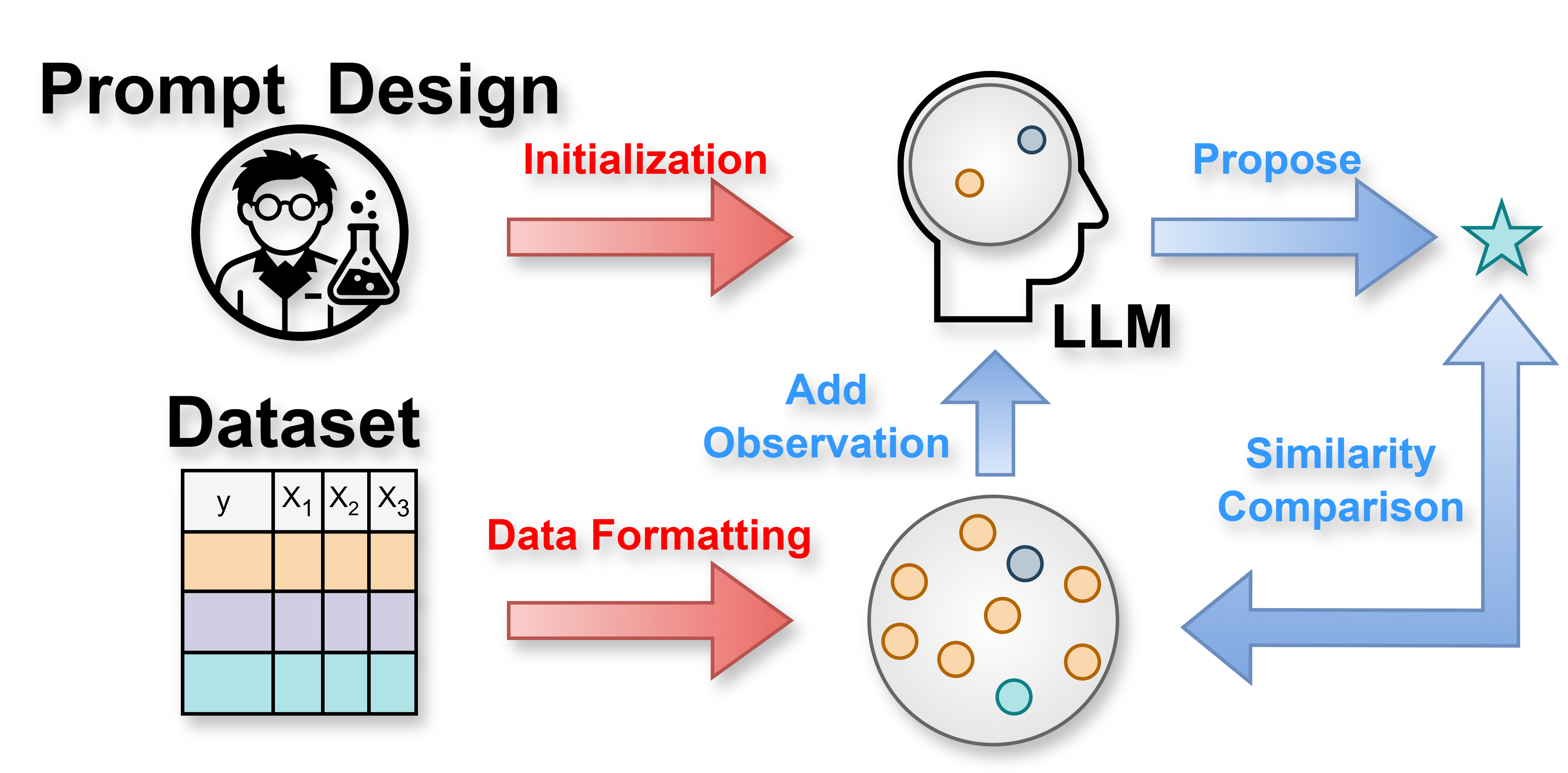

A training-free active learning framework leverages large language models for efficient materials discovery with performance competitive to traditional machine learning approaches.

Accelerating scientific discovery often demands prioritizing informative experiments, yet conventional machine learning approaches to active learning struggle with generalizability and require extensive, domain-specific feature engineering. This limitation motivates the work presented in ‘Training-Free Active Learning Framework in Materials Science with Large Language Models’, which introduces a novel framework leveraging the pretrained knowledge of large language models to directly propose experiments from textual descriptions. The results show that this LLM-based active learning approach consistently outperforms traditional methods across diverse materials science datasets, reducing the experimental burden by over 70% while maintaining robust performance. Could this represent a paradigm shift towards more efficient, interpretable, and ultimately autonomous materials discovery driven by the power of large language models?

The Burden of Materials Discovery

Historically, the development of new materials (those with properties tailored for specific applications) has been a protracted and resource-intensive process. Researchers often rely on a combination of chance observations and painstakingly thorough experimentation, synthesizing and testing countless compounds in the hope of stumbling upon a breakthrough. This approach is not only slow, frequently taking years to move from initial concept to practical implementation, but also exceedingly expensive, demanding significant investments in both personnel and specialized equipment. The inherent limitations of this traditional methodology mean that vast regions of the potential materials space remain unexplored, potentially obscuring innovative compounds with transformative capabilities and hindering progress in fields ranging from energy storage to biomedical engineering.

The search for novel materials is hampered by the sheer scale of possible compositions and structures, a materials space so vast that traditional trial-and-error methods become impractical. A systematic, data-driven approach is therefore crucial, yet fully exploring this space presents immense computational challenges. Recent research indicates that Large Language Model-guided Active Learning (LLM-AL) offers a significant advantage in navigating this complexity. Specifically, the study demonstrates that LLM-AL can identify optimal material candidates while evaluating less than 30% of the data typically required, representing a substantial reduction in computational cost and accelerating the pace of materials discovery. This efficiency stems from the model’s ability to intelligently prioritize promising candidates, effectively ‘learning’ which avenues of exploration are most likely to yield successful results and minimizing the need for exhaustive searching.

Intelligent Experimentation: A Shift in Strategy

Active Learning (AL) presents an iterative approach to materials discovery, differing from traditional methods by strategically prioritizing which experiments are conducted. Instead of randomly sampling or exhaustively searching a material space, AL employs a feedback loop: a surrogate model is trained on initial data, then used to predict the outcome of potential new experiments; the experiment predicted to yield the most valuable information – minimizing uncertainty or maximizing expected improvement – is then performed, and the resulting data is added to the training set. This cycle of prediction, experimentation, and model refinement continues until a desired level of accuracy or a defined target property is achieved, significantly reducing the experimental burden and accelerating the discovery process. The efficiency of AL stems from its focus on informative experiments, as opposed to simply increasing the volume of data.
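The predict, select, measure, and retrain cycle described above can be sketched as a short pool-based loop. This is a minimal illustration under stated assumptions, not the study's code: `run_experiment` is a hypothetical toy function standing in for a real synthesis and measurement, `toy_surrogate` is a deliberately crude stand-in for a proper surrogate model (a kernel-weighted average whose "uncertainty" is simply the distance to the nearest measured candidate), and the acquisition rule is passed in as a function so it can be swapped.

```python
import math
import random
from typing import Callable, List, Tuple

Observation = Tuple[float, float]  # (candidate, measured property)


def run_experiment(x: float) -> float:
    """Hypothetical stand-in for a real synthesis-and-measurement step."""
    return math.sin(6 * x) * (1 - x)


def toy_surrogate(x: float, data: List[Observation], length: float = 0.1) -> Tuple[float, float]:
    """Assumed toy surrogate: kernel-weighted mean of observed values, with
    'uncertainty' taken as the distance to the nearest measured candidate."""
    weights = [math.exp(-(((x - xi) / length) ** 2)) for xi, _ in data]
    total = sum(weights)
    mean = sum(w * yi for w, (_, yi) in zip(weights, data)) / total if total > 1e-12 else 0.0
    uncertainty = min(abs(x - xi) for xi, _ in data)
    return mean, uncertainty


def active_learning(
    pool: List[float],
    acquire: Callable[[float, float], float],
    n_init: int = 2,
    budget: int = 8,
    seed: int = 0,
) -> List[Observation]:
    rng = random.Random(seed)
    remaining = list(pool)
    data: List[Observation] = []
    # 1. Seed the surrogate with a few randomly chosen experiments.
    for x in rng.sample(remaining, n_init):
        remaining.remove(x)
        data.append((x, run_experiment(x)))
    # 2. Predict -> select -> run experiment -> add to training set, until the budget is spent.
    while len(data) < budget and remaining:
        best = max(remaining, key=lambda x: acquire(*toy_surrogate(x, data)))
        remaining.remove(best)
        data.append((best, run_experiment(best)))
    return data


if __name__ == "__main__":
    pool = [i / 50 for i in range(51)]
    # Pure uncertainty sampling: always run the least-explored candidate.
    history = active_learning(pool, acquire=lambda mean, unc: unc)
    print(len(history), max(y for _, y in history))
```

Here the acquisition is plain uncertainty sampling and the loop stops on a fixed experiment budget; a real campaign would more likely stop once a target property or accuracy level is reached.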

Active Learning strategies enhance materials discovery efficiency by balancing exploration and exploitation during experimentation. Exploitation concentrates experiments where the surrogate model predicts the best property values, leveraging existing knowledge to optimize material properties. Exploration, conversely, directs experimentation towards regions of high uncertainty, expanding the model’s knowledge base and potentially uncovering novel materials with superior characteristics. By prioritizing informative experiments, this iterative process reduces the total number of experiments required to reach a predetermined level of accuracy or a desired material outcome, saving both time and resources compared with random or grid-based search methods.

Within the Active Learning framework for materials discovery, surrogate models function as predictive tools estimating material properties based on limited experimental data. Common implementations include Gaussian Process Regression (GPR), which provides probabilistic predictions and uncertainty estimates; Random Forest Regressor, an ensemble learning method leveraging multiple decision trees; XGBoost, a gradient boosting algorithm known for its efficiency and accuracy; and Bayesian Neural Networks (BNNs), which incorporate Bayesian inference to quantify prediction uncertainty. These models are trained on initial datasets and iteratively refined with new experimental results, allowing the Active Learning algorithm to intelligently select the most informative experiments to minimize the total number required to achieve desired performance metrics.
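Of the surrogates listed above, Gaussian Process Regression is the one whose uncertainty estimate falls directly out of the math, which is why it is a common default for Active Learning. The sketch below computes the GP posterior mean and standard deviation for one-dimensional inputs in plain Python so the mechanics are visible; the squared-exponential kernel, its length scale of 0.2, and the small noise term are assumptions chosen for illustration. In practice a library implementation such as scikit-learn's `GaussianProcessRegressor` (whose `predict` accepts `return_std=True`) would be the usual choice.

```python
import math
from typing import List, Tuple


def rbf(a: float, b: float, length: float = 0.2, variance: float = 1.0) -> float:
    """Squared-exponential (RBF) covariance between two 1-D inputs."""
    return variance * math.exp(-0.5 * ((a - b) / length) ** 2)


def cholesky(k: List[List[float]]) -> List[List[float]]:
    """Lower-triangular L with L @ L.T == k (k must be symmetric positive definite)."""
    n = len(k)
    lower = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(lower[i][m] * lower[j][m] for m in range(j))
            if i == j:
                lower[i][j] = math.sqrt(k[i][i] - s)
            else:
                lower[i][j] = (k[i][j] - s) / lower[j][j]
    return lower


def forward_sub(lower: List[List[float]], b: List[float]) -> List[float]:
    """Solve L y = b for lower-triangular L."""
    y: List[float] = []
    for i in range(len(b)):
        y.append((b[i] - sum(lower[i][m] * y[m] for m in range(i))) / lower[i][i])
    return y


def back_sub(lower: List[List[float]], y: List[float]) -> List[float]:
    """Solve L.T x = y, reading the transpose directly from L."""
    n = len(y)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(lower[m][i] * x[m] for m in range(i + 1, n))) / lower[i][i]
    return x


def gp_predict(x_train: List[float], y_train: List[float], x_star: float,
               noise: float = 1e-6) -> Tuple[float, float]:
    """Zero-mean GP posterior mean and standard deviation at one test point."""
    k = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(x_train)]
         for i, a in enumerate(x_train)]
    lower = cholesky(k)
    alpha = back_sub(lower, forward_sub(lower, y_train))  # K^{-1} y
    k_star = [rbf(x_star, a) for a in x_train]
    mean = sum(ks * al for ks, al in zip(k_star, alpha))
    v = forward_sub(lower, k_star)  # L^{-1} k_*
    var = rbf(x_star, x_star) - sum(vi * vi for vi in v)
    return mean, math.sqrt(max(var, 0.0))
```

Near a measured point the predicted standard deviation collapses toward zero, while far from all data it returns to the prior value of 1; that contrast is the uncertainty signal an acquisition function relies on.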

The Upper Confidence Bound (UCB) acquisition function is a key component of conventional Active Learning: it scores each candidate by its predicted value plus a multiple of the prediction’s uncertainty, so candidates that look promising, or that the surrogate knows little about, are prioritized. Evaluated against traditional UCB-driven Active Learning built on Gaussian Process Regression, Random Forest Regressor, XGBoost, and Bayesian Neural Network surrogates, the Large Language Model-based Active Learning (LLM-AL) approach performed comparably and in some cases better. This held across four distinct datasets, indicating the robustness and efficacy of the LLM-AL approach in materials discovery applications.
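UCB is usually written as a(x) = μ(x) + κ·σ(x), where μ and σ are the surrogate's predicted mean and standard deviation and κ sets how strongly uncertainty is rewarded. The small sketch below uses made-up predictions for three hypothetical candidates, with κ values chosen only for illustration, to show how raising κ shifts the choice from exploitation toward exploration.

```python
from typing import Sequence


def ucb(mean: float, std: float, kappa: float = 2.0) -> float:
    """Upper Confidence Bound score for a maximization problem: mu + kappa * sigma."""
    return mean + kappa * std


def select_next(means: Sequence[float], stds: Sequence[float], kappa: float = 2.0) -> int:
    """Index of the candidate with the highest UCB score."""
    scores = [ucb(m, s, kappa) for m, s in zip(means, stds)]
    return max(range(len(scores)), key=scores.__getitem__)


# Hypothetical surrogate predictions for three untested candidates.
means = [0.80, 0.60, 0.30]
stds = [0.05, 0.20, 0.50]
print(select_next(means, stds, kappa=0.0))  # 0: pure exploitation picks the best mean
print(select_next(means, stds, kappa=2.0))  # 2: exploration favors the most uncertain one
```

With κ = 0 the rule is pure exploitation; larger κ lets a poorly characterized candidate outrank one that merely looks slightly better on current evidence.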

Leveraging Knowledge: Large Language Models in the Loop

Large Language Models (LLMs) are increasingly utilized in materials science to expedite the discovery process by leveraging the extensive data contained within existing scientific literature. These models are trained on vast corpora of published research, enabling them to identify relationships between material composition, structure, processing, and properties. By analyzing these learned associations, LLMs can predict the properties of novel materials or suggest promising compositions for specific applications, effectively reducing the need for costly and time-consuming physical experiments and simulations. This predictive capability extends to various material properties, including, but not limited to, band gap, conductivity, mechanical strength, and thermal stability, thereby accelerating the iterative cycle of materials design and optimization.

Effective utilization of Large Language Models (LLMs) for materials discovery necessitates careful prompt engineering, specifically employing both Report-Format Prompts and Parameter-Format Prompts. Report-format prompts request information in a narrative structure, allowing the LLM to synthesize and present findings in a readily interpretable manner. Conversely, Parameter-format prompts focus on direct extraction of specific numerical or categorical data points, such as material properties or synthesis conditions. Combining these approaches (report prompts for contextual understanding, parameter prompts for precise data retrieval) maximizes the LLM’s ability to deliver relevant and actionable insights from scientific literature. The selection of prompt type directly impacts the quality and usability of the extracted information, influencing downstream analysis and materials design workflows.
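To make the distinction concrete, here is one way the two styles might look when a single experimental record is turned into prompt text. The record, its field names, and both templates are hypothetical illustrations, not the prompts used in the study.

```python
from typing import Dict

# Hypothetical experiment record; the field names and values are illustrative only.
record: Dict[str, object] = {
    "temperature_C": 450,
    "time_h": 2.0,
    "precursor": "zinc acetate",
    "band_gap_eV": 3.21,
}


def parameter_format(rec: Dict[str, object]) -> str:
    """Concise key=value serialization (parameter-format style)."""
    return ", ".join(f"{k}={v}" for k, v in rec.items())


def report_format(rec: Dict[str, object]) -> str:
    """Narrative serialization (report-format style) built from the same fields."""
    return (
        f"The sample was prepared from {rec['precursor']} and annealed at "
        f"{rec['temperature_C']} °C for {rec['time_h']} h; the measured band gap "
        f"was {rec['band_gap_eV']} eV."
    )


print(parameter_format(record))
print(report_format(record))
```

The parameter string is compact and easy to parse; the report string carries the same numbers but adds procedural context that a language model can connect to what it learned from the literature.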

The ‘Cold Start Problem’ in Large Language Models (LLMs) refers to the diminished performance observed when applying these models to materials or research areas with scarce existing data. LLMs rely on patterns learned from extensive training datasets; consequently, their predictive accuracy and ability to generate meaningful insights are significantly reduced when faced with limited initial information. This is because the model lacks sufficient examples to establish reliable correlations between material characteristics and desired properties, hindering its capacity to extrapolate effectively and make accurate predictions for novel compositions or experimental conditions. Performance improves as more data becomes available, but the initial lack of information presents a substantial challenge for LLM-driven materials discovery in data-scarce domains.
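One way to see the cold start problem in an LLM-driven loop: if the model is queried in an iterative few-shot fashion (an assumption about how the loop is wired), the only task-specific evidence it receives is the history of completed experiments embedded in the prompt, and at the first iteration that history is empty. The hypothetical prompt builder below makes this explicit; its wording and function name are illustrative only.

```python
from typing import List, Tuple


def build_prompt(history: List[Tuple[str, float]], candidates: List[str],
                 target: str = "the target property") -> str:
    """Hypothetical few-shot prompt: task, measured history (if any), untested candidates."""
    lines = [f"Goal: propose the candidate most likely to maximize {target}."]
    if history:
        lines.append("Experiments completed so far:")
        lines += [f"- {desc} -> {value}" for desc, value in history]
    else:
        # Cold start: no measured examples yet, so the model can rely only on pretrained knowledge.
        lines.append("No experiments have been run yet.")
    lines.append("Untested candidates:")
    lines += [f"- {c}" for c in candidates]
    lines.append("Answer with exactly one candidate from the list.")
    return "\n".join(lines)


print(build_prompt([], ["ZnO", "TiO2"]))
```

As results accumulate, each call carries more in-context examples, which is consistent with the observation that performance improves as data becomes available.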

Large Language Models (LLMs) exhibit non-deterministic behavior: identical prompts can yield different outputs across multiple executions because of the probabilistic sampling used to generate those outputs. This presents a significant challenge to reproducibility in materials discovery workflows, as results are not guaranteed to be consistent. The study indicates that Report-format prompts (those framing requests as comprehensive reports rather than isolated parameters) mitigate this issue, consistently generating responses with higher semantic consistency relative to established experimental contexts and thereby improving the reliability of LLM-driven predictions and analyses. Accounting for this non-determinism and choosing prompt-engineering strategies carefully are therefore crucial for leveraging LLMs effectively in scientific research.
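A simple way to quantify run-to-run variability is to send the same prompt several times and measure how often the model picks the same candidate. The helper below computes that exact-match agreement; it is a cruder proxy than the semantic-consistency measure discussed above, and the example picks are hypothetical.

```python
from collections import Counter
from typing import Sequence


def selection_agreement(picks: Sequence[str]) -> float:
    """Fraction of repeated runs that chose the most common candidate (1.0 = fully reproducible)."""
    if not picks:
        raise ValueError("need at least one run")
    _, count = Counter(picks).most_common(1)[0]
    return count / len(picks)


# Hypothetical candidates returned by five repeated calls with an identical prompt.
print(selection_agreement(["C3", "C3", "C7", "C3", "C3"]))  # 0.8
```

Tracking this number per iteration, for each prompt format, gives a quick check on whether a format change actually makes the loop more reproducible.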

Towards Autonomous Innovation: The Self-Driving Laboratory

The emergence of Self-Driving Laboratories represents a paradigm shift in scientific experimentation, integrating the precision of automated hardware with the analytical power of machine learning. These systems aren’t simply about automation; they establish a closed-loop process where experiments are designed, executed, and analyzed without direct human intervention. Utilizing algorithms like Active Learning and, increasingly, Large Language Models, the laboratory intelligently selects the most informative experiments to perform, iteratively refining its understanding of a material or process. This synergistic combination allows for a dramatic acceleration of the scientific method, enabling researchers to explore vast experimental spaces and optimize complex systems far more efficiently than traditional approaches, ultimately promising to unlock materials innovations at an unprecedented rate.

A self-driving laboratory operates as a fully automated, closed-loop system capable of independently iterating on experiments to optimize material properties. This innovative approach bypasses the traditional, often slow, cycle of human-designed experiments, data analysis, and subsequent experimental adjustments. By integrating robotic hardware with intelligent algorithms, the system autonomously proposes, executes, and analyzes experiments, then uses the resulting data to refine its experimental strategy. This continuous cycle of learning and refinement dramatically accelerates the discovery process, allowing for the exploration of vast materials spaces and the identification of optimal compositions with minimal human intervention. The potential impact lies in significantly reducing the time and resources required for materials innovation, paving the way for breakthroughs in fields ranging from energy storage to advanced manufacturing.

Materials design often involves navigating complex relationships between a material’s composition, structure, and properties, a scenario aptly described as a “black-box” function whose underlying mechanisms are poorly understood. Bayesian Optimization provides a powerful and efficient strategy for exploring such functions, building upon the principles of Active Learning by intelligently suggesting the most informative experiments to perform next. Instead of random or grid-based searches, Bayesian Optimization employs a probabilistic model, typically a Gaussian Process, to represent the unknown function and quantify the uncertainty associated with its predictions. This allows the algorithm to balance exploration (seeking out new regions of the design space) with exploitation (refining predictions in areas where promising materials are likely to be found). The result is a streamlined optimization process that requires significantly fewer experiments to identify materials with desired characteristics, accelerating discovery and reducing resource consumption in materials science.
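Expected improvement (EI), mentioned earlier as one criterion for choosing the next experiment, is a standard Bayesian Optimization acquisition function. Given a Gaussian posterior with mean μ and standard deviation σ at a candidate and the best value f* observed so far, EI for maximization is (μ − f* − ξ)Φ(z) + σφ(z) with z = (μ − f* − ξ)/σ, where Φ and φ are the standard normal CDF and PDF and ξ is a small exploration margin. The sketch below implements that closed form with the standard library; the default ξ = 0.01 is an assumption, not a value from the study.

```python
import math


def norm_pdf(z: float) -> float:
    """Standard normal probability density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def norm_cdf(z: float) -> float:
    """Standard normal cumulative distribution via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def expected_improvement(mean: float, std: float, best_so_far: float, xi: float = 0.01) -> float:
    """EI for maximization: E[max(f(x) - best - xi, 0)] under a Gaussian posterior."""
    improvement = mean - best_so_far - xi
    if std <= 0.0:
        # No uncertainty: the improvement is deterministic.
        return max(improvement, 0.0)
    z = improvement / std
    return improvement * norm_cdf(z) + std * norm_pdf(z)


# A candidate predicted below the current best still has positive EI if it is uncertain enough.
print(expected_improvement(0.5, 0.2, 0.6))
print(expected_improvement(0.5, 0.4, 0.6))
```

Because EI grows with σ at a fixed mean, it rewards uncertain candidates much as UCB does, but it measures the expected gain over the current best rather than an optimistic bound.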

The advent of self-driving laboratories heralds a potential revolution in materials science, offering a pathway to overcome longstanding challenges in discovery and optimization. Recent investigations demonstrate that integrating Large Language Models with Active Learning (LLM-AL) significantly enhances experimental efficiency. This closed-loop system, which autonomously designs and executes experiments, matches and often surpasses the performance of traditional methods while drastically reducing resource consumption. Specifically, the approach has been shown to reduce the number of required experiments by over 70%, accelerating the development of novel materials in fields ranging from energy storage to advanced manufacturing.

The pursuit of efficient materials discovery, as detailed in the study, mirrors a fundamental drive towards simplification. The framework’s ability to leverage Large Language Models for active learning, reducing the need for extensive training data, exemplifies this principle. It suggests that impactful progress isn’t always achieved through increasing complexity, but rather through distilling information to its most essential form. As Blaise Pascal observed, “The eloquence of the body is in the eyes, and the eloquence of the soul is in silence.” This resonates with the study’s focus on minimizing unnecessary data and maximizing the signal from each experiment, achieving a powerful result with elegant efficiency.

Where to Now?

The enthusiasm for applying large language models to materials science is, predictably, high. This work offers a useful, if understated, demonstration that these models can navigate the active learning landscape without requiring extensive bespoke training. They called it a framework, a polite term to hide the panic of realizing how much previous effort was spent chasing diminishing returns from hand-engineered features. The real question, of course, isn’t whether LLMs can perform active learning, but whether this approach genuinely simplifies the underlying scientific challenge.

A critical limitation remains the reliance on embedding spaces. These spaces, while powerful, are ultimately proxies for materials properties, and the fidelity of that representation will be the ceiling on any predictive capability. Future work must address how to incorporate explicit physical constraints and domain knowledge into the LLM’s reasoning process, rather than simply hoping emergence will deliver acceptable results. The elegant solution will likely involve distilling first-principles calculations into a form digestible by these models, not simply feeding them more data.

Ultimately, the true test will be whether this approach leads to materials discoveries that would have remained elusive through conventional means. A proliferation of ‘LLM-designed’ materials, indistinguishable from those created by more established methods, would suggest a beautifully complex solution to a surprisingly simple problem. Simplicity, after all, is the hallmark of maturity.

Original article: https://arxiv.org/pdf/2511.19730.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2025-11-26 07:23