Author: Denis Avetisyan

A new survey reveals how social scientists are approaching generative AI, finding limited current use but widespread ethical and practical concerns.

This review analyzes the adoption of generative AI tools within sociological research, highlighting prevalent skepticism and anxieties regarding their societal impact.

Despite widespread discussion of generative AI’s potential, empirical evidence regarding its actual adoption and perceived impact within specific disciplines remains limited. This paper, ‘Generative AI in Sociological Research: State of the Discipline’, addresses this gap by presenting findings from a survey of 433 sociologists regarding their use of these tools. Results reveal modest but growing adoption, primarily for writing assistance, coupled with significant concerns about the social and environmental consequences of GenAI and a generally skeptical outlook on its long-term benefits. As generative AI tools rapidly evolve, will sociological research embrace these technologies, or will caution and concern ultimately prevail?

The Looming Dataset: Sociological Inquiry in an Age of Abundance

Sociological inquiry is undergoing a transformative shift, increasingly leveraging computational methods to dissect the intricacies of social life. Researchers are now routinely employing techniques like network analysis, machine learning, and natural language processing to examine phenomena previously inaccessible to traditional qualitative or quantitative approaches. This move beyond surveys and interviews allows for the analysis of massive datasets – social media interactions, digital communication records, and administrative data – revealing patterns and relationships at scales previously unimaginable. By applying computational power, sociologists can model complex social processes, identify emergent trends, and gain deeper insights into human behavior, ultimately refining understandings of societal structures and dynamics.

The accelerating production of data through digital interactions presents a significant challenge to established sociological research practices. Historically, qualitative interviews and small-scale surveys provided rich, yet limited, insights into social trends. These methods now struggle to capture the breadth and speed of change occurring within complex social systems. The sheer volume of data generated by social media, online platforms, and administrative records overwhelms traditional analytical techniques, while the velocity – the rate at which data is created – demands real-time or near-real-time analysis impossible with manual coding or limited statistical processing. Consequently, sociologists are increasingly turning to computational methods – including machine learning, network analysis, and text mining – not to replace established practices, but to augment them and unlock patterns hidden within the deluge of modern information, allowing for a more dynamic and nuanced understanding of social life.

The Algorithm as Apprentice: Generative AI Enters the Social Sciences

Generative AI models such as GPT-3 and ChatGPT can both generate and analyze text, a capability rooted in their deep learning architecture based on transformer networks. Trained on massive datasets of text and code, they predict and generate fluent, human-like text, translate between languages, summarize documents, and answer questions. On the analytical side, they can be applied to tasks such as topic identification, sentiment analysis, and the detection of patterns within large textual corpora. The scale of these models, often billions of parameters, contributes to their performance, allowing them to capture complex relationships in language and produce coherent, contextually relevant outputs. They can also be fine-tuned for specific tasks, which can improve their accuracy and utility in specialized applications.

Sociological research is increasingly utilizing generative AI models for diverse analytical tasks. Current applications include automated literature reviews, where models synthesize findings from large corpora of academic papers, and assistance with qualitative data analysis, specifically in coding and thematic identification within interview transcripts or textual datasets. Researchers are also exploring the use of these models to generate hypothetical cases for testing theoretical propositions and to augment traditional content analysis methods. While still in early stages, pilot projects demonstrate potential for accelerating research workflows and identifying patterns within complex qualitative data that might otherwise be missed, though validation of model outputs remains crucial.
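To make the point about validating model outputs concrete, here is a minimal sketch of one common check: comparing codes assigned by a model against codes assigned by a human coder using Cohen's kappa. The source does not say which validation method researchers use, so the choice of statistic, the function name `cohens_kappa`, and the toy labels are all illustrative assumptions.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two coders who labelled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both coders must label the same non-empty set of items")
    n = len(labels_a)

    # Observed agreement: share of items given the same code by both coders.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Expected chance agreement, from each coder's marginal code frequencies.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    expected = sum((freq_a[c] / n) * (freq_b[c] / n) for c in freq_a)

    if expected == 1.0:
        raise ValueError("Kappa is undefined when both coders use a single identical code")
    return (observed - expected) / (1.0 - expected)


# Hypothetical codes for eight interview segments (not data from the survey).
human_codes = ["A", "A", "B", "B", "A", "B", "A", "B"]
model_codes = ["A", "A", "B", "A", "A", "B", "B", "B"]

kappa = cohens_kappa(human_codes, model_codes)  # 0.5 for this toy data
```

A kappa near 1 indicates agreement well beyond chance, while a value near 0 indicates agreement no better than chance. What counts as acceptable agreement is a judgment call that depends on the coding scheme.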

The integration of generative AI tools into social science research workflows offers the potential for significant acceleration of traditionally time-consuming processes, such as literature reviews and initial qualitative data coding. However, researchers must address substantial methodological implications stemming from the non-deterministic nature of these models and the potential for introducing bias. Specifically, concerns regarding reproducibility, validity, and the potential for algorithmic amplification of existing societal biases necessitate transparent reporting of prompts, model versions, and validation strategies. Furthermore, establishing clear protocols for verifying AI-generated outputs against source materials and assessing the impact of AI assistance on researcher interpretation is crucial for maintaining the rigor and trustworthiness of social science findings.
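As a rough illustration of what transparent reporting of prompts, model versions, and validation strategies could look like in practice, the sketch below stores those details in a small record that can be archived alongside a project. The class name `GenAIUsageRecord`, its fields, and the example values are hypothetical; they do not come from the paper or from any reporting standard.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass(frozen=True)
class GenAIUsageRecord:
    """One documented use of a generative AI tool in a research workflow."""
    task: str                 # what the tool was used for
    model_name: str           # which model was queried
    model_version: str        # version or snapshot identifier
    prompt: str               # exact prompt text
    settings: dict = field(default_factory=dict)  # e.g. sampling parameters
    validation: str = ""      # how outputs were checked against sources

    def to_json(self) -> str:
        # Sorted keys give a stable serialization for archiving and hashing.
        return json.dumps(asdict(self), sort_keys=True, ensure_ascii=False)

    def fingerprint(self) -> str:
        # A short, citable identifier for this exact configuration.
        return hashlib.sha256(self.to_json().encode("utf-8")).hexdigest()


# Hypothetical example values, for illustration only.
record = GenAIUsageRecord(
    task="initial coding of interview transcripts",
    model_name="example-model",
    model_version="2025-01-01",
    prompt="Assign exactly one code from the codebook to each segment.",
    settings={"temperature": 0.0},
    validation="A human coder independently re-coded a sample of segments.",
)
```

Because these models are non-deterministic, a record like this does not guarantee identical outputs on a rerun. It only documents what was asked, of which model, and how the result was checked.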

Mapping the Terrain: Sociologists’ Engagement with Generative AI

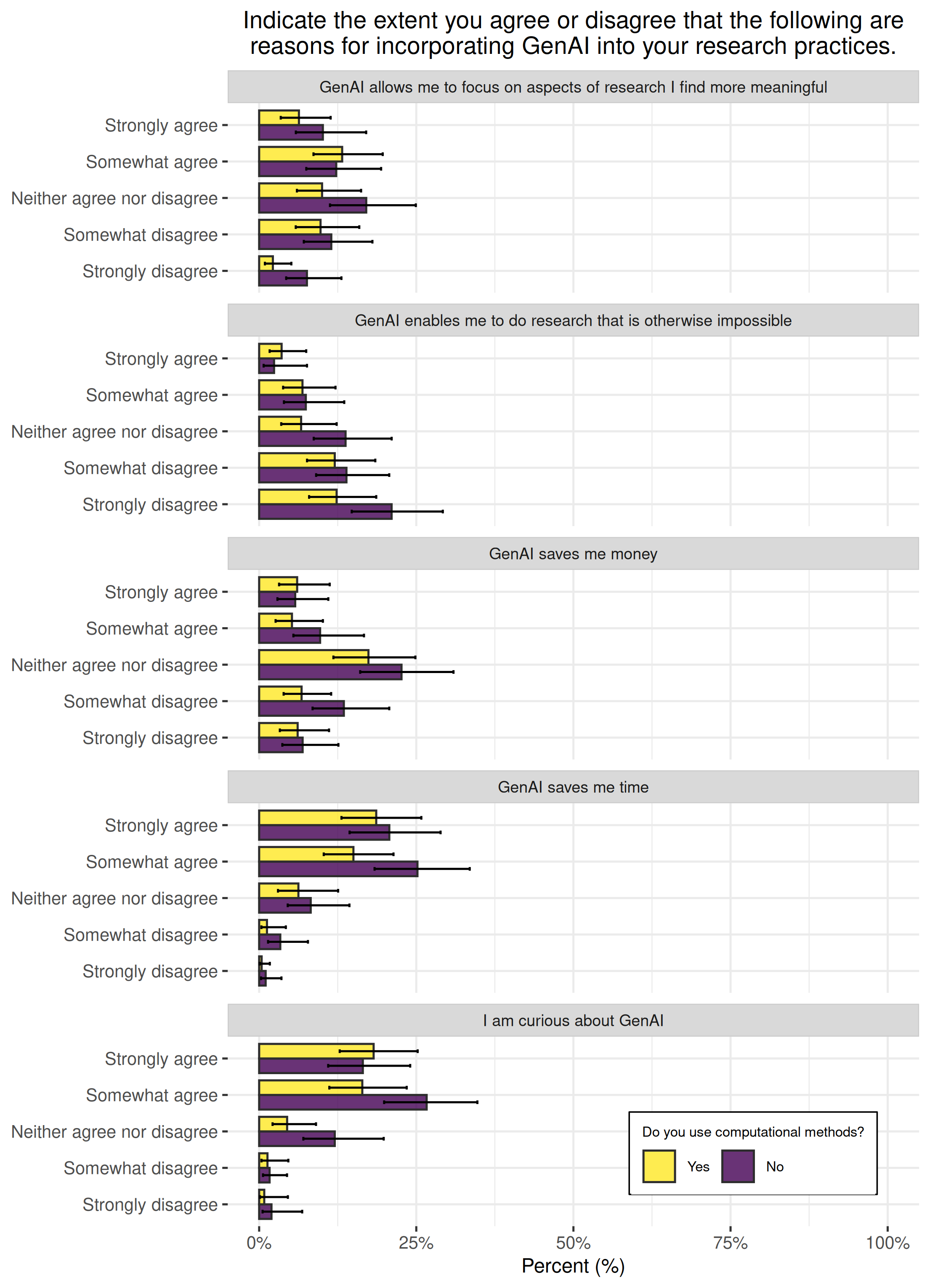

A recent survey examined the integration of generative artificial intelligence (GenAI) tools within the field of sociology. Results indicate increasing engagement with these technologies, though adoption remains measured. The study quantified current usage patterns to establish a baseline understanding of GenAI’s role in sociological research and writing. While a significant proportion of sociologists report no current use, a notable and growing percentage are experimenting with or actively utilizing GenAI, suggesting a trend toward increased implementation as the technology matures and its capabilities become more widely understood within the discipline.

Rake weighting was implemented to mitigate sampling bias in the survey data. This statistical technique iteratively adjusts the weights of individual responses until the weighted sample matches known distributions within the broader sociological field, specifically considering factors like academic rank, institutional affiliation, and research specialization. By assigning higher weights to underrepresented groups and lower weights to overrepresented groups, rake weighting brings the sample closer to the characteristics of the overall population of sociologists, improving the generalizability of the survey findings. The adjustment reduces, but cannot eliminate, the impact of non-response bias and other systematic errors that could skew the results.
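The mechanics of raking can be sketched in a few lines. The example below is a minimal illustration of the general technique (iterative proportional fitting on marginal distributions), not the authors' actual procedure. The variable names, the toy sample, and the target shares are invented for illustration and are not figures from the survey.

```python
def rake(rows, targets, max_iter=100, tol=1e-9):
    """Return one weight per row so weighted margins match the target shares.

    rows:    list of dicts holding categorical variables for each respondent
    targets: {variable: {category: population share}}, shares summing to 1
    """
    weights = [1.0] * len(rows)
    for _ in range(max_iter):
        max_change = 0.0
        for var, shares in targets.items():
            total = sum(weights)
            # Current weighted total for each category of this variable.
            current = {}
            for row, w in zip(rows, weights):
                current[row[var]] = current.get(row[var], 0.0) + w
            # Scale each respondent so this variable's margin hits its target.
            for i, row in enumerate(rows):
                factor = shares[row[var]] * total / current[row[var]]
                max_change = max(max_change, abs(factor - 1.0))
                weights[i] *= factor
        if max_change < tol:  # every margin already matches; stop early
            break
    mean_weight = sum(weights) / len(weights)
    return [w / mean_weight for w in weights]  # normalize to mean 1


def weighted_share(rows, weights, var, category):
    """Weighted proportion of respondents falling in one category."""
    hit = sum(w for row, w in zip(rows, weights) if row[var] == category)
    return hit / sum(weights)


# Toy sample of ten respondents (hypothetical, not survey data).
rows = (
    [{"rank": "faculty", "institution": "public"}] * 4
    + [{"rank": "faculty", "institution": "private"}] * 2
    + [{"rank": "student", "institution": "public"}] * 2
    + [{"rank": "student", "institution": "private"}] * 2
)
# Hypothetical population margins the weighted sample should reproduce.
targets = {
    "rank": {"faculty": 0.6, "student": 0.4},
    "institution": {"public": 0.7, "private": 0.3},
}
weights = rake(rows, targets)
```

After raking, `weighted_share(rows, weights, "institution", "public")` is approximately 0.7, even though public institutions make up only 60% of the unweighted toy sample. Raking matches the margins of each variable separately and does not require knowing the joint population distribution across variables.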

The survey indicates that writing assistance is the most prevalent application of generative AI among sociologists and their collaborators. Specifically, 34% of respondents reported using GenAI tools for tasks including grammar and spelling checks, as well as paraphrasing of existing text. This suggests a current focus on using AI to refine the technical aspects of scholarly writing, rather than employing it for more complex functions like data analysis or theory generation. The reported usage indicates a cautious, pragmatic approach to integrating these technologies into established research workflows.

The Echo Chamber and the Unseen Hand: Broader Implications and Future Directions

The increasing integration of generative AI into sociological research isn’t merely a methodological shift; it compels a rigorous assessment of the technologies’ broader societal ramifications. These tools, capable of producing text, images, and even simulating interactions, introduce new layers of complexity to the study of human behavior and social structures. Researchers are now tasked with considering how the very act of using AI to understand society might, in turn, shape it, potentially reinforcing existing power dynamics or creating unforeseen social consequences. Questions arise regarding the authenticity of data generated by AI, the potential for algorithmic bias to skew interpretations, and the ethical implications of representing social phenomena through artificial means. Ultimately, the sociological inquiry isn’t simply about what AI can reveal, but also about the reciprocal relationship between these technologies and the social world they analyze – a dynamic that demands careful, critical attention.

The escalating computational demands of generative AI models present significant environmental concerns, primarily due to the energy-intensive processes of training and operation. Beyond energy consumption, a critical examination reveals the potential for these systems to inadvertently amplify existing societal biases. Generative AI learns from vast datasets, and if these datasets reflect historical or systemic inequalities – regarding gender, race, or socioeconomic status – the resulting models may perpetuate and even exacerbate these biases in their outputs. This poses a serious risk across various applications, from hiring algorithms to content creation, potentially leading to unfair or discriminatory outcomes. Consequently, rigorous evaluation for bias and the development of mitigation strategies are crucial to ensure responsible and equitable deployment of this powerful technology.

Advancing the responsible integration of artificial intelligence into sociological study necessitates a focused effort on establishing clear best practices and proactively addressing potential harms. Future investigations should prioritize the creation of rigorously vetted datasets – essential for applications like simulating human behavior – ensuring these datasets are representative and free from inherent biases that could skew results or perpetuate inequalities. This includes developing methodologies for continuous monitoring and evaluation of AI systems, alongside transparent documentation of data sources and algorithmic processes. Such an approach will not only enhance the validity and reliability of sociological research utilizing AI, but also foster public trust and enable the beneficial application of these powerful technologies while safeguarding against unintended negative consequences.

The study reveals a cautious embrace of generative AI within sociological research, mirroring a broader skepticism regarding its unexamined consequences. This hesitancy isn’t mere technophobia; it’s a recognition that systems, even those promising efficiency, inevitably accrue unforeseen costs. As Tim Berners-Lee observed, “The web as I envisaged it, we have not seen it yet. The future is still so much bigger than the past.” This sentiment resonates deeply with the findings; sociologists, trained to examine systemic effects, understand that the ‘web’ of AI – its algorithms, data dependencies, and societal impacts – is only beginning to unfold, and promises of seamless integration often obscure the sacrifices demanded of ethical research practices and environmental sustainability. The current limited adoption, therefore, isn’t a rejection, but a prudent assessment of a system still under construction.

What’s Next?

The limited adoption of generative AI within sociological research, as this study demonstrates, isn’t a resistance to innovation – it’s a rational response to inherent systemic risk. The discipline observes, with practiced skepticism, a technology promising effortless insight, yet built upon foundations of obscured provenance and escalating resource demands. Architecture is how one postpones chaos, and the current moment reveals a widespread understanding that these tools merely relocate it – transferring epistemic burdens and ecological costs elsewhere.

Future inquiry shouldn’t focus on whether these technologies will be integrated, but on how their inherent contradictions will be navigated. The concern regarding social and environmental consequences isn’t a roadblock, but a crucial data point. There are no best practices – only survivors. The field will likely witness a divergence: a pursuit of ‘AI-assisted’ methods focused on augmentation rather than automation, and a parallel development of critical frameworks to assess the true cost of ‘progress.’

Ultimately, the question isn’t whether generative AI will change sociology, but whether sociology will change generative AI. Order is just cache between two outages. The discipline’s strength lies not in predicting the future, but in meticulously documenting the unfolding present – a present increasingly defined by the ghosts in the machine and the externalities they generate.

Original article: https://arxiv.org/pdf/2511.16884.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2025-11-24 19:43