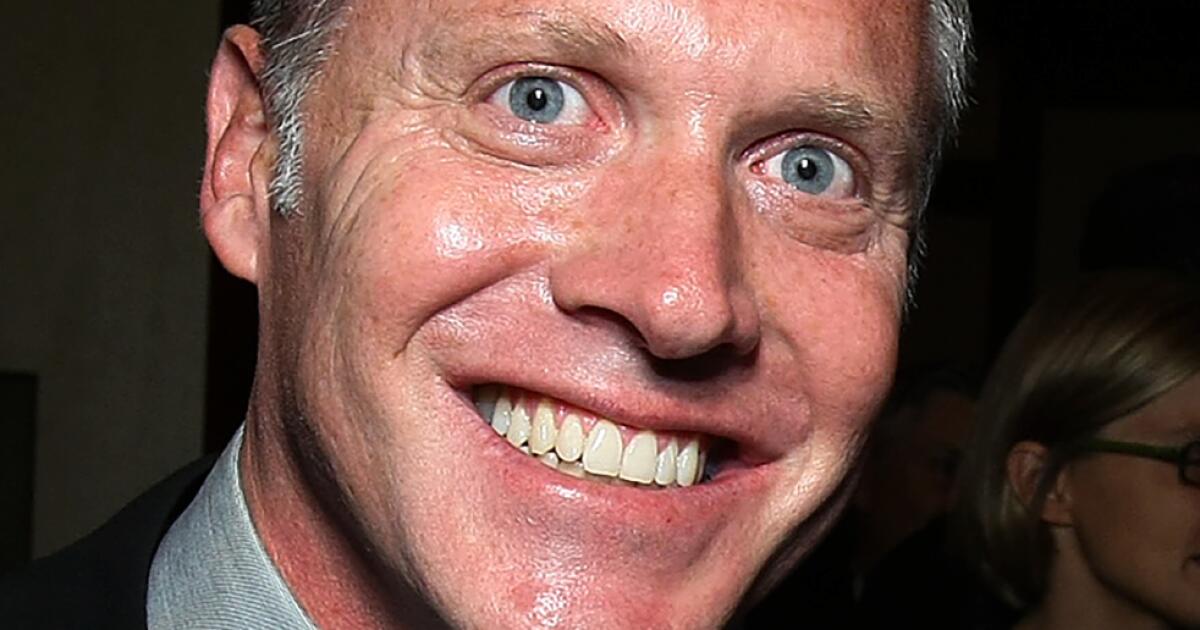

Industry insider weighs in on Kyle Sandilands’ absence on Australian Idol following shock radio suspension

He hasn’t been seen since his recent argument with Jackie ‘O’ Henderson on the KIIS FM show, The Kyle & Jackie O Show.

He hasn’t been seen since his recent argument with Jackie ‘O’ Henderson on the KIIS FM show, The Kyle & Jackie O Show.

Based on a February 25th court filing, the company, headquartered in Boca Raton, Florida, has 13 locations nationwide, including Pasadena and Westwood.

After his parents, David and Victoria Beckham, publicly shared birthday wishes for him, the cooking personality was given a chance to mend his relationship with his family.

The second season, consisting of eight episodes, will premiere on Thursday, May 28th. That’s just over a year after the first season was released.

The Financial Times reports that White House officials are discussing whether investments by Tencent could create security concerns. These discussions are happening as U.S. President Donald Trump and Chinese President Xi Jinping prepare to meet in April.

Morrison shared with Inverse that Lucasfilm reached out to him after he playfully suggested fans ask Disney to revive Boba Fett. They informed him that the character was being put on hold, but might be revisited in the future. As Morrison put it, they told him, “You’ve been put on the shelf, Boba Fett. We might open up the jar later.”

Before E!’s “Dirty Rotten Scandals” premieres on Wednesday, the Daily Mail shared shocking new information from sources close to the show, detailing issues like eating disorders and emotional distress experienced by those involved.

ARN confirmed today that Henderson’s $100 million contract has been cancelled after she informed management she could no longer work with Kyle Sandilands. This decision followed Sandilands’ on-air remarks, which upset her significantly.

According to his obituary, James Gunn passed away at his home in Toronto on Monday after a struggle with ALS.

The former Arrow actor will play Hobie Buchannon in a new 12-episode series. Hobie was previously portrayed by Peter Phelps and Brandon Call in the original Baywatch, which ran for 11 seasons and was the son of Mitch Buchannon.