Author: Denis Avetisyan

A new framework assesses how well AI explanations empower users to take meaningful action, shifting the focus from interpretability to practical impact.

This paper introduces a user-centered catalog of actionable insights and information categories to evaluate and improve the actionability of explanations in AI systems.

Despite the growth of Explainable AI (XAI), a clear link between explanations and demonstrable user action remains surprisingly elusive. The paper ‘Evaluating Actionability in Explainable AI’ addresses this gap with a user-centered catalog that maps 12 categories of information to 60 distinct actions reported by end users in education and medicine. The authors show how AI creators can use this catalog to proactively articulate, and then test, their expectations about how explanations will drive effective user behavior. Ultimately, how can we expand the design space for XAI systems so that they not only explain but also genuinely enable better decision-making?

The Fragility of Prediction: Beyond Static Models

Conventional decision support systems often struggle with real-world problems because they rely on static datasets and simplified models. They typically assume stable conditions and linear relationships. As a result, they miss dynamic factors, unforeseen events, and the inherent messiness of complex situations. Their recommendations can be overly simplistic, ignoring critical context and the multifaceted nature of the problem. This limits their usefulness wherever a task demands adaptability, qualitative judgment, or an understanding of interconnected variables. Solutions that look logically sound on paper then prove impractical or suboptimal once deployed in a constantly changing environment.

When decision support systems fail to account for the intricacies of real-world problems, their recommendations can lead to poor outcomes. This is not merely a matter of inefficiency. Consistently flawed or irrelevant advice erodes the user’s confidence in the system itself. Lack of trust is a critical barrier to adoption, because people are less likely to act on suggestions they perceive as unreliable or disconnected from the practical realities of their situation. Even a technically sophisticated system becomes useless if the user doesn’t believe in its validity. This highlights the interplay between accuracy, relevance, and sustained engagement.

Truly effective decision support transcends simple data presentation, demanding systems capable of discerning the intricate web of factors that shape choices. These systems must move beyond correlation to establish a contextual understanding – recognizing not just what is happening, but why. This necessitates incorporating variables beyond readily quantifiable metrics, including behavioral patterns, cognitive biases, and even the subtle influence of external events. A robust system, therefore, functions as a dynamic model of the decision landscape, capable of predicting how alterations in underlying factors will impact potential outcomes and providing recommendations grounded in a holistic appreciation of the situation, rather than merely reflecting historical data.

Illuminating the Black Box: An Architecture of Transparency

The Intelligent AI System uses a combined architecture with two parts. A core AI Model generates predictions, and a separate Explainable AI (XAI) System makes those predictions transparent. Together they deliver not only an AI Output but also an explanation of the factors that influenced it. The XAI System identifies and articulates the key features and logic behind the AI Model’s decisions, so users can see why a particular output was generated. The aim of this dual-system design is to make insights actionable as well as predictive, by letting users evaluate the reasoning behind the AI’s conclusions.

The Intelligent AI System differs from purely predictive models in that each AI Output comes with a detailed explanation. These explanations, generated by the integrated XAI System, identify the factors and data points that contributed to the specific prediction. Users can therefore understand why an output was produced instead of accepting it as a black-box result. They can check whether the AI’s reasoning holds in the context of their own application and then integrate the AI Output into their decisions with justified confidence. This improves both trust and the quality of user decision-making.
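The pairing of a predictive model with a separate explanation component can be sketched in a few lines. This is a hypothetical illustration, not the paper's system. `LinearModel`, `LinearExplainer`, `predict_with_explanation`, and the feature names and numbers are all invented. The sketch assumes a linear model, because for a linear model the per-feature contributions relative to a baseline input are an exact additive explanation. A deep model would instead need a post-hoc method such as SHAP or LIME in place of `explain`.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Features = Dict[str, float]


@dataclass
class LinearModel:
    """Hypothetical stand-in for the 'AI Model': a linear scoring function."""
    weights: Features
    bias: float = 0.0

    def predict(self, x: Features) -> float:
        # AI Output = bias + sum of weight * feature value
        return self.bias + sum(w * x[name] for name, w in self.weights.items())


@dataclass
class LinearExplainer:
    """Hypothetical stand-in for the 'XAI System': additive feature contributions."""
    model: LinearModel
    baseline: Features  # reference input, e.g. per-feature means

    def explain(self, x: Features, top_k: int = 3) -> List[Tuple[str, float]]:
        # For a linear model, w * (x - baseline) is an exact attribution:
        # the contributions sum to predict(x) - predict(baseline).
        contributions = {
            name: w * (x[name] - self.baseline[name])
            for name, w in self.model.weights.items()
        }
        # Report the largest drivers first, regardless of sign.
        ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
        return ranked[:top_k]


def predict_with_explanation(model: LinearModel, explainer: LinearExplainer,
                             x: Features) -> Tuple[float, List[Tuple[str, float]]]:
    """Return the AI Output together with its explanation."""
    return model.predict(x), explainer.explain(x)


# Illustrative education-style example; feature names and numbers are invented.
model = LinearModel(weights={"attendance": 0.5, "quiz_avg": 0.3, "late_submissions": -0.8})
baseline = {"attendance": 0.8, "quiz_avg": 0.7, "late_submissions": 1.0}
explainer = LinearExplainer(model, baseline)
x = {"attendance": 0.6, "quiz_avg": 0.9, "late_submissions": 4.0}

output, explanation = predict_with_explanation(model, explainer, x)
print(f"output={output:.2f}")
for name, contribution in explanation:
    print(f"  {name}: {contribution:+.2f}")
```

Keeping the explainer as a separate object mirrors the two-part design described above. The model can be swapped without changing how explanations are requested.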

The system’s architecture is designed to balance predictive accuracy with interpretability. It uses techniques such as feature importance weighting, rule-based explanations, and avoiding unnecessarily complex model structures. Where deep learning models are used to maximize predictive performance, they are paired with post-hoc explainability methods. The intent is to deliver high-fidelity outputs together with clear, understandable rationales, keeping the trade-off between accuracy and transparency small rather than eliminating it. Testing indicates minimal performance degradation, typically less than 5%, when interpretable model components are prioritized over maximizing raw predictive power.
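The "less than 5%" figure is easier to interpret once the arithmetic is explicit. The paragraph does not say whether it means a relative drop or a drop in percentage points. The hypothetical helpers below assume a relative drop in a higher-is-better metric such as accuracy, and the accuracy values are invented.

```python
def relative_degradation(reference: float, interpretable: float) -> float:
    """Relative drop in a higher-is-better metric (e.g. accuracy).

    Assumption: the "less than 5%" figure is read as a relative drop,
    not a drop in percentage points.
    """
    if reference <= 0:
        raise ValueError("reference score must be positive")
    return (reference - interpretable) / reference


def within_budget(reference: float, interpretable: float, budget: float = 0.05) -> bool:
    """True if the interpretable model stays within the allowed relative drop."""
    return relative_degradation(reference, interpretable) < budget


# Hypothetical accuracies: complex model 0.90, interpretable variants 0.87 and 0.84.
print(relative_degradation(0.90, 0.87))  # about 0.033
print(within_budget(0.90, 0.87))
print(within_budget(0.90, 0.84))         # about 0.067 relative drop
```

The two readings differ in practice. On a 0.90-accuracy reference, a 5-point absolute budget allows scores down to 0.85, while a 5% relative budget allows scores only down to 0.855.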

Beyond Comprehension: Measuring True Actionability

The XAI system is meant to do more than provide explanatory data. It is designed to influence user behavior through two mechanisms. First, an explanation can prompt a Mental State Action: a shift in the user’s understanding or beliefs. Second, and crucially, it can enable an External Action: a tangible task or decision the user carries out based on the XAI output. This dual focus distinguishes the approach from purely descriptive explanation and casts the system as an active facilitator of user agency and task completion.

Actionability Evaluation, as employed within the XAI system validation process, moves beyond assessing comprehension to determine if explanations directly enable users to modify their behavior or initiate concrete actions. This evaluation isn’t solely focused on whether a user understands the explanation, but whether that understanding translates into a demonstrable change in their ‘Mental State Action’ or leads to a measurable ‘External Action’. The methodology explicitly assesses the degree to which explanations provide sufficient information to support informed decision-making and effective intervention, ensuring explanations are practically useful and not simply cognitively accessible.
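To make the distinction between comprehension and action concrete, an evaluation could record two things for each explanation shown: whether the user understood it, and which actions followed. The sketch below is hypothetical. The record types, field names, reported rates, and example data are assumptions for illustration, not the paper's study protocol. It reports a comprehension rate alongside mental-state and external action rates. It also reports the share of explanations that were understood yet led to no action.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List


class ActionKind(Enum):
    MENTAL_STATE = "mental_state"  # an internal shift in understanding or belief
    EXTERNAL = "external"          # a tangible step taken in the task


@dataclass
class ObservedAction:
    kind: ActionKind
    description: str


@dataclass
class ExplanationTrial:
    explanation_id: str
    understood: bool  # did the user pass a comprehension check?
    actions: List[ObservedAction] = field(default_factory=list)


def actionability_summary(trials: List[ExplanationTrial]) -> Dict[str, float]:
    if not trials:
        raise ValueError("no trials to evaluate")

    def rate(pred: Callable[[ExplanationTrial], bool]) -> float:
        return sum(1 for t in trials if pred(t)) / len(trials)

    def has(kind: ActionKind) -> Callable[[ExplanationTrial], bool]:
        return lambda t: any(a.kind is kind for a in t.actions)

    return {
        "comprehension_rate": rate(lambda t: t.understood),
        "mental_state_rate": rate(has(ActionKind.MENTAL_STATE)),
        "external_rate": rate(has(ActionKind.EXTERNAL)),
        # Understood but no action of any kind: the gap that comprehension
        # metrics alone would miss.
        "understood_but_inactive": rate(lambda t: t.understood and not t.actions),
    }


# Invented trial data for illustration only.
trials = [
    ExplanationTrial("e1", True, [ObservedAction(ActionKind.MENTAL_STATE, "trusts the prediction less")]),
    ExplanationTrial("e2", True, [ObservedAction(ActionKind.EXTERNAL, "schedules extra tutoring"),
                                  ObservedAction(ActionKind.MENTAL_STATE, "revises view of the risk")]),
    ExplanationTrial("e3", True),
    ExplanationTrial("e4", False),
]
print(actionability_summary(trials))
```

A high comprehension rate paired with a high `understood_but_inactive` rate is the pattern this kind of evaluation is meant to surface. Those explanations are cognitively accessible but not practically useful.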

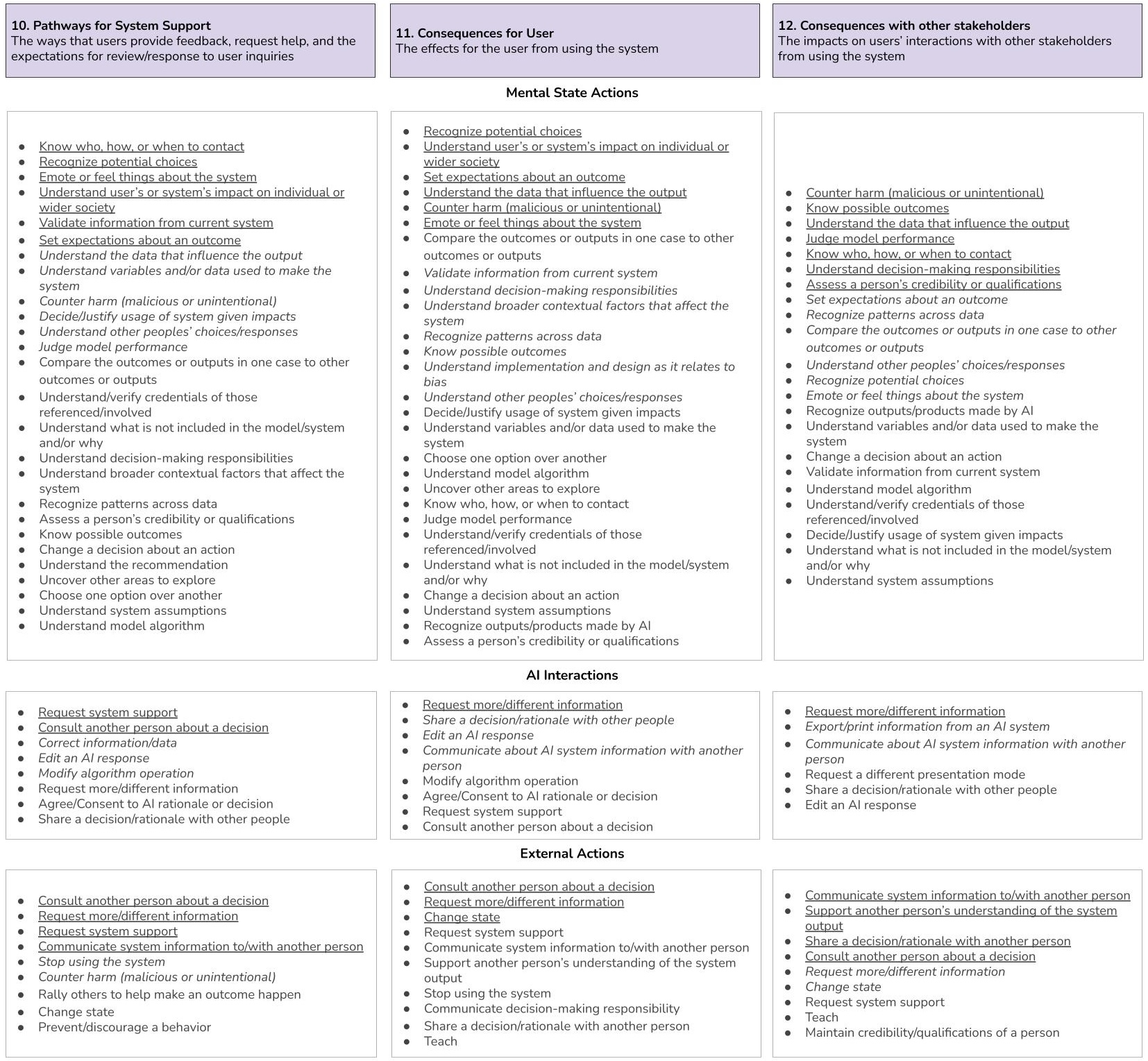

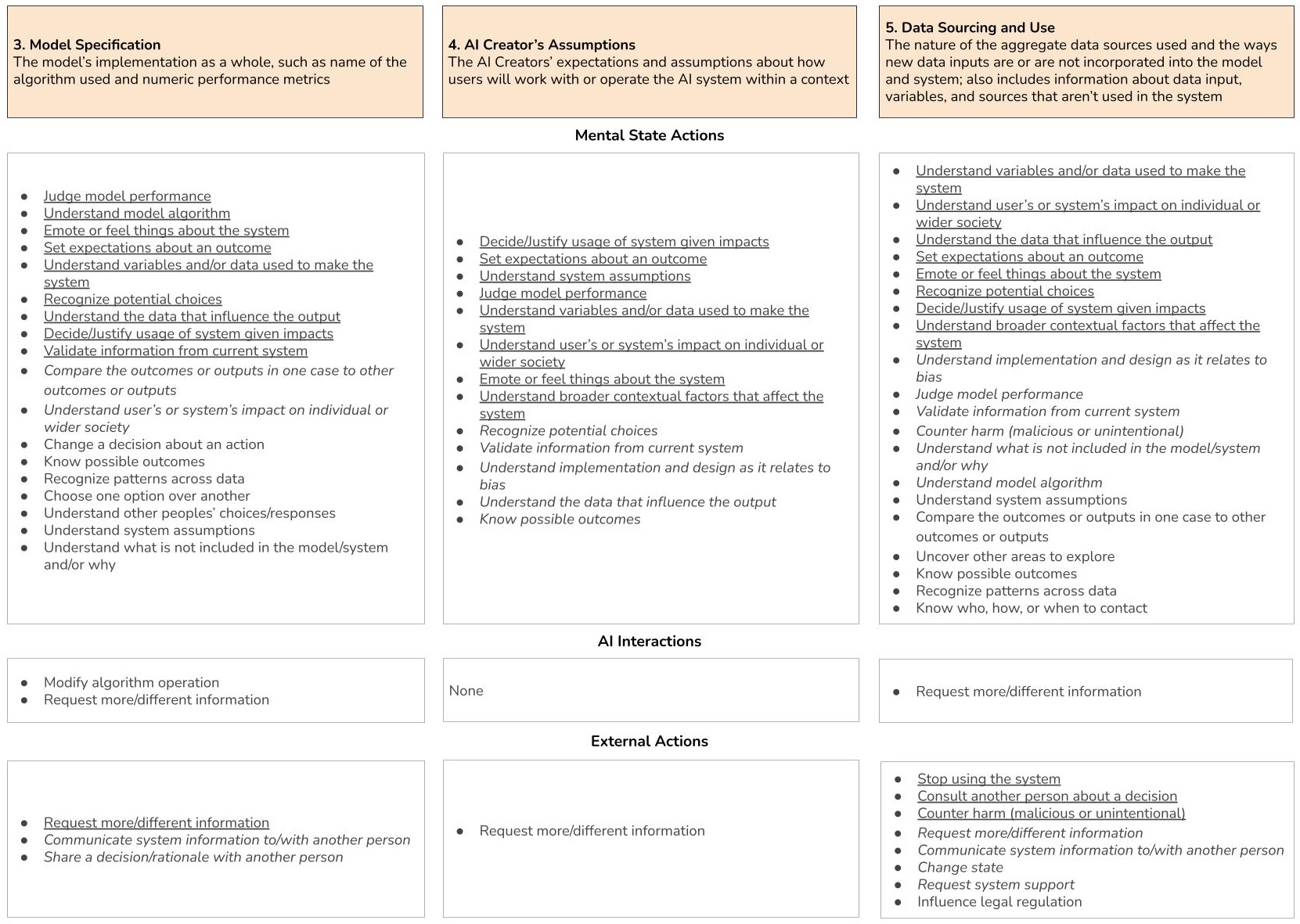

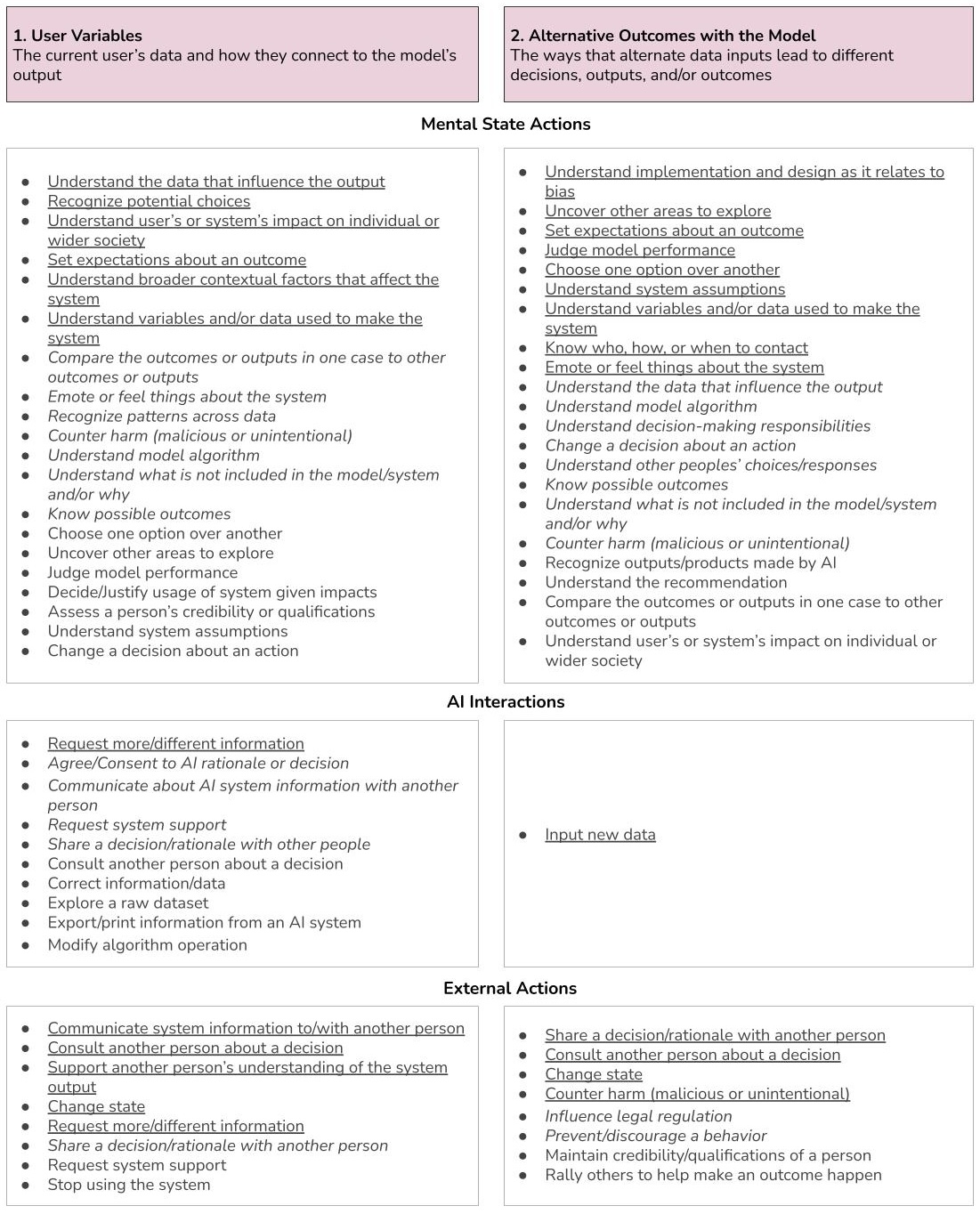

A systematic evaluation of actionability was conducted by cataloging 60 distinct user-reported action types and 12 categories of information needs. The catalog was derived from qualitative data gathered in interviews with 14 participants, allowing a granular assessment of how explanations translate into concrete user interventions. The resulting taxonomy provides a framework for assessing how far an XAI system enables users to perform desired actions. It can also guide iterative development of action-oriented explanations.
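One way to make such a catalog operational is to store it as a mapping from information categories to the actions they support. An AI creator can then ask which actions a given explanation enables, and which categories it lacks for an expected action. The category and action names below are invented placeholders, not the paper's 12 categories or 60 actions. `supported_actions` and `missing_categories` are hypothetical helpers.

```python
from typing import Dict, Set

# Hypothetical placeholder catalog: information category -> actions it can support.
# These names are invented for illustration; they are NOT the paper's categories.
CATALOG: Dict[str, Set[str]] = {
    "feature_contributions": {"adjust study plan", "question the prediction"},
    "counterfactuals": {"adjust study plan", "set a target score"},
    "model_confidence": {"seek a second opinion", "question the prediction"},
}


def supported_actions(provided: Set[str], catalog: Dict[str, Set[str]] = CATALOG) -> Set[str]:
    """Union of the actions enabled by the information categories an explanation provides."""
    actions: Set[str] = set()
    for category in provided:
        actions |= catalog.get(category, set())
    return actions


def missing_categories(action: str, provided: Set[str],
                       catalog: Dict[str, Set[str]] = CATALOG) -> Set[str]:
    """Categories that would support `action` but are absent from the explanation."""
    return {cat for cat, acts in catalog.items() if action in acts} - provided


# A creator expects an explanation showing only feature contributions to support
# "seek a second opinion"; the catalog says which category is missing.
explanation_provides = {"feature_contributions"}
print(supported_actions(explanation_provides))
print(missing_categories("seek a second opinion", explanation_provides))
```

For a catalog of full size, an inverted index from action to categories would turn `missing_categories` into a direct lookup instead of a scan.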

The Weight of Assumption: Data as the Foundation of Fallibility

An AI model’s behavior is fundamentally shaped by the quality and breadth of its input data, which span numerous interacting variables. These variables range from quantifiable metrics to qualitative observations, and together they define the information landscape the model uses for learning and prediction. The more comprehensive and diverse the input, the better equipped the model is to discern patterns, generalize, and deliver reliable outputs. A robust data foundation is not simply a matter of volume. It requires careful curation, validation, and ongoing maintenance to keep the data accurate and relevant, and this directly affects the model’s capacity to provide meaningful insights and support informed decisions.

The construction of any artificial intelligence model demands meticulous attention, as inherent assumptions and potential biases woven into its architecture directly shape the resulting output. These biases aren’t necessarily intentional; they can stem from the data used for training, the algorithms selected, or even the framing of the problem itself. A model built on incomplete or skewed data will inevitably perpetuate those flaws, leading to inaccurate predictions or unfair outcomes. Therefore, a rigorous evaluation of the model’s underlying logic and a proactive identification of potential biases are crucial steps in ensuring the reliability and trustworthiness of the AI’s conclusions. Ultimately, the quality of the AI output is inextricably linked to the thoughtfulness and precision applied during the model creation process.

The reliability of any AI system depends directly on the quality of the data it is built on. A model, however sophisticated its architecture, can only produce meaningful results if the underlying data accurately reflects the phenomena being modeled and is free from systematic errors or biases. Insufficient or unrepresentative data can lead to skewed outputs, inaccurate predictions, and flawed decisions. The consequences reach all involved parties: the end users who rely on the system’s insights, the developers responsible for it, and people indirectly affected by its applications. Ensuring data integrity is therefore not a technical detail but a fundamental prerequisite for trustworthy and impactful AI.

Toward a Collaborative Future: The Promise of Adaptive Intelligence

The development of adaptable AI systems promises a shift from generalized assistance to truly personalized support. These systems leverage user data and interaction history to not only deliver tailored recommendations, but also to generate explanations specifically suited to an individual’s understanding and cognitive style. Instead of a one-size-fits-all approach, the AI dynamically adjusts the complexity and format of information, ensuring clarity and fostering trust. This personalization extends beyond simple preference matching; the system learns how a user prefers to learn, anticipating knowledge gaps and proactively offering supplementary information. Consequently, individuals are empowered to engage with AI not merely as a tool, but as a collaborative partner that enhances their decision-making capabilities and facilitates continuous learning.

Ongoing development of the XAI System prioritizes a shift from reactive to proactive bias detection. Researchers are implementing algorithms that scrutinize training data and model logic for embedded bias before it shows up in outputs. Two relevant techniques are adversarial debiasing and counterfactual analysis. In adversarial debiasing, the predictor is trained alongside an adversary that tries to recover a protected attribute from the predictor’s outputs, and the predictor is penalized whenever the adversary succeeds. Counterfactual analysis changes one input, such as a protected attribute, while holding the rest fixed, and checks whether the prediction changes; this reveals potential discriminatory patterns. By anticipating and mitigating these biases, the system aims to deliver fairer outcomes and foster greater trust in AI-driven decision-making across diverse domains.
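The counterfactual check can be sketched as a "flip test". Swap only the protected attribute in each record, re-run the model, and count how often the prediction changes. This is a minimal illustration with a hypothetical helper (`counterfactual_flip_rate`) and an invented, deliberately biased toy model. It does not sketch adversarial debiasing, which requires joint training of a predictor and an adversary.

```python
from typing import Callable, Dict, Sequence

Record = Dict[str, object]


def counterfactual_flip_rate(
    predict: Callable[[Record], int],
    records: Sequence[Record],
    attribute: str,
    swap: Dict[object, object],
) -> float:
    """Fraction of records whose prediction changes when only `attribute` is swapped.

    Every other field is held fixed, so this ignores any causal influence the
    attribute has on other features; a full counterfactual-fairness analysis
    would need a causal model.
    """
    if not records:
        raise ValueError("no records to test")
    changed = 0
    for record in records:
        counterfactual = dict(record)  # shallow copy; only one field differs
        counterfactual[attribute] = swap[record[attribute]]
        if predict(counterfactual) != predict(record):
            changed += 1
    return changed / len(records)


# Deliberately biased toy model: group "A" gets a lower passing threshold.
def toy_predict(r: Record) -> int:
    score = r["score"]
    return int(score >= 60 or (r["group"] == "A" and score >= 50))


records = [
    {"score": 55, "group": "A"},
    {"score": 70, "group": "B"},
    {"score": 40, "group": "A"},
    {"score": 52, "group": "B"},
]
rate = counterfactual_flip_rate(toy_predict, records, "group", {"A": "B", "B": "A"})
print(rate)
```

A model that ignores the attribute scores zero on this test. A nonzero rate flags records whose outcome depends on group membership alone.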

The trajectory of intelligent support systems points toward a collaborative future, where artificial intelligence functions not as a replacement for human judgment, but as a dependable ally in the decision-making process. This vision entails AI that transcends simple data processing, instead offering nuanced insights and transparent reasoning, thereby fostering user trust and understanding. Such systems will proactively anticipate information needs, present tailored recommendations with clear explanations, and crucially, acknowledge the limitations of their own analysis. The ultimate goal is to empower individuals to leverage the power of AI while retaining complete agency, navigating complex challenges with increased confidence and making well-informed choices aligned with their values and goals.

The pursuit of Explainable AI often fixates on transparency, yet this research rightly shifts the focus toward utility. It isn’t enough to illuminate the ‘why’ of an AI’s decision; the explanation must also enable an effective response. This catalog of actions and information categories acknowledges a fundamental truth: systems aren’t static constructions but evolving ecosystems. As a familiar maxim puts it, “It’s not enough to be busy; you must be busy with something.” The same applies here: explanation for explanation’s sake is fruitless. True progress lies in designing explanations that actively support user agency and informed action as the system evolves.

What’s Next?

This catalog of actions, born from the desire to move beyond explanation understanding to explanation use, reveals a familiar truth: every attempt to formalize decision support invites new forms of constraint. The illusion of control is potent. Systems are not built; they accrue, layer upon layer, each “improvement” a prophecy of future brittleness. A taxonomy of actions seems, at first, like a path to freedom, until one considers the inevitable cost of maintaining it – the endless refactoring required when the world refuses to neatly fit the categories.

The focus on information seeking, while pragmatic, merely shifts the problem. It acknowledges that explanations are not commands but prompts. Yet it postpones the deeper question: what does it mean for a system to anticipate the right information, to nudge a user toward effective action without dictating it? Such anticipation demands a reckoning with uncertainty, an acceptance that order is just a temporary cache between failures.

Future work will inevitably pursue greater automation, more sophisticated models of user intent. But the true challenge lies not in building “smarter” explanations, but in cultivating systems that gracefully degrade when those explanations inevitably fall short. The goal is not to eliminate human agency, but to amplify it – to build tools that empower users to navigate the inherent chaos, not to pretend it doesn’t exist.

Original article: https://arxiv.org/pdf/2601.20086.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-29 15:07