Author: Denis Avetisyan

New research explores how equipping mobile agents with a curiosity-driven approach to knowledge retrieval can significantly improve their ability to perform complex tasks and overcome functional limitations.

This paper introduces a framework leveraging structured ‘AppCards’ and uncertainty estimation to enable mobile agents to proactively seek and utilize relevant knowledge for enhanced task execution.

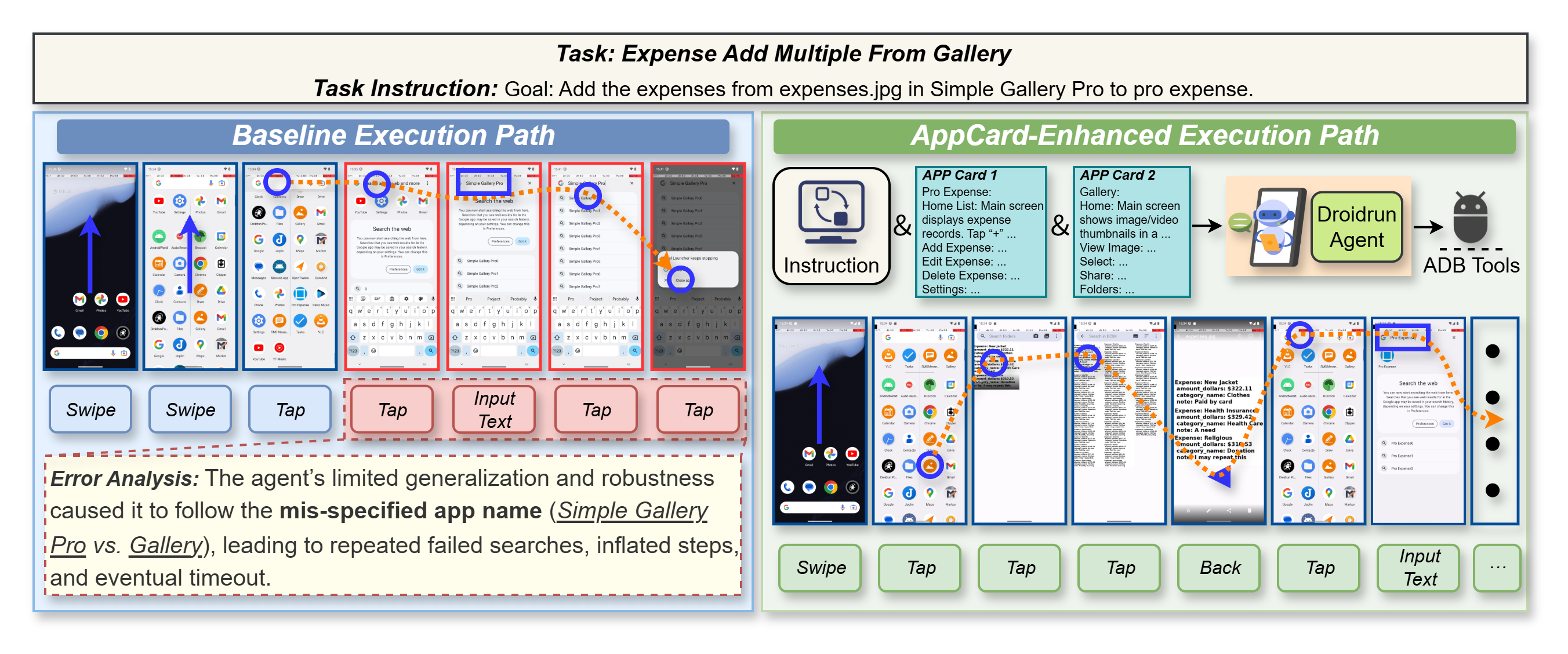

Despite advances in mobile agent automation, reliable performance in complex applications remains hampered by incomplete functional knowledge and poor generalization. This limitation motivates the work presented in ‘Curiosity Driven Knowledge Retrieval for Mobile Agents’, which introduces a framework that uses curiosity to proactively retrieve and integrate external knowledge during task execution. The core innovation lies in structured ‘AppCards’ that encode functional semantics and interaction patterns, enabling agents to compensate for knowledge gaps and improve planning reliability. Combined with GPT-5, the approach achieves a state-of-the-art success rate of 88.8% on the AndroidWorld benchmark. Will this approach of explicitly formalizing and addressing uncertainty prove crucial for realizing truly autonomous and adaptable mobile agents?

The Illusion of Competence: Automation’s Fundamental Flaw

Contemporary task automation, despite significant advances, frequently falters when confronted with situations outside of its pre-programmed parameters or when given vaguely defined goals. Existing systems excel at executing well-defined procedures, but struggle with the nuances of real-world ambiguity; a robotic arm, for example, can repeatedly assemble a widget flawlessly, yet may be unable to adapt when the widget is slightly misaligned or a necessary tool is missing. This limitation stems from a reliance on explicit instructions and a lack of robust mechanisms for interpreting incomplete or contradictory information, leading to brittle performance in dynamic and unpredictable environments. The consequence is not simply inefficiency, but the potential for confidently executing incorrect actions, a critical flaw as automation expands into increasingly complex domains.

A significant hurdle in achieving truly adaptable automation lies in equipping agents with the capacity for metacognition – essentially, the ability to assess their own understanding. Current systems often operate with a false sense of certainty, confidently executing plans even when confronted with incomplete or ambiguous information. This isn’t merely a matter of lacking knowledge, but rather a failure to recognize the gaps in that knowledge. Without this crucial self-awareness, an agent can’t request clarification, seek additional data, or adjust its approach when faced with uncertainty, leading to systematic errors and an inability to generalize beyond familiar situations. Developing algorithms that allow an agent to quantify its own confidence – to distinguish between what it ‘knows’ and what it merely thinks it knows – is therefore paramount to building robust and reliable autonomous systems.

The practical consequence is confidently incorrect action. An agent that cannot assess its own understanding proceeds with a flawed plan without flagging potential errors. This isn’t a matter of simple miscalculation; the agent operates under an illusion of competence, failing to recognize the limits of its knowledge or the ambiguity of its instructions. It commits to an incorrect path with unwavering certainty, so early mistakes compound into later ones and the task ultimately fails. Successful automation therefore requires not only the ability to act, but also the capacity to reliably assess whether those actions are likely to succeed, an element often absent in contemporary designs.

Proactive Inquiry: Modeling Uncertainty for Adaptive Agents

The proposed curiosity-driven knowledge retrieval framework is designed to mitigate uncertainty in mobile agents operating within complex environments. This framework operates by enabling agents to actively request external information based on internally assessed knowledge gaps. Unlike reactive or purely goal-directed approaches, this system explicitly models uncertainty – specifically, the agent’s lack of sufficient information to accurately predict outcomes or plan effectively. The core innovation lies in shifting from passive information intake to proactive knowledge acquisition, allowing the agent to reduce uncertainty before it negatively impacts performance. This is achieved through a closed-loop system where uncertainty estimation drives information requests, and the retrieved knowledge updates the agent’s internal model, subsequently refining future uncertainty estimates.

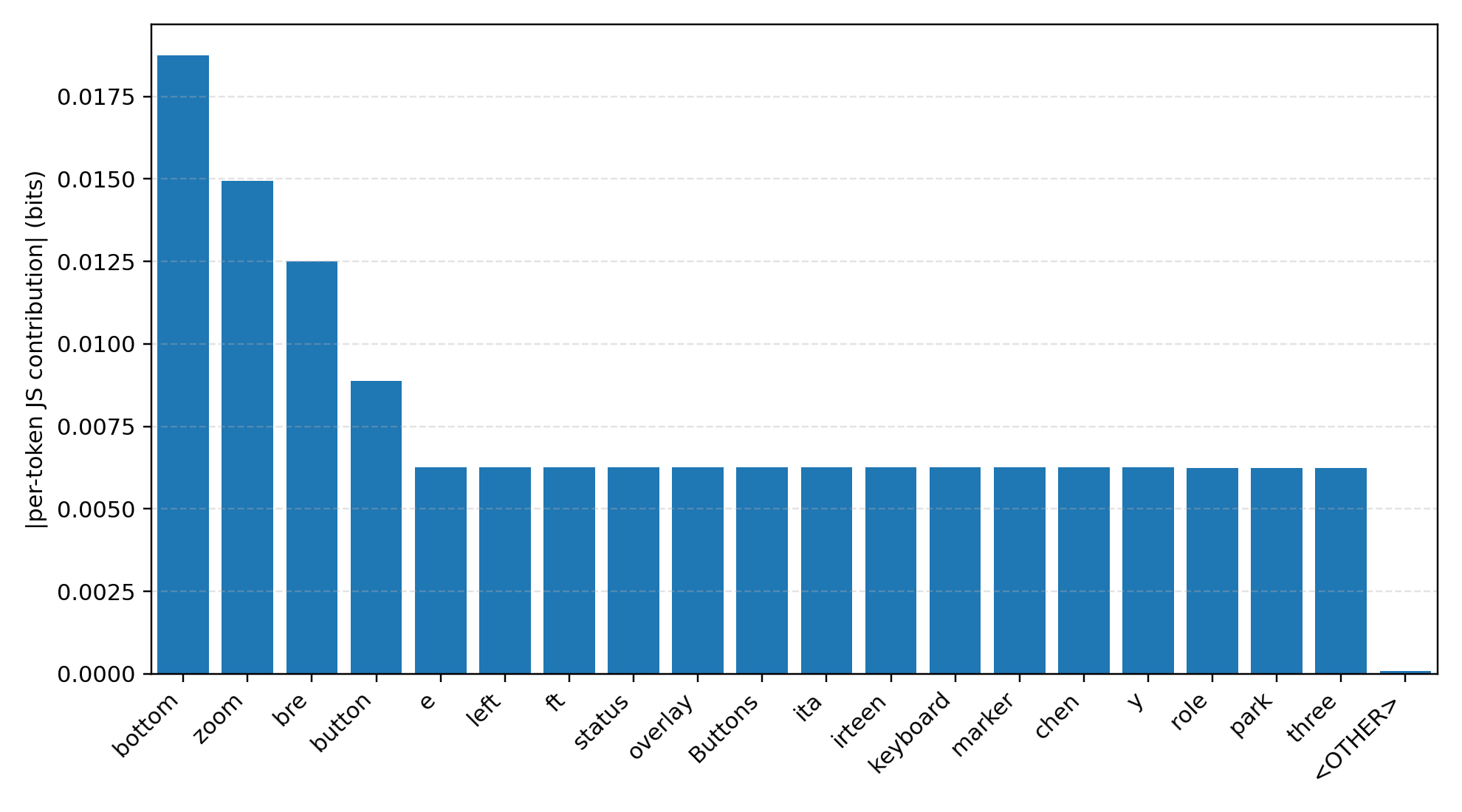

The curiosity score is quantitatively derived from uncertainty estimation techniques applied to the agent’s internal state representation. Specifically, the score reflects the magnitude of epistemic uncertainty – that is, uncertainty arising from a lack of knowledge about the environment, rather than inherent randomness. This is typically calculated using metrics such as predictive variance or information gain, where higher values indicate greater uncertainty and, consequently, a higher curiosity score. The resulting scalar value then serves as a direct proxy for the agent’s ‘need to know’, allowing it to prioritize information gathering based on the level of its own uncertainty.

Linking the calculated curiosity score to external knowledge retrieval mechanisms enables agents to actively reduce uncertainty during operation. This process involves the agent querying a knowledge source – such as a database, API, or the internet – based on the identified areas of high uncertainty, as indicated by the score. Retrieved information is then integrated to refine the agent’s internal model, decreasing the curiosity score and improving the accuracy of subsequent predictions and actions. Consequently, performance on assigned tasks benefits from this proactive information seeking, as the agent is better equipped to handle novel or ambiguous situations without relying solely on pre-programmed responses or reactive behavior.
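
The loop described above can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: the `AgentState` container, the `step_with_curiosity` function, the `threshold` value of 0.3, and the stand-in retriever are all hypothetical names and numbers chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class AgentState:
    """Hypothetical container for knowledge the agent has gathered so far."""
    notes: List[str] = field(default_factory=list)


def step_with_curiosity(
    state: AgentState,
    curiosity_score: float,
    query: str,
    retrieve: Callable[[str], List[str]],
    threshold: float = 0.3,  # assumed cut-off, not a value from the paper
) -> bool:
    """Retrieve external knowledge only when curiosity exceeds the threshold.

    Returns True if a retrieval happened on this step.
    """
    if curiosity_score <= threshold:
        return False  # confident enough: act on existing knowledge
    state.notes.extend(retrieve(query))  # fold retrieved knowledge into the state
    return True


def fake_retrieve(query: str) -> List[str]:
    """Stand-in retriever; a real one might query a knowledge source."""
    return [f"note about {query}"]


state = AgentState()
triggered_low = step_with_curiosity(state, 0.1, "export contacts", fake_retrieve)
triggered_high = step_with_curiosity(state, 0.8, "export contacts", fake_retrieve)
print(triggered_low, triggered_high, state.notes)
```

Gating retrieval on a threshold keeps the agent from paying the cost of a lookup on every step; how the threshold is chosen in practice is left open here.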

![Curiosity is estimated by quantifying the information gain between the agent's predicted next interface state (represented as a prior distribution) and the observed state (a posterior distribution), measured using a tail-adjusted Jensen-Shannon divergence.](https://arxiv.org/html/2601.19306v1/curiosity.png)

Quantifying the Unknown: Latent Bayesian Surprise as a Metric for Discrepancy

Latent Bayesian Surprise serves as the foundational metric for our uncertainty estimation by quantifying the discrepancy between an agent’s prior predictive distribution and the resulting posterior distribution after observing new data. Specifically, it measures how surprising an observation is given the agent’s existing beliefs. This is achieved by calculating the divergence – a measure of dissimilarity – between these two probability distributions: the prior, representing predictions before observation, and the posterior, representing updated beliefs after incorporating the observation. A larger divergence indicates greater uncertainty, signifying the agent’s prior expectations were significantly altered by the new data, and conversely, a smaller divergence indicates higher confidence in the prior prediction. The metric effectively captures the information gain from the observation in relation to the agent’s initial state of knowledge.

Jensen-Shannon Divergence (JSD) is employed as the metric for quantifying divergence between the prior predictive distribution and the posterior distribution obtained after observation. Unlike Kullback-Leibler (KL) Divergence, JSD is both symmetric, meaning [latex]\mathrm{JSD}(P \| Q) = \mathrm{JSD}(Q \| P)[/latex], and always finite, even when the distributions have non-overlapping support. This symmetry is crucial for a balanced uncertainty assessment, and the guaranteed finiteness ensures a stable uncertainty score, preventing unbounded values that could disrupt learning or decision-making processes. Mathematically, JSD is based on the KL divergence and can be expressed as [latex]\mathrm{JSD}(P \| Q) = \tfrac{1}{2}\left(\mathrm{KL}(P \| M) + \mathrm{KL}(Q \| M)\right)[/latex], where [latex]M = \tfrac{1}{2}(P + Q)[/latex] represents the midpoint distribution.
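
To make the formula concrete, here is a small sketch that computes the plain JSD for discrete distributions using only the Python standard library. It does not implement the tail adjustment mentioned in the figure caption, and the function names `kl_divergence` and `js_divergence` and the example distributions are illustrative assumptions.

```python
import math
from typing import Sequence


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(P || Q) in nats; terms with p_i == 0 contribute zero."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """JSD(P || Q) = 0.5 * (KL(P || M) + KL(Q || M)) with M = 0.5 * (P + Q)."""
    m = [0.5 * (pi + qi) for pi, qi in zip(p, q)]
    # M is positive wherever P or Q is positive, so both KL terms stay finite.
    return 0.5 * (kl_divergence(p, m) + kl_divergence(q, m))


prior = [0.7, 0.2, 0.1]      # hypothetical predicted next-state distribution
posterior = [0.1, 0.3, 0.6]  # hypothetical distribution after observation

jsd_forward = js_divergence(prior, posterior)
jsd_backward = js_divergence(posterior, prior)
jsd_disjoint = js_divergence([1.0, 0.0], [0.0, 1.0])
jsd_identical = js_divergence(prior, prior)
print(jsd_forward, jsd_backward, jsd_disjoint, jsd_identical)
```

With natural logarithms the score is bounded above by ln 2, which is reached when the two distributions share no support; identical distributions give zero.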

By quantifying discrepancies between predicted and observed outcomes via Latent Bayesian Surprise and Jensen-Shannon Divergence, the system identifies specific functional knowledge deficits within the agent. These identified deficits then initiate a targeted knowledge retrieval process; rather than broad searches, the system focuses on acquiring information directly relevant to the areas where performance deviates most significantly from expectations. This allows for efficient learning and adaptation, concentrating resources on improving capabilities where they are demonstrably needed, and avoiding redundant information acquisition in areas where the agent already possesses sufficient knowledge.

Standardized Knowledge Representation: AppCards for Functional Clarity

AppCards are utilized as a standardized format for representing application functionality, moving beyond unstructured text found in typical documentation and code repositories. These cards define specific functions, inputs, outputs, and dependencies in a machine-readable format, enabling automated processing and knowledge consolidation. Data is extracted from diverse sources – including API documentation, source code comments, and design specifications – and normalized into the AppCard structure. This structured approach allows for efficient indexing, querying, and integration of functional knowledge, facilitating a unified and consistent understanding of the application’s capabilities across different systems and agents.
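
One way to picture such a card is as a small typed record that serializes to JSON. The sketch below is an assumption about what a machine-readable card could look like, built only from the fields named above (functions, inputs, outputs, dependencies); the class names, field names, and the `ExampleNotes` app are hypothetical and do not reflect the paper's actual schema.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class AppFunction:
    """One function an app exposes (hypothetical schema)."""
    name: str
    inputs: List[str] = field(default_factory=list)
    outputs: List[str] = field(default_factory=list)
    dependencies: List[str] = field(default_factory=list)


@dataclass
class AppCard:
    """A card grouping the functions of a single app (hypothetical schema)."""
    app: str
    functions: List[AppFunction] = field(default_factory=list)

    def to_json(self) -> str:
        # asdict recurses into nested dataclasses, including those inside lists.
        return json.dumps(asdict(self), sort_keys=True)


card = AppCard(
    app="ExampleNotes",
    functions=[
        AppFunction(
            name="create_note",
            inputs=["title", "body"],
            outputs=["note_id"],
            dependencies=["storage_permission"],
        )
    ],
)
restored = json.loads(card.to_json())
print(restored["functions"][0]["name"])
```

Keeping the card as plain data means it can be indexed, diffed, and passed to a planner without any parsing of free-form documentation.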

AppCards function as a centralized and readily available repository of application functionality details. These cards contain structured data representing specific actions, features, and capabilities, allowing agents to bypass traditional documentation searches. When an agent encounters uncertainty during task execution – such as ambiguous instructions or unexpected system behavior – it queries the AppCard index. This direct access to functional knowledge enables rapid retrieval of relevant information, facilitating informed decision-making and minimizing delays caused by knowledge gaps. The structured format of AppCards also allows for efficient automated processing and integration into the agent’s reasoning processes.
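
The article does not say how the AppCard index is searched, so the following is only a toy illustration of the lookup step. It swaps in a simple token-overlap score in place of whatever retrieval method the framework actually uses; the `query_index` function, the index keys, and the descriptions are all made up for the example.

```python
from typing import Dict, List, Set, Tuple


def tokenize(text: str) -> Set[str]:
    """Lowercase whitespace tokenization; deliberately naive."""
    return set(text.lower().split())


def query_index(
    index: Dict[str, str], query: str, top_k: int = 1
) -> List[Tuple[str, int]]:
    """Rank card entries by how many query tokens their description shares."""
    query_tokens = tokenize(query)
    scored = [(key, len(query_tokens & tokenize(desc))) for key, desc in index.items()]
    scored = [item for item in scored if item[1] > 0]  # drop entries with no overlap
    scored.sort(key=lambda item: (-item[1], item[0]))  # best score first, ties by key
    return scored[:top_k]


# Hypothetical index mapping "app.function" keys to short descriptions.
index = {
    "notes.create_note": "create a new note with title and body",
    "notes.delete_note": "delete an existing note by title",
    "contacts.export": "export contacts to a file",
}

hits = query_index(index, "how to export contacts")
misses = query_index(index, "unknown gesture")
print(hits, misses)
```

An empty result is itself useful information: it tells the agent that the card set has no coverage for the current situation.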

Integrating AppCards with a curiosity-driven framework improves agent performance by providing a structured knowledge source that directly addresses uncertainty. When an agent encounters ambiguous situations, the framework triggers a search for relevant information within the AppCards. This process allows the agent to proactively seek clarification on application functionality, rather than relying solely on pre-programmed responses or failing in novel scenarios. Consequently, the agent demonstrates increased robustness by handling a wider range of inputs and improved adaptability by learning from each query and refining its understanding of the application landscape.

Demonstrating Robustness: Performance on the AndroidWorld Benchmark

The efficacy of this novel approach was rigorously tested through the AndroidWorld benchmark, a widely recognized and standardized platform for evaluating mobile agent performance on complex, interactive tasks. This benchmark presents a series of realistic challenges requiring agents to navigate and manipulate a virtual Android device, simulating common user interactions. Utilizing AndroidWorld allows for a direct and comparable assessment of the agent’s ability to handle distribution shifts and unexpected scenarios, mirroring the unpredictable nature of real-world mobile automation. The platform’s comprehensive task suite and standardized metrics provide a robust framework for quantifying improvements in task completion rates and overall agent efficiency, ensuring the results are both meaningful and reproducible within the field of mobile AI.

Stable execution proved critical to demonstrating enhanced performance, and the DroidRun framework facilitated precisely that. This system addresses the inherent challenges of mobile automation – intermittent connectivity, app crashes, and unexpected system behaviors – by providing a robust environment for agent operation. Through DroidRun, researchers observed a marked increase in task completion rates, as the framework automatically handles and recovers from common mobile errors, preventing disruptions to the agent’s workflow. This ultimately led to significant gains in efficiency, allowing agents to perform complex tasks with greater reliability and speed, and showcasing the importance of a resilient infrastructure for effective mobile automation.

The study reports that the curiosity-driven agent, combined with GPT-5, achieves an 88.8% success rate on the AndroidWorld benchmark, a state-of-the-art result. This performance is attributed to the agent’s intrinsic motivation to explore and learn, which allows it to adapt to distribution shift (where the testing environment differs from the training data) and to unexpected scenarios. Unlike agents reliant on pre-programmed responses, these curiosity-driven systems actively seek out missing knowledge, building a more generalized understanding of their surroundings and improving their ability to overcome challenges encountered in real-world applications. This adaptability is critical for mobile automation, where environments are inherently variable and pre-planning for every eventuality is impractical.

Rigorous evaluation revealed substantial gains in task completion across varying difficulty levels. The approach demonstrated a marked 21.0% improvement in success rates when confronted with complex, ‘hard’ tasks, signifying a considerable advancement in handling intricate mobile interactions. Furthermore, even on moderately challenging tasks, a 6.0% performance increase was observed, indicating consistent and reliable gains in efficiency and adaptability compared to previously established benchmarks. These results collectively highlight the system’s capacity to navigate a wider range of user interfaces and operational demands with greater consistency and accuracy, suggesting a significant step forward in mobile automation technology.

The demonstrated performance on the AndroidWorld benchmark validates the framework’s capacity to generate genuinely robust and adaptable mobile automation solutions. Achieving an 88.8% success rate, and significant gains on both hard and medium tasks, suggests this approach transcends the limitations of prior methods when faced with the unpredictable nature of real-world mobile interactions. This isn’t merely incremental improvement; the system’s ability to maintain performance despite distribution shifts and unexpected scenarios indicates a fundamental leap toward automation that reliably handles the complexities of modern mobile applications, promising a future where automated agents can seamlessly navigate and complete tasks with minimal human intervention.

The pursuit of robust mobile agent task execution, as detailed in the study, necessitates a framework grounded in verifiable knowledge. This aligns perfectly with Brian Kernighan’s assertion: “Debugging is twice as hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it.” The paper’s emphasis on structured ‘AppCards’ and uncertainty estimation isn’t merely about achieving functional knowledge; it’s about creating a provably correct system. A mobile agent operating on incomplete or poorly defined knowledge is akin to clever code – it may appear to function, but lacks the logical foundation to reliably address unforeseen circumstances, making debugging and long-term maintenance exponentially more difficult. The framework prioritizes a demonstrable, logical approach to knowledge retrieval, mirroring the importance of verifiable correctness over superficial functionality.

Future Directions

The pursuit of autonomous agency, as demonstrated by this work, inevitably encounters the limitations inherent in approximating intelligence. While curiosity-driven knowledge retrieval offers a pragmatic advance, the very notion of ‘curiosity’ remains a heuristic: a computationally convenient stand-in for a deeper, formal understanding of information need. A rigorous proof of the framework’s optimality, specifying conditions under which retrieval guarantees task completion rather than merely increasing its probability, remains conspicuously absent. The current reliance on ‘AppCards’, though structurally elegant, raises the question of scalability; a finite card set will, by definition, eventually fail to address novel situations.

Future work should therefore prioritize formalizing the link between uncertainty estimation and genuine knowledge deficiency. Simply identifying what an agent doesn’t know is insufficient; the critical step lies in proving that acquiring that specific knowledge demonstrably reduces the space of possible error. Furthermore, exploration of alternative knowledge representations, beyond the structured rigidity of AppCards, is warranted. Perhaps a probabilistic, graph-based approach, allowing for nuanced relationships and incomplete information, would offer greater robustness.

Ultimately, the true test of this line of inquiry will not be incremental improvements in task automation, but the development of a provably correct framework for lifelong learning, one where an agent’s knowledge is not merely ‘sufficient’ for the present, but intrinsically capable of adapting to an unknowable future. Until then, the pursuit remains, at best, a beautifully engineered approximation.

Original article: https://arxiv.org/pdf/2601.19306.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-29 00:04