Author: Denis Avetisyan

A new co-training approach combines the strengths of simulated environments and human demonstrations to dramatically improve robot learning and generalization capabilities.

SimHum leverages both simulation and human data to achieve data-efficient and generalizable robotic manipulation in real-world settings.

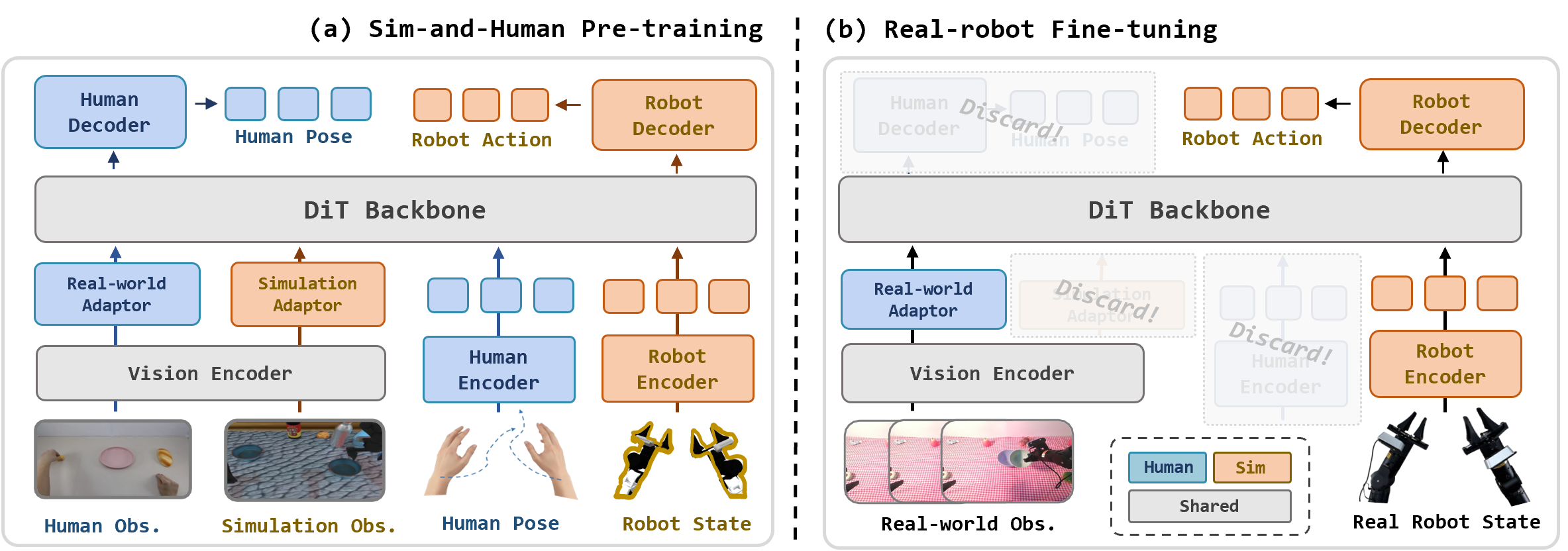

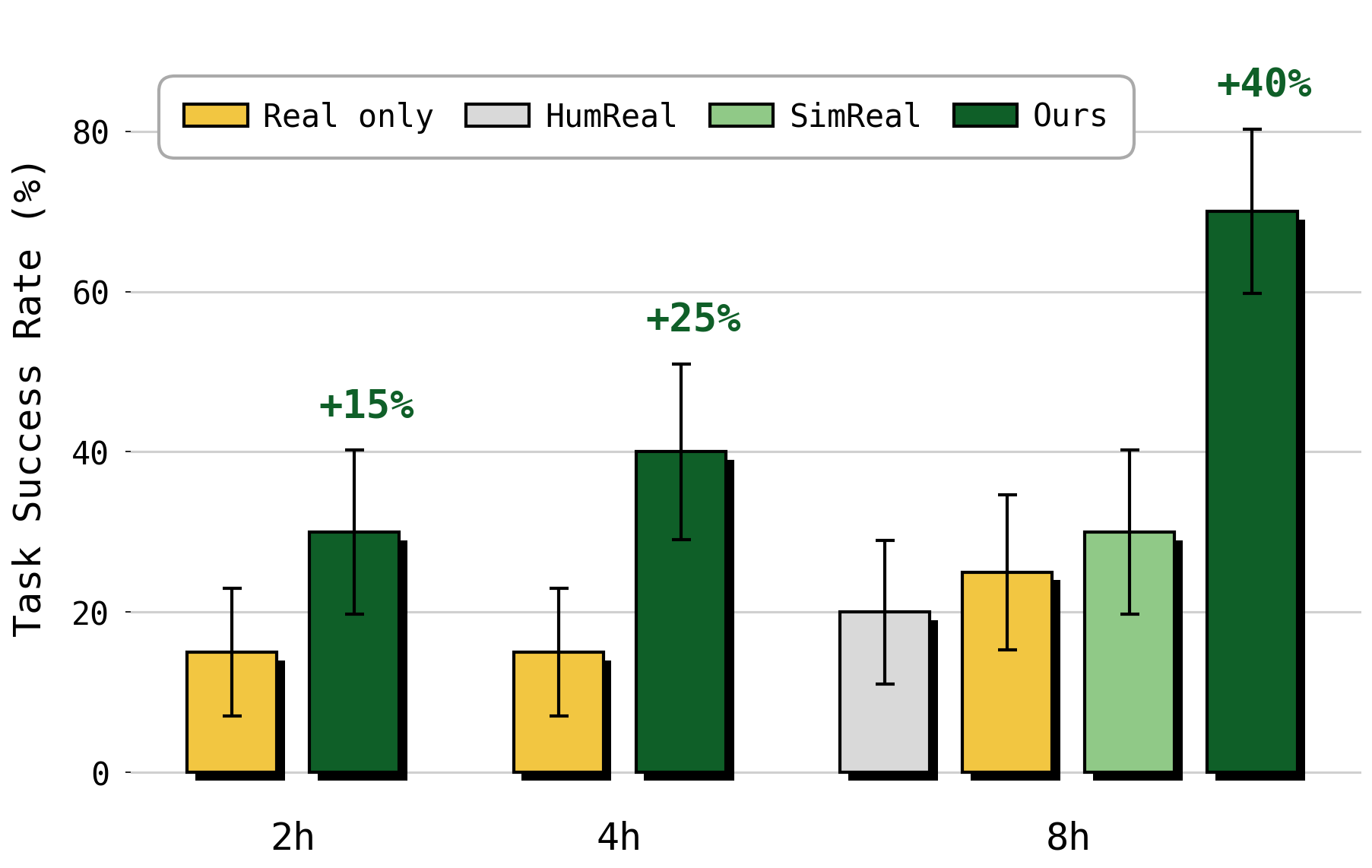

Collecting sufficient real-world data for robust robot learning remains a persistent challenge, yet both simulated and human demonstration datasets suffer from limitations in transferring to novel environments. This work, ‘Sim-and-Human Co-training for Data-Efficient and Generalizable Robotic Manipulation’, addresses this by recognizing a complementary relationship between simulation, which provides action-rich data, and human data, which offers realistic observations. We introduce SimHum, a co-training framework that simultaneously learns from these sources to achieve data-efficient and generalizable robotic manipulation, improving performance by up to [latex]40\%[/latex] under limited data budgets. Could this approach unlock more adaptable and cost-effective robotic systems capable of thriving in complex, real-world scenarios?

The Illusion of Reality: Why Robot Training Always Falls Short

Robotic systems trained exclusively in simulated environments often encounter significant performance drops when deployed in the real world, a phenomenon known as the ‘sim-to-real’ gap. This discrepancy arises from unavoidable differences between the simplified, idealized conditions of simulation and the unpredictable complexity of unstructured environments. Factors such as imperfect sensor data, unmodeled physical interactions, and variations in lighting or object appearance contribute to this gap, causing robots to struggle with tasks they previously mastered in simulation. Consequently, the reliance on purely simulated training data limits the practical deployment of robots in real-world scenarios, hindering their ability to operate reliably in dynamic and unpredictable settings. Bridging this gap is therefore crucial for realizing the full potential of robotic automation.

Training on simulation alone therefore leaves a robot exposed to the discrepancies between the controlled virtual environment and the complexity of reality. Conversely, systems learning solely from real-world interactions often prove inflexible, struggling to adapt to novel situations or generalize beyond the specific scenarios encountered during training. This limitation arises because real-world data is noisy and limited in scope, which hinders the development of robust and adaptable manipulation strategies. Performance can then degrade significantly under even minor variations in object appearance, lighting conditions, or environmental disturbances, leading to brittle behavior and restricted applicability in dynamic, unstructured settings.

Robust robotic manipulation hinges on the development of models capable of bridging the inevitable gap between a robot’s perception of the world and the actions it takes within it. Complex scenes introduce inherent discrepancies – visual noise, imperfect sensor data, and the unpredictable nature of physical interactions – that can derail even carefully programmed behaviors. Consequently, successful manipulation isn’t simply about accurately identifying objects, but about building systems that can reconcile these perceptual uncertainties with the required motor commands. These models must effectively predict the consequences of actions, even when those actions unfold in a manner slightly different from what was anticipated, allowing the robot to adapt and maintain a stable grasp or successfully complete a task despite real-world complexities. This requires moving beyond rigid, pre-defined responses toward more flexible, learning-based approaches that account for the inherent variability of the physical world.

![Simulation data improves generalization to unseen robot positions, as demonstrated by a consistent progress rate across a [latex]4 \times 4[/latex] grid, including areas outside the scope of real-robot training.](https://arxiv.org/html/2601.19406v1/figures/position_ablation.png)

Co-training: A Pragmatic Approach to Robot Learning

Sim-and-Human Co-training, termed SimHum, is a novel machine learning framework designed to enhance robotic learning through the synergistic combination of three data sources: simulated robot experiences, human-provided demonstrations, and real-world robot interaction data. This approach leverages the strengths of each data type; simulation offers scalability and control, human demonstrations provide behavioral priors, and real-world data ensures adaptation to actual environmental conditions. The framework facilitates a training process where these datasets are integrated and utilized to improve the robot’s performance and generalization capabilities in complex tasks.
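One way to picture the integration of the three data sources is a sampler that draws each training example from simulation, human, or real-robot data according to a mixing ratio. The following is a minimal sketch under that assumption; the function name `sample_cotraining_batch`, the dataset sizes, and the weights are all hypothetical and are not taken from the paper.

```python
import random

def sample_cotraining_batch(sources, weights, batch_size, rng):
    """Draw one mixed batch; each item is tagged with its source name.

    `sources` maps a source name to a list of samples. `weights` maps the
    same names to sampling weights (illustrative values, not the paper's).
    """
    names = list(sources)
    probs = [weights[name] for name in names]
    batch = []
    for _ in range(batch_size):
        # Pick the source first, then a sample uniformly within that source.
        name = rng.choices(names, weights=probs, k=1)[0]
        batch.append((name, rng.choice(sources[name])))
    return batch

# Toy datasets standing in for the three sources named in the text.
sources = {
    "sim": [f"sim_{i}" for i in range(1000)],
    "human": [f"human_{i}" for i in range(200)],
    "real": [f"real_{i}" for i in range(100)],
}
weights = {"sim": 0.4, "human": 0.3, "real": 0.3}  # assumed mixing ratio

batch = sample_cotraining_batch(sources, weights, batch_size=8, rng=random.Random(0))
```

Sampling the source first keeps the small real-robot set from being drowned out by the much larger simulated set, which is one plausible reason to mix by ratio rather than by concatenation.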

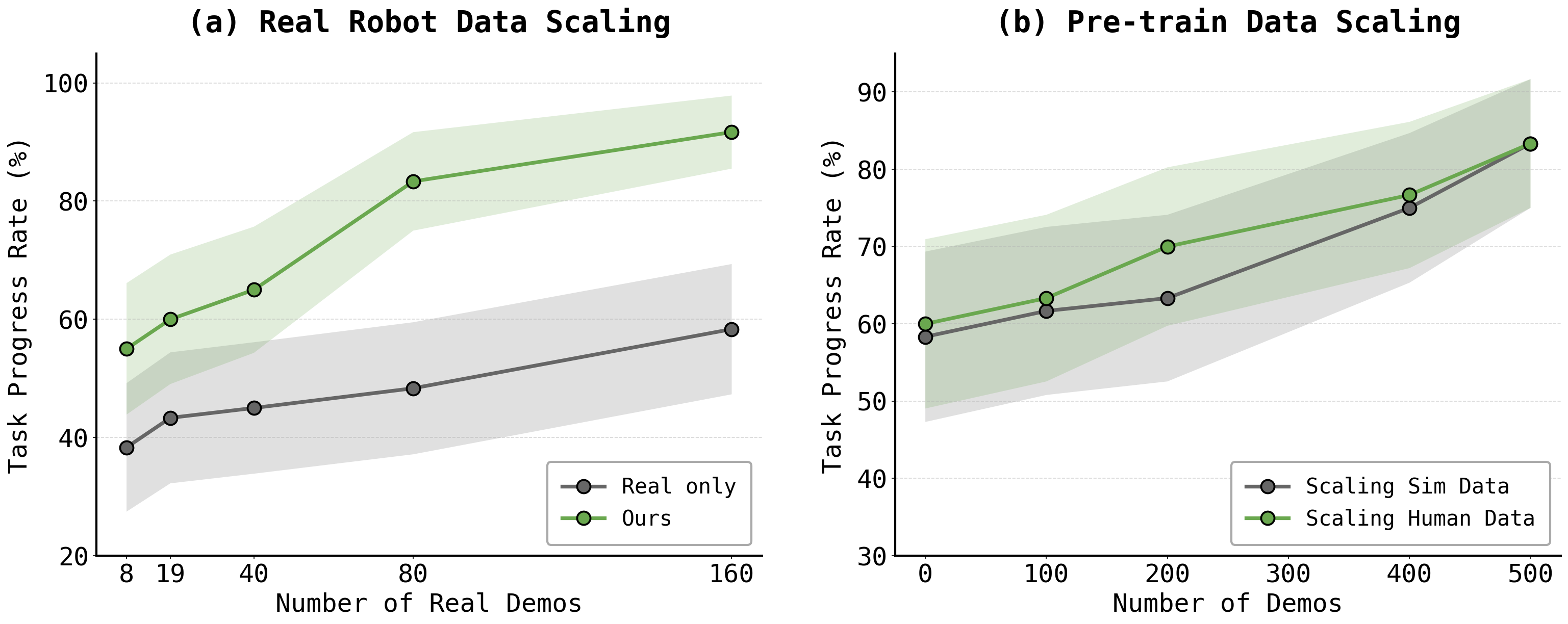

Simulated data serves as a primary source for initializing robot learning algorithms and facilitating exploratory behavior. This data is not utilized in its raw form; instead, it is systematically expanded using techniques such as Data Augmentation. Data Augmentation involves the creation of synthetic variations of existing simulated data points – for example, altering object positions, lighting conditions, or introducing sensor noise. This process effectively increases the diversity and volume of the training dataset, improving the model’s generalization capability and reducing the need for extensive real-world data collection during the initial learning phases. The resulting augmented dataset provides a robust foundation upon which subsequent learning stages, incorporating human demonstrations and real-world robot data, can build.
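The kinds of variation listed above (object position, lighting, sensor noise) can be sketched as a function that perturbs a base scene configuration before it is handed to a simulator. This is an illustration only: the field names, the helper `randomize_scene`, and every range below are assumptions, and a real pipeline would also re-render observations and regenerate trajectories for each variant.

```python
import random

def randomize_scene(base, rng, pos_jitter=0.05, light_range=(0.5, 1.5), max_noise_std=0.02):
    """Return a perturbed copy of a scene config; the input is left unchanged."""
    x, y = base["object_xy"]
    return {
        # Shift the object by up to +/- pos_jitter metres along each axis.
        "object_xy": (x + rng.uniform(-pos_jitter, pos_jitter),
                      y + rng.uniform(-pos_jitter, pos_jitter)),
        # Scale the light intensity by a random gain.
        "light_intensity": base["light_intensity"] * rng.uniform(*light_range),
        # Choose how much noise the simulated sensor should add.
        "sensor_noise_std": rng.uniform(0.0, max_noise_std),
    }

base_scene = {"object_xy": (0.40, 0.10), "light_intensity": 1.0, "sensor_noise_std": 0.0}
rng = random.Random(0)
variants = [randomize_scene(base_scene, rng) for _ in range(5)]
```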

Human demonstration data contributes to robot learning by providing examples of desired behaviors, effectively establishing prior knowledge about successful strategies. This approach bypasses the need for extensive random exploration, allowing the robot to initialize its learning process with informed actions. The incorporation of human-provided trajectories accelerates convergence and reduces the amount of real-world data required to achieve comparable performance levels; the robot learns not just what to do, but also how to do it in a manner consistent with effective human practice, thus enhancing sample efficiency.

The SimHum framework improves performance by up to 40% under limited data budgets. This gain comes from combining simulated data with human demonstrations, which lets the robot learn more effectively from a smaller volume of real-world interactions. Specifically, the framework reduces the need for costly and time-consuming real-world data collection by leveraging the breadth of simulated experience and the behavioral priors established through human-provided examples. This efficiency translates directly into reduced training time and resource expenditure.

Diffusion Policies: A Generative Approach to Actionable Control

A Diffusion Policy forms the central component of this system, operating as a generative model for robot actions. It is trained to predict actions conditioned on current state estimates – encompassing proprioceptive data and processed visual observations. This policy utilizes a diffusion process, iteratively refining a random action towards a feasible and desirable outcome. The model learns a probability distribution over actions given the state, allowing it to generate diverse and contextually appropriate behaviors. Unlike deterministic policies, the diffusion process enables the exploration of a wider range of possible actions, contributing to improved robustness and adaptability in dynamic environments. The policy directly outputs continuous control signals, facilitating precise and nuanced robot manipulation.
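The iterative refinement described above can be sketched with a standard DDPM-style reverse sampler. This is a toy under stated assumptions, not the paper's implementation: the linear noise schedule is a common default, and `oracle_noise` is a hypothetical stand-in for the trained, observation-conditioned network. It returns the exact noise for the special case where the state calls for a single `target` action.

```python
import math
import random

def make_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule: per-step betas and cumulative products of (1 - beta)."""
    betas = [beta_start + (beta_end - beta_start) * t / (num_steps - 1)
             for t in range(num_steps)]
    alpha_bars, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return betas, alpha_bars

def oracle_noise(x, t, target, alpha_bars):
    """Stand-in for the learned noise predictor (exact when the data is one action)."""
    ab = alpha_bars[t]
    return [(xi - math.sqrt(ab) * ai) / math.sqrt(1.0 - ab) for xi, ai in zip(x, target)]

def sample_action(target, num_steps=50, rng=None):
    """Start from Gaussian noise and denoise step by step into an action."""
    rng = rng or random.Random(0)
    betas, alpha_bars = make_schedule(num_steps)
    x = [rng.gauss(0.0, 1.0) for _ in target]
    for t in reversed(range(num_steps)):
        eps = oracle_noise(x, t, target, alpha_bars)
        coef = betas[t] / math.sqrt(1.0 - alpha_bars[t])
        mean = [(xi - coef * ei) / math.sqrt(1.0 - betas[t]) for xi, ei in zip(x, eps)]
        if t > 0:
            sigma = math.sqrt(betas[t])
            x = [m + sigma * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean  # no noise is added on the final step
    return x

action = sample_action([0.3, -0.2], num_steps=50, rng=random.Random(0))
```

With the oracle predictor the sampler lands on the target action up to floating-point error. With a learned predictor trained on varied demonstrations, the same loop would instead draw from a distribution over plausible actions.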

The Modular Action Encoder facilitates transfer learning by projecting both robot and human action spaces into a common latent space. This is achieved through separate encoders for each action space – one for the robot’s control commands and another for human demonstrations – which map these distinct inputs into a unified, lower-dimensional representation. By learning a shared latent space, the system can leverage human demonstrations to guide robot behavior and accelerate learning in new environments, even with differences in kinematic structures or control schemes. This modularity allows for the reuse of learned representations across different robots and tasks, reducing the need for extensive re-training and improving sample efficiency.
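The idea of separate encoders feeding one shared latent space can be sketched as follows. Everything concrete here is an assumption made for illustration: the class names, the use of a single linear layer per embodiment, the random weights, and the 7-D and 6-D action sizes.

```python
import random

class LinearEncoder:
    """One linear layer: latent = W @ action + b (weights are random, not trained)."""

    def __init__(self, in_dim, latent_dim, rng):
        self.W = [[rng.gauss(0.0, 0.1) for _ in range(in_dim)] for _ in range(latent_dim)]
        self.b = [0.0] * latent_dim

    def __call__(self, action):
        if len(action) != len(self.W[0]):
            raise ValueError("action has the wrong dimensionality for this encoder")
        return [sum(w * a for w, a in zip(row, action)) + bias
                for row, bias in zip(self.W, self.b)]

class ModularActionEncoder:
    """Keeps one encoder per embodiment; all of them output the same latent size."""

    def __init__(self, action_dims, latent_dim, seed=0):
        rng = random.Random(seed)
        self.latent_dim = latent_dim
        self.encoders = {name: LinearEncoder(dim, latent_dim, rng)
                         for name, dim in action_dims.items()}

    def encode(self, embodiment, action):
        return self.encoders[embodiment](action)

# Hypothetical sizes: a 7-D robot command and a 6-D human hand motion.
encoder = ModularActionEncoder({"robot": 7, "human": 6}, latent_dim=4)
z_robot = encoder.encode("robot", [0.1] * 7)
z_human = encoder.encode("human", [0.1] * 6)
```

Because both outputs have the same size, a downstream policy can consume either one without knowing which embodiment produced it.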

The Diffusion Policy incorporates two distinct priors during action generation: a Visual Semantic Prior and a Robot Kinematic Prior. The Visual Semantic Prior guides action selection based on the interpreted meaning of visual observations, allowing the policy to react appropriately to environmental context. Simultaneously, the Robot Kinematic Prior constrains generated actions to the robot’s physical capabilities and limitations, ensuring feasibility and preventing impossible or damaging movements. This dual-prior approach effectively combines contextual understanding with physical plausibility, resulting in actions that are both relevant to the situation and safely executable by the robot.

Domain-Specific Vision Adaptors are employed to mitigate the discrepancy between visual observations originating from real-world robotic deployments and those generated within simulated environments. These adaptors function as learned transformations applied to visual input, normalizing features and reducing the impact of domain-specific artifacts such as lighting conditions, textures, and sensor noise. By projecting observations into a common feature space, the Vision Adaptors enable the Diffusion Policy to generalize effectively from simulation to real-world scenarios without requiring extensive real-world training data, thus improving the robustness and transferability of learned policies.
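As a rough illustration of mapping two visual domains into a common feature space, the sketch below swaps in per-domain feature standardization for the learned adaptors the text describes. The class name `DomainAdaptor` and the toy feature values are assumptions; real adaptors would be trained jointly with the policy rather than fitted from summary statistics.

```python
import math

class DomainAdaptor:
    """Per-feature affine map fitted from one domain's features (a stand-in for a learned adaptor)."""

    def __init__(self, features):
        n, dim = len(features), len(features[0])
        self.mean = [sum(f[j] for f in features) / n for j in range(dim)]
        # Fall back to 1.0 when a feature is constant, to avoid dividing by zero.
        self.std = [
            math.sqrt(sum((f[j] - self.mean[j]) ** 2 for f in features) / n) or 1.0
            for j in range(dim)
        ]

    def __call__(self, feature):
        return [(x - m) / s for x, m, s in zip(feature, self.mean, self.std)]

# Toy features: the two domains differ only by offset and scale.
sim_feats = [[0.0, 10.0], [2.0, 14.0], [4.0, 18.0]]
real_feats = [[5.0, 1.0], [6.0, 2.0], [7.0, 3.0]]

adaptors = {"sim": DomainAdaptor(sim_feats), "real": DomainAdaptor(real_feats)}
sim_common = [adaptors["sim"](f) for f in sim_feats]
real_common = [adaptors["real"](f) for f in real_feats]
```

In this toy case the adapted features from the two domains coincide, which is the property a shared policy relies on: after adaptation, it no longer needs to tell the domains apart.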

Evaluation on out-of-distribution tasks indicates a 62.5% Progress Rate, quantifying the system’s ability to generalize to previously unseen scenarios. This metric assesses successful completion of tasks with novel environmental conditions, object configurations, or goal specifications not present in the training data. The 62.5% rate was achieved through testing on a diverse set of scenarios, and represents the percentage of trials where the robot successfully made demonstrable progress towards the assigned goal within a defined time horizon. This performance level suggests a robust capability to adapt to variations in the environment and task requirements beyond the initial training distribution.
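Read as described in this paragraph, the metric is a simple percentage over trials. The sketch below assumes each trial is recorded as a boolean; the outcome list is made up to show the arithmetic and does not reflect the paper's actual trial counts.

```python
def progress_rate(trial_outcomes):
    """Percentage of trials flagged as having made progress toward the goal."""
    if not trial_outcomes:
        raise ValueError("need at least one trial")
    return 100.0 * sum(1 for ok in trial_outcomes if ok) / len(trial_outcomes)

# Illustrative only: 5 of 8 hypothetical trials made progress.
outcomes = [True] * 5 + [False] * 3
rate = progress_rate(outcomes)  # 62.5
```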

![The policy's ability to generalize was evaluated by training on data limited to a [latex]3 \times 3[/latex] inner grid (green) and then testing its performance on an unseen [latex]4 \times 4[/latex] outer grid (orange) in the Stack Bowls Two task.](https://arxiv.org/html/2601.19406v1/figures/position_generalization.png)

Towards Robust Robotic Systems: A Pragmatic Leap Forward

Recent research highlights a substantial advancement in robotic manipulation through the integration of SimHum and a Diffusion Policy, yielding significant improvements in out-of-distribution generalization. This approach enables robots to perform complex tasks in environments markedly different from those encountered during training, a persistent challenge in robotics. By co-training a Diffusion Policy on simulated data and human demonstrations within the SimHum framework, the system adapts and executes actions without requiring extensive fine-tuning in each new setting. The methodology narrows the reality gap, allowing robots to transfer learned skills to previously unseen circumstances, and suggests a pathway towards more versatile and reliable robotic systems for the complexities of the real world.

Robotic systems equipped with this approach can adapt to novel environments without extensive retraining. Traditionally, deploying robots in new settings necessitates substantial fine-tuning with real-world data, a process that is both time-consuming and expensive. This methodology instead enables robots to perform intricate manipulation tasks, such as grasping, placing, and assembling objects, in previously unseen surroundings with only minimal adjustments. The adaptability stems from the system’s ability to transfer learned skills from simulation and limited real-world examples, which narrows the reality gap and gives robotic platforms greater operational flexibility.

A significant hurdle in robotic manipulation lies in the substantial data requirements for training adaptable systems. This research addresses this challenge by demonstrating the power of combining simulated and real-world data, effectively diminishing the need for exhaustive and expensive data collection efforts. By strategically leveraging simulations, the system can learn foundational manipulation skills in a controlled environment, then refine these abilities with a comparatively small dataset gathered from real-world interactions. This approach not only accelerates the development process but also broadens the scope of deployable robotic solutions, as acquiring vast amounts of real-world data often presents logistical and financial barriers to widespread implementation. The resulting system showcases a marked improvement in data efficiency, promising a future where robots can rapidly adapt to new tasks and environments with minimal human intervention and reduced resource expenditure.

Results demonstrate that SimHum significantly elevates a robot’s capacity to perform tasks it hasn’t explicitly been trained for, achieving a 7.1-fold improvement in zero-shot generalization compared to systems trained solely on real-world data. This leap in performance indicates a substantial reduction in the need for task-specific training in each new environment. The system effectively learns a more generalized understanding of manipulation, allowing it to adapt to previously unseen scenarios without requiring extensive fine-tuning or additional data collection. This advancement not only streamlines the deployment of robotic systems but also unlocks the potential for more versatile and adaptable robots capable of functioning reliably in diverse and unpredictable real-world settings.

The development of adaptable robotic systems represents a significant step towards reliable performance in unstructured, real-world environments. Current robotic deployments often struggle when faced with even slight variations from their training conditions, necessitating extensive and costly retraining for each new scenario. This research addresses this limitation by creating a framework for robots to generalize learned manipulation skills, enabling them to operate effectively across diverse and previously unseen settings. By enhancing a robot’s ability to transfer knowledge from simulation to reality and to adapt to novel circumstances, this work lays the foundation for robotic systems that are not simply programmed for specific tasks, but are capable of independent and robust operation in the face of complexity – a critical advancement for applications ranging from manufacturing and logistics to healthcare and disaster response.

The pursuit of data efficiency, as highlighted by SimHum’s co-training approach, invariably circles back to fundamental limitations. It’s a familiar pattern; elegant architectures built on simulated data, striving for generalizable manipulation, eventually encounter the chaotic reality of production environments. As Marvin Minsky observed, “Common sense is what stops us from picking up telephone poles.” SimHum attempts to inject ‘common sense’ through human demonstration, acknowledging that purely synthetic data, no matter how vast, cannot fully capture the nuances of physical interaction. The team’s focus on leveraging both simulation and human data feels less like a breakthrough and more like a sophisticated workaround – a necessary concession to the inherent messiness of the real world. One suspects that in a few years, this ‘novel’ approach will simply become another baseline, replaced by the next attempt to tame the intractable.

What Remains to be Seen

The pursuit of data efficiency in robotic manipulation, as exemplified by this work, inevitably bumps against the limitations of any simulated environment. The gap between the pristine world of simulation and the delightful chaos of production will not be bridged by clever algorithms alone. Diffusion policies offer a degree of robustness, yet the inevitable corner cases – the slightly warped object, the unexpected occlusion, the sheer variety of human error – will always find a way to expose the underlying brittleness. One anticipates a future taxonomy of ‘real-world failure modes’ becoming a core deliverable of any such system.

The leveraging of human demonstration, while intuitively appealing, introduces another layer of complexity. Humans are remarkably good at showing a task, and spectacularly bad at being consistent. The system’s ability to generalize from imperfect, variable data will be the true measure of its success. Expect the emergence of ‘demonstration smoothing’ techniques: algorithms designed to politely ignore the idiosyncrasies of the demonstrator, essentially rewriting history for the sake of robotic compliance.

Ultimately, this work joins a long lineage of ‘generalizable’ robotic systems. It’s a laudable effort, certainly. But legacy isn’t built on elegance; it’s built on maintenance cycles. The true test will come not with the initial deployment, but with the third rebuild after production finds a novel way to break everything. It’s not a question of if it will happen, but when.

Original article: https://arxiv.org/pdf/2601.19406.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-28 22:27