Author: Denis Avetisyan

Researchers unveil Sprout, a lightweight and approachable humanoid designed to accelerate innovation in embodied intelligence and safe human-robot interaction.

This paper details the design and control of Sprout, a modular humanoid robot platform emphasizing accessibility, compliant control, and sim-to-real transfer for broader robotics research.

Despite advances in robotic control and simulation, deploying capable humanoids in everyday human environments remains challenging because of limitations in safety, accessibility, and practicality. The paper ‘Fauna Sprout: A lightweight, approachable, developer-ready humanoid robot’ introduces Sprout, a platform designed to address these gaps through compliant mechanics, integrated control, and a developer-friendly design. Sprout lets researchers explore embodied intelligence with a lightweight, safe, and expressive humanoid, lowering the barriers to both development and real-world deployment. Will this increased accessibility accelerate progress toward versatile, integrated human-robot collaboration?

The Imperative of Robust Perception

Conventional robotic systems often struggle outside highly structured settings. Programmed for specific tasks under predictable conditions, they degrade, and sometimes fail outright, when confronted with the messiness of real-world environments. The same limitation affects interaction with people: subtle cues such as body language, tone of voice, and ambiguous instructions are hard to interpret for robots that lack the necessary perceptual and cognitive abilities. Rigid, hand-coded behavior cannot anticipate the enormous variability of human behavior and dynamic surroundings. This limits how well robots can integrate into everyday life and collaborate with people in meaningful ways.

For robots to function reliably beyond controlled settings, they must perceive and map their surroundings accurately. This means more than identifying objects: the robot must build a dynamic, three-dimensional understanding of space, including potential obstacles, navigable paths, and the relationships between objects. A robust perceptual system lets a robot anticipate changes, react to unforeseen events, and execute actions safely and effectively. Without this foundational ability to ‘see’ and interpret the world, even sophisticated planning and control algorithms become fragile, limiting a robot’s utility in complex, real-world scenarios. The quality of a robot’s actions is bounded by the fidelity of its environmental representation, which makes perception a critical gateway to autonomy.

These perceptual limitations remain a significant obstacle to broader deployment. Sensors and algorithms have advanced considerably, yet current systems still falter under unpredictable lighting, occlusions, novel objects, and dynamic scenes. Many approaches rely on curated datasets and controlled conditions, so performance becomes brittle once inputs deviate even slightly from the training distribution. Two gaps stand out: robustness, the ability to maintain accuracy under varying and adverse conditions, and adaptability, the capacity to learn and generalize from new experience. Together they keep robots confined to specialized, predictable applications and delay their use in complex, unstructured settings.

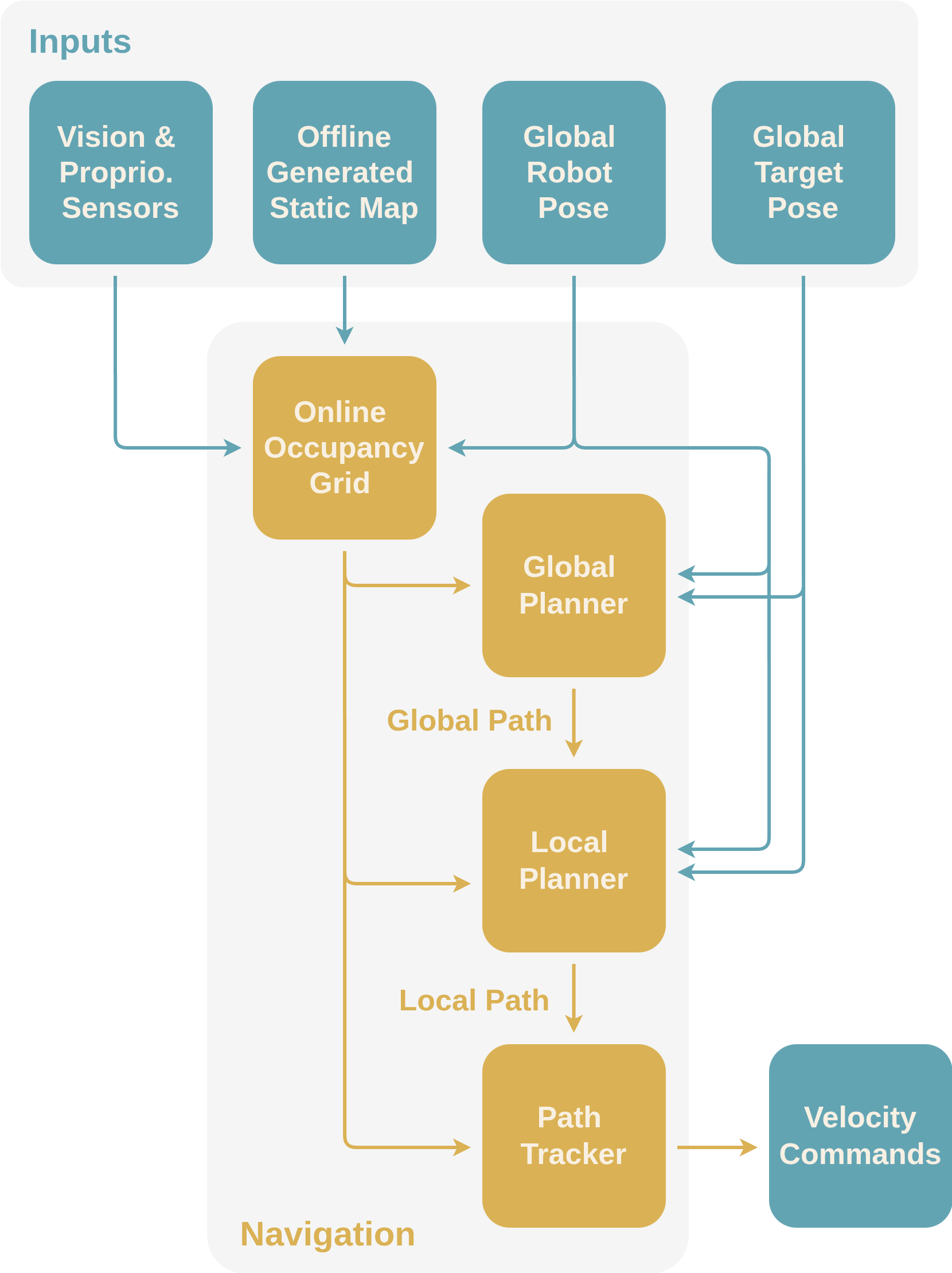

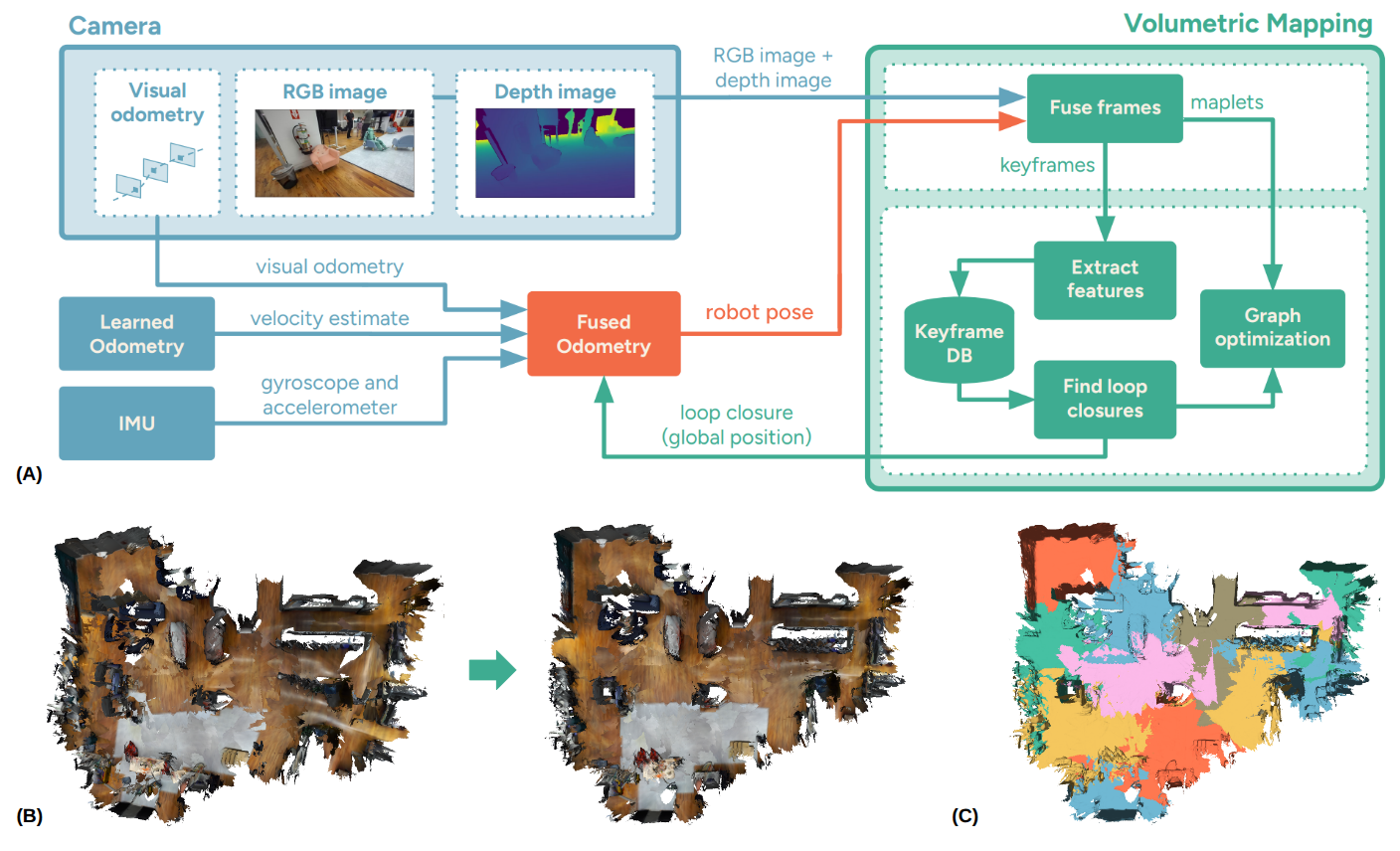

Constructing a Verifiable World Model

The Sprout platform builds three-dimensional maps of its environment by processing on-board sensor data into a volumetric representation of its surroundings. The mapping process focuses on reconstructing surfaces and obstacles so the robot can perceive and interact with its environment. The maps are not static. They are continuously updated as the robot explores, so changes in the mapped space are reflected over time. These maps form the base data layer for downstream modules, including navigation, path planning, and object recognition.

Sprout’s world modeling relies on the integration of data from Time-of-Flight (ToF) sensors and Visual Simultaneous Localization and Mapping (Visual SLAM). ToF sensors provide accurate depth information, particularly in low-texture or poorly lit environments where Visual SLAM may struggle with feature detection. Visual SLAM, conversely, excels at establishing a geometrically consistent map by tracking visual features over time. By fusing these complementary data streams, the system achieves a more robust and accurate 3D world model than either sensor could provide independently, mitigating the weaknesses of each individual system and increasing overall mapping reliability.
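The article does not specify Sprout's fusion rule. The sketch below is a minimal illustration that assumes each sensor reports a depth estimate and a known variance for the same point. Inverse-variance weighting lets the less noisy source dominate, and the function falls back to whichever sensor is valid when the other returns no reading. The function name `fuse_depth` and the variance model are illustrative assumptions, not Sprout's implementation.

```python
import math


def fuse_depth(tof_depth: float, tof_var: float,
               slam_depth: float, slam_var: float) -> tuple[float, float]:
    """Fuse two independent depth estimates (metres) of the same point.

    Each estimate is weighted by the inverse of its variance, so the more
    certain sensor dominates. A non-finite depth (e.g. Visual SLAM found no
    features in a low-texture region) falls back to the other sensor.
    """
    if not math.isfinite(slam_depth):
        return tof_depth, tof_var
    if not math.isfinite(tof_depth):
        return slam_depth, slam_var
    w_tof = 1.0 / tof_var
    w_slam = 1.0 / slam_var
    fused = (w_tof * tof_depth + w_slam * slam_depth) / (w_tof + w_slam)
    fused_var = 1.0 / (w_tof + w_slam)  # never larger than either input variance
    return fused, fused_var
```

For example, a ToF reading of 2.0 m (variance 0.01) and a SLAM estimate of 2.2 m (variance 0.04) fuse to about 2.04 m with variance 0.008. The result sits closer to the more precise ToF value and is more certain than either input.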

Sprout represents 3D space with OctoMap, a probabilistic mapping framework that stores the environment as an octree in which each node holds an occupancy probability (internally, in log-odds form). Because each node stores a degree of belief rather than a binary occupied/free state, the robot can handle sensor noise and incomplete data. The octree structure keeps memory usage low and updates fast, which matters in dynamic environments. Representing uncertainty explicitly also lets the system avoid collisions despite noisy readings and build a usable map under partial observability, supporting robust localization and navigation.
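The sketch below shows the log-odds occupancy update that OctoMap-style maps rely on. For brevity, a dictionary keyed by integer voxel index stands in for the octree. The hit/miss probabilities and clamping bounds are illustrative assumptions, not values taken from the paper. Clamping prevents a voxel from becoming so certain that later observations, such as a chair being moved away, can no longer flip it.

```python
import math


def logit(p: float) -> float:
    """Convert a probability to log-odds."""
    return math.log(p / (1.0 - p))


# Illustrative sensor model (assumed values, not Sprout's parameters).
L_HIT = logit(0.7)    # evidence added when a beam ends in a voxel
L_MISS = logit(0.4)   # negative evidence when a beam passes through a voxel
L_MIN = logit(0.12)   # clamping bounds keep voxels able to change state
L_MAX = logit(0.97)


class OccupancyMap:
    """Sparse log-odds occupancy map; a dict stands in for the octree."""

    def __init__(self, resolution: float = 0.05):
        self.resolution = resolution  # voxel edge length in metres
        self.log_odds: dict[tuple[int, int, int], float] = {}

    def key(self, x: float, y: float, z: float) -> tuple[int, int, int]:
        """Integer voxel index; floor() handles negative coordinates."""
        r = self.resolution
        return (math.floor(x / r), math.floor(y / r), math.floor(z / r))

    def update(self, point: tuple[float, float, float], hit: bool) -> None:
        """Fuse one observation of the voxel containing `point`."""
        k = self.key(*point)
        value = self.log_odds.get(k, 0.0) + (L_HIT if hit else L_MISS)
        self.log_odds[k] = min(max(value, L_MIN), L_MAX)

    def probability(self, point: tuple[float, float, float]) -> float:
        """Occupancy probability; unobserved voxels return 0.5 (unknown)."""
        value = self.log_odds.get(self.key(*point), 0.0)
        return 1.0 / (1.0 + math.exp(-value))
```

Working in log-odds turns each Bayesian update into a single addition. This is one reason probabilistic occupancy maps can be updated cheaply as new scans arrive.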

A detailed environmental map is a prerequisite for autonomous navigation and effective decision-making on Sprout, since the robot uses it to plan paths, avoid obstacles, and interact with its surroundings. The Sprout mapping implementation requires approximately 30% less computation than RTAB-Map. This allows efficient operation on embedded hardware and reduces energy consumption.

Achieving Compliant and Whole-Body Control

The Sprout platform uses compliant control to regulate interaction forces during physical contact. Its control algorithms let the robot deviate from a commanded trajectory when external forces act on it, softening impacts and reducing the risk of injuring people or damaging objects. These strategies favor force regulation over strict positional accuracy, so the robot can yield to unexpected contact while remaining stable. Lower contact forces make the robot both safer and more predictable during interaction.
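One common way to obtain this behavior is joint-level impedance control, in which each joint acts like a virtual spring and damper around its setpoint. The sketch below assumes that scheme. The class name, gains, and torque cap are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class JointImpedance:
    stiffness: float     # K, virtual spring constant (N*m/rad)
    damping: float       # D, virtual damper constant (N*m*s/rad)
    torque_limit: float  # hard cap on commanded torque (N*m)

    def torque(self, q_des: float, q: float, qd_des: float, qd: float,
               tau_ff: float = 0.0) -> float:
        """Spring-damper torque toward the setpoint plus an optional
        feedforward term (e.g. gravity compensation), saturated for safety."""
        tau = (self.stiffness * (q_des - q)
               + self.damping * (qd_des - qd)
               + tau_ff)
        return max(-self.torque_limit, min(self.torque_limit, tau))
```

Lowering `stiffness` lets a joint yield further under the same push. `torque_limit` bounds the worst-case torque regardless of how far the joint is from its setpoint.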

The Sprout platform achieves dynamic, stable locomotion and manipulation through whole-body control, coordinated centrally by a Motor Control Module. This module integrates data from onboard sensors, including IMUs, joint encoders, and force/torque sensors, to compute and execute coordinated trajectories for all actuated joints. With this coordination, the robot keeps its balance while navigating uneven terrain, recovers from external disturbances, and performs complex manipulation tasks. The system computes inverse kinematics and dynamics in real time, enabling precise control of the robot’s end-effectors and body pose even during rapid or unpredictable movements. Because the same control covers legged locomotion and arm manipulation, the robot can switch smoothly between modes of operation across a wide range of application scenarios.
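Whole-body control on a humanoid solves a much larger constrained problem. Its inverse-kinematics core can nevertheless be illustrated on a two-link planar arm. The sketch below uses damped least squares, a standard IK method that is assumed here rather than confirmed by the paper. The link lengths, damping factor, and per-step error cap are hypothetical.

```python
import math

LINK1, LINK2 = 0.25, 0.25  # hypothetical link lengths in metres


def forward(q1: float, q2: float) -> tuple[float, float]:
    """End-effector position of a two-link planar arm."""
    x = LINK1 * math.cos(q1) + LINK2 * math.cos(q1 + q2)
    y = LINK1 * math.sin(q1) + LINK2 * math.sin(q1 + q2)
    return x, y


def jacobian(q1: float, q2: float) -> list[list[float]]:
    s1, c1 = math.sin(q1), math.cos(q1)
    s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
    return [[-LINK1 * s1 - LINK2 * s12, -LINK2 * s12],
            [LINK1 * c1 + LINK2 * c12, LINK2 * c12]]


def ik_step(q, target, damping=0.05, max_err=0.05):
    """One damped-least-squares step: dq = J^T (J J^T + damping^2 I)^-1 e."""
    x, y = forward(*q)
    ex, ey = target[0] - x, target[1] - y
    norm = math.hypot(ex, ey)
    if norm > max_err:  # cap the task-space error to keep steps small
        ex, ey = ex * max_err / norm, ey * max_err / norm
    J = jacobian(*q)
    # A = J J^T + damping^2 I is a symmetric 2x2 matrix [[a, b], [b, d]].
    a = J[0][0] ** 2 + J[0][1] ** 2 + damping ** 2
    b = J[0][0] * J[1][0] + J[0][1] * J[1][1]
    d = J[1][0] ** 2 + J[1][1] ** 2 + damping ** 2
    det = a * d - b * b  # positive because damping > 0
    # w = A^-1 e, then dq = J^T w.
    wx = (d * ex - b * ey) / det
    wy = (-b * ex + a * ey) / det
    return (q[0] + J[0][0] * wx + J[1][0] * wy,
            q[1] + J[0][1] * wx + J[1][1] * wy)


def solve_ik(target, q=(0.3, 0.6), iters=500, tol=1e-6):
    """Iterate DLS steps until the end effector is within tol of target."""
    for _ in range(iters):
        q = ik_step(q, target)
        x, y = forward(*q)
        if math.hypot(target[0] - x, target[1] - y) < tol:
            break
    return q
```

The damping term keeps joint steps bounded near singular configurations, such as a fully stretched arm, at the cost of slightly slower convergence near the solution.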

Deep Reinforcement Learning (DRL) is implemented within the Sprout platform to optimize control policies beyond pre-programmed behaviors. This involves training agents through trial and error in simulated and real-world environments, allowing the robot to learn adaptive strategies for tasks like manipulation and locomotion. The DRL algorithms are utilized to refine parameters governing motor control, enabling the robot to respond effectively to unpredictable external forces, changes in payload, and variations in terrain. This adaptive capability improves robustness and allows Sprout to maintain performance across a wider range of operational conditions than would be achievable with static control schemes.
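The article does not detail Sprout's training setup. Domain randomization is a widely used ingredient of sim-to-real DRL: each simulated episode samples physical parameters from ranges wide enough to cover the real robot, so the learned policy tolerates mismatch between simulation and hardware. The sketch below assumes that technique. The parameter names and ranges are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class PhysicsParams:
    payload_kg: float            # extra mass attached to the torso
    ground_friction: float       # Coulomb friction coefficient
    motor_strength_scale: float  # multiplier on nominal actuator torque
    push_force_n: float          # magnitude of a random external push


# Hypothetical ranges; a real setup would derive them from system identification.
RANGES = {
    "payload_kg": (0.0, 2.0),
    "ground_friction": (0.4, 1.2),
    "motor_strength_scale": (0.85, 1.15),
    "push_force_n": (0.0, 30.0),
}


def sample_params(rng: random.Random) -> PhysicsParams:
    """Draw one set of simulator parameters for a training episode."""
    return PhysicsParams(**{name: rng.uniform(lo, hi)
                            for name, (lo, hi) in RANGES.items()})
```

A training loop would call `sample_params` before resetting each episode. That way the policy never specializes to a single payload, friction value, or push strength.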

The Sprout control architecture is built on the Robot Operating System 2 (ROS 2) framework, chosen for its robustness and flexibility in managing complex robotic systems. The essential onboard software services use approximately 33% of a single CPU core, indicating efficient resource use. ROS 2 supports modularity and scalability, making it straightforward to integrate different control algorithms and sensor-processing pipelines. It also provides real-time-capable communication and data distribution, which responsive and stable operation depends on, along with tools for debugging, testing, and simulation.

![This teleoperation system integrates virtual reality control ([green]) with robot services via core APIs ([blue]), enabling retargeting ([pink]), autonomous/teleoperated mode switching ([yellow]), and whole-body control ([orange]).](https://arxiv.org/html/2601.18963v1/figures/teleop.png)

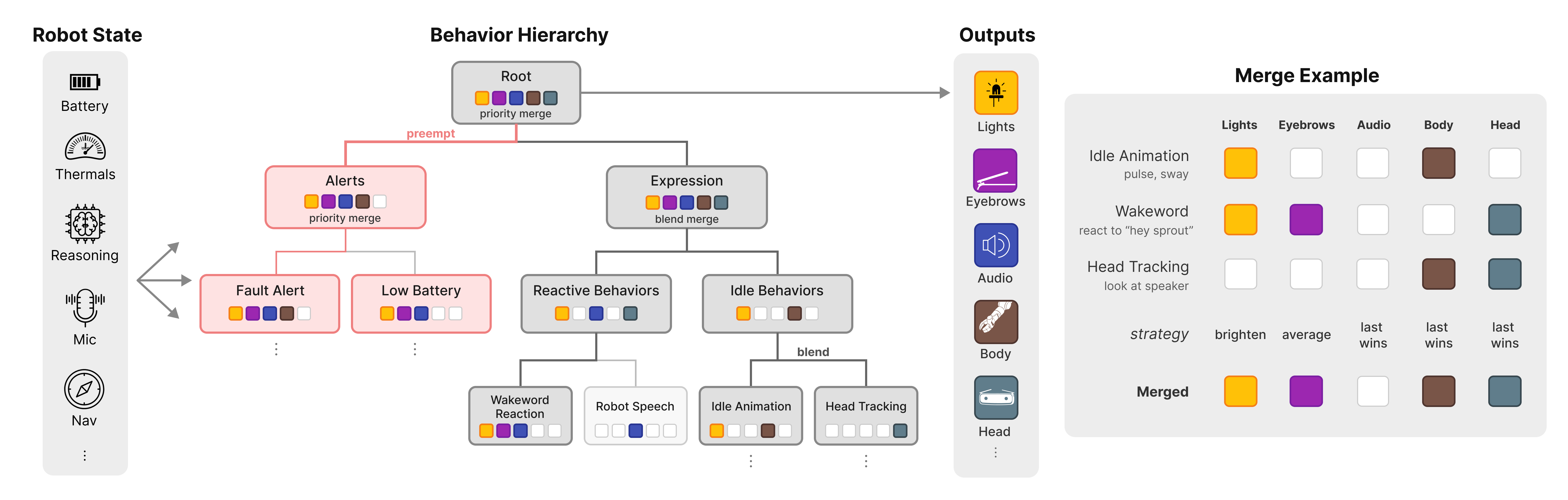

Bridging the Communication Gap with Intuitive Interaction

The Sprout platform aims for interaction that feels natural and requires minimal training. Rather than relying on traditional programming or complex commands, its control interfaces emphasize direct manipulation and intuitive gestures, letting operators guide the robot with immediacy and precision. By hiding the underlying technical complexity, the platform encourages collaboration between human and machine and lets users focus on the task rather than on the details of robot control. This speeds up task completion and reduces errors caused by cumbersome or confusing interfaces.

Virtual reality teleoperation addresses the challenges of remote robot control by immersing the operator in the robot’s environment. Instead of joystick controls or 2D video feeds, the system maps the operator’s movements and viewpoint directly onto the robot’s actions and presents the scene in an immersive, visually realistic view. This reduces the cognitive load of remote operation and allows more precise, nuanced control, much like being physically present with the robot. The resulting sense of presence improves the operator’s ability to perform complex tasks in remote or hazardous environments, narrowing the gap between human intention and robotic execution.
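Retargeting, listed among the teleoperation components in the figure above, maps operator motion onto a body of a different size. The sketch below handles a single hand position and assumes a simple offset-scale-clamp mapping. The function name, scale factor, and workspace bounds are hypothetical. A real system would also retarget orientation and pass the result through whole-body IK.

```python
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class Workspace:
    """Axis-aligned box the robot hand can reach, in the robot base frame."""
    lo: Vec3
    hi: Vec3


def retarget(operator_pos: Vec3, operator_origin: Vec3, robot_origin: Vec3,
             scale: float, ws: Workspace) -> Vec3:
    """Map an operator hand position to a robot end-effector target:
    take the displacement from a calibration origin, scale it to the
    robot's smaller body, add it to the robot's origin, and clamp."""
    target = []
    for i in range(3):
        p = robot_origin[i] + scale * (operator_pos[i] - operator_origin[i])
        target.append(min(max(p, ws.lo[i]), ws.hi[i]))
    return (target[0], target[1], target[2])
```

A scale below 1 compensates for the robot being smaller than the operator. Clamping keeps a large operator reach from commanding a pose the robot cannot achieve.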

Sprout uses the Model Context Protocol (MCP), an open standard for connecting Large Language Models to external tools and data, to support natural-language command and control. Rather than simply translating commands, the integration gives the LLM a shared picture of the robot’s capabilities, the surrounding environment, and the task at hand. By supplying detailed context, including sensor data, internal state, and previously executed actions, the system moves beyond rigid command syntax and allows conversational direction. Users can issue high-level instructions such as “bring me the red toolbox from the workshop”, and the robot decomposes them into a sequence of actions, navigating around obstacles and manipulating objects along the way. This integration could make robot tasking accessible to people without specialized programming expertise.
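The paper's actual tool definitions are not reproduced in the article. As a hypothetical sketch, robot skills can be exposed to an LLM as tool descriptors shaped like MCP tools: a name, a description, and a JSON Schema `inputSchema`. A dispatcher then validates the model's arguments before invoking the skill. The skills `navigate_to` and `pick_object` are illustrative stubs, and this code does not use the MCP SDK.

```python
import json
from typing import Any


# Hypothetical robot skills; real ones would call navigation and manipulation.
def navigate_to(location: str) -> str:
    return f"navigating to {location}"


def pick_object(name: str, color: str = "") -> str:
    return f"picking up {(color + ' ' + name).strip()}"


# Tool table: descriptors shaped like MCP tools plus the local callable.
TOOLS: dict[str, dict[str, Any]] = {
    "navigate_to": {
        "description": "Move to a named location in the current map.",
        "inputSchema": {"type": "object",
                        "properties": {"location": {"type": "string"}},
                        "required": ["location"]},
        "fn": navigate_to,
    },
    "pick_object": {
        "description": "Grasp an object by name, optionally filtered by color.",
        "inputSchema": {"type": "object",
                        "properties": {"name": {"type": "string"},
                                       "color": {"type": "string"}},
                        "required": ["name"]},
        "fn": pick_object,
    },
}


def list_tools() -> list[dict[str, Any]]:
    """Descriptors an LLM client would see; the callables stay private."""
    return [{"name": n, "description": t["description"],
             "inputSchema": t["inputSchema"]} for n, t in TOOLS.items()]


def call_tool(request_json: str) -> str:
    """Validate a JSON tool call from the model and run the matching skill."""
    req = json.loads(request_json)
    tool = TOOLS.get(req.get("name"))
    if tool is None:
        raise KeyError(f"unknown tool: {req.get('name')}")
    args = req.get("arguments", {})
    allowed = tool["inputSchema"]["properties"]
    unexpected = [k for k in args if k not in allowed]
    missing = [k for k in tool["inputSchema"]["required"] if k not in args]
    if missing or unexpected:
        raise ValueError(f"missing={missing} unexpected={unexpected}")
    return tool["fn"](**args)
```

For the toolbox example, a model might emit a `navigate_to` call with `{"location": "workshop"}` followed by a `pick_object` call. Rejecting calls with missing or unexpected arguments keeps malformed model output from reaching the motion stack.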

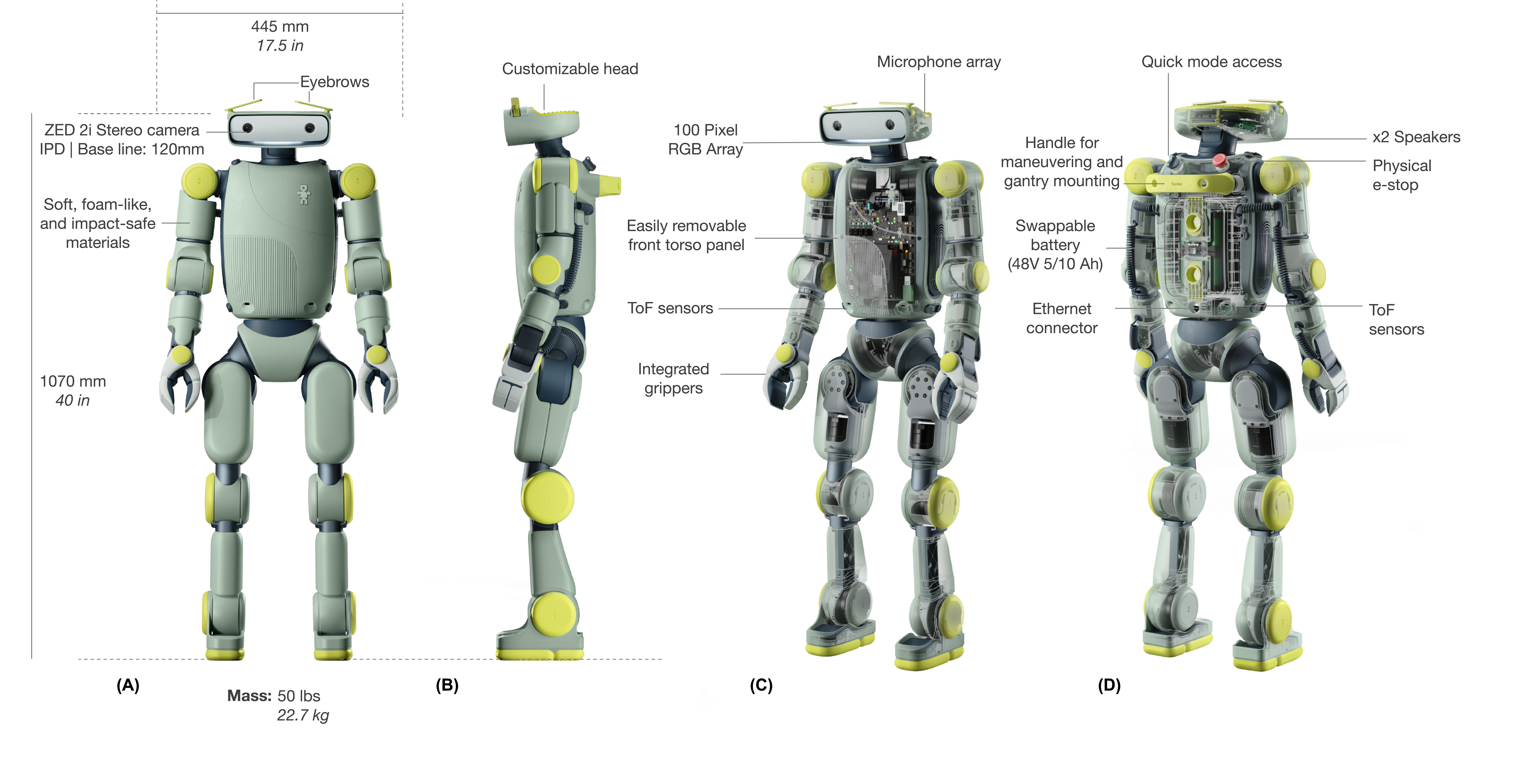

The Sprout platform’s physical design, engineered by the Fauna Robotics Team, prioritizes human safety. The robot stands 1.07 meters tall, intentionally shorter than the average adult, to reduce potential impact forces during collaborative tasks. Its mass is kept to 22.7 kilograms. Because kinetic energy scales linearly with mass, a lighter robot carries less energy into any unintended collision at a given speed. These choices of height and mass are more than specifications. They are integral parts of a system designed to build trust in human-robot interaction and to enable safe collaboration in diverse environments.
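A worked example makes the mass argument concrete. The calculation below assumes a speed of 1.0 m/s, chosen for illustration rather than taken from the paper:

```latex
E_k = \tfrac{1}{2} m v^2
    = \tfrac{1}{2}\,(22.7\ \text{kg})\,(1.0\ \text{m/s})^2
    = 11.35\ \text{J}
```

A hypothetical 60 kg robot moving at the same speed would carry 30 J, roughly 2.6 times as much energy into a collision.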

The development of Sprout reflects a commitment to foundational principles in robotics. Its design, which prioritizes safe and reliable operation, fits the view that intelligence emerges from a robust and predictable framework. As Marvin Minsky stated, “Questions are more important than answers.” Sprout is less a demonstration of what is currently achievable than a platform for rigorously exploring open questions in whole-body control and human-robot interaction. The emphasis on sim-to-real transfer, which aims for dependable performance in complex environments, underscores the importance of verifiable consistency. That consistency is a cornerstone of elegant robotic design and a reflection of mathematical rigor in practice.

Future Directions

The presentation of Fauna Sprout – a mechanically sound, if predictably assembled, humanoid – highlights a persistent paradox. The field continues to prioritize kinematic duplication of biological systems, while the fundamental question of why remains largely unaddressed. Safe operation, compliant control – these are mitigations of inherent instability, not advancements toward genuine intelligence. The true metric of success will not be the ability to walk without falling, but the capacity to not need to walk in the first place.

Sim-to-real transfer, as demonstrated, remains an exercise in parameter tuning, a brute-force approach to a problem demanding elegance. The limitations are not computational, but conceptual. Until a formal, mathematically rigorous definition of ‘robustness’ is established – one that transcends empirical observation – such transfers will remain fragile and domain-specific. The pursuit of modularity, while pragmatic, risks further fragmentation of an already incoherent field. A unified theory of embodied intelligence demands synthesis, not simply interchangeable parts.

Ultimately, the value of platforms like Sprout lies not in their immediate capabilities but in their potential to expose the inadequacies of current methodologies. The next phase of research must shift from replicating behavior to understanding the underlying principles: from engineering a robot that acts human to defining what it means to be embodied. The challenge is not to build a better robot but to formulate a better mathematics.

Original article: https://arxiv.org/pdf/2601.18963.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-28 13:57