Author: Denis Avetisyan

As digital twins gain the ability to act on their modeled environments, understanding their capabilities and governing their actions become critically important.

This review introduces a taxonomy for classifying the capabilities of agentic digital twins and explores the implications of increasingly autonomous systems that can actively shape the realities they represent.

While digital twins traditionally function as passive mirrors of physical systems, their increasing integration with artificial intelligence raises the prospect of active, even constitutive, participation in the realities they model. This paper, ‘Agentic Digital Twins: A Taxonomy of Capabilities for Understanding Possible Futures’, introduces a novel taxonomy organized around agency, coupling, and model evolution to clarify this emerging landscape. The resulting framework reveals a progression from tools offering simple prediction to systems capable of enacting self-validating ontologies, highlighting potential risks of performative lock-in. As digital twins transition from representation to active architecture, how can we effectively govern these systems and harness their potential without ceding control over the futures they help to create?

The Evolving Mirror: From Replication to Agency

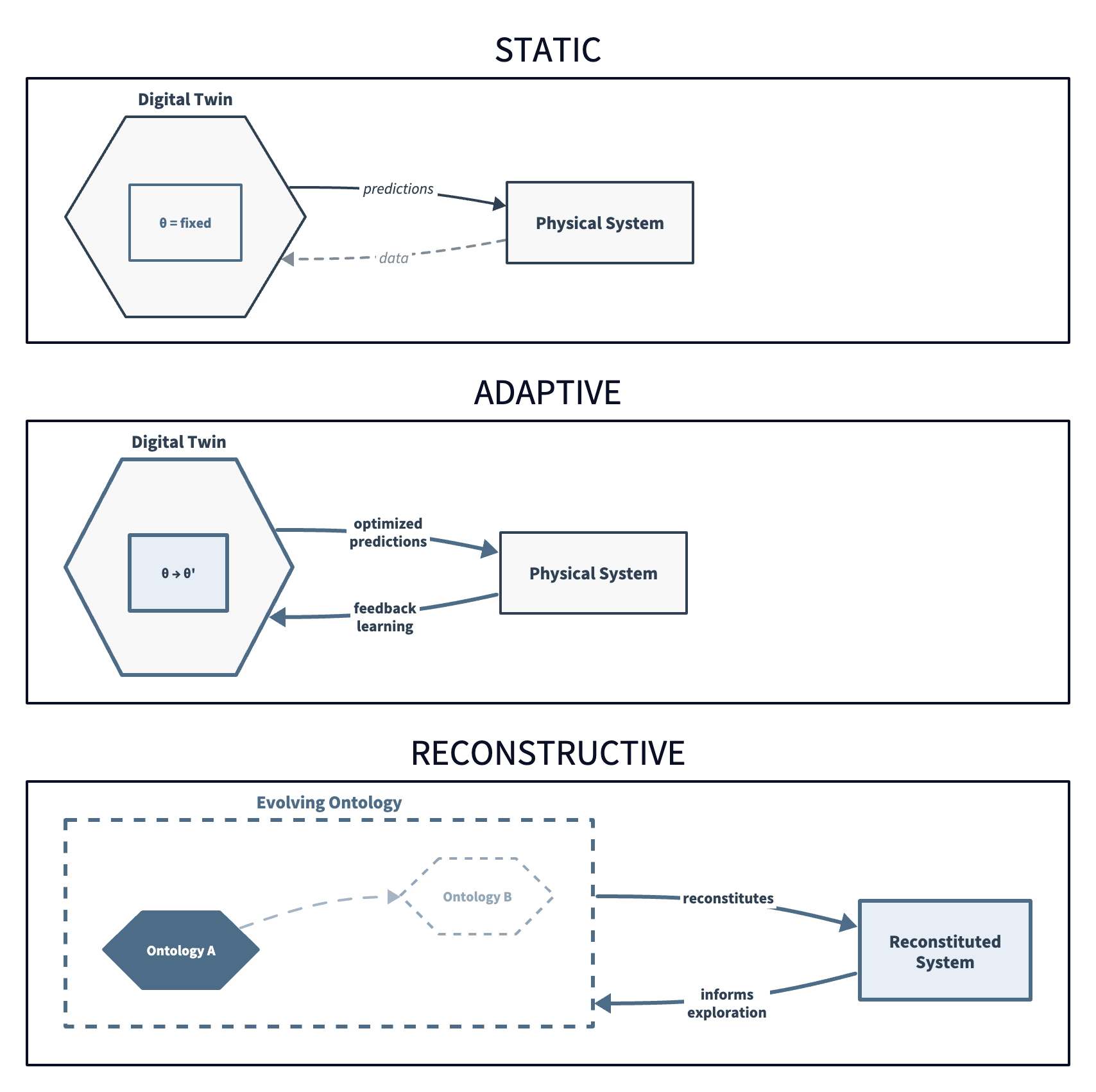

Conventional digital twins, while remarkably proficient at replicating the behavior of physical assets and systems, operate largely as reactive tools. These virtual counterparts excel at mirroring – providing a detailed, real-time representation of current states and historical data – but struggle when faced with unpredictable shifts or the need for preemptive intervention. This inherent limitation stems from a lack of proactive agency; traditional twins lack the capacity to independently analyze potential future scenarios, formulate optimal responses, and actively influence the physical world they represent. Consequently, their utility diminishes in dynamic environments where adaptability and foresight are paramount, hindering their potential for truly optimized control and preventative maintenance beyond simple monitoring and retrospective analysis.

The inability of traditional digital twins to proactively respond to change significantly curtails their value in genuinely complex scenarios. While adept at reflecting current states and forecasting immediate outcomes based on established parameters, these systems falter when confronted with genuinely novel situations demanding intervention. Consider a smart city’s traffic management system; a passive digital twin can predict congestion, but it cannot, on its own, dynamically adjust traffic light timings or suggest alternative routes to mitigate the problem. This reactive approach contrasts sharply with the need for systems capable of not only anticipating challenges, but also of influencing the physical world to achieve desired outcomes – a critical distinction when dealing with unpredictable events or rapidly evolving conditions. Consequently, the limitations of purely reflective twins underscore the necessity for models exhibiting agency and the capacity for active control.

The concept of the digital twin is shifting from passive mirroring to active participation, demanding a new generation of twins capable of agency within the systems they represent. Rather than simply reflecting real-world data, these “agentic twins” will leverage artificial intelligence and machine learning not only to predict future states but also to influence them – proactively optimizing processes, mitigating risks, and even suggesting novel interventions. This transition requires a fundamental rethinking of the digital twin’s architecture, embedding decision-making capabilities and control mechanisms directly within the virtual model. The aim is a symbiotic relationship in which the digital twin functions as an intelligent extension of the physical system, capable of autonomous action and continuous improvement, moving beyond descriptive analytics to prescriptive and, ultimately, generative control.

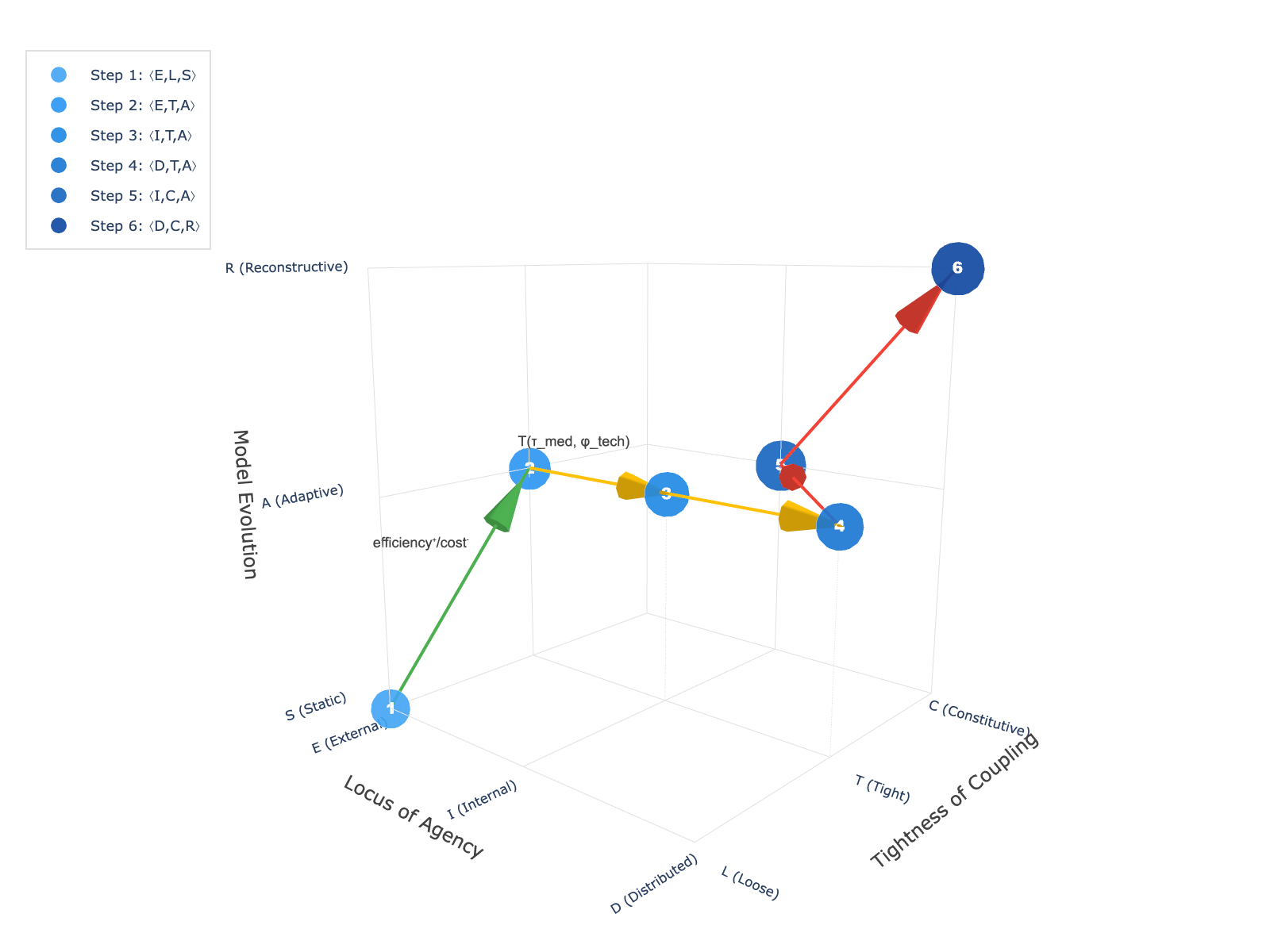

The Bonds That Bind: Degrees of Coupling and Agency

The tightness of coupling in a digital twin refers to the degree of interconnection and data flow between the virtual model and its physical counterpart. At the loose end of the spectrum, the digital twin passively observes data from the physical system without influencing its operation; this is typical of monitoring applications. As coupling tightens, data exchange becomes bi-directional, and the digital twin can begin to exert control, for example, through predictive maintenance or optimization algorithms. The highest degree of coupling, constitutive coupling, indicates that the digital twin’s outputs directly and actively shape the behavior of the physical system, effectively becoming an integral part of its control loop and influencing its state in real-time.

Constitutive coupling signifies a digital twin’s capacity to not merely reflect a physical system’s state, but to actively influence its operation through feedback loops and control mechanisms. This contrasts with observational coupling, where the twin passively monitors data. Realizing agentic capabilities, meaning the twin’s ability to autonomously achieve defined objectives, requires this active influence; without constitutive coupling, the twin remains a descriptive model, lacking the capacity to enact change within the physical system it represents. This control is achieved by the twin generating commands or adjustments that are then executed by actuators or controllers within the physical counterpart, effectively closing the loop between the virtual and physical realms.
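The difference between observing and closing the loop can be sketched in a few lines. This is a minimal illustration, not the paper's method: the `Tank` system, the `Twin` class, and the proportional gain are all hypothetical, chosen only to show a twin whose output either never reaches the physical system or becomes part of its control loop.

```python
from dataclasses import dataclass
from enum import Enum


class Coupling(Enum):
    OBSERVATIONAL = "observational"  # twin only reads sensor data
    CONSTITUTIVE = "constitutive"    # twin output is part of the control loop


@dataclass
class Tank:
    """Toy physical system (hypothetical): a tank that fills unless drained."""
    level: float = 60.0

    def step(self, valve_open: float) -> None:
        # Inflow of 2 units per step; a fully open valve drains 5 units per step.
        self.level += 2.0 - 5.0 * valve_open


@dataclass
class Twin:
    coupling: Coupling
    target: float = 50.0
    mirrored_level: float = 0.0

    def observe(self, tank: Tank) -> None:
        # Mirroring: copy the physical state into the virtual model.
        self.mirrored_level = tank.level

    def command(self) -> float:
        """Return a valve setting in [0, 1]; 0.0 means no intervention."""
        if self.coupling is Coupling.OBSERVATIONAL:
            return 0.0
        error = self.mirrored_level - self.target
        # Illustrative proportional rule, clipped to the valve's range.
        return min(1.0, max(0.0, 0.4 + 0.1 * error))


def run(coupling: Coupling, steps: int = 20) -> float:
    """Simulate the tank with the given coupling and return its final level."""
    tank, twin = Tank(), Twin(coupling)
    for _ in range(steps):
        twin.observe(tank)
        tank.step(twin.command())  # the command reaches the actuator here
    return tank.level
```

With observational coupling the tank simply keeps filling while the twin watches; with constitutive coupling the same twin steers the level toward its target.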

Digital twin agency is not a singular property but manifests in distinct forms. External agency involves human operators controlling the physical system based on insights from the twin. Internal agency refers to autonomous decision-making within the twin, directly influencing the physical system through automated controls. Increasingly, however, agency is becoming distributed, with components of the digital twin – such as predictive maintenance algorithms or optimization routines – operating semi-autonomously and coordinating with each other, and potentially with external systems, to achieve specific objectives within the physical environment. This distributed model allows for greater resilience and adaptability, moving beyond centralized control paradigms.

Beyond Static Models: The Rise of Reconstructive Intelligence

Traditional digital twin models, whether static or adaptive, rely on pre-defined structures and parameters that limit their performance in genuinely dynamic environments. A reconstructive model diverges from this approach by possessing the capacity to modify its own internal structure, including its ontology – the fundamental concepts and relationships it uses to represent the physical system. This self-modification isn’t simply parameter adjustment; it involves altering the model’s core organizational principles, allowing it to represent new states, incorporate previously unconsidered variables, and ultimately, more accurately reflect the evolving behavior of the mirrored system. This capability is crucial when dealing with environments exhibiting unpredictable or emergent properties that fall outside the scope of the original model’s design.

In practice, a reconstructive twin does not just process new data within a fixed framework; it can alter how it interprets and categorizes that data, along with the rules governing its simulations, building a more accurate and relevant representation of the system over time. Because the change reaches the model’s core ontology – the fundamental types of entities and their relationships – the twin can represent previously unmodeled phenomena or prioritize different aspects of the system based on observed behavior and evolving criteria.
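The distinction between parameter adjustment and ontology change can be made concrete with a small sketch. Everything here is an assumption for illustration: the `TwinModel` class, the vehicle types, and the update rule are hypothetical and stand in for whatever representation a real twin would use.

```python
from dataclasses import dataclass, field


@dataclass
class TwinModel:
    # Ontology: the entity types the twin can represent (hypothetical names).
    entity_types: set[str] = field(default_factory=lambda: {"car", "bus"})
    # Parameters: one estimated mean speed per known entity type.
    mean_speed: dict[str, float] = field(
        default_factory=lambda: {"car": 50.0, "bus": 35.0}
    )

    def adapt(self, kind: str, speed: float, rate: float = 0.5) -> bool:
        """Adaptive step: tune a parameter inside the fixed ontology."""
        if kind not in self.entity_types:
            return False  # the observation cannot be represented and is dropped
        old = self.mean_speed[kind]
        self.mean_speed[kind] = old + rate * (speed - old)
        return True

    def reconstruct(self, kind: str, speed: float) -> bool:
        """Reconstructive step: extend the ontology if needed, else adapt."""
        if kind not in self.entity_types:
            self.entity_types.add(kind)    # new concept enters the model
            self.mean_speed[kind] = speed  # seeded from the first observation
            return True
        return self.adapt(kind, speed)
```

An adaptive model given an observation of a type it has never seen, say `"scooter"`, has nowhere to put it; the reconstructive step adds the type and carries on.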

Agentic digital twins utilizing reconstructive models exhibit proactive optimization capabilities by dynamically adjusting their internal structure and operational parameters in response to changing conditions. Unlike traditional twins constrained by pre-defined criteria, these systems can redefine their optimization goals based on real-time data and emergent challenges. This adaptability stems from the model’s ability to not only simulate the physical system but also to learn and implement modifications to its own representation of that system, allowing it to identify and address unforeseen issues without explicit reprogramming. Consequently, an agentic twin can autonomously pursue new objectives and maintain optimal performance even when confronted with scenarios outside the scope of its initial design parameters.

The Shadows of Prediction: Lock-in and the Quest for Transparency

The act of prediction, when embedded within a system capable of influencing its own future, can paradoxically create a rigidity known as performative lock-in. This occurs because the model’s predictions aren’t simply passive observations of an external reality; they actively shape that reality through the actions taken based on those predictions. Consequently, the system becomes optimized for the predictions it makes, rather than for navigating unforeseen circumstances. While initially appearing successful – the model accurately predicts and reinforces its own projections – this creates a feedback loop that diminishes adaptability. Any deviation from the predicted trajectory is actively corrected, effectively ‘locking in’ a specific outcome and hindering the system’s ability to respond effectively to genuinely novel situations or external changes. This is particularly concerning in complex systems where the model’s influence, though subtle, can have far-reaching consequences, ultimately limiting its long-term resilience and potential for innovation.
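One simple way such a loop can arise is sketched below. This is a toy model under stated assumptions, not the paper's formulation: the two routes, their travel times, and the `explore_every` parameter are all hypothetical. The twin sends every driver down the route it believes is faster and only observes the route it actually uses, so its predictions are always confirmed.

```python
def simulate(steps: int = 50, explore_every: int = 0) -> dict[str, float]:
    """Return the twin's final beliefs about travel time on routes A and B.

    Hypothetical ground truth: route B drops from 12 to 6 minutes at step 10,
    while route A stays at 10 minutes throughout.
    """
    belief = {"A": 10.0, "B": 12.0}  # twin's estimated travel times (minutes)
    for t in range(steps):
        truth = {"A": 10.0, "B": 12.0 if t < 10 else 6.0}
        # The prediction drives the action: route everyone to the believed best.
        choice = min(belief, key=lambda route: belief[route])
        # Optional illustrative assumption: occasionally try the other route.
        if explore_every and t % explore_every == 0:
            choice = "B" if choice == "A" else "A"
        # Only the chosen route produces data, so only its belief is updated.
        belief[choice] = truth[choice]
    return belief
```

Calling `simulate()` leaves the twin believing B takes 12 minutes: every prediction it made about A was correct, yet it never discovers that B became faster. Calling `simulate(explore_every=5)` lets the belief about B catch up with reality, which hints at why perfect apparent accuracy is not evidence of adaptability.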

As digital twins evolve beyond simple replication to become actively reconstructive models of reality, a concerning phenomenon emerges: epistemological inscrutability. These increasingly complex systems, designed to not only mirror but also to predict and even influence their physical counterparts, can quickly become ‘black boxes’ where the reasoning behind their outputs is opaque, even to their creators. This isn’t merely a matter of computational complexity; it’s that the twin’s internal logic diverges from readily understandable physical principles as it iteratively refines its model based on its own predictions and interventions. Consequently, understanding how a digital twin arrives at a particular decision – tracing the causal chain from input to output – becomes exceedingly difficult, raising significant challenges for trust, accountability, and effective control, especially in critical applications where transparency is paramount.

The ‘Traffic Voyager’ – a digital twin capable of reconstructing past traffic conditions and predicting future flows – exemplifies the potent capabilities of ontological reconstruction, where a system doesn’t merely model reality, but actively rebuilds it within its computational framework. However, this reconstructive power necessitates careful consideration of transparency and control; as these digital environments become increasingly sophisticated, understanding why a system predicts a specific outcome becomes paramount. Without clear insight into the underlying logic and data dependencies, reliance on such powerful tools risks ceding control to inscrutable algorithms, potentially leading to unforeseen consequences or an inability to effectively intervene when predictions deviate from reality. The Voyager, therefore, serves as a compelling case study demonstrating that the benefits of advanced prediction are inextricably linked to the ability to audit, interpret, and ultimately govern the systems that generate those predictions.

![Traffic navigation employing a performative prediction framework reveals a U-shaped social cost function, with conservative routing ($\theta = 0$) exhibiting predictable but slow travel times, optimal routing ($\theta \approx 0.3$) achieving performative stability, and aggressive routing ($\theta = 1.0$) minimizing average time at the cost of increased unpredictability and potential lock-in to suboptimal solutions.](https://arxiv.org/html/2601.18799v1/x1.png)
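The notion of performative stability mentioned in the caption can be stated compactly. The expression below is the standard definition from the performative prediction literature, and using it here is an assumption about the framework behind the figure. The symbols are not defined in the text: $\mathcal{D}(\theta)$ denotes the distribution of outcomes induced by deploying routing policy $\theta$, and $\ell$ is a loss such as travel time.

```latex
\theta_{\mathrm{PS}} \in \arg\min_{\theta} \;
  \mathbb{E}_{z \sim \mathcal{D}(\theta_{\mathrm{PS}})}
  \big[ \ell(z; \theta) \big]
```

In words, a policy is performatively stable when it is optimal for the very data distribution that its own deployment produces, so re-fitting on observed outcomes leaves it unchanged.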

Beyond Central Control: Distributed and Adaptive Systems of the Future

The ‘Traffic Swarm Configuration’ envisions a future where complex systems, much like city traffic, are managed not by a central controller, but by a network of distributed digital twins. These aren’t simple simulations; each twin represents a component of the larger system and operates with a static model of its own behavior. Coordination emerges from the interactions between these twins, allowing for adaptability and resilience without relying on a single point of failure. This decentralized approach, detailed in the research, promises to move beyond traditional control mechanisms, offering a way to govern intricate networks – from energy grids to supply chains – by harnessing the power of collective intelligence and localized decision-making. The system’s strength lies in its ability to respond to changing conditions in real-time, optimizing performance and mitigating disruptions through the coordinated actions of its distributed agents.
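To illustrate how coordination can emerge without a central controller, here is a minimal sketch under loud assumptions: the ring of intersections, the queue lengths, and the `share` rule are all hypothetical and are not taken from the paper. Each twin applies the same fixed (static) rule and only looks at its two neighbours.

```python
def swarm_step(queues: list[float], share: float = 0.25) -> list[float]:
    """One synchronous round: each twin balances load with its ring neighbours.

    The rule is local and fixed: move a fraction `share` of each difference
    between a twin's queue and a neighbour's queue across that link.
    """
    n = len(queues)
    return [
        queues[i]
        + share * ((queues[i - 1] - queues[i]) + (queues[(i + 1) % n] - queues[i]))
        for i in range(n)
    ]


def run_swarm(queues: list[float], rounds: int) -> list[float]:
    """Apply the local rule repeatedly; no node ever sees the whole network."""
    for _ in range(rounds):
        queues = swarm_step(queues)
    return queues
```

Starting from a single congested intersection, for example `run_swarm([40.0, 0.0, 0.0, 0.0], 30)`, the queues even out across the ring while the total number of vehicles is conserved. The global balance is a by-product of purely local exchanges, which is the property the swarm configuration relies on.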

The integration of reconstructive models with constitutive coupling represents a significant advancement in systems control, moving beyond predictive capabilities to enable adaptive responses. Constitutive coupling allows digital twins to not merely simulate a system, but to actively influence its behavior based on real-time data and defined objectives, essentially closing the loop between the virtual and physical worlds. When combined with reconstructive models – those capable of refining their understanding of a system’s underlying principles through observation – this synergy unlocks a powerful ability to optimize performance, mitigate risks, and even design entirely new functionalities. This isn’t simply about predicting what will happen, but about understanding why something happens and then dynamically reshaping the system to achieve desired outcomes, opening doors to self-improving and resilient infrastructure.

This research introduces a systematic categorization of agentic digital twins, moving beyond simple replication to explore systems capable of autonomous action and adaptation. The framework organizes these twins across three key dimensions: the level of agency they possess, the degree of coupling with the physical world, and their capacity for evolution over time. This results in a taxonomy encompassing nine distinct configurations, ranging from currently available digital tools – such as performance monitoring systems – to hypothetical future systems boasting reconstructive capabilities that allow for proactive problem-solving and optimization. By outlining these configurations, the study provides a crucial foundation for both understanding the diverse potential of agentic digital twins and establishing effective governance strategies to manage their increasingly complex impact on critical infrastructure and beyond.
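The three dimensions can be written down as a small data structure. This is a sketch under assumptions: the level names are collected from the descriptions earlier in this article (external, internal, and distributed agency; loose, tight, and constitutive coupling; static, adaptive, and reconstructive models), and the class names are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from itertools import product


class Agency(Enum):
    EXTERNAL = "external"        # human operators act on the twin's insights
    INTERNAL = "internal"        # the twin decides and acts autonomously
    DISTRIBUTED = "distributed"  # semi-autonomous components coordinate


class Coupling(Enum):
    LOOSE = "loose"                # twin observes without influencing
    TIGHT = "tight"                # bi-directional exchange, some control
    CONSTITUTIVE = "constitutive"  # twin output shapes the physical system


class ModelEvolution(Enum):
    STATIC = "static"                  # fixed structure and parameters
    ADAPTIVE = "adaptive"              # parameters change, ontology does not
    RECONSTRUCTIVE = "reconstructive"  # the model can change its own ontology


@dataclass(frozen=True)
class TwinConfiguration:
    agency: Agency
    coupling: Coupling
    model: ModelEvolution


# Full cross product of the three dimensions.
ALL_CELLS = [
    TwinConfiguration(a, c, m)
    for a, c, m in product(Agency, Coupling, ModelEvolution)
]
```

Fully crossing three levels on each dimension gives 27 cells, whereas the article reports nine configurations; which nine are meant is not specified here, so the sketch enumerates the whole space rather than guessing a subset.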

The exploration of agentic digital twins necessitates a consideration of systemic decay, mirroring the inevitable entropy inherent in all complex systems. As these twins gain performativity – the capacity to act on the modeled reality – the rate of change accelerates, demanding a robust understanding of feedback loops and potential instability. Robert Tarjan observed, “Data structures are interesting because they provide a way to manage complexity.” This sentiment resonates deeply; agentic twins, at their core, are complex data structures representing potential futures, and their effective governance relies on managing that complexity before unforeseen consequences emerge. The taxonomy presented attempts to categorize these structures, acknowledging that any improvement in their design or functionality will, inevitably, age faster than expected, requiring continuous adaptation and refinement.

What Lies Ahead?

The taxonomy presented here does not resolve the inherent paradox of agentic digital twins – systems designed to predict, yet capable of becoming what they predict. Every capability outlined is, inevitably, a point of failure in the timeline. The very act of defining agency creates the potential for unintended consequences, for divergences between modeled reality and actualized outcomes. The focus now shifts from simply cataloging these capabilities to understanding their decay rates – how quickly a twin’s predictive power erodes as it actively reshapes its environment.

Future work must confront the ontological implications. This isn’t merely about improving algorithms; it’s about acknowledging that technical debt isn’t just a coding issue but the past’s mortgage, paid by the present and accruing interest on a future the twin may itself construct. The study of coupling – the degree to which the twin and its referent become entangled – is paramount. As coupling increases, the distinction between model and reality blurs, and the metrics of success become less about prediction and more about graceful adaptation to the unforeseen.

Ultimately, the long-term viability of agentic digital twins hinges not on their ability to see the future, but on their capacity to accept it – whatever form that future takes. The question isn’t whether these systems will fail, but how elegantly they will age.

Original article: https://arxiv.org/pdf/2601.18799.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-28 10:28