Author: Denis Avetisyan

A new longitudinal study reveals how individuals with visual impairments progressively refine their collaborative strategies with a navigation robot, building trust and effectively sharing control.

Researchers tracked blind users’ interactions with a navigation-assistive robot over time, demonstrating the evolution of delegation strategies based on context and trust.

While robotic assistance promises greater independence for individuals with blindness, a key challenge lies in fostering effective, adaptive collaboration rather than simply automating navigation. That challenge is the focus of ‘How Does Delegation in Social Interaction Evolve Over Time? Navigation with a Robot for Blind People’, a repeated-exposure study showing that blind users progressively refine their strategies for shared control with a navigation-assistive robot, learning to delegate based on context and trust. Specifically, participants developed evolving preferences for when to rely on the robot and when to act independently during real-world museum navigation. How can these insights inform the design of assistive robots that learn and adapt to the nuanced needs of their users over time?

The Geometry of Independence: Navigating the Blind Traveler’s Challenge

Independent travel poses substantial challenges for individuals with blindness, and these extend far beyond detecting obstacles. Navigating complex environments (crowded sidewalks, busy intersections, or unfamiliar indoor spaces) demands constant assessment of dynamic elements such as moving pedestrians, shifting objects, and unexpected changes in layout. Beyond obstacle avoidance, it requires an understanding of spatial relationships, anticipatory movement planning, and the ability to adapt to unforeseen circumstances. Varying lighting conditions, inclement weather, and the sheer cognitive load of maintaining situational awareness without visual input amplify these challenges, frequently leading to increased anxiety, reduced confidence, and limited access to opportunities readily available to sighted individuals.

Conventional aids for blind navigation, such as the white cane and guide dogs, while invaluable, present inherent limitations in increasingly complex environments. These tools primarily offer localized obstacle detection, struggling to provide a comprehensive understanding of dynamic surroundings or anticipate future changes. While a cane signals immediate physical barriers, it doesn’t convey information about approaching traffic, crowded spaces, or temporary obstructions like construction. Similarly, guide dogs, though adept at navigating many scenarios, require ongoing training and can be susceptible to distractions. This lack of broad situational awareness necessitates constant, focused attention from the user, creating a cognitive load that hinders truly independent and fluid movement – a challenge that demands more sophisticated technological solutions capable of proactive perception and adaptable responses.

Truly effective support for independent mobility requires robotic systems with both robust perception and intelligent navigation. These systems must move beyond simple obstacle avoidance to a comprehensive understanding of their surroundings: identifying pathways, recognizing dynamic elements such as moving pedestrians or vehicles, and anticipating potential hazards. Intelligent navigation, in this context, requires algorithms for path planning, real-time adaptation to changing conditions, and integration with environmental data, such as information from smart city infrastructure or computer vision systems. The ultimate goal is a device that does not merely guide a user around obstacles but proactively navigates through complex environments, fostering genuine independence and confidence in everyday travel.

A significant impediment to achieving fully autonomous navigation for robotic assistance lies in what researchers term the ‘Freezing Robot Problem’. This phenomenon describes the tendency of robots, when confronted with complex or ambiguous environments – think crowded sidewalks, unpredictable pedestrian movement, or novel obstacles – to halt completely rather than attempt a potentially imperfect maneuver. Unlike human beings, who instinctively assess risk and proceed with cautious adaptation, many robotic systems lack the nuanced decision-making capacity to resolve uncertainty, leading to a standstill. This immobility isn’t a safety feature, but a limitation rooted in the algorithms governing perception and motion planning; the robot, overwhelmed by incomplete or conflicting data, defaults to inaction. Overcoming this hurdle necessitates advancements in robust perception, predictive modeling of dynamic environments, and the development of algorithms that embrace calculated risk, allowing robots to confidently navigate the inherent uncertainties of the real world.
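The freezing behavior described above can be reproduced with a toy planner. The sketch below is not the guide robot's planner, which the source does not describe; the candidate motions, risk values, and both thresholds are hypothetical. A planner that only accepts motions under a hard risk bound stops whenever a crowd pushes every option over that bound, while a variant that falls back to the least risky option at reduced speed keeps moving.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str
    collision_risk: float  # hypothetical estimated probability of collision, in [0, 1]

def strict_planner(candidates, max_risk=0.05):
    """Accept only motions under a hard risk bound; if none qualify, stop (freeze)."""
    safe = [c for c in candidates if c.collision_risk <= max_risk]
    if not safe:
        return "stop"
    return min(safe, key=lambda c: c.collision_risk).name

def risk_tolerant_planner(candidates, max_risk=0.05, fallback_limit=0.3):
    """Prefer a safe motion; otherwise creep along the least risky one,
    provided its risk stays under a looser fallback limit."""
    best = min(candidates, key=lambda c: c.collision_risk)
    if best.collision_risk <= max_risk:
        return best.name
    if best.collision_risk <= fallback_limit:
        return best.name + " (slow)"
    return "stop"

# A crowded corridor in which every option carries some risk.
crowd = [Candidate("left", 0.12), Candidate("straight", 0.20), Candidate("right", 0.40)]
print(strict_planner(crowd))         # stop
print(risk_tolerant_planner(crowd))  # left (slow)
```

The second planner still refuses to move when even the best option is too risky; it only replaces "stop on any uncertainty" with "stop on high uncertainty".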

![The guide robot, a suitcase-shaped platform with multimodal sensing (RGB-D cameras, [latex]360^\circ[/latex] LiDAR, and bottom LiDAR) and motorized wheels, supports user interaction through a handle interface, a smartphone, and an external speaker.](https://arxiv.org/html/2601.19851v1/images/Robot_TopDown.png)

Shared Control: A Symphony of Autonomy and Intent

The autonomous robot utilizes a shared control paradigm, integrating user input with onboard autonomous functions to achieve safer and more efficient navigation. This approach deviates from fully autonomous or remotely operated systems by establishing a collaborative relationship between the user and the robot. The user provides high-level directional intent and overall goals, while the robot independently manages low-level actions such as trajectory planning and obstacle avoidance. This division of labor aims to leverage the strengths of both the user – providing contextual awareness and strategic decision-making – and the robot – executing precise movements and rapidly processing sensor data. The system is designed to continuously assess the environment and dynamically adjust the level of autonomy based on perceived risk and user feedback, ensuring a balance between automation and human oversight.

The shared control paradigm builds upon the principle of delegation by distributing navigational responsibilities between the robot and the user. This is not a simple handover of control, but rather a dynamic allocation of tasks; the robot autonomously manages specific, pre-defined aspects of navigation – such as maintaining speed or executing known maneuvers – while the user retains ultimate oversight and the ability to intervene or redirect the robot as needed. This division of labor aims to improve efficiency by offloading routine functions to the robot, and to enhance safety by ensuring a human operator can always override autonomous actions when unforeseen circumstances arise. The level of delegated authority can be adjusted based on environmental complexity, user preference, or the robot’s confidence in its perception and planning abilities.
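One common way to implement this kind of adjustable delegation is linear policy blending, where a weight alpha decides how much of the final command comes from the robot. The sketch below assumes that technique, a (linear, angular) velocity command format, and a hypothetical `robot_confidence` signal; the source does not state how the guide robot arbitrates between user and robot.

```python
def blend_command(user_cmd, robot_cmd, robot_confidence, user_override=False):
    """Linear policy blending of two (linear m/s, angular rad/s) velocity commands.

    robot_confidence is a hypothetical signal in [0, 1]; it becomes the weight
    alpha on the robot's command, so 0 means pure user control and 1 means
    full delegation. user_override passes the user's command through unchanged.
    """
    if user_override:
        return tuple(user_cmd)
    alpha = min(max(robot_confidence, 0.0), 1.0)  # clamp to [0, 1]
    return tuple(alpha * r + (1.0 - alpha) * u for u, r in zip(user_cmd, robot_cmd))

# User pushes straight ahead; the robot proposes slowing and turning.
print(blend_command((1.0, 0.0), (0.5, 0.5), robot_confidence=0.75))  # (0.625, 0.375)
print(blend_command((1.0, 0.0), (0.5, 0.5), 0.75, user_override=True))  # (1.0, 0.0)
```

Raising `robot_confidence` toward 1 hands more authority to the robot, while the override flag preserves the user's ability to take over at any time.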

The robot’s performance relies on a multi-sensor Obstacle Detection System which provides a comprehensive environmental understanding. This system integrates data from LiDAR, ultrasonic sensors, and visual cameras to create a 360-degree map of the surrounding area. LiDAR provides long-range, high-resolution distance measurements, while ultrasonic sensors offer close-proximity detection, particularly for smaller or less reflective objects. The visual cameras, coupled with image processing algorithms, enable object recognition and classification, allowing the robot to differentiate between static obstacles, dynamic objects, and navigable space. Data fusion techniques are employed to combine the information from these disparate sensors, reducing uncertainty and improving the accuracy of the environmental model. This model is continuously updated, enabling the robot to adapt to changing conditions and navigate safely through complex environments.
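As an illustration of the data-fusion step, the sketch below combines range estimates from several sensors by inverse-variance weighting, the minimum-variance linear combination when sensor errors are unbiased and independent. The sensor variances are made-up values, and the source does not say which fusion method the robot uses; real systems typically fuse occupancy grids or object tracks rather than single distances.

```python
def fuse_ranges(readings):
    """Inverse-variance weighted fusion of independent range estimates.

    readings: list of (distance_m, variance_m2) pairs, one per sensor.
    Returns (fused_distance_m, fused_variance_m2). Assumes each sensor's
    error is unbiased and independent of the others.
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / var for _, var in readings]
    total = sum(weights)
    fused = sum(w * d for w, (d, _) in zip(weights, readings)) / total
    return fused, 1.0 / total

# Hypothetical values: a precise LiDAR return and a noisier ultrasonic echo.
distance, variance = fuse_ranges([(2.0, 0.01), (2.2, 0.04)])
print(round(distance, 3), round(variance, 4))
```

The fused variance is smaller than either sensor's own variance, which is one precise sense in which fusion "reduces uncertainty".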

Robot Voice Output functions as a critical communication channel, conveying real-time data regarding the robot’s state, perceived environment, and intended actions to the user. This output is not limited to simple status reports; it includes notifications of detected obstacles, explanations of path planning decisions, and confirmations of received commands. The system utilizes synthesized speech to deliver concise, unambiguous messages, prioritizing clarity and minimizing cognitive load for the operator. By verbalizing its internal reasoning and actions, the robot fosters user understanding and builds confidence in the shared control paradigm, which is essential for effective human-robot collaboration and safe navigation in dynamic environments.
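The source does not describe how spoken messages are scheduled, but one plausible way to keep speech concise when several events happen at once is a priority queue, so that hazards are announced before route explanations and routine status. The three priority levels and the `SpeechQueue` class below are hypothetical.

```python
import heapq
import itertools

# Hypothetical priority levels: lower numbers are spoken first.
PRIORITY = {"hazard": 0, "decision": 1, "status": 2}

class SpeechQueue:
    """Orders pending voice messages by urgency, FIFO within the same level."""

    def __init__(self):
        self._heap = []
        self._order = itertools.count()  # tie-breaker so equal priorities keep arrival order

    def push(self, kind, text):
        heapq.heappush(self._heap, (PRIORITY[kind], next(self._order), text))

    def pop(self):
        """Return the next message to speak, or None if nothing is pending."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]

q = SpeechQueue()
q.push("status", "Walking straight.")
q.push("hazard", "Person approaching ahead.")
q.push("decision", "Going around the bench on the left.")
print(q.pop())  # Person approaching ahead.
print(q.pop())  # Going around the bench on the left.
```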

From Sensor Data to Semantic Understanding: The Language of Perception

The system enhances its situational awareness through the generation of descriptive summaries based on raw sensor data. Utilizing a Generative Pre-trained Transformer (GPT) model, the system processes input from sensors – including LiDAR, cameras, and depth sensors – and converts this data into natural language descriptions of the surrounding environment. These descriptions are not simply data readouts; they provide contextual information, identifying objects, estimating distances, and characterizing spatial relationships. This conversion from raw data to semantic summaries enables the system to represent its perception of the environment in a human-readable format, facilitating both internal reasoning and external communication of its perceived situation.
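The exact prompt and model interface are not given in the source. A minimal sketch, assuming the perception stack already outputs labeled detections with distance and bearing, is to serialize the nearest objects into a short structured prompt that a language model could then turn into a spoken description. The `Detection` type, the bearing convention, and the 15-degree "ahead" cone are illustrative assumptions, and the model call itself is omitted.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    label: str
    distance_m: float
    bearing_deg: float  # 0 = straight ahead, positive = to the left (assumed convention)

def direction(bearing_deg, ahead_cone_deg=15.0):
    """Map a bearing to a coarse spoken direction."""
    if abs(bearing_deg) <= ahead_cone_deg:
        return "ahead"
    return "to the left" if bearing_deg > 0 else "to the right"

def build_scene_prompt(detections, max_items=5):
    """Serialize the nearest detections into a prompt for a language model."""
    nearest = sorted(detections, key=lambda d: d.distance_m)[:max_items]
    lines = [f"- {d.label}, {d.distance_m:.1f} m {direction(d.bearing_deg)}" for d in nearest]
    return ("Describe the surroundings in one or two short sentences for a blind traveler.\n"
            "Detected objects (nearest first):\n" + "\n".join(lines))

scene = [Detection("bench", 3.2, -40.0), Detection("person", 1.5, 5.0),
         Detection("doorway", 6.0, 30.0)]
print(build_scene_prompt(scene))
```

Sorting by distance and capping the list keeps the prompt short, so the generated description focuses on what is closest to the traveler.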

The robot’s environmental awareness is directly enhanced by GPT-generated descriptions of sensor data, facilitating proactive obstacle avoidance. These descriptions transform raw input – such as lidar point clouds or camera images – into semantic summaries of the surrounding environment, identifying object types and spatial relationships. This allows the robot’s path planning algorithms to not simply react to immediate sensor readings, but to anticipate potential collisions based on a higher-level understanding of the environment. Consequently, the system can dynamically adjust its trajectory to circumvent obstacles before they pose an immediate threat, improving navigational safety and efficiency in complex environments.
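One way semantic labels can make avoidance more anticipatory is by giving each object class its own clearance, for example leaving more room around people, who may move, than around walls. The sketch below checks a list of waypoints against class-dependent margins; the class names and margin values are hypothetical, and checking waypoints alone (rather than the segments between them) is a simplification.

```python
import math

# Hypothetical per-class clearances in meters; unknown classes get the default.
CLEARANCE_M = {"person": 0.8, "wall": 0.3, "exhibit": 0.5}
DEFAULT_CLEARANCE_M = 0.5

def path_is_clear(path, obstacles):
    """path: list of (x, y) waypoints in meters.
    obstacles: list of (label, x, y) semantic detections.
    Returns False if any waypoint comes closer to an obstacle than its class clearance."""
    for px, py in path:
        for label, ox, oy in obstacles:
            margin = CLEARANCE_M.get(label, DEFAULT_CLEARANCE_M)
            if math.hypot(px - ox, py - oy) < margin:
                return False
    return True

path = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
print(path_is_clear(path, [("wall", 1.0, 0.6)]))    # True: 0.6 m is enough room for a wall
print(path_is_clear(path, [("person", 1.0, 0.6)]))  # False: too close to a person
```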

Effective navigation of dynamic crowds is a critical capability for robotic systems intended for real-world deployment in populated environments. This requires continuous sensor data processing to identify and track multiple moving agents, predict their trajectories, and adjust the robot’s path accordingly. Unlike static obstacle avoidance, navigating crowds demands probabilistic reasoning about future positions and velocities, necessitating algorithms capable of handling uncertainty and incomplete information. The system must account for non-linear and unpredictable human movement patterns, including sudden stops, changes in direction, and varying speeds, to ensure safe and efficient traversal without collisions or disruptions to pedestrian flow. Furthermore, the robot’s adaptation must occur in real-time, responding to changes in crowd density and configuration as they happen.
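A common baseline for this kind of prediction is the constant-velocity model: extrapolate each pedestrian's current velocity and find the point of closest approach to the robot within a short horizon. With relative position p and relative velocity v, the separation |p + vt| is smallest at t* = -(p·v)/|v|², clamped to the horizon. The sketch below is that baseline, not the robot's actual predictor, which the source does not specify; it deliberately ignores the sudden stops and turns mentioned above, which is where probabilistic or learned models improve on it. Positions are in meters, velocities in meters per second, and the 1 m safety distance and 5 s horizon are assumed values.

```python
import math

def closest_approach(robot_pos, robot_vel, ped_pos, ped_vel, horizon_s=5.0):
    """Time (s) and distance (m) of closest approach, assuming both keep constant velocity."""
    px, py = ped_pos[0] - robot_pos[0], ped_pos[1] - robot_pos[1]  # relative position
    vx, vy = ped_vel[0] - robot_vel[0], ped_vel[1] - robot_vel[1]  # relative velocity
    speed_sq = vx * vx + vy * vy
    t = 0.0 if speed_sq == 0 else -(px * vx + py * vy) / speed_sq
    t = min(max(t, 0.0), horizon_s)  # only consider the near future
    return t, math.hypot(px + vx * t, py + vy * t)

def conflicting_pedestrians(robot_pos, robot_vel, pedestrians, safe_dist=1.0, horizon_s=5.0):
    """pedestrians: list of (id, (x, y), (vx, vy)). Returns (id, t, d) for predicted conflicts."""
    conflicts = []
    for pid, pos, vel in pedestrians:
        t, d = closest_approach(robot_pos, robot_vel, pos, vel, horizon_s)
        if d < safe_dist:
            conflicts.append((pid, round(t, 2), round(d, 2)))
    return conflicts

# Robot walking along +x; A walks toward it, B walks away to the side.
peds = [("A", (4.0, 0.5), (-1.0, 0.0)), ("B", (0.0, 5.0), (0.0, 1.0))]
print(conflicting_pedestrians((0.0, 0.0), (1.0, 0.0), peds))  # [('A', 2.0, 0.5)]
```

A planner would re-run this check every sensor cycle, so that changes in speed or heading are picked up as soon as they are observed.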

A three-week observational study revealed a statistically significant change in participant behavior regarding reliance on robot-provided environmental descriptions. Initially, participants primarily used direct sensor data for situational assessment. However, over the study duration, participants progressively increased their utilization of the robot’s GPT-generated descriptions when making decisions about navigation and task completion. This shift indicates that users developed a greater understanding of the robot’s perception of its surroundings, demonstrating the descriptions’ effectiveness in conveying complex environmental information and supporting informed decision-making processes.

![Participant confidence in robot-assisted performance varied over three weeks: one participant (P5) showed substantial gains, another (P6) maintained consistently high confidence, and others, such as P1, showed modest improvements or slight declines.](https://arxiv.org/html/2601.19851v1/images/Even_when_I_was_faced_with_a_difficult_situation_I_was_able_to_confidently_decide_how_to_act.png)

Longitudinal Validation: Measuring Trust and Empowering Independence

A comprehensive longitudinal study was undertaken to assess how an autonomous robot integrated into the daily routines of individuals with blindness, focusing on real-world usability and overall impact. Participants interacted with the robot over an extended period, allowing researchers to observe not simply initial reactions, but also the evolution of their relationship with the technology. Data collection spanned multiple weeks, meticulously documenting how users adapted to the robot’s assistance in navigating environments, completing tasks, and maintaining independence. This extended observation period proved crucial in understanding the nuances of human-robot interaction, moving beyond laboratory settings to capture the complexities of integrating assistive technology into lived experience and identifying areas for refinement in design and functionality.

A core component of evaluating the autonomous robot was a longitudinal assessment of user trust, tracking how reliance on the system evolved over time. The question was not simply whether individuals could use the robot, but whether they would, and how comfortable they felt relinquishing control. Researchers tracked several trust-related metrics, including frequency of intervention, correction rates, and self-reported confidence, over several weeks of real-world use. The aim was to look beyond initial acceptance and gauge the sustained viability of the robot as an assistive device, examining whether trust grew, diminished, or plateaued as users became familiar with its capabilities and limitations. This extended observation period was crucial for determining whether the robot fostered genuine, long-term confidence in its ability to navigate and assist effectively.
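The trust metrics listed above can be summarized per week and then reduced to a trend. The sketch below computes an intervention rate per minute and an ordinary least-squares slope across weeks; a negative slope in interventions (or a positive slope in self-reported confidence) would be consistent with growing trust. The function names and the per-minute normalization are assumptions rather than the study's analysis, and the numbers in the usage lines are invented.

```python
def intervention_rate(interventions, duration_min):
    """Interventions (takeovers or corrections) per minute of robot-guided walking."""
    if duration_min <= 0:
        raise ValueError("duration must be positive")
    return interventions / duration_min

def trend_slope(values):
    """Ordinary least-squares slope of values against week index 1, 2, ..., n."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two weeks to estimate a trend")
    weeks = range(1, n + 1)
    mean_x = (n + 1) / 2
    mean_y = sum(values) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(weeks, values))
    den = sum((x - mean_x) ** 2 for x in weeks)
    return num / den

# Invented example: (interventions, minutes) for weeks 1 to 3.
weekly = [(6, 20), (4, 20), (2, 20)]
rates = [intervention_rate(i, m) for i, m in weekly]
print(trend_slope(rates))  # negative: fewer interventions per minute each week
```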

Longitudinal evaluation of the autonomous robot revealed substantial gains in both user confidence and independent living skills among individuals with blindness, thereby substantiating the efficacy of the implemented shared control approach. The study demonstrated that participants not only grew more comfortable with the robot’s assistance over time, but also actively incorporated its capabilities into their daily routines, reducing reliance on traditional support systems. This increase in self-reliance wasn’t merely perceptual; observed behaviors indicated a greater willingness to navigate unfamiliar environments and undertake previously challenging tasks. The findings strongly suggest that a collaborative partnership between human and robot can effectively empower visually impaired individuals, fostering a heightened sense of autonomy and improving overall quality of life.

Analysis of user interaction revealed a compelling trend in how individuals with blindness integrated the autonomous robot into their daily lives, as measured by the ‘Delegation Rate’. While initial reliance varied considerably, the study documented a notable increase in this rate over time, with a subset of participants ultimately achieving complete delegation: entrusting the robot with 100% of the navigational tasks in later weeks. This suggests a growing acceptance of the robot as a dependable aid and a corresponding increase in user trust. Although overall confidence levels also trended upward, the rate of improvement differed markedly between individuals, highlighting the importance of personalized adaptation and training to maximize the benefits of such assistive technologies. This individual variability underscores that successful human-robot collaboration is not simply about technological capability, but also about fostering appropriate levels of trust and confidence in each user.
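The source does not give a formula for the Delegation Rate. A minimal sketch, assuming it is the fraction of logged decision points at which the participant let the robot act rather than acting themselves, computed per participant and week:

```python
from collections import defaultdict

def delegation_rates(events):
    """events: iterable of (participant, week, delegated) tuples, one per decision point,
    where delegated is True if the participant let the robot handle that decision.
    Returns {(participant, week): fraction of decision points delegated}."""
    counts = defaultdict(lambda: [0, 0])  # [delegated, total] per (participant, week)
    for participant, week, delegated in events:
        tally = counts[(participant, week)]
        tally[0] += int(delegated)
        tally[1] += 1
    return {key: d / t for key, (d, t) in counts.items()}

# Hypothetical log, not data from the study.
log = [("P5", 1, False), ("P5", 1, True), ("P5", 3, True), ("P5", 3, True)]
print(delegation_rates(log))  # {('P5', 1): 0.5, ('P5', 3): 1.0}
```

Under this assumed definition, "complete delegation" corresponds to a rate of 1.0 for that participant and week.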


Towards Ubiquitous Assistance: Charting the Future of Robotic Navigation

Researchers are actively pursuing enhancements to robotic navigation systems, moving beyond controlled laboratory settings towards real-world complexity. Current efforts prioritize equipping robots with the ability to dynamically assess and respond to unforeseen obstacles, changing lighting conditions, and crowded, unpredictable pedestrian traffic. This involves integrating advanced sensor fusion techniques, sophisticated mapping algorithms, and machine learning models trained on diverse datasets of environmental variations. The intention is not simply to avoid collisions, but to enable the robot to proactively anticipate potential hazards and adjust its path accordingly, mirroring the adaptability of human navigation and ultimately fostering trust in increasingly challenging environments.

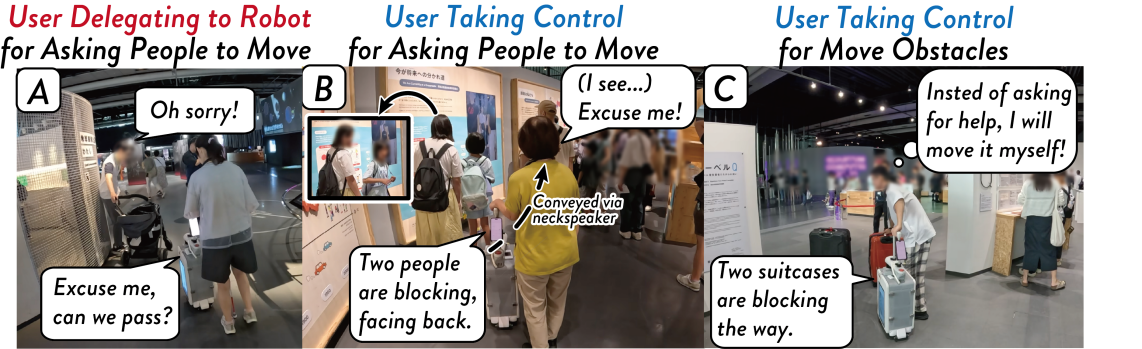

Advancements in robotic navigation are increasingly focused on enabling robots to operate effectively within human-populated spaces, necessitating the integration of sophisticated ‘Social Navigation’ features. This goes beyond simply avoiding collisions; it involves predicting pedestrian movements, understanding social cues like gaze and body language, and adhering to unwritten rules of pedestrian etiquette. Researchers are developing algorithms that allow robots to anticipate how people will react to their presence, enabling them to navigate crowded environments with greater grace and predictability. By interpreting these subtle social signals, a robot can choose paths that minimize disruption and maximize comfort for those around it, ultimately fostering a more natural and cooperative interaction between humans and robots in shared spaces.
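A widely used way to encode personal space in social navigation planners is an asymmetric Gaussian cost centered on each person and stretched in the direction they are facing. The sketch below assumes that model with hypothetical spread parameters; it captures only position and heading, not gaze or body language, and the source does not say whether the guide robot uses it. A planner would add this cost along each candidate path, so that passing behind someone is preferred to cutting across their way.

```python
import math

def personal_space_cost(point, person_pos, heading_rad,
                        sigma_front=1.2, sigma_side=0.6, sigma_back=0.5):
    """Asymmetric Gaussian personal-space cost in (0, 1], equal to 1 at the person.

    The spread is larger in front of the person (sigma_front) than behind
    (sigma_back); all sigma values are hypothetical, in meters.
    """
    dx = point[0] - person_pos[0]
    dy = point[1] - person_pos[1]
    c, s = math.cos(heading_rad), math.sin(heading_rad)
    forward = c * dx + s * dy   # offset along the person's facing direction
    lateral = -s * dx + c * dy  # offset to the person's left
    sigma_fwd = sigma_front if forward >= 0 else sigma_back
    return math.exp(-(forward ** 2 / (2 * sigma_fwd ** 2)
                      + lateral ** 2 / (2 * sigma_side ** 2)))

# Person at the origin facing +x: 1 m in front costs more than 1 m behind.
print(round(personal_space_cost((1.0, 0.0), (0.0, 0.0), 0.0), 3))   # 0.707
print(round(personal_space_cost((-1.0, 0.0), (0.0, 0.0), 0.0), 3))  # 0.135
```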

The development of advanced robotic navigation systems ultimately aims to redefine independence for individuals with blindness. This assistive technology envisions a future where spatial awareness and environmental understanding are no longer barriers to full participation in daily life. Researchers are striving to move beyond simple obstacle avoidance, focusing instead on creating a symbiotic relationship between the user and the robot – one that anticipates needs, understands social cues, and provides discreet, intuitive guidance. The ambition extends to enabling effortless navigation in crowded spaces, dynamic environments, and even unfamiliar locations, fostering a sense of confidence and self-reliance previously unattainable. This isn’t merely about overcoming a sensory limitation; it’s about unlocking potential and empowering individuals to pursue their goals without constraint, fundamentally reshaping the landscape of assistive technology.

Advancing robotic navigation for widespread adoption necessitates sustained investigation into several interconnected areas. Robust perception systems must evolve to reliably interpret dynamic and often ambiguous real-world environments, while intelligent decision-making algorithms need to move beyond simple path planning to anticipate potential obstacles and adapt to unforeseen circumstances. Crucially, building trustworthy human-robot interaction is paramount; this extends beyond functional safety to encompass ‘Social Interaction’, allowing the robot to understand and respond appropriately to social cues, navigate crowded spaces with consideration for pedestrian behavior, and ultimately foster a sense of comfort and collaboration with the user. Further research in these areas promises not simply automated movement, but a truly integrated assistive experience.

The study’s longitudinal approach reveals a fascinating dynamic in human-robot collaboration, mirroring the refinement observed in complex mathematical proofs. Initially, users delegate cautiously, gradually increasing trust and relinquishing control as the robot demonstrates consistent reliability, a process akin to iteratively strengthening an inductive hypothesis. As users adapt their delegation strategies to contextual cues, the system’s effectiveness improves, in a way reminiscent of asymptotic convergence toward optimal shared control. Paul Erdős aptly stated, “A mathematician knows a lot of things, but he doesn’t know everything.” This observation parallels the iterative learning process observed; both user and robot progressively expand their ‘knowledge’ of effective interaction through repeated experience and refinement.

Beyond Assistance: Charting a Course for True Collaboration

The observed adaptation in delegation strategies, while encouraging, merely scratches the surface of a far more complex problem. Current evaluations predominantly focus on functional success – did the user reach the destination? – rather than rigorously quantifying the quality of the interaction itself. A truly elegant solution demands a formalization of ‘trust’ and ‘situational awareness’ within the assistive system, moving beyond empirical observation to provable behavioral models. Until robotic collaboration is grounded in logically defensible principles, it remains an exercise in applied heuristics, not a science.

Future work must confront the limitations of short-term longitudinal studies. A week, or even a month, offers insufficient data to discern genuine behavioral shifts from transient adaptation. Decades, perhaps, are required to evaluate the long-term impact of such systems on user autonomy and spatial cognition. The current emphasis on GPT-based descriptions, while superficially appealing, risks obscuring fundamental flaws in the robotic control architecture. A verbose explanation of a faulty mechanism does not render it correct.

The ultimate goal should not be to create robots that simulate social interaction, but rather to develop systems capable of genuine collaboration. This necessitates a move away from purely behavioral assessments and towards a formal verification of the robot’s reasoning process. Until the underlying algorithms are demonstrably correct, any claims of successful assistive robotics remain, at best, optimistic conjectures.

Original article: https://arxiv.org/pdf/2601.19851.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-28 10:26