Author: Denis Avetisyan

Current autonomous AI systems often prove fragile in real-world scenarios, and this paper proposes a system-theoretic approach and a catalog of design patterns to address that instability.

The paper introduces a framework grounded in systems theory to improve the robustness and predictability of agentic AI systems.

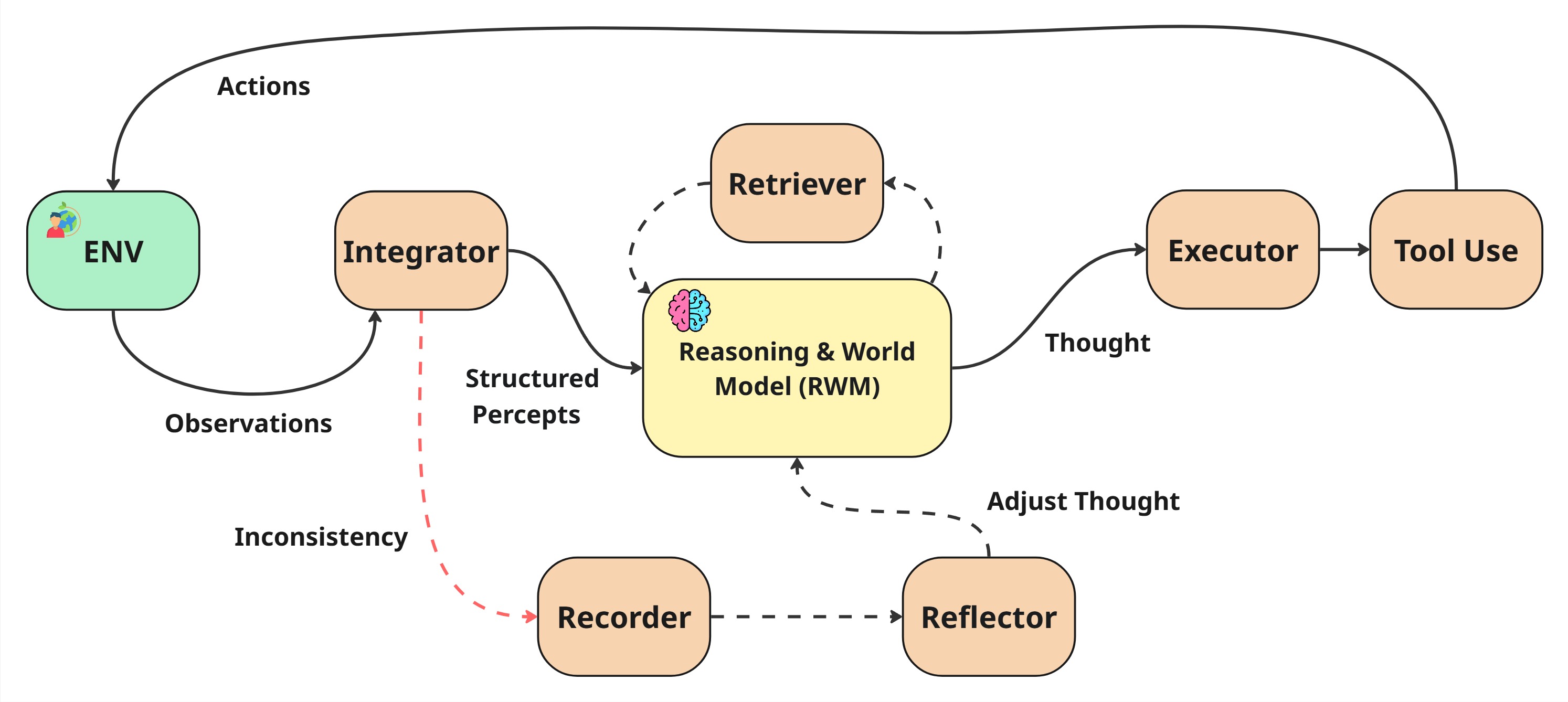

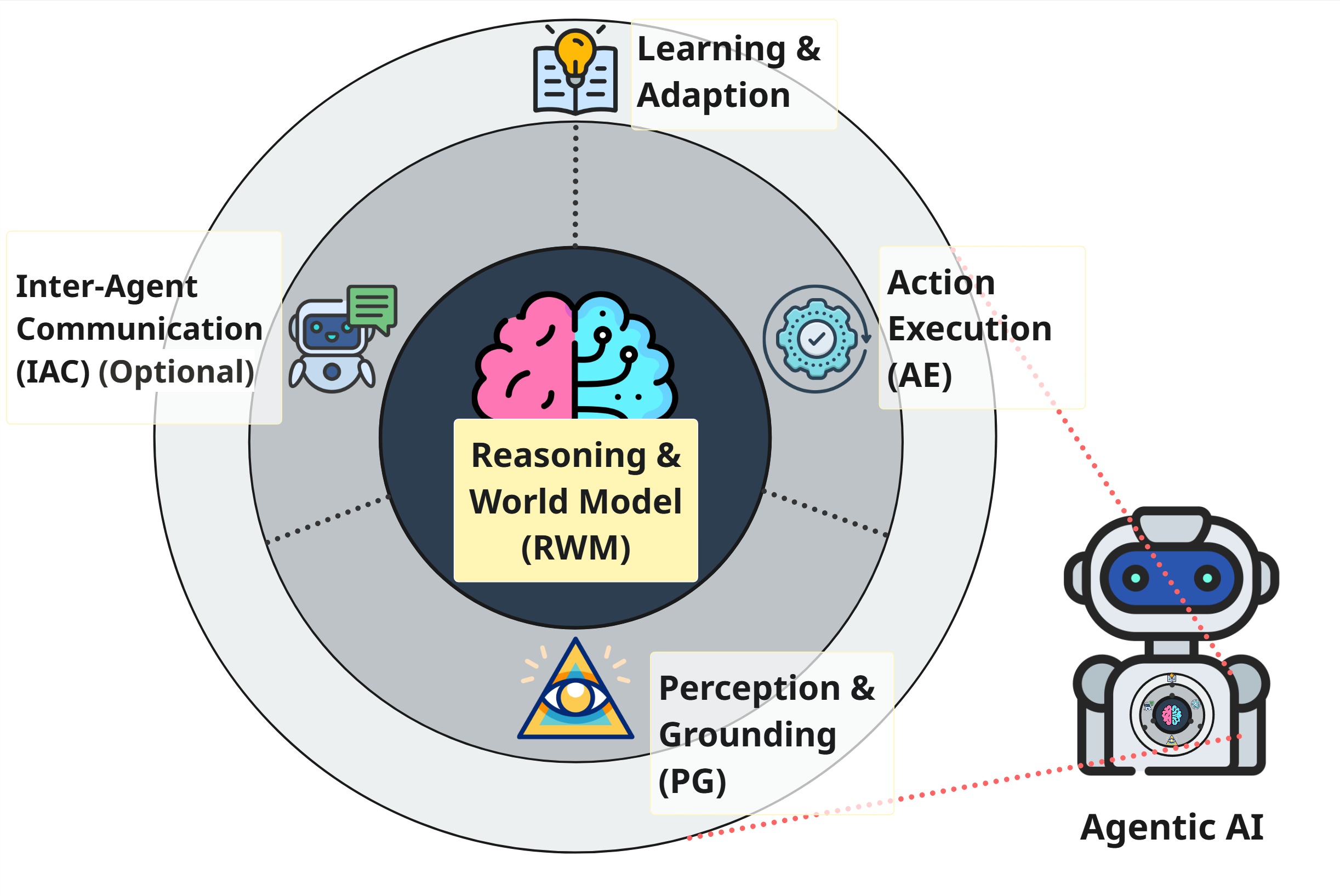

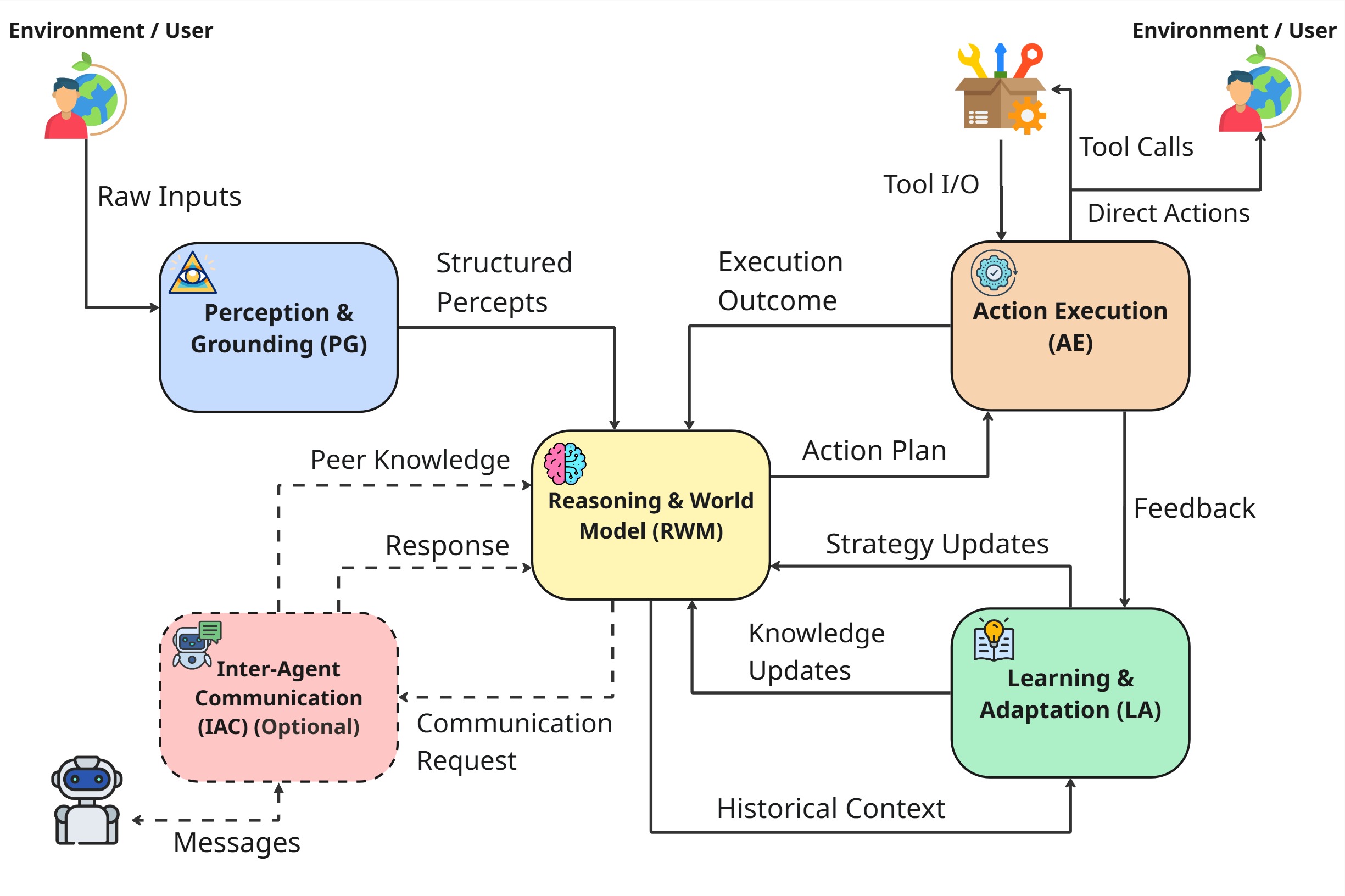

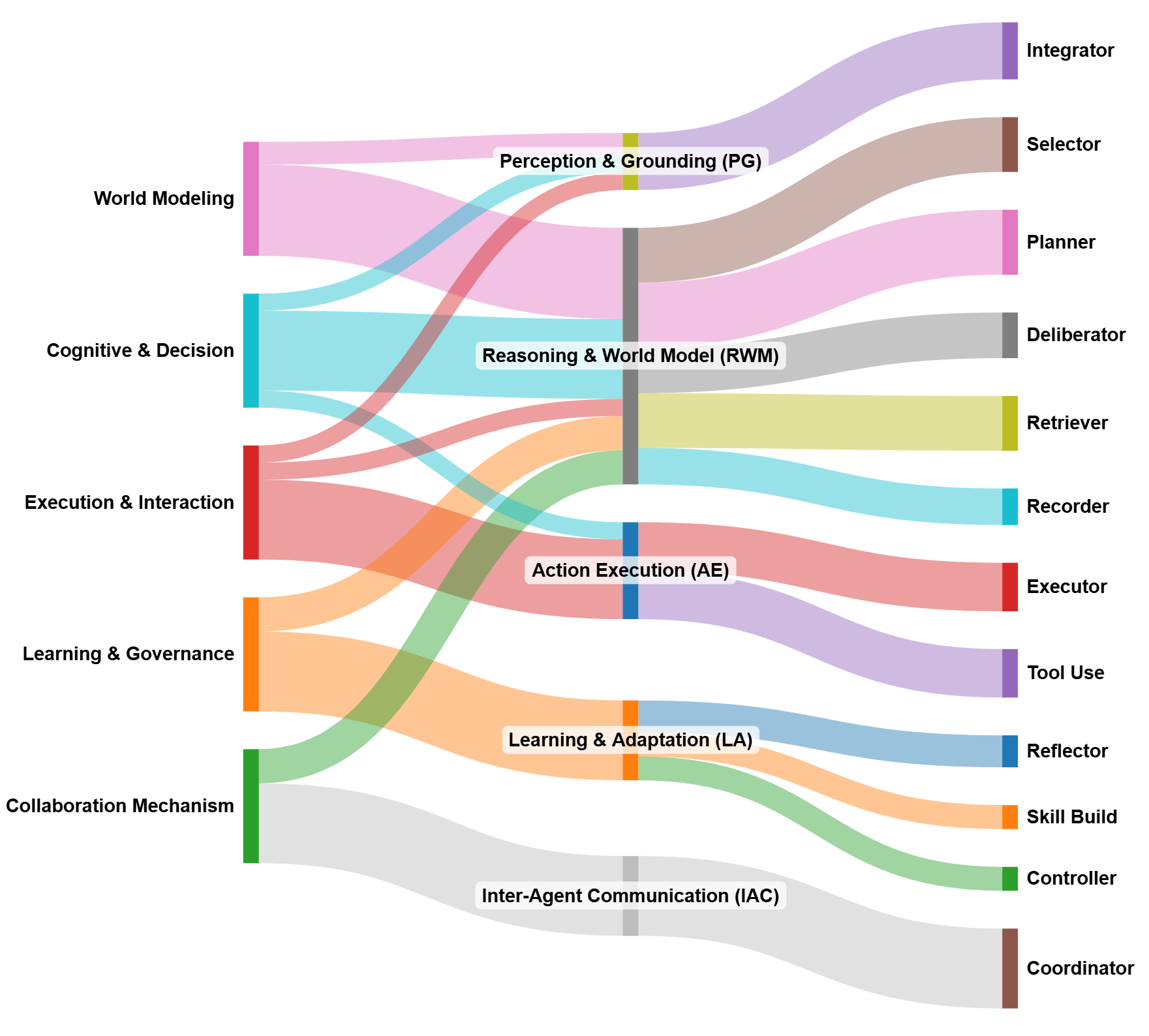

Despite the growing capabilities of foundation models, agentic AI systems remain prone to brittleness and unreliable performance because they are often built with ad-hoc design practices. The paper ‘Agentic Design Patterns: A System-Theoretic Framework’ addresses this challenge with a principled methodology, grounded in systems theory, for engineering robust autonomous agents. It decomposes agentic systems into five core subsystems (Reasoning & World Model, Perception & Grounding, Action Execution, Learning & Adaptation, and Inter-Agent Communication) and derives from them a catalog of 12 reusable design patterns, categorized by function. The open question is whether this standardized vocabulary and structured approach can foster agentic AI systems that are more modular, more understandable, and ultimately more reliable.

Breaking the Boundaries: The Limits of Current AI Paradigms

Despite remarkable advancements, contemporary artificial intelligence systems frequently demonstrate limitations when confronted with tasks demanding complex reasoning or genuine adaptability to the real world. These systems, often excelling at pattern recognition within narrowly defined datasets, struggle to generalize knowledge to novel situations or integrate diverse information sources effectively. While proficient at tasks like image classification or language translation, they often falter when required to perform common-sense reasoning, understand nuanced context, or navigate unpredictable environments – areas where human intelligence remains vastly superior. This disconnect stems from a reliance on statistical correlations rather than a true understanding of underlying principles, hindering their ability to extrapolate beyond the training data and apply learned concepts flexibly.

The current trajectory of artificial intelligence development heavily emphasizes scaling foundation models – increasing their size and the datasets they are trained on. However, this approach is rapidly encountering significant limitations. The computational demands of training and deploying these massive models are astronomical, requiring immense energy consumption and specialized hardware, effectively concentrating AI development within organizations possessing substantial resources. Beyond the sheer cost, scaling delivers diminishing returns; simply increasing model size doesn’t necessarily translate to proportional improvements in reasoning or generalization abilities. Furthermore, the datasets required for training are often curated from the internet, inheriting biases and inaccuracies that are then amplified within the model. This creates a practical ceiling on performance gains, suggesting that continued reliance on brute-force scaling is not a sustainable path toward genuinely intelligent systems.

The future of artificial intelligence likely resides not in simply building larger models, but in crafting more intelligently designed ones. Current foundation models, while demonstrating impressive feats of pattern recognition, often lack the robust reasoning and adaptability required for true intelligence. Researchers are increasingly focused on architectures that prioritize efficiency and structure, moving beyond the “brute force” approach of scaling parameters. This includes exploring techniques like neuro-symbolic AI, which combines the strengths of neural networks with symbolic reasoning, and graph neural networks, which excel at representing and processing relational data. These advancements promise to unlock capabilities beyond the reach of current models, potentially leading to AI systems that are more explainable, generalizable, and resource-efficient – a crucial step toward realizing the full potential of artificial intelligence.

Deconstructing Agency: Core Subsystems for Intelligent Action

Agentic AI systems, capable of autonomous operation and complex task completion, are built around a core loop formed by three of the framework's five subsystems: Perception & Grounding, Reasoning & World Model, and Action Execution. Perception & Grounding processes raw sensory input, such as images, text, or sensor data, and converts it into a structured, machine-readable format. Reasoning & World Model uses this information, together with an internal world model, to plan and select appropriate actions based on defined goals and constraints. Action Execution then reliably translates these planned actions into physical or digital outputs, interacting with the environment to achieve the desired outcome. Learning & Adaptation and Inter-Agent Communication, discussed later, build on this loop. Integrating and coordinating these three subsystems is essential for an agentic system to navigate and respond to dynamic, real-world challenges.
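The loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the paper's design: the `Agent` class and its `perceive`, `reason`, and `execute` callables are hypothetical stand-ins for the three subsystems, instantiated here with a toy thermostat.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """Hypothetical sense-think-act loop; each callable stands in for one subsystem."""
    perceive: Callable[[str], dict]  # Perception & Grounding: raw input -> structured observation
    reason: Callable[[dict], str]    # Reasoning & World Model: observation -> chosen action
    execute: Callable[[str], bool]   # Action Execution: action -> success flag
    history: list = field(default_factory=list)

    def step(self, raw_input: str) -> bool:
        observation = self.perceive(raw_input)
        action = self.reason(observation)
        succeeded = self.execute(action)
        # Keep a trace of each cycle so later stages (e.g. learning) can inspect it.
        self.history.append((observation, action, succeeded))
        return succeeded


# Toy instantiation: a thermostat that turns heating on below 20 °C.
thermostat = Agent(
    perceive=lambda raw: {"temp_c": float(raw)},
    reason=lambda obs: "heat_on" if obs["temp_c"] < 20.0 else "heat_off",
    execute=lambda action: action in {"heat_on", "heat_off"},
)
thermostat.step("18.5")
```

Keeping each subsystem behind its own callable is what makes them swappable: a different perception front end or planner can be dropped in without touching the other two.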

The Reasoning & World Model subsystem constructs and maintains an internal representation of the agent’s environment, encompassing both static features and dynamic states. This representation, often implemented as a knowledge graph or probabilistic model, allows the agent to simulate potential actions and predict their outcomes. Strategic behavior is then guided by algorithms – such as planning, decision trees, or reinforcement learning – operating on this world model to select optimal action sequences. The fidelity of the world model directly impacts the agent’s ability to anticipate consequences and adapt to changing conditions, necessitating continuous updating based on perceptual input and action outcomes. Furthermore, this subsystem facilitates counterfactual reasoning, enabling the agent to evaluate alternative courses of action without physically enacting them.
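Counterfactual reasoning over a world model can be illustrated with a deliberately tiny example. The sketch below assumes a hypothetical one-dimensional world (`predict` is the world model) and uses exhaustive search over fixed-length action sequences, one simple planning technique chosen for clarity rather than taken from the paper. Every candidate is rolled out inside the model, so nothing is executed until a sequence is chosen.

```python
from itertools import product

ACTIONS = ("left", "stay", "right")


def predict(state: int, action: str) -> int:
    """Hypothetical world model: position on a 1-D line after one action."""
    return state + {"left": -1, "stay": 0, "right": 1}[action]


def score(state: int, goal: int) -> int:
    """Higher is better: negative distance to the goal."""
    return -abs(goal - state)


def plan(state: int, goal: int, horizon: int = 3) -> tuple:
    """Roll out every action sequence of length `horizon` inside the model
    (nothing is executed) and return the one with the best predicted outcome.
    Returns () when no sequence improves on the current state."""
    best_seq, best_score = (), score(state, goal)
    for seq in product(ACTIONS, repeat=horizon):
        simulated = state
        for action in seq:
            simulated = predict(simulated, action)
        if score(simulated, goal) > best_score:
            best_seq, best_score = seq, score(simulated, goal)
    return best_seq
```

Because the search is exhaustive, its cost grows as 3^horizon; practical planners replace it with heuristic search or learned policies, but the simulate-then-select structure is the same.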

Perception & Grounding and Action Execution connect the reasoning core to the outside world. Perception & Grounding takes raw data from sensors, which may be visual, auditory, or textual, and converts it into a structured, symbolic representation that the reasoning subsystem can use; this involves tasks such as object recognition, scene understanding, and natural language processing. Action Execution takes the output of the Reasoning & World Model, a chosen strategy or plan, and translates it into specific, low-level commands for actuators or effectors. To ensure that the agent's intended behavior is consistently achieved, Action Execution needs robust error handling, monitoring of task completion, and the ability to adapt to unforeseen circumstances.
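The error-handling and completion-monitoring duties of Action Execution might look like the following minimal sketch. `execute_with_retry` and the flaky actuator are hypothetical illustrations, not components named in the paper; the key assumption is that every command can be paired with an explicit check of whether it actually succeeded.

```python
import time
from typing import Any, Callable


def execute_with_retry(command: Callable[[], Any],
                       is_complete: Callable[[Any], bool],
                       max_attempts: int = 3,
                       backoff_s: float = 0.0) -> Any:
    """Hypothetical executor: run a low-level command, check that the task
    actually completed, and retry on exceptions or failed checks
    (linear backoff between attempts)."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            result = command()
            if is_complete(result):
                return result
            last_error = RuntimeError(f"attempt {attempt}: completion check failed")
        except Exception as exc:  # error handling: record the failure, then retry
            last_error = exc
        if attempt < max_attempts:
            time.sleep(backoff_s * attempt)
    raise RuntimeError(f"task not completed after {max_attempts} attempts") from last_error


# Usage with a hypothetical flaky actuator that succeeds on its third call.
calls = {"n": 0}


def flaky_actuator() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise IOError("actuator busy")
    return "done"


outcome = execute_with_retry(flaky_actuator, lambda r: r == "done")
```

Separating the command from its completion check means a call that returns without raising is still not trusted until the check confirms the intended effect.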

The Adaptive Loop: Learning, Governance, and Design Patterns

The Learning & Adaptation subsystem operates by collecting performance data from agent actions and utilizing this data to refine internal models and algorithms. This process involves monitoring key metrics such as task completion rates, error frequencies, and resource utilization. Collected data is then fed into learning algorithms – potentially including reinforcement learning or supervised learning techniques – to adjust agent parameters and improve future performance. The system is designed for continuous operation, enabling the agent to iteratively enhance its capabilities without requiring explicit reprogramming for each improvement; this allows for adaptation to changing environments and task requirements based on observed outcomes.
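One simple way to close this feedback loop is a multi-armed bandit. The sketch below swaps in epsilon-greedy selection as an illustrative technique (the paper does not prescribe it): a hypothetical `StrategySelector` records task outcomes per strategy and shifts future choices toward whichever strategy has the best observed success rate, with no reprogramming.

```python
import random
from collections import defaultdict


class StrategySelector:
    """Hypothetical Learning & Adaptation loop as an epsilon-greedy bandit:
    record each task outcome per strategy and prefer the best observed success rate."""

    def __init__(self, strategies, epsilon: float = 0.1, seed=None):
        self.strategies = list(strategies)
        self.epsilon = epsilon  # probability of exploring a random strategy
        self.rng = random.Random(seed)
        self.successes = defaultdict(int)
        self.trials = defaultdict(int)

    def success_rate(self, strategy) -> float:
        # Untried strategies are treated optimistically (1.0) so each gets tried.
        n = self.trials[strategy]
        return self.successes[strategy] / n if n else 1.0

    def choose(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.strategies)         # explore
        return max(self.strategies, key=self.success_rate)  # exploit

    def record(self, strategy, succeeded: bool) -> None:
        self.trials[strategy] += 1
        self.successes[strategy] += int(succeeded)
```

Setting `epsilon` above zero keeps a small amount of exploration, so a strategy that performed poorly early on can still be re-evaluated if conditions change.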

The Controller Pattern is a crucial architectural component for ensuring large language model (LLM) agents operate within defined ethical and value-based boundaries. This pattern establishes a dedicated module responsible for intercepting and validating agent actions before execution, comparing intended outputs against a pre-defined policy framework. Specifically, the controller assesses potential responses for harmful content, bias, or violations of specified constraints, and can modify or reject actions that fall outside acceptable parameters. This proactive approach to behavioral regulation differs from post-hoc filtering and allows for consistent enforcement of desired agent characteristics, irrespective of the complexity of the underlying model or input prompts. Implementation typically involves a rule-based system, a learned classifier, or a combination of both to determine action permissibility.
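A rule-based variant of the Controller Pattern is sketched below. The rules (`block_destructive`, `redact_emails`) and the `Verdict` type are hypothetical examples; a production controller might add a learned classifier as a further rule with the same signature. The essential property is that `review` runs before the action is executed and can reject it outright or rewrite it.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Verdict:
    allowed: bool
    action: Optional[str]  # the (possibly modified) action; None when rejected
    reason: str = ""


# A rule returns a Verdict when it objects to or rewrites an action, else None.
Rule = Callable[[str], Optional[Verdict]]

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")


def block_destructive(action: str) -> Optional[Verdict]:
    """Hypothetical policy rule: reject anything that starts with 'delete'."""
    if action.startswith("delete"):
        return Verdict(False, None, "destructive actions require human approval")
    return None


def redact_emails(action: str) -> Optional[Verdict]:
    """Hypothetical policy rule: allow the action but mask email addresses."""
    redacted = EMAIL.sub("[REDACTED]", action)
    return Verdict(True, redacted, "redacted email") if redacted != action else None


class Controller:
    """Intercepts each proposed action before execution and applies policy rules in order."""

    def __init__(self, rules: List[Rule]):
        self.rules = rules

    def review(self, action: str) -> Verdict:
        current, notes = action, []
        for rule in self.rules:
            verdict = rule(current)
            if verdict is None:
                continue
            if not verdict.allowed:
                return verdict        # reject: the action never reaches the executor
            current = verdict.action  # modify, then let later rules see the new version
            notes.append(verdict.reason)
        return Verdict(True, current, "; ".join(notes))

    def guarded_execute(self, action: str, execute: Callable[[str], str]) -> str:
        verdict = self.review(action)
        return execute(verdict.action) if verdict.allowed else f"rejected: {verdict.reason}"
```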

The Tool Use Pattern and Integrator Pattern are implemented to mitigate potential execution failures by providing structured interaction and data validation capabilities. The Tool Use Pattern enables the agent to leverage external tools with defined inputs, outputs, and error handling, isolating problematic code and increasing robustness. Complementing this, the Integrator Pattern establishes a standardized process for receiving data from tools, validating its format and content against predefined schemas, and ensuring data integrity before further processing. This combined approach reduces the risk of cascading errors and allows for targeted debugging when issues arise during agent execution.
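The two patterns can be sketched together under simple assumptions: tools are plain Python callables registered by name, and a schema is a hypothetical mapping from field names to expected types. `ToolRegistry.call` isolates tool failures into a `ToolResult` instead of letting exceptions propagate, and `integrate` refuses any output that does not match the schema.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class ToolResult:
    ok: bool
    data: Any = None
    error: str = ""


class ToolRegistry:
    """Tool Use sketch: every tool is invoked through one wrapper that contains failures."""

    def __init__(self) -> None:
        self._tools: dict = {}

    def register(self, name: str, fn: Callable[..., Any]) -> None:
        self._tools[name] = fn

    def call(self, name: str, **kwargs: Any) -> ToolResult:
        if name not in self._tools:
            return ToolResult(False, error=f"unknown tool: {name}")
        try:
            return ToolResult(True, data=self._tools[name](**kwargs))
        except Exception as exc:  # isolate the failure instead of crashing the agent
            return ToolResult(False, error=f"{name} failed: {exc}")


def integrate(result: ToolResult, schema: dict) -> dict:
    """Integrator sketch: accept tool output only if it matches a {field: type} schema."""
    if not result.ok:
        raise ValueError(result.error)
    if not isinstance(result.data, dict):
        raise ValueError("tool output is not a mapping")
    for field_name, expected in schema.items():
        if field_name not in result.data:
            raise ValueError(f"missing field: {field_name}")
        if not isinstance(result.data[field_name], expected):
            raise ValueError(f"field {field_name!r} should be {expected.__name__}")
    return result.data


# Hypothetical tools used only for illustration.
registry = ToolRegistry()
registry.register("weather", lambda city: {"city": city, "temp_c": 21.5})
registry.register("broken", lambda: 1 / 0)
reading = integrate(registry.call("weather", city="Oslo"), {"city": str, "temp_c": float})
```

A production system would more likely use a dedicated schema language such as JSON Schema; the hand-rolled type check here only keeps the example self-contained.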

From Individual to Collective: Scaling Intelligence with Multi-Agent Systems

The progression from single agentic AI to multi-agent systems represents a significant leap in artificial intelligence capabilities, moving beyond individual task completion toward collective problem-solving. These systems consist of multiple autonomous agents that interact and coordinate to achieve a common goal, mirroring the dynamics of social intelligence found in nature. Instead of relying on a monolithic AI, distributed intelligence emerges from the interplay of these agents, each potentially specializing in a particular skill or possessing unique information. This distributed approach not only enhances robustness – as the failure of one agent doesn’t necessarily cripple the entire system – but also unlocks the potential for tackling complex challenges that are beyond the scope of any single AI, allowing for parallel processing and a broader exploration of solution spaces. The resulting synergy enables innovation in areas ranging from robotics and resource management to scientific discovery and complex simulations.

The efficacy of multi-agent systems hinges significantly on the quality of communication between individual agents. Beyond simply transmitting data, effective inter-agent communication requires a shared understanding of information – a common ‘language’ encompassing not just syntax but also semantics and context. This allows agents to accurately interpret intentions, coordinate complex actions, and resolve conflicts without requiring a centralized controller. Research indicates that nuanced communication protocols, incorporating mechanisms for clarifying ambiguity and verifying information, dramatically improve system performance, particularly in dynamic and unpredictable environments. Without robust communication, agents risk misinterpreting signals, duplicating effort, or even working at cross-purposes, thereby negating the benefits of a distributed, collaborative approach to problem-solving.
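A shared message format with an explicit clarification path might look like the sketch below. The performative names are loosely inspired by FIPA ACL, which defines `inform` and `not-understood` among others; the simplified `query`, the `CommAgent` class, and the vocabulary check are hypothetical illustrations. An agent that meets a term outside its vocabulary replies `not-understood` instead of guessing.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Message:
    sender: str
    receiver: str
    performative: str     # the speech act: what the sender intends
    content: dict         # terms drawn from a shared vocabulary
    conversation_id: str  # ties replies to the exchange they belong to


class CommAgent:
    """Hypothetical agent that only acts on messages it can fully interpret."""
    HANDLED = {"inform", "query", "not-understood"}

    def __init__(self, name: str, vocabulary: set):
        self.name = name
        self.vocabulary = set(vocabulary)  # ontology terms this agent can interpret
        self.beliefs: dict = {}
        self.confusions: list = []

    def reply(self, to: Message, performative: str, content: dict) -> Message:
        return Message(self.name, to.sender, performative, content, to.conversation_id)

    def handle(self, msg: Message) -> Optional[Message]:
        if msg.performative == "not-understood":
            self.confusions.append(msg)  # the peer asked for clarification; do not loop
            return None
        unknown = sorted(set(msg.content) - self.vocabulary)
        if msg.performative not in self.HANDLED or unknown:
            # Ask for clarification instead of guessing at the meaning.
            return self.reply(msg, "not-understood",
                              {"performative": msg.performative, "unknown_terms": unknown})
        if msg.performative == "inform":
            self.beliefs.update(msg.content)
            return None
        # query: answer with current beliefs for each requested term
        return self.reply(msg, "inform", {k: self.beliefs.get(k) for k in msg.content})
```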

Integrating multiple AI agents into a cohesive team requires overcoming significant hurdles in how they collaborate. Beyond enabling communication, a robust system must establish trust between agents, especially when they hold incomplete or conflicting information. Effective coordination requires more than shared data: agents need to negotiate roles, manage dependencies, and resolve conflicts to avoid redundant or counterproductive actions. Communication itself is also a challenge, since agents must use efficient and unambiguous protocols, interpret nuanced signals, and adapt to environments where bandwidth or reliability may be limited. Unless these interwoven challenges of trust, coordination, and communication are addressed, the potential benefits of multi-agent systems (distributed problem-solving and enhanced intelligence) remain largely unrealized.
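Role negotiation with a trust adjustment can be sketched in the style of the Contract Net Protocol, in which a manager announces tasks and agents bid on them. Everything here is a hypothetical illustration rather than the paper's mechanism: bids are self-reported costs, each agent's trust score in (0, 1] inflates its effective cost when low, and each agent receives at most one task so effort is not duplicated.

```python
def allocate(tasks: list, bids: dict, trust: dict) -> dict:
    """Hypothetical contract-net-style allocation.

    tasks: task ids in priority order (the manager's announcements).
    bids:  {agent: {task: cost}}, self-reported; a missing task means "cannot do".
    trust: {agent: score in (0, 1]} for every bidder; effective cost = cost / trust,
           so a less trusted agent must claim a lower cost to win.
    Returns {task: agent}. Each agent wins at most one task; unbid tasks stay unassigned.
    """
    assignment, busy = {}, set()
    for task in tasks:
        candidates = [
            (offers[task] / trust[agent], agent)
            for agent, offers in bids.items()
            if task in offers and agent not in busy
        ]
        if candidates:
            _, winner = min(candidates)  # lowest effective cost; ties broken by agent name
            assignment[task] = winner
            busy.add(winner)
    return assignment


bids = {
    "mapper": {"map": 4.0, "scan": 2.0},
    "rover": {"map": 3.0},
    "drone": {"scan": 2.5},
}
trust = {"mapper": 1.0, "rover": 0.5, "drone": 1.0}
roles = allocate(["map", "scan", "paint"], bids, trust)
```

With these numbers the rover bids the lowest raw cost for `map` but loses it to the mapper, because halving its trust doubles its effective cost (3.0 / 0.5 = 6.0 > 4.0); `paint` receives no bids and stays unassigned.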

A Systemic Approach: Towards Robust and Ethical Agentic AI

Agentic AI development increasingly relies on established design patterns, analogous to those found in software engineering, to streamline the creation of autonomous systems. These patterns represent repeatable solutions to frequently encountered problems, such as task decomposition, resource management, and error handling within an agent’s operational environment. By abstracting complex functionalities into modular, reusable components, developers can significantly reduce development time and enhance system reliability. Common examples include patterns for goal setting, planning, and execution monitoring, as well as those focused on safe exploration and interaction with the external world. The adoption of these agentic design patterns isn’t merely about code reuse; it fosters a more standardized and predictable approach to building intelligent agents, ultimately improving their robustness and facilitating collaboration within the rapidly evolving field of artificial intelligence.

The creation of dependable, versatile, and ethical agentic AI benefits significantly from a systemic approach rooted in established design patterns and the principles of systems theory. Rather than constructing each agent from the ground up, developers can apply reusable patterns to recurring challenges in areas like planning, execution, and error handling, which fosters consistency and reduces development time. At the same time, viewing an agent not as an isolated entity but as a component of a broader system, one that interacts with an environment and with other agents, allows for a holistic understanding of its behavior. This systemic lens enables developers to anticipate emergent properties, design for robustness against unforeseen circumstances, and implement mechanisms for responsible governance, ultimately yielding AI agents that are not only intelligent but also predictable, adaptable, and aligned with desired outcomes.

Realizing the transformative potential of agentic AI hinges on overcoming significant hurdles in execution, interaction, learning, and governance. Current systems often struggle with reliably translating high-level goals into concrete actions, particularly in dynamic and unpredictable environments – a core execution challenge. Simultaneously, effective interaction requires agents to communicate and collaborate seamlessly with both humans and other AI, demanding nuanced understanding and adaptable communication strategies. Crucially, agents must also learn continuously and ethically; this necessitates robust mechanisms for monitoring, correcting, and aligning agent behavior with evolving societal values and preventing unintended consequences. Addressing these learning and governance challenges isn’t merely about technical refinement, but about establishing frameworks that ensure agentic AI is both powerful and beneficial, fostering trust and responsible deployment across all applications.

The pursuit of robust agentic AI, as detailed in this system-theoretic framework, mirrors a fundamental principle of mathematical exploration. G.H. Hardy famously stated, “A mathematician, like a painter or a poet, is a maker of patterns.” The point is not simply to create aesthetically pleasing structures but to uncover the rules that govern them. Similarly, this work does not just use design patterns; it systematically investigates how those patterns, the ‘code’ of agentic systems, can be constructed to ensure reliability. The catalog of patterns is an attempt to make the structure of autonomous agents explicit, so that agents do not merely appear intelligent but are built on a solid foundation of systemic principles.

Beyond Brittle Autonomy

The presented work attempts to move beyond the current state of agentic AI: systems that are demonstrably skilled at appearing intelligent but fail predictably when confronted with even minor deviations from their training distributions. The framework and its catalog of design patterns are presented not as solutions but as deliberately exposed scaffolding, an invitation to stress-test the underlying assumptions. True progress demands not simply building more complex systems but understanding why current architectures are so fragile. The exploration of world modelling through a system-theoretic lens hints at a necessary shift: from agents that react to stimuli to agents that actively and verifiably maintain internal consistency.

A crucial next step involves rigorous formalization. The patterns, as presented, remain largely descriptive. To move beyond heuristic improvement, each must be given a precise mathematical definition, allowing for automated verification and the identification of inherent limitations. This demands a willingness to embrace failure by actively seeking out the conditions under which these patterns break. Obfuscation in the guise of robustness only delays the inevitable. A transparent system, even a failing one, offers the opportunity for genuine understanding.

Ultimately, the long-term viability of agentic AI hinges not on achieving ever-more-convincing imitation of intelligence, but on building systems capable of demonstrable, reliable behaviour. The presented work, therefore, proposes a challenge: not to build better agents, but to build agents that reveal, with absolute clarity, the boundaries of their own competence. Only then can the cycle of brittle autonomy be truly broken.

Original article: https://arxiv.org/pdf/2601.19752.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-28 08:50