Author: Denis Avetisyan

As AI agents become increasingly complex, current interpretability methods fall short of providing the accountability needed for safe and reliable deployment.

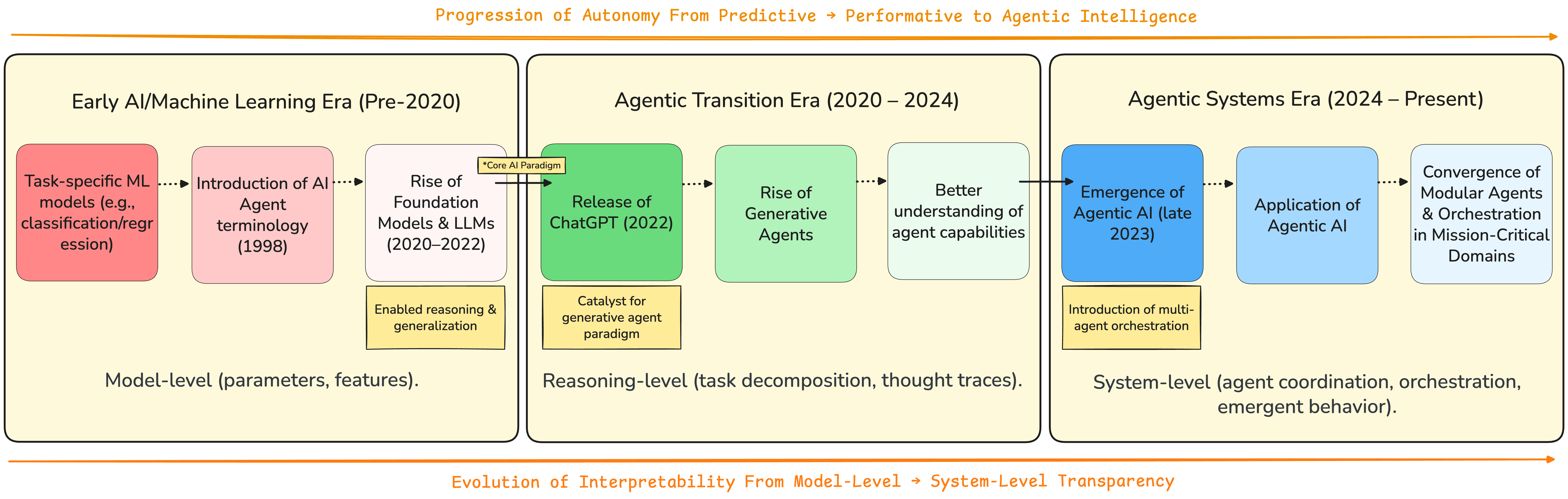

This review argues for a shift from model-centric explanations to system-level causal analysis in the pursuit of truly interpretable agentic systems.

While current AI interpretability efforts largely focus on explaining individual model predictions, this approach proves inadequate for increasingly complex agentic systems. The paper ‘Interpreting Agentic Systems: Beyond Model Explanations to System-Level Accountability’ addresses this limitation by arguing that understanding autonomous, goal-directed behavior necessitates a shift toward system-level causal analysis. This work demonstrates that existing interpretability techniques fall short when applied to agentic systems due to their temporal dynamics and compounding decisions. Can we develop new frameworks that provide meaningful oversight across the entire agent lifecycle, ensuring both safety and accountability in the deployment of autonomous AI?

The Illusion of Control: Beyond Reactive Systems

Conventional artificial intelligence often falters when confronted with tasks demanding prolonged thought and flexible responses to changing circumstances. These systems, typically designed for narrow, predefined objectives, exhibit limited capacity for sustained reasoning or adaptation to unforeseen challenges. Unlike humans, who readily adjust strategies and learn from experience, traditional AI relies heavily on pre-programmed rules and static datasets. This rigidity becomes particularly problematic in dynamic environments – such as real-world robotics or complex data analysis – where conditions are constantly evolving. Consequently, these systems frequently require extensive retraining or manual intervention to maintain performance, hindering their ability to function truly autonomously and efficiently in complex, unpredictable situations.

Agentic systems represent a significant leap beyond conventional artificial intelligence, using large language models as the core of systems that operate with a substantial degree of autonomy. These systems don’t merely respond to prompts; they formulate goals, break them down into manageable steps, and execute those steps through coordinated action. This is accomplished by equipping language models with tools (access to APIs, databases, or even other AI models) that let them gather information, make decisions, and iterate on solutions on their own. Crucially, the sequence of actions isn’t fixed in advance: it emerges as the agent observes the result of each step and adjusts its strategy, which lets it tackle complex, open-ended tasks that would overwhelm traditional AI. Potential applications include automating intricate workflows, conducting independent research, and collaborating with humans on adaptive, multi-step work.
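The plan-act-observe cycle described above can be sketched as a loop in which a policy repeatedly chooses either a tool call or a final answer. This is a minimal sketch under stated assumptions: `stub_planner` is a hard-coded stand-in for a language model, and the tool registry, the `add` tool, and `run_agent` are hypothetical names, not an API from the paper.

```python
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class ToolCall:
    tool: str
    argument: str


@dataclass
class Finish:
    answer: str


Action = Union[ToolCall, Finish]


def add_tool(expression: str) -> str:
    """Hypothetical toy tool: sums integers written as 'a+b+...'."""
    return str(sum(int(part) for part in expression.split("+")))


# Hypothetical tool registry: tool name -> callable.
TOOLS: dict[str, Callable[[str], str]] = {"add": add_tool}


def stub_planner(goal: str, observations: list[str]) -> Action:
    """Stand-in for an LLM policy (assumption): call one tool, then answer."""
    if not observations:
        return ToolCall(tool="add", argument=goal)
    return Finish(answer=observations[-1])


def run_agent(
    goal: str,
    planner: Callable[[str, list[str]], Action],
    max_steps: int = 5,
) -> tuple[str, list[str]]:
    """Loop: ask the planner for an action, execute it, feed back the observation."""
    observations: list[str] = []
    trace: list[str] = []  # step-by-step record of what the agent did
    for _ in range(max_steps):
        action = planner(goal, observations)
        if isinstance(action, Finish):
            trace.append(f"finish -> {action.answer}")
            return action.answer, trace
        result = TOOLS[action.tool](action.argument)
        observations.append(result)
        trace.append(f"{action.tool}({action.argument}) -> {result}")
    raise RuntimeError("step budget exhausted without finishing")


answer, trace = run_agent("2+2", stub_planner)
print(answer)  # 4
print(trace)   # ['add(2+2) -> 4', 'finish -> 4']
```

The returned trace matters as much as the answer: it is the kind of step-level record that any system-level accountability analysis would need to inspect.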

The advent of agentic systems signals a fundamental re-evaluation of artificial intelligence development. Traditional approaches, centered on task-specific models, are giving way to a focus on building systems capable of autonomous operation and collaborative problem-solving. This paradigm shift prioritizes adaptability and resilience, moving beyond pre-programmed responses towards intelligence that can dynamically adjust to unforeseen circumstances and coordinate actions with other agents, or even humans. The emphasis is no longer solely on achieving high performance on narrow benchmarks, but on cultivating systems that exhibit general cognitive abilities, fostering a future where AI functions as a flexible, cooperative partner in tackling complex challenges. This calls for new methods that draw on reinforcement learning, the study of emergent behavior, and robust communication protocols, effectively redefining the core principles of AI design.

![This agentic system core integrates perception, planning, and action to execute tasks such as web browsing, code execution, and mathematical problem solving; the figure illustrates the flow with a simple arithmetic query, [latex]2 + 2 = 4[/latex].](https://arxiv.org/html/2601.17168v1/revised_agentic_anatomy_bigger.png)

The Echo of Experience: Persistent Memory Architectures

Agentic systems, distinguished by their autonomous operation and proactive behavior, necessitate memory capabilities exceeding those provided by typical short-term contextual windows. These systems function by maintaining internal state – a record of past interactions, learned information, and current goals – which is crucial for consistent and informed decision-making. Unlike stateless systems that process each input independently, agentic systems leverage persistent memory to store and recall this state, enabling them to learn from experience, refine strategies over time, and adapt to novel situations without requiring constant external prompting or re-initialization. The ability to retain and utilize information across multiple interactions is fundamental to achieving complex, long-term objectives and demonstrating genuinely intelligent behavior.

Persistent memory in agentic systems is not a single data store, but a layered architecture composed of distinct memory types. Semantic Memory functions as a knowledge base, storing generalized facts and concepts independent of specific experiences. Complementing this is Episodic Memory, which records specific events, including context and timing, allowing the system to recall past interactions. Finally, Vector-Based Memory uses vector embeddings to represent data points in a high-dimensional space, enabling efficient similarity searches and recall based on semantic relatedness rather than exact matches; this representation helps with incomplete or noisy data and supports reasoning by analogy.
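The three layers can be made concrete with a small in-process store. This is a minimal sketch, assuming a toy bag-of-words "embedding" in place of a learned embedding model; because word counts only capture lexical overlap, it shows the retrieval mechanics (embed, score by cosine similarity, return the top matches) rather than genuine semantic similarity. The class and method names are hypothetical.

```python
import math
from collections import Counter
from dataclasses import dataclass, field


def embed(text: str) -> Counter:
    """Toy embedding (assumption): word counts instead of a learned vector model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


@dataclass
class AgentMemory:
    semantic: dict[str, str] = field(default_factory=dict)             # generalized facts
    episodic: list[tuple[int, str]] = field(default_factory=list)      # (step, event)
    vectors: list[tuple[Counter, str]] = field(default_factory=list)   # (embedding, text)

    def learn_fact(self, key: str, value: str) -> None:
        self.semantic[key] = value

    def record_event(self, step: int, event: str) -> None:
        # Each event is stored both in time order and as a searchable vector.
        self.episodic.append((step, event))
        self.vectors.append((embed(event), event))

    def recall_similar(self, query: str, k: int = 1) -> list[str]:
        """Return the k stored events most similar to the query."""
        q = embed(query)
        ranked = sorted(self.vectors, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


memory = AgentMemory()
memory.learn_fact("capital_of_france", "Paris")
memory.record_event(1, "user asked for a flight to Paris")
memory.record_event(2, "booking API returned a timeout error")
print(memory.recall_similar("why did the booking fail"))
# ['booking API returned a timeout error']
```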

Agentic systems relying solely on short-term context lack the capacity for sustained reasoning and complex behavior. The inability to retain and recall past experiences – encompassing both factual knowledge and specific event details – fundamentally restricts their planning capabilities to the immediate present. Consequently, adaptation to dynamic environments is severely hampered, as systems cannot learn from prior interactions or anticipate future needs. This limitation prevents the emergence of genuinely intelligent behavior, as true intelligence necessitates the integration of past, present, and projected future states, a process impossible without a robust persistent memory architecture.

The Illusion of Understanding: Interpreting the Internal Logic

As agentic systems (those capable of autonomous action and decision-making) grow more complex, their internal reasoning becomes less transparent. This opacity stems from the interaction of numerous parameters, learned associations, and feedback loops, making it difficult to trace the causal chain from input to output. Consequently, potential sources of error, including data bias embedded during training, algorithmic flaws, and unforeseen edge cases, become harder to identify and mitigate. The lack of interpretability introduces risks of unintended consequences, as system behavior may deviate from intended goals or exhibit undesirable side effects without clear explanation or predictability, hindering reliable deployment and user trust.

SHAP (SHapley Additive exPlanations) values and Temporal Causal Analysis are crucial techniques for understanding the internal logic of complex agentic systems. SHAP values, rooted in game theory, quantify the contribution of each input feature to a model’s prediction, enabling identification of influential factors driving decision-making. Temporal Causal Analysis extends this by examining the sequence of events and feature interactions over time that lead to a particular outcome. This is critical for agentic systems, where decisions are made sequentially and past actions impact future states. By pinpointing causal relationships between inputs, internal states, and outputs, these techniques allow developers to detect unintended biases, logical errors, and potential vulnerabilities that might not be apparent through simple input-output analysis, thereby improving system transparency and trustworthiness.
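The game-theoretic idea behind SHAP can be shown by computing exact Shapley values through enumeration of every coalition of features. This is a sketch, not the SHAP library: it assumes that an "absent" feature simply takes a fixed baseline value (SHAP implementations typically average over background data and use approximations, because exact enumeration grows exponentially with the number of features). The function names and the toy model are hypothetical.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def shapley_values(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> list[float]:
    """Exact Shapley values; absent features take their baseline value (assumption)."""
    n = len(x)

    def value(coalition: frozenset[int]) -> float:
        # Model output when only the features in `coalition` take their real values.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Toy model: feature 0 acts alone; features 1 and 2 only matter together.
def toy_model(z: Sequence[float]) -> float:
    return 2 * z[0] + z[1] * z[2]


phi = shapley_values(toy_model, x=[1.0, 1.0, 1.0], baseline=[0.0, 0.0, 0.0])
print(phi)  # approximately [2.0, 0.5, 0.5]
```

The interaction term is split evenly between features 1 and 2, and the values sum to the gap between the model's output and its baseline output (3.0 here). One hedged way to move toward the temporal analysis described above is to treat each step of an agent's trajectory as a "feature" and the final outcome as the model output, though that is only a rough analogue of a full temporal causal analysis.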

System-level interpretability addresses the limitations of analyzing individual components of agentic systems by focusing on the emergent behaviors arising from their interactions. Current interpretability techniques, such as feature attribution and saliency maps, often fail to capture these systemic effects, providing an incomplete picture of the agent’s reasoning. This paper argues that a shift toward understanding causal relationships at the system level is necessary, moving beyond component-wise explanations to assess how interactions between modules contribute to overall system behavior. Consequently, new evaluation frameworks are required to establish safety and accountability in autonomous AI, focusing on systemic risks rather than isolated component failures and enabling a more comprehensive assessment of agent trustworthiness.
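One simple way to ask a system-level causal question is to intervene on a whole component (replace it, in the spirit of a do-intervention) and measure how the end-to-end outcome changes, instead of attributing a single model's output to its inputs. The following is a minimal sketch under assumptions: the three-module pipeline, the identity "ablation", and the success criterion are all hypothetical, and it only intervenes on one module at a time, so interaction effects between modules would require joint interventions.

```python
from typing import Callable

Module = Callable[[str], str]


def run_pipeline(modules: dict[str, Module], order: list[str], task: str) -> str:
    """Pass the task through each module in order; each module maps state -> state."""
    state = task
    for name in order:
        state = modules[name](state)
    return state


def component_effects(
    modules: dict[str, Module],
    order: list[str],
    interventions: dict[str, Module],
    tasks: list[str],
    success: Callable[[str, str], bool],
) -> dict[str, float]:
    """Drop in success rate when a single module is replaced by an intervention."""

    def success_rate(mods: dict[str, Module]) -> float:
        return sum(success(t, run_pipeline(mods, order, t)) for t in tasks) / len(tasks)

    baseline = success_rate(modules)
    effects: dict[str, float] = {}
    for name, replacement in interventions.items():
        patched = {**modules, name: replacement}  # intervene on one component only
        effects[name] = baseline - success_rate(patched)
    return effects


# Hypothetical toy pipeline: the actor only succeeds when a plan is present.
modules: dict[str, Module] = {
    "retrieve": lambda s: s + " +docs",
    "plan": lambda s: s + " +plan",
    "act": lambda s: "done" if "+plan" in s else "fail",
}
identity: Module = lambda s: s  # ablation: the module passes state through unchanged

effects = component_effects(
    modules,
    order=["retrieve", "plan", "act"],
    interventions={"retrieve": identity, "plan": identity},
    tasks=["task-1", "task-2"],
    success=lambda task, out: out == "done",
)
print(effects)  # {'retrieve': 0.0, 'plan': 1.0}
```

Here the outcome depends causally on the planner but not on retrieval, a fact that is a property of how the modules interact rather than of any single model's internals.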

The Hive Mind Emerges: From Assistants to Collaborative Entities

Agentic systems derive their most significant potential not from individual prowess, but from coordinated collaboration. While a single agent can accomplish defined tasks, the true breakthrough arrives when multiple agents synergize, exceeding the limitations of any single entity. This isn’t simply about dividing work; it’s about agents recognizing each other’s strengths, compensating for weaknesses, and dynamically adjusting strategies to achieve a collective outcome. Such coordination enables the tackling of complex problems requiring diverse expertise, fostering emergent behaviors and innovative solutions unattainable through isolated intelligence. The capacity for these systems to function as a cohesive unit, rather than a collection of independent actors, represents a fundamental shift in how computation is approached, promising advancements across numerous domains.

The emergence of large language model (LLM)-based agents has rapidly transformed the landscape of software development, with early tools like ChatGPT, GitHub Copilot, and Claude offering a glimpse into the future of coding assistance. These agents aren’t simply sophisticated autocomplete features; they demonstrate an ability to understand natural language requests and translate them into functional code, significantly accelerating the development process. GitHub Copilot, for instance, can suggest entire code blocks or functions based on context and comments, while ChatGPT and Claude excel at generating code from detailed descriptions or even debugging existing programs. These initial successes highlight the potential for LLM-based agents to move beyond simple assistance, offering developers a collaborative partner capable of handling complex tasks and fostering a more iterative and efficient workflow.

Recent agentic tools, such as Claude Code, point to a shift from simple assistance toward autonomous task execution. These systems aren’t merely responding to prompts; they are capable of independently breaking down complex goals into manageable steps, allocating subtasks, and coordinating their efforts to achieve a desired outcome. This capability unlocks the potential for truly collaborative workflows, where multiple agents work in concert, leveraging each other’s strengths and compensating for weaknesses. Crucially, such interactions aren’t pre-programmed; instead, they give rise to emergent behavior: novel and unexpected solutions arising from the interplay of individual agents, suggesting a future where AI systems can tackle problems in ways their creators hadn’t explicitly envisioned.
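To make the decompose-allocate structure concrete, the following sketch assigns already-decomposed subtasks to agents by declared skill, picking the least-loaded capable agent each time. It is deliberately the opposite of emergent coordination: the decomposition is hard-coded (in a real system a language model would produce it) and the allocation rule is fixed. `Agent`, `allocate`, and the skill labels are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    name: str
    skills: set[str]
    load: int = 0  # number of subtasks assigned so far


def allocate(subtasks: list[tuple[str, str]], agents: list[Agent]) -> dict[str, str]:
    """Assign each (subtask, required_skill) to the least-loaded agent with that skill."""
    assignment: dict[str, str] = {}
    for subtask, skill in subtasks:
        capable = [a for a in agents if skill in a.skills]
        if not capable:
            raise ValueError(f"no agent can handle {subtask!r} (needs {skill!r})")
        chosen = min(capable, key=lambda a: a.load)  # ties go to the first listed agent
        chosen.load += 1
        assignment[subtask] = chosen.name
    return assignment


agents = [Agent("coder", {"code", "test"}), Agent("reviewer", {"review", "test"})]
plan = [
    ("implement parser", "code"),
    ("write unit tests", "test"),
    ("review diff", "review"),
    ("run regression tests", "test"),
]
print(allocate(plan, agents))
# {'implement parser': 'coder', 'write unit tests': 'reviewer',
#  'review diff': 'reviewer', 'run regression tests': 'coder'}
```

A fixed rule like this is easy to audit; the interpretability problem the article raises appears precisely when such rules are replaced by negotiation between agents.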

The pursuit of interpretability, as detailed in the study of agentic systems, often fixates on dissecting individual models while neglecting the emergent properties of the system as a whole. This echoes the maxim, usually attributed to Heraclitus, that “the only constant is change.” The article rightly points to the limitations of focusing solely on model explanations; a system’s behavior isn’t simply the sum of its parts, but a product of their interactions and the environment. Attempts to build ‘perfect’ architectures, seeking to eliminate all potential failure points, are ultimately futile; the system will decay, adapt, and surprise. True accountability demands a shift towards understanding causal relationships at the system level, acknowledging the inherent entropy within complex agentic deployments.

What Lies Ahead?

The pursuit of ‘interpretable’ agency feels increasingly like an exercise in post-hoc rationalization. Each explanation, a neat narrative constructed around a decision already made. The work presented here doesn’t offer a solution, naturally. It clarifies the nature of the problem: the system isn’t a collection of components to be understood, but an ecosystem evolving beyond any single architect’s vision. Attempts to ‘explain’ individual agents are akin to charting the migratory patterns of birds after the storm has already passed – interesting, perhaps, but ultimately failing to predict the next flock.

Future work will necessarily move beyond localized explanations. The focus must shift towards understanding the emergent properties of these systems, the unforeseen consequences of interaction. Causal reasoning is a start, but even causality struggles to capture the sheer messiness of a complex adaptive system. The real challenge isn’t building models that reveal behavior, but designing systems resilient to unpredictable behavior, accepting that every deployment is a small apocalypse.

One suspects that comprehensive documentation will remain a fiction. No one writes prophecies after they come true. The field will likely circle back to robust engineering – not in the sense of perfect prediction, but of graceful degradation and readily available circuit breakers. The goal shouldn’t be to understand these systems, but to live alongside them – cautiously, and with a healthy respect for their inevitable autonomy.
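A circuit breaker, in the engineering sense alluded to above, stops an agent from repeatedly invoking a failing action. The following is a minimal sketch of the common closed / open / half-open pattern, not a mechanism from the paper: after `failure_threshold` consecutive failures the breaker refuses calls until `reset_after` seconds have passed, then lets one trial call through. The class names and parameters are hypothetical, and the injectable clock exists only to keep the example deterministic.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when the breaker is open and the call is refused."""


class CircuitBreaker:
    """Closed -> open after N consecutive failures -> half-open after a cool-down."""

    def __init__(self, failure_threshold: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, action: Callable[[], T]) -> T:
        if self.opened_at is not None and self.clock() - self.opened_at < self.reset_after:
            raise CircuitOpenError("circuit open: action blocked until cool-down ends")
        # Either closed, or the cool-down has elapsed (half-open): let this call through.
        try:
            result = action()
        except Exception:
            self.failures += 1
            # A failed trial call while half-open re-opens the circuit immediately.
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0
        self.opened_at = None  # success closes the circuit
        return result


now = [0.0]  # fake clock so the example is deterministic
breaker = CircuitBreaker(failure_threshold=2, reset_after=10.0, clock=lambda: now[0])


def flaky_tool() -> str:
    raise TimeoutError("tool timed out")


for _ in range(2):
    try:
        breaker.call(flaky_tool)
    except TimeoutError:
        pass

try:
    breaker.call(lambda: "ok")
except CircuitOpenError:
    print("blocked")  # two consecutive failures opened the circuit

now[0] = 11.0  # cool-down elapsed
print(breaker.call(lambda: "ok"))  # trial call succeeds and closes the circuit
```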

Original article: https://arxiv.org/pdf/2601.17168.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-27 17:44