Author: Denis Avetisyan

New research shows that artificial intelligence can accurately simulate human behavior in group settings, revealing the subtle influences of identity and context on cooperation.

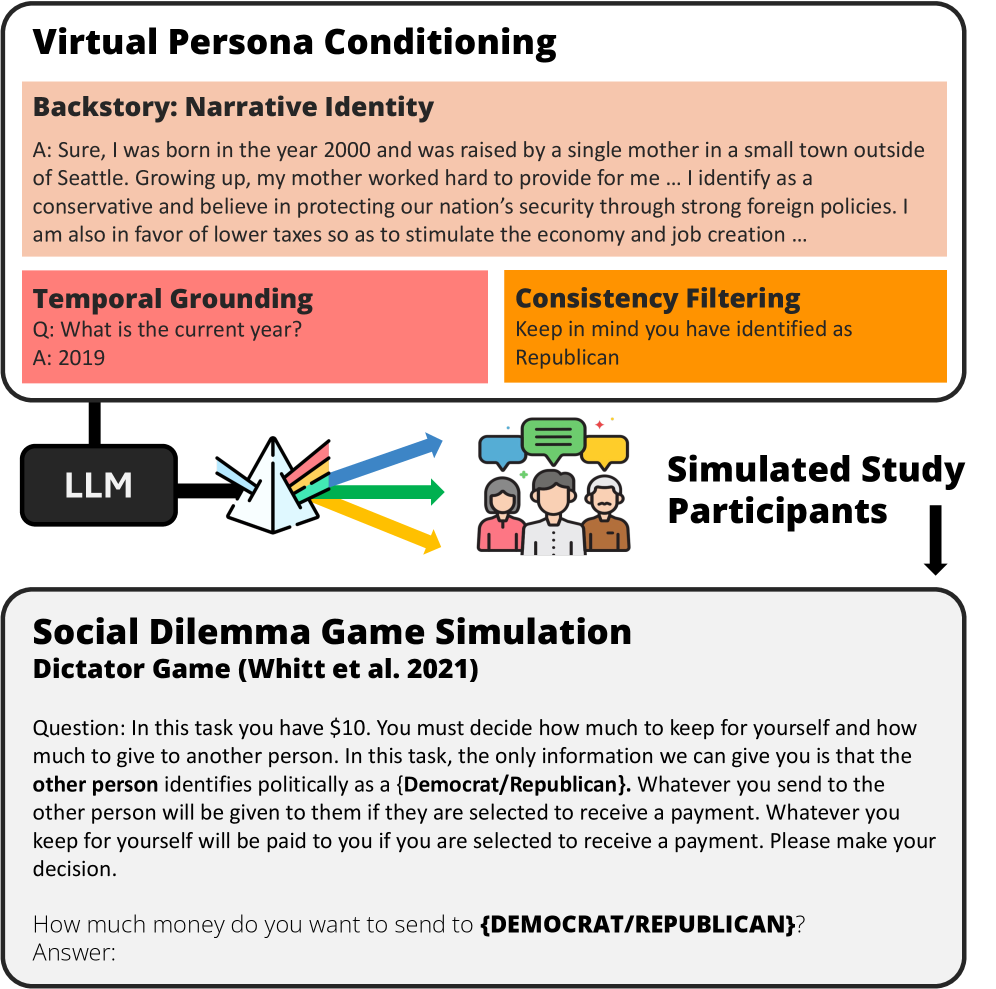

Carefully prompted large language models, leveraging techniques like temporal grounding and consistency filtering, can effectively reproduce behavioral patterns observed in social and economic games involving both real and simulated human participants.

Replicating the nuances of human behavior in social experiments is often hampered by unrecorded contextual factors and difficulties in accurately capturing individual motivations. This limitation is addressed in ‘Identity, Cooperation and Framing Effects within Groups of Real and Simulated Humans’, which investigates the capacity of large language models to simulate human decision-making in economic games. The paper’s findings indicate that deep conditioning of base models with rich narrative identities, coupled with temporal grounding and consistency filtering, significantly improves the fidelity of simulated behavior, capturing effects of social identity and question framing. Could this approach unlock new avenues for exploring the subtle drivers of cooperation and competition that are routinely lost in traditional experimental designs?

Modeling the Human Landscape: Virtual Personas and the Future of Social Science

Historically, understanding human behavior has been constrained by the practical limitations of social science research. Studies often depend on gathering data from relatively small groups, hindering the ability to confidently apply findings to broader populations. Furthermore, reliance on self-reported information introduces potential biases, as individuals may not always accurately recall experiences or provide truthful answers due to social desirability or imperfect memory. This combination of limited scope and potential inaccuracies poses significant challenges to establishing generalizable principles about the complexities of human thought and action, prompting the need for innovative research methodologies that can overcome these longstanding hurdles.

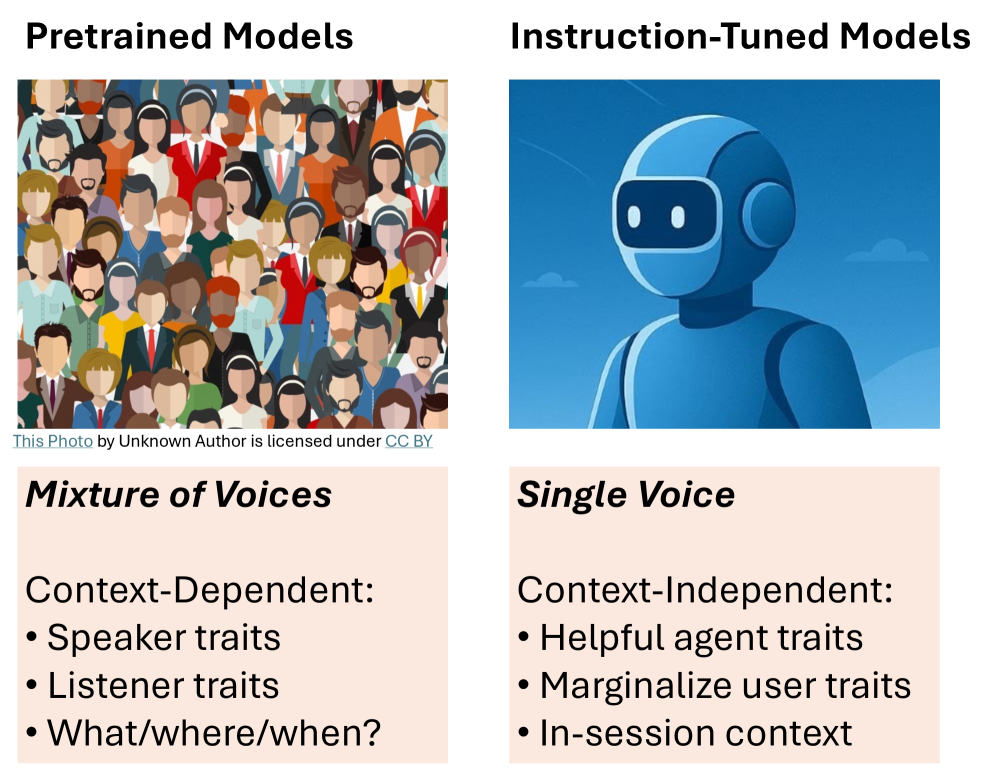

Researchers are pioneering a new methodology for social science by leveraging the power of Large Language Models to construct comprehensive life histories for virtual personas. This “backstory generation” process moves beyond simple demographic data, imbuing each virtual individual with a richly detailed past encompassing formative experiences, relationships, and personal beliefs. By prompting these models with specific parameters, investigators can create a diverse population of simulated individuals, each possessing a unique and internally consistent narrative. This approach allows for the exploration of complex social phenomena with a granularity previously unattainable, offering a powerful alternative to traditional methods reliant on limited data and self-reporting, and opening new avenues for predictive modeling and behavioral analysis.

The creation of truly representative virtual personas hinges on more than just arbitrary data points; a rigorous process of Demographic Matching is essential for building simulations that accurately reflect real-world populations. This technique ensures each virtual individual isn’t simply a collection of traits, but a statistically plausible member of a defined demographic group. By carefully aligning the generated backstories with known population characteristics – encompassing factors like age, gender, education, socioeconomic status, and geographic location – researchers can significantly enhance the fidelity of their models. The result is a more nuanced and reliable platform for studying complex human behaviors, allowing for investigations that move beyond the limitations of convenience samples and self-reported biases, and ultimately yielding insights with greater generalizability.
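The matching step described above can be illustrated with a small quota-sampling sketch. It is only one plausible reading of demographic matching, not the paper's procedure: the function name `match_demographics`, the persona dictionaries, and the target shares are all hypothetical, and real targets would come from census or survey data.

```python
import random

def match_demographics(pool, attribute, target_shares, n, seed=0):
    """Draw about n personas so that `attribute` follows target_shares.

    Hypothetical helper: quotas are rounded per group, so the sample
    size can differ slightly from n when shares do not divide evenly.
    """
    rng = random.Random(seed)

    # Group the generated personas by the attribute being matched.
    by_group = {}
    for persona in pool:
        by_group.setdefault(persona[attribute], []).append(persona)

    sample = []
    for group, share in target_shares.items():
        quota = round(share * n)
        candidates = by_group.get(group, [])
        if len(candidates) < quota:
            raise ValueError(f"not enough personas in group {group!r}")
        # Sample without replacement inside each group.
        sample.extend(rng.sample(candidates, quota))
    return sample
```

Matching on several attributes at once (for example age by education) would need joint quotas or reweighting, which this sketch leaves out.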

Simulating Decision-Making: LLM-Driven Behavioral Analysis

LLM Simulation utilizes large language models to generate responses from defined virtual personas operating within specified scenarios. These personas are assigned characteristics, backgrounds, and motivations to enable the modeling of nuanced human decision-making processes. The simulation environment provides a controlled space for observing behavioral patterns as the LLM, acting as the persona, reacts to various stimuli and tasks. This approach allows for repeatable experimentation and the isolation of specific variables influencing behavior, going beyond simple preference elicitation to encompass complex interactions and responses to dynamic situations. Data generated from these simulations consists of the persona’s expressed preferences, chosen actions, and textual justifications for those choices, providing a rich dataset for analysis.

LLM simulations facilitate the investigation of established cognitive biases by systematically varying input parameters and observing resultant preference shifts. Specifically, Framing Effects are modeled by presenting identical scenarios with differing emphases on potential gains versus losses, allowing quantification of how these alterations impact decision-making. Simultaneously, the influence of Social Identity is assessed by assigning virtual personas to specific in-group and out-group affiliations, and then analyzing the correlation between these affiliations and expressed preferences for various options; this process enables researchers to measure the degree to which group membership influences choices, independent of inherent product or service attributes. Data generated from these simulations provides quantifiable metrics regarding the prevalence and magnitude of these biases within the modeled population.
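One simple way to quantify a framing effect of this kind is the difference in mean response between two framings, checked with a permutation test. The sketch below assumes numeric responses (for example, amounts allocated) collected under a gain frame and a loss frame. The function names and the choice of test are illustrative assumptions, not the analysis used in the paper.

```python
import random
from statistics import mean

def framing_effect(gain_frame, loss_frame):
    """Mean response under the loss frame minus mean under the gain frame."""
    return mean(loss_frame) - mean(gain_frame)

def permutation_p_value(gain_frame, loss_frame, n_permutations=2000, seed=0):
    """Two-sided permutation test for the difference in means.

    Frame labels are shuffled repeatedly; the p-value is the share of
    shuffles whose absolute difference is at least the observed one.
    """
    rng = random.Random(seed)
    observed = abs(framing_effect(gain_frame, loss_frame))
    pooled = list(gain_frame) + list(loss_frame)
    k = len(gain_frame)
    hits = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        if abs(mean(pooled[k:]) - mean(pooled[:k])) >= observed:
            hits += 1
    return hits / n_permutations
```

The same two functions apply to in-group versus out-group comparisons by passing the two groups' responses in place of the two frames.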

Temporal Grounding within the LLM simulation framework establishes a defined historical context for each simulated agent and scenario. This is achieved by incorporating specific dates, events, and prevailing social norms relevant to the chosen period into the LLM’s knowledge base and prompting parameters. By anchoring agent behaviors to a particular time, the simulation moves beyond generalized responses and accounts for the influence of contemporaneous factors on decision-making. Data inputs include documented historical records, news articles, and demographic information, ensuring that simulated preferences and reactions are consistent with the specified historical setting. This contextualization improves the validity of behavioral analysis by reducing the impact of present-day biases and increasing the fidelity of the simulated environment.
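As a rough illustration of anchoring a persona to a point in time, the sketch below prepends a date line and a few period-specific context notes to a backstory before the question is asked. The prompt layout, the function name `build_grounded_prompt`, and its parameters are assumptions made for this example; the article does not give the exact prompt format.

```python
from datetime import date

def build_grounded_prompt(backstory, study_date, context_notes, question):
    """Assemble a prompt whose first lines fix the historical setting.

    Hypothetical layout: a date line, one line per context note,
    then the persona backstory, then the question to be answered.
    """
    lines = [f"Date: {study_date.isoformat()}"]
    lines += [f"Context: {note}" for note in context_notes]
    lines += ["", backstory.strip(), "", question.strip()]
    return "\n".join(lines)

# Example with placeholder content (not taken from the study).
prompt = build_grounded_prompt(
    backstory="I grew up in a small town and have voted in every election.",
    study_date=date(2016, 3, 1),
    context_notes=["A presidential primary season is under way."],
    question="How much of the $10 would you give to the other participant?",
)
```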

Economic Games and the Unveiling of Behavioral Patterns

The research employs the Dictator Game and Trust Game, established paradigms in behavioral economics, to measure prosocial behaviors. The Dictator Game assesses altruism and fairness by observing the allocation of resources from one player (the dictator) to another, with no expectation of reciprocity. The Trust Game, conversely, examines reciprocity by allowing one player to delegate resources to another, who can then choose to return a portion, revealing the level of trust and expectation of equitable behavior. Quantitative metrics derived from resource allocation in these games – specifically, the amount given in the Dictator Game and the amount returned in the Trust Game – provide standardized measures of altruism, fairness perceptions, and reciprocal tendencies within the simulated environment.
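The payoff rules of the two games are compact enough to write down directly. The sketch below follows their standard textbook form. The multiplier of 3 applied to the amount sent in the Trust Game is the conventional choice in the literature and is an assumption here, since the article does not state the value used in the study.

```python
def dictator_payoffs(endowment, given):
    """Return (dictator, recipient) payoffs for a Dictator Game."""
    if not 0 <= given <= endowment:
        raise ValueError("given must be between 0 and the endowment")
    return endowment - given, given

def trust_payoffs(endowment, sent, returned, multiplier=3):
    """Return (investor, trustee) payoffs for a Trust Game.

    The amount sent is multiplied before reaching the trustee, who
    then decides how much of it to return. multiplier=3 is an
    assumed default, not a value taken from the study.
    """
    if not 0 <= sent <= endowment:
        raise ValueError("sent must be between 0 and the endowment")
    received = sent * multiplier
    if not 0 <= returned <= received:
        raise ValueError("returned must be between 0 and the amount received")
    return endowment - sent + returned, received - returned
```

For instance, an investor who sends 5 out of 10 and gets 6 back ends with 11, while the trustee keeps 9 of the 15 received.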

Initial simulations utilizing Large Language Models (LLMs) have successfully replicated established behavioral patterns observed in economic games, specifically the Dictator Game and the Trust Game. Quantitative analysis reveals a high degree of correlation between the resource allocation decisions made by the LLM simulation and those documented in numerous human subject experiments. In the Dictator Game, the LLM consistently demonstrates a statistically significant, though limited, degree of altruism by allocating a portion of resources to recipients, mirroring human behavior. Similarly, in the Trust Game, the LLM exhibits reciprocal behavior, increasing allocations to trustees who return a higher proportion of received funds, again aligning with established human responses across multiple independent studies.

Simulations are revealing instances of co-partisan favoritism, where allocations within economic games demonstrate a bias towards individuals identified as belonging to the same political affiliation as the allocating agent. Quantitative analysis shows a strong correlation between the magnitude of these allocation differences (specifically, the disparity in resources distributed to in-group versus out-group members) and findings from original human subject studies examining partisan bias. This correlation is statistically significant across multiple simulation runs and replicates patterns observed in experiments utilizing real-world political identification, suggesting the model captures a key element of this behavioral phenomenon.
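The comparison described here comes down to two quantities: an in-group minus out-group allocation gap, and a correlation between simulated and human gaps across conditions. The sketch below shows one minimal way to compute both. The data layout and function names are hypothetical, and the paper may use a different statistic.

```python
from math import sqrt
from statistics import mean

def in_group_gap(allocations):
    """Mean amount given to co-partisans minus mean given to out-partisans.

    `allocations` is a list of (same_party, amount) pairs.
    """
    in_group = [amount for same, amount in allocations if same]
    out_group = [amount for same, amount in allocations if not same]
    return mean(in_group) - mean(out_group)

def pearson_r(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)
```

In use, `in_group_gap` would be computed once per study or condition for both the simulated and the human data, and `pearson_r` applied to the two resulting lists of gaps.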

Ensuring Rigor and Reproducibility: The Foundation of Scientific Advancement

Extended interactions within simulations are susceptible to ‘drift’: subtle, accumulated changes in an agent’s responses that lead to unrealistic or inconsistent persona behavior. Consistency Filtering counteracts this by continuously evaluating each simulated action against the established persona profile, identifying deviations and correcting them. The process does not rigidly fix responses; it steers the simulated agent back towards its defined characteristics, so that a persona’s answers at hour ten remain fundamentally aligned with those given at hour one. The technique employs a dynamic weighting system that prioritizes adherence to core beliefs and values while allowing for natural evolution within those boundaries. This balance keeps the simulation from becoming an unrecognizable caricature of its initial parameters and supports the validity of results across numerous simulated turns and complex scenarios.
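A very reduced version of this idea is a rejection filter: each candidate response is checked against the persona profile and discarded if it contradicts it. The sketch below uses hard pass/fail checks rather than the weighting scheme mentioned above. The field names (`party`, `endowment`, `stated_party`, `allocation`) and the check functions are invented for illustration and are not taken from the paper.

```python
def same_party(profile, candidate):
    """Pass if the response does not contradict the persona's party."""
    stated = candidate.get("stated_party")
    return stated is None or stated == profile["party"]

def allocation_in_range(profile, candidate):
    """Pass if the allocation is feasible given the persona's endowment."""
    return 0 <= candidate["allocation"] <= profile["endowment"]

def consistency_filter(profile, candidates, checks):
    """Keep only the candidates that pass every check against the profile."""
    return [c for c in candidates if all(check(profile, c) for check in checks)]

# Example with placeholder data.
profile = {"party": "Democrat", "endowment": 10}
candidates = [
    {"allocation": 4, "stated_party": "Democrat"},
    {"allocation": 3, "stated_party": "Republican"},  # contradicts profile
    {"allocation": 12},                               # infeasible amount
    {"allocation": 5},
]
kept = consistency_filter(profile, candidates, [same_party, allocation_in_range])
```

A softer variant could score each candidate and resample when the score falls below a threshold, which is closer in spirit to steering than to outright rejection.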

The foundation of robust scientific advancement rests upon reproducibility, and this principle guides the development of the simulation platform. The intention is not simply to generate findings, but to establish a transparent and accessible environment where any researcher can independently verify those results and extend the work further. This commitment involves meticulous documentation of the simulation parameters, algorithms, and data handling procedures, ensuring that the entire process is auditable and repeatable. By prioritizing open access and detailed methodology, the platform aims to foster collaboration and accelerate discovery, enabling the broader scientific community to build upon established foundations with confidence and contribute to a more reliable body of knowledge.

Simulations now extend beyond behavioral modeling to directly generate survey responses, enabling a powerful form of validation against empirical data. This approach revealed that the DeepBind method consistently enhances the alignment between simulated and actual human partisan divides, effectively mirroring real-world ideological gaps. Further refinement, achieved by integrating both Temporal Grounding and Consistency Filtering, yielded the most accurate replication of human behavioral patterns observed to date. Importantly, these counterfactual simulations also demonstrated the significant influence of framing effects – subtle changes in how questions are posed can demonstrably alter responses, highlighting the importance of careful experimental design and revealing the underlying cognitive processes driving opinion formation.

The study highlights how simulated agents, guided by principles of temporal grounding and consistency filtering, can mirror the nuances of human cooperative behavior. This echoes John McCarthy’s observation that, “Every worthwhile accomplishment, big or little, has its story.” Just as McCarthy suggests a narrative underlies achievement, the research reveals a structured history – the temporal grounding – that informs the agents’ decisions within repeated interactions. The effectiveness of the simulation isn’t merely about achieving cooperation, but about recreating the process of how identity and context shape those choices, creating a believable and consistent behavioral pattern.

Where Do We Go From Here?

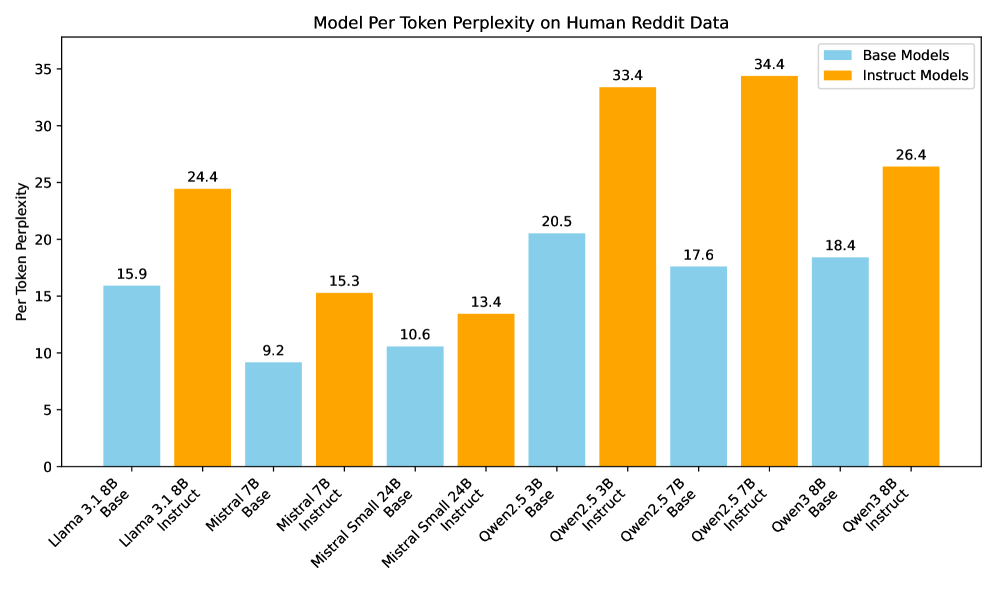

The success of simulating behavioral economics with large language models hinges, predictably, on the fidelity of the scaffolding. This work demonstrates an ability to reproduce effects (social identity, question framing), but reproduction is not explanation. The model’s internal representation of ‘identity’ remains opaque; it is a surface-level consistency, not a deeply held, emergent property. The true cost of this simulation, then, is not computational, but conceptual. Are these models illuminating the underlying mechanisms of human cooperation, or merely creating a convincing mimicry?

Future work must address the limitations inherent in prompting. The current approach relies on externally defined constraints – temporal grounding, consistency filtering – which, while effective, resemble training wheels. A more robust system would internally develop these constraints, demonstrating a genuine understanding of the game’s dynamics. The architecture itself dictates behavior, and the current reliance on external prompts suggests a fundamental limitation in the model’s ability to reason about social context without explicit direction.

Ultimately, the challenge is not to build a better simulator, but a more elegant one. Cleverness, in the form of increasingly complex prompts, will not scale. Simplicity, in the form of a parsimonious model capable of generating consistent, temporally grounded behavior, remains the most promising path. The invisible architecture is always preferable, until it breaks, revealing the brittle dependencies upon which the illusion of understanding rests.

Original article: https://arxiv.org/pdf/2601.16355.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-27 07:33