Author: Denis Avetisyan

Researchers have demonstrated an AI-driven approach to creating complete deep learning systems, from user-facing Python code to optimized GPU kernels.

![VibeTensor establishes a heterogeneous compute ecosystem-Python and Node.js frontends communicate with a central [latex]C++[/latex] core-where tensor operations, automatic differentiation, and CUDA runtime components are managed through shared resources and dynamically loaded extensions, anticipating future growth rather than rigid construction.](https://arxiv.org/html/2601.16238v1/figures/vibetorch_arch.png)

This paper details VibeTensor, a fully AI-generated deep learning system software stack validated through automated builds and tests, showcasing the potential of AI-assisted software engineering for GPU-accelerated computation.

Developing robust and efficient deep learning systems remains a significant engineering challenge, often requiring substantial manual effort and specialized expertise. This paper introduces ‘VibeTensor: System Software for Deep Learning, Fully Generated by AI Agents’, an open-source research stack demonstrating the feasibility of automatically generating a complete deep learning runtime-from Python interfaces to CUDA kernels-using LLM-powered coding agents and rigorous automated validation. The resulting system includes a PyTorch-style eager tensor library with a C++20 core, alongside features like a custom storage system and CUDA runtime, all produced without per-change manual code review. Could this approach herald a new era of AI-assisted software engineering for complex systems, and what limitations remain in scaling such automated generation processes?

The Inevitable Rigidity of Current Systems

Contemporary deep learning frameworks, while powerful, frequently struggle when confronted with the demands of modern applications-particularly those relying on dynamic graphs. Traditional systems are often optimized for static computation graphs, where the structure is fixed beforehand. This creates bottlenecks when dealing with data where relationships and connectivity change during processing, such as in areas like drug discovery, social network analysis, or complex robotics. The rigidity of these frameworks necessitates extensive pre-processing or compromises in efficiency, hindering performance and scalability. Consequently, researchers and developers find themselves constrained by tools not ideally suited to the evolving landscape of artificial intelligence, prompting the need for more adaptable and performant solutions capable of natively handling the complexities of dynamic data and computation.

VibeTensor represents a significant departure from conventional deep learning runtimes, offering a dynamically adaptable platform built to overcome the performance bottlenecks associated with increasingly complex models and data structures. This novel system leverages artificial intelligence to intelligently optimize computational graphs on-the-fly, enabling efficient execution across diverse hardware configurations. Unlike static graph frameworks, VibeTensor’s AI assistance allows it to reshape computations during runtime, accommodating dynamic graphs and irregular data patterns without substantial performance degradation. The result is a flexible, high-performance implementation capable of handling emerging workloads-such as those found in graph neural networks and reinforcement learning-with greater agility and scalability than traditional approaches. This dynamic optimization not only boosts speed but also reduces memory footprint, making VibeTensor a promising foundation for the next generation of deep learning applications.

Underlying Foundations: A Matter of Control

VibeTensor’s runtime environment is fundamentally built upon C++ to maximize both execution speed and granular control over system resources. This implementation choice enables direct memory management, optimized data structures, and low-level access to hardware capabilities. C++ facilitates the creation of a performant core capable of supporting higher-level functionalities, while also allowing for precise customization and extension of the runtime based on specific hardware and software configurations. The language’s capabilities are leveraged throughout the system, from tensor operations to the Autograd engine, ensuring consistent performance characteristics and efficient resource utilization.

VibeTensor utilizes NVIDIA’s CUDA platform to offload computationally intensive operations to the GPU, significantly accelerating tensor processing. Furthermore, it integrates DLPack, an open standard for zero-copy tensor exchange between different deep learning frameworks. This allows VibeTensor to directly consume and provide tensors to other frameworks – such as TensorFlow, PyTorch, and MXNet – without incurring the performance overhead of data copying, thereby enhancing interoperability and overall computational efficiency. DLPack enables sharing of tensor data in memory without serialization or deserialization, reducing latency and memory usage.

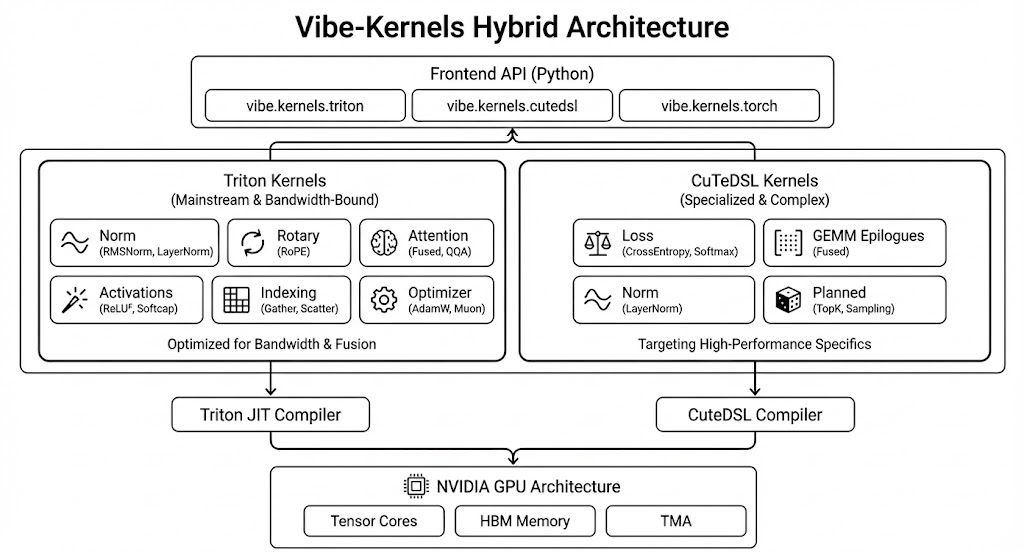

The Autograd Engine within VibeTensor facilitates automatic differentiation, crucial for training machine learning models, by constructing and optimizing computation graphs. This engine supports both forward and backward passes, enabling efficient gradient calculation. Complementing this is the Triton compiler, an accelerator that specializes in generating highly optimized GPU kernels for tensor operations. Triton allows developers to define custom operations in a Python-like syntax, which are then compiled into efficient CUDA code, bypassing the limitations of standard tensor libraries and allowing for substantial performance gains on supported hardware. The combination of the Autograd Engine and Triton compiler provides a flexible and performant framework for defining and executing complex tensor computations.

The Illusion of Optimization

VibeTensor leverages CUTLASS, a collection of CUDA kernels optimized for matrix multiplication, to significantly accelerate computationally intensive operations. This library provides highly tuned implementations for various data types and precisions, including support for Tensor Cores on NVIDIA GPUs. By integrating CUTLASS, VibeTensor bypasses general-purpose matrix multiplication routines in favor of specialized kernels, resulting in improved throughput and reduced latency for core computations. The library is designed for extensibility and allows for customization to suit specific hardware configurations and precision requirements, maximizing performance on supported NVIDIA architectures.

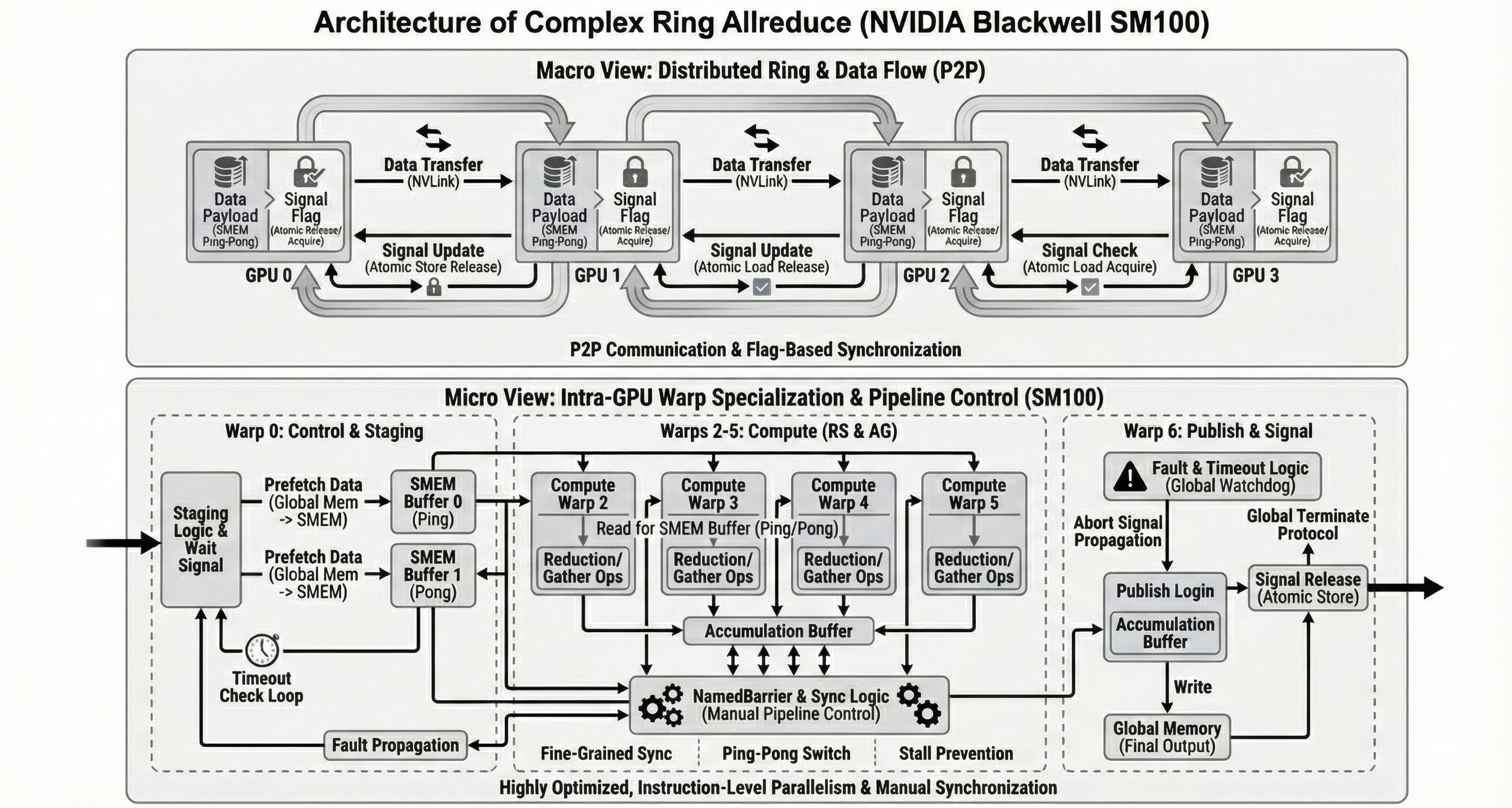

The VibeTensor Fabric subsystem is an experimental feature designed to address communication bottlenecks in multi-GPU training and inference scenarios. It implements a custom collective communication layer intended to supersede standard PyTorch collectives, specifically targeting all-reduce operations crucial for distributed deep learning. While still under development, Fabric aims to improve scalability by reducing communication overhead and increasing bandwidth utilization between GPUs, potentially enabling larger models and batch sizes to be processed efficiently. Initial evaluations focus on performance characteristics with multiple GPUs, and the subsystem is not considered fully production-ready.

VibeTensor is designed and validated for NVIDIA GPU architectures, specifically including the Hopper H100 (SM90) and Blackwell (SM103). Benchmarks demonstrate that VibeTensor’s Fused Attention implementation achieves a 1.54x speedup in the forward pass and a 1.26x speedup in the backward pass when compared to PyTorch SDPA/FlashAttention on Hopper H100 GPUs. However, performance regressions were observed with small batch sizes, resulting in 0.67x forward and 0.66x backward pass speeds compared to FlashAttention under the same conditions.

Verification and Current Limitations

VibeTensor’s functional correctness is verified through a dual-framework testing strategy. Unit and integration tests are implemented using CTests, a C++ testing framework, to validate core computational components. Complementing this, pytest, a Python-based framework, is utilized for higher-level API testing and end-to-end validation of system workflows. This combined approach ensures comprehensive test coverage, addressing both low-level performance and high-level system behavior, and facilitates continuous integration and regression testing during development.

VibeTensor incorporates an API Parity Checker to verify functional compatibility with the PyTorch framework. This checker executes a suite of tests designed to confirm that VibeTensor API calls produce equivalent outputs to corresponding PyTorch operations, ensuring a consistent user experience and facilitating migration between the two systems. The checker assesses both the numerical accuracy and data structures returned by VibeTensor functions against PyTorch baselines, identifying and flagging any discrepancies. This automated verification process is critical for maintaining interoperability and minimizing potential errors when integrating VibeTensor into existing PyTorch-based workflows.

VibeTensor is currently designed for deployment on Linux x86_64 architectures, with supported frontends implemented in both Python and Node.js. Performance evaluations indicate a current training speed disadvantage of 1.7 to 6.2 times compared to PyTorch; however, the system demonstrates scalability across multiple GPUs. Specifically, throughput scaling has been verified on Blackwell GPUs up to four GPUs utilizing a weak scaling methodology, maintaining a fixed batch size per GPU.

The pursuit of VibeTensor reveals a predictable pattern. Every line of automatically generated code, every optimized CUDA kernel, feels less like construction and more like… cultivation. The system isn’t built; it emerges. It’s a testament to the idea that complex systems aren’t designed, they’re grown. As Tim Berners-Lee observed, “The Web is more a social creation than a technical one.” This echoes the approach within VibeTensor; the AI agents aren’t simply writing code, but fostering an ecosystem of interdependent components. The validation through automated builds and tests isn’t about preventing failure, but guiding the inevitable evolution of the system. It’s a small apocalypse with every deploy, and the documentation, one suspects, will be written long after the prophecies come true.

The Silent Future

The construction of VibeTensor is not a destination, but a glimpse into the inevitable. This work doesn’t solve the problem of deep learning systems; it relocates the difficulty. The challenge shifts from writing code to cultivating the right conditions for code to emerge. Each automated build, each passing test, is less a victory over complexity and more a temporary truce. The system, once seeded, will always find novel paths to failure-paths not predicted by its creators, but inherent in the logic of eager execution itself.

The true limitation isn’t the generative capacity of the agents, but the fidelity of the feedback loop. Current validation relies on pre-defined tests, brittle approximations of a system’s potential states. A more profound investigation must focus on systems that observe themselves, internalizing their own failure modes and evolving defenses without external intervention. The goal is not flawless code, but resilient entropy.

The silence following a successful build is the most unsettling sound of all. It implies not completion, but dormancy. The system is plotting. And the question isn’t whether it will break, but when, and in what unexpected way. Debugging, then, never truly ends-only attention does.

Original article: https://arxiv.org/pdf/2601.16238.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- VCT Pacific 2026 talks finals venues, roadshows, and local talent

- EUR ILS PREDICTION

- Lily Allen and David Harbour ‘sell their New York townhouse for $7million – a $1million loss’ amid divorce battle

- eFootball 2026 Manchester United 25-26 Jan pack review

- SEGA Football Club Champions 2026 is now live, bringing management action to Android and iOS

- Will Victoria Beckham get the last laugh after all? Posh Spice’s solo track shoots up the charts as social media campaign to get her to number one in ‘plot twist of the year’ gains momentum amid Brooklyn fallout

- Vanessa Williams hid her sexual abuse ordeal for decades because she knew her dad ‘could not have handled it’ and only revealed she’d been molested at 10 years old after he’d died

- 28 Years Later: The Bone Temple’s huge Samson twist is more complicated than you think

- Simulating Society: Modeling Personality in Social Media Bots

- ‘This from a self-proclaimed chef is laughable’: Brooklyn Beckham’s ‘toe-curling’ breakfast sandwich video goes viral as the amateur chef is roasted on social media

2026-01-27 04:17