Author: Denis Avetisyan

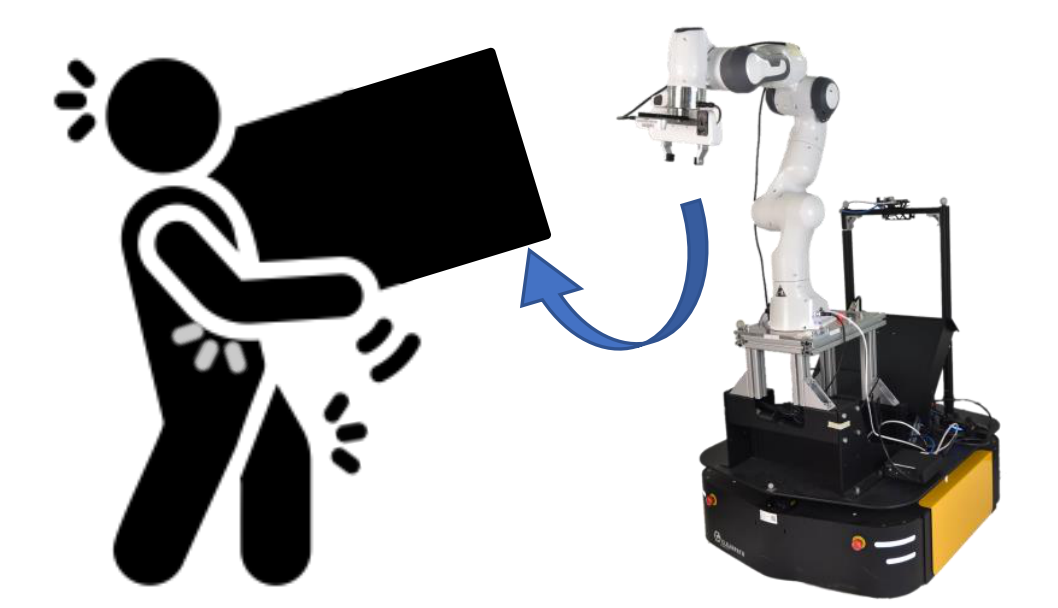

A new control framework combines the strengths of artificial intelligence and predictive algorithms to enable safer and more efficient human-robot collaboration in complex environments.

This paper introduces ARMS, an adaptive hybrid control system that seamlessly switches between reinforcement learning and model predictive control for robust and secure navigation.

Achieving truly collaborative navigation between humans and robots remains challenging due to the need for both responsive maneuverability and stringent safety guarantees. This paper introduces ‘Adaptive Reinforcement and Model Predictive Control Switching for Safe Human-Robot Cooperative Navigation’, a novel hybrid framework that seamlessly integrates learned agility with analytical safety filtering. The core innovation lies in an adaptive neural switcher which dynamically fuses reinforcement learning and model predictive control, favoring conservative behavior in high-risk scenarios while leveraging learned policies for efficient navigation in cluttered environments. Could this approach unlock more natural and robust human-robot interactions in increasingly complex real-world settings?

Navigating the Unpredictable: The Human Factor in Robotics

Conventional navigation systems, designed for static or predictable environments, face significant hurdles when operating amongst people. These systems often rely on pre-programmed maps and trajectories, which prove inadequate in the face of spontaneous human movements and unforeseen obstacles. The inherent unpredictability of human behavior (a pedestrian suddenly stepping into the path, a child chasing a ball) demands a fundamentally different approach to robotic navigation. Systems must not just react to dynamic changes but proactively anticipate them, which calls for greater adaptability and robust safety measures in human-populated spaces. This need extends beyond simple obstacle avoidance: effective navigation requires an understanding of likely human intentions and a capacity to adjust plans accordingly, a feat beyond the capabilities of many existing robotic platforms.

Conventional navigation systems frequently operate on the premise of pre-planned routes, a methodology proving increasingly inadequate in real-world settings teeming with people and unforeseen obstacles. These systems struggle when confronted with the inherent unpredictability of human movement – a pedestrian darting across a path, a sudden gathering of people, or even subtle shifts in gait indicating an intention to change direction. The rigidity of pre-defined trajectories leaves little room for adaptation, forcing robots to either halt, recalculate, or potentially collide in complex scenarios. Consequently, these limitations hinder the deployment of autonomous systems in dynamic, human-populated environments, demanding a shift toward more responsive and intelligent navigation strategies capable of accommodating the nuances of human behavior and the ever-changing landscape of shared spaces.

Current navigation systems frequently operate with a fundamental disconnect: a reliance on either pre-planned routes or purely reactive obstacle avoidance. This separation presents a significant limitation, as rigidly defined paths struggle to accommodate dynamic environments and unpredictable actors, while purely reactive systems, though adept at immediate safety, often lack the foresight needed for efficient, goal-directed movement. The challenge lies in creating a unified framework where proactive planning and reactive safety mechanisms are not simply layered on top of one another, but are intrinsically interwoven. Such an integration would allow a system to anticipate potential hazards while simultaneously pursuing a desired objective, seamlessly adjusting its trajectory based on both long-term goals and immediate environmental feedback – a crucial step toward truly adaptive and intelligent navigation.

Truly collaborative robotics necessitates navigation systems that move beyond simply reacting to human presence and instead actively predict and accommodate human intentions. Current research explores frameworks where robots don’t just sense a person’s movement, but infer their goals – are they approaching, attempting to cross a path, or simply pausing in thought? This involves leveraging machine learning techniques to model human behavior, predicting likely trajectories based on subtle cues like gaze direction, body language, and even prior interactions. By anticipating these intentions, a robot can proactively adjust its path, offering assistance, maintaining a safe distance, or seamlessly coordinating movement – creating a more natural and efficient partnership. This shift from reactive avoidance to proactive accommodation is crucial for robots operating in shared spaces, fostering trust and enabling genuinely collaborative tasks.

ARMS: A System That Learns to Anticipate, Not Just React

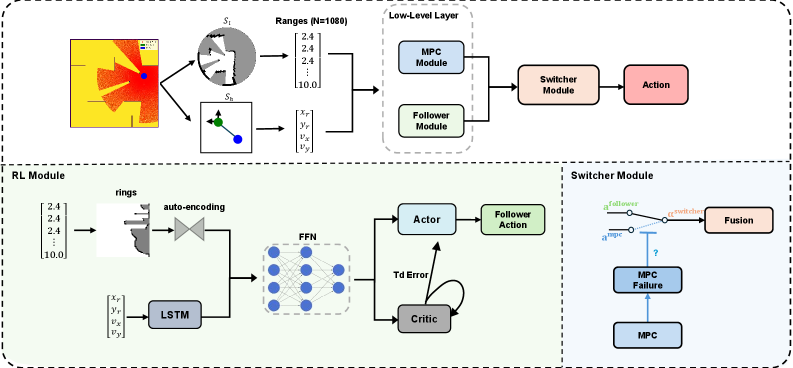

ARMS is an Adaptive Reinforcement and Model Predictive Control (MPC) Switching framework developed to enhance the robustness and efficiency of robot navigation, particularly in collaborative scenarios. The system integrates reinforcement learning (RL) – used for long-term planning and adaptation – with MPC, a control method known for its ability to satisfy constraints and guarantee stability. ARMS dynamically switches between these two control layers, allowing it to leverage the strengths of both approaches depending on the operational context and environmental conditions. This adaptive switching strategy is central to achieving reliable navigation in complex and dynamic environments where pre-programmed behaviors may be insufficient.

The ARMS framework employs a Switching Strategy to dynamically alternate control between a Reinforcement Learning (RL) policy and a Model Predictive Control (MPC) layer. This strategy doesn’t rely on a fixed schedule; instead, the system evaluates current conditions to determine which control method is optimal. The RL component utilizes a learned policy, enabling long-horizon planning and adaptation to complex scenarios, while the MPC layer provides reactive, safety-guaranteed control based on a local model of the environment. Switching occurs to capitalize on the strengths of each approach – leveraging the learned policy when appropriate and defaulting to the safety of MPC when faced with uncertainty or critical situations.

The ARMS framework combines reinforcement learning (RL) and Model Predictive Control (MPC) to achieve robust navigation. RL provides the capacity for long-term planning, enabling the robot to learn optimal strategies for reaching goals over extended horizons. However, RL policies can be susceptible to unforeseen circumstances or model inaccuracies. To mitigate these risks, ARMS integrates MPC, which guarantees stability and safety by explicitly considering system constraints and optimizing control actions over a finite prediction horizon. This combination allows the robot to benefit from the strategic planning of RL while relying on the safety-critical capabilities of MPC, particularly in dynamic or uncertain environments.

The Adaptive Reinforcement and Model Predictive Control Switching (ARMS) framework dynamically adjusts its control methodology through continuous environmental assessment. This assessment involves real-time data acquisition regarding the robot’s surroundings, including obstacle detection, path feasibility, and predicted dynamic changes. Based on this input, ARMS selects between a learned policy derived from reinforcement learning and a reactive trajectory generated by model predictive control. The switching logic is not pre-programmed but rather informed by the current environmental state, allowing the system to prioritize long-term planning in predictable environments and safety-critical reaction in dynamic or uncertain scenarios. This adaptive behavior is crucial for robust and collaborative navigation, enhancing performance and reliability across diverse operational conditions.
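The switching idea described above can be sketched in a few lines. This is a minimal illustration, not the paper's switcher: it assumes the "neural switcher" can be stood in for by a single logistic unit over some risk features, and that the two commands are blended linearly. The names `switch_weight` and `fuse_commands`, the weights, and the bias are all hypothetical.

```python
import math
from typing import Sequence, Tuple


def sigmoid(x: float) -> float:
    """Numerically stable logistic function mapping a real score to (0, 1)."""
    if x >= 0.0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def switch_weight(risk: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Return alpha in (0, 1), the share of control given to the MPC command.

    Assumption: one logistic unit replaces the learned neural switcher.
    """
    if len(risk) != len(weights):
        raise ValueError("risk and weights must have the same length")
    score = bias + sum(w * r for w, r in zip(weights, risk))
    return sigmoid(score)


def fuse_commands(u_rl: Sequence[float], u_mpc: Sequence[float], alpha: float) -> Tuple[float, ...]:
    """Blend the RL and MPC commands; alpha = 1 means pure MPC, alpha = 0 pure RL."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if len(u_rl) != len(u_mpc):
        raise ValueError("commands must have the same dimension")
    return tuple(alpha * m + (1.0 - alpha) * r for r, m in zip(u_rl, u_mpc))
```

With positive weights, a larger risk score pushes `alpha` toward 1, so the conservative MPC command dominates in high-risk situations and the learned policy dominates otherwise.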

Decoding Human Intent: The Foundation of Predictive Navigation

The observation design within the ARMS architecture utilizes a multi-modal input approach, combining data from a LiDAR sensor with temporally encoded information. Raw sensor data, specifically point clouds generated by the LiDAR, provides a direct representation of the surrounding environment. This is then integrated with output from a Long Short-Term Memory (LSTM) network. The LSTM is trained on sequences of human motion data, enabling the system to capture and represent the temporal dynamics of observed behaviors. This fusion of static environmental data and dynamic behavioral predictions creates a comprehensive observation space used for subsequent state representation and control planning.

The Long Short-Term Memory (LSTM) network within the ARMS architecture is utilized to model and predict human motion trajectories. This is achieved by training the LSTM on a dataset of historical human movement patterns, allowing it to learn temporal dependencies and anticipate future positions. Specifically, the LSTM receives sequences of human pose data as input and outputs predicted future pose estimations. This predictive capability enables the robot to proactively adjust its actions, improving its ability to navigate alongside humans and react to their intended movements before they occur, thus enhancing anticipatory behavior.
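For readers unfamiliar with LSTMs, the recurrence that carries information across a motion sequence can be written out directly. The sketch below is a generic, untrained LSTM cell in plain Python. It is not the network used in ARMS, whose layer sizes, inputs, and training data are not given here; `lstm_step`, `encode_track`, and the gate dictionary layout are illustrative assumptions.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]
Gate = Tuple[Matrix, Vector]  # (weights, bias); each weight row spans input + hidden


def _sigmoid(x: float) -> float:
    # Equivalent to 1 / (1 + exp(-x)) but cannot overflow.
    return 0.5 * (1.0 + math.tanh(0.5 * x))


def _gate(gate: Gate, z: Vector) -> Vector:
    """Affine map W z + b for one gate."""
    w, b = gate
    return [sum(wi * zi for wi, zi in zip(row, z)) + bi for row, bi in zip(w, b)]


def lstm_step(x: Vector, h: Vector, c: Vector, gates: Dict[str, Gate]) -> Tuple[Vector, Vector]:
    """One LSTM update; gates has keys 'i', 'f', 'o' (sigmoid) and 'g' (tanh)."""
    z = list(x) + list(h)
    i = [_sigmoid(v) for v in _gate(gates["i"], z)]   # input gate
    f = [_sigmoid(v) for v in _gate(gates["f"], z)]   # forget gate
    o = [_sigmoid(v) for v in _gate(gates["o"], z)]   # output gate
    g = [math.tanh(v) for v in _gate(gates["g"], z)]  # candidate cell update
    c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
    h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
    return h_new, c_new


def encode_track(track: Sequence[Vector], gates: Dict[str, Gate], hidden_size: int) -> Vector:
    """Run the cell over a sequence of observations and return the final hidden state."""
    h = [0.0] * hidden_size
    c = [0.0] * hidden_size
    for x in track:
        h, c = lstm_step(x, h, c, gates)
    return h
```

In a trained model, the final hidden state would be passed through an output layer to produce the predicted future positions; that layer is omitted here.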

The NavRep, or Navigation Representation, functions as a condensed state estimate utilized throughout the ARMS architecture. This representation distills raw sensory data and predicted human motion into a fixed-length vector, significantly reducing the computational burden for downstream modules. Specifically, both the reinforcement learning agent, responsible for high-level strategic decision-making, and the Model Predictive Control (MPC) component, handling low-level trajectory optimization, ingest NavRep as their primary state input. By providing a consistent and informative state representation, NavRep facilitates efficient learning and control, enabling ARMS to operate effectively in complex and dynamic environments.
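One simple way to picture a fixed-length state vector is shown below. It is only a sketch under stated assumptions: the LiDAR scan is reduced by taking the nearest return in each angular sector, and the result is concatenated with a human-motion code. The article does not describe how NavRep is actually constructed, so `pool_ranges`, `build_state`, the sector count, and the range cap are hypothetical.

```python
from typing import List, Sequence


def pool_ranges(ranges: Sequence[float], n_bins: int, max_range: float) -> List[float]:
    """Reduce a LiDAR scan to n_bins values: nearest return per sector, scaled to [0, 1]."""
    if n_bins <= 0 or len(ranges) < n_bins:
        raise ValueError("need at least one range reading per bin")
    if max_range <= 0.0:
        raise ValueError("max_range must be positive")
    pooled = []
    for k in range(n_bins):
        start = k * len(ranges) // n_bins
        end = (k + 1) * len(ranges) // n_bins
        nearest = min(ranges[start:end])                  # closest obstacle in this sector
        pooled.append(min(nearest, max_range) / max_range)  # clip, then normalize
    return pooled


def build_state(ranges: Sequence[float], human_code: Sequence[float],
                n_bins: int = 4, max_range: float = 10.0) -> List[float]:
    """Concatenate pooled LiDAR sectors with a human-motion code into one vector."""
    return pool_ranges(ranges, n_bins, max_range) + list(human_code)
```

Because the output length depends only on `n_bins` and the size of the motion code, both the learned policy and the MPC layer can consume the same vector regardless of the scanner's resolution.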

ARMS integrates data from multiple perceptual sources, including raw LiDAR data and predictions generated by a Long Short-Term Memory (LSTM) network processing historical human motion, to achieve environmental and intent recognition. This fusion process allows ARMS to construct a holistic representation of the robot’s surroundings, encompassing static obstacles and dynamic elements like people. Specifically, the LSTM’s predictive capabilities enable the system to anticipate human actions, providing crucial information for proactive planning and safe interaction. The combined data stream is then utilized to inform both the reinforcement learning and Model Predictive Control (MPC) components, facilitating robust and adaptable behavior in complex, human-populated environments.

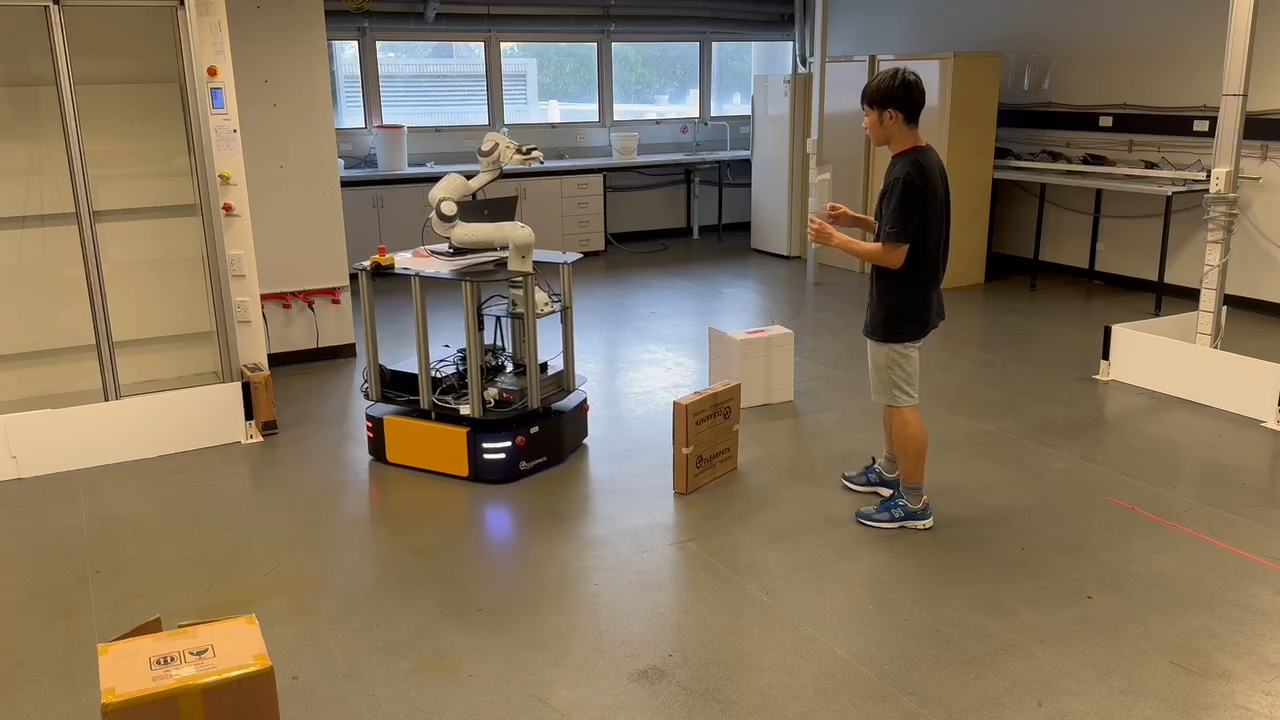

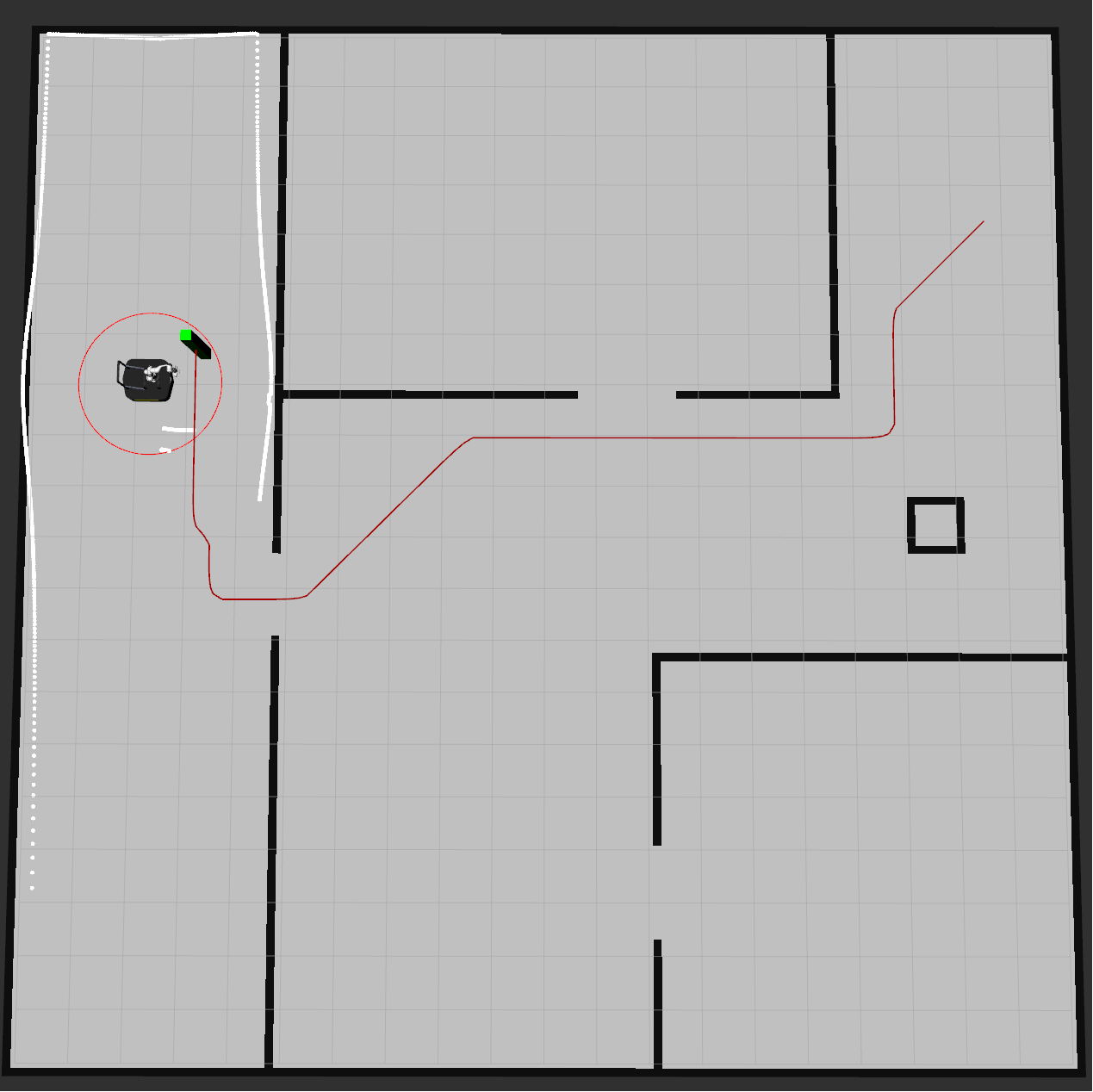

Validating Intelligence: Performance in the Crucible of Simulation

ARMS underwent rigorous validation within the Gazebo simulator, a widely adopted platform for robotics research and development. This virtual environment allowed for controlled experimentation across a spectrum of challenging navigation scenarios, including dynamic obstacles, pedestrian traffic, and tight spaces. By simulating real-world complexities, researchers could comprehensively assess ARMS’s performance without the risks and logistical constraints of physical testing. These simulations were crucial for refining the system’s algorithms and ensuring its reliability before deployment in physical environments, offering a scalable and repeatable method for evaluating the framework’s core functionalities and identifying areas for improvement.

The efficacy of ARMS was assessed through a ‘Success Rate’ metric, quantifying the robot’s ability to reliably navigate within dynamic, human-populated environments. This evaluation moved beyond simple path completion, demanding successful operation alongside human actors, a crucial requirement for real-world deployment. The system’s performance was measured by the percentage of trials where the robot reached its designated goal without collision, while simultaneously maintaining a safe and predictable trajectory relative to any present humans. High success rates demonstrated the framework’s robustness in handling the inherent uncertainties of human behavior and the complexities of shared spaces, validating its potential for seamless integration into collaborative robotics applications.
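A success rate over a finite number of trials is usually reported with an uncertainty band. The sketch below computes the rate and a 95% Wilson score interval. The article does not say which interval method the authors used, so the Wilson interval is an assumption chosen because it behaves well near 0% and 100%; the function names are illustrative.

```python
import math
from typing import Sequence, Tuple


def success_rate(outcomes: Sequence[bool]) -> float:
    """Fraction of trials that reached the goal without collision."""
    if not outcomes:
        raise ValueError("at least one trial is required")
    return sum(1 for ok in outcomes if ok) / len(outcomes)


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 for about 95%)."""
    if trials <= 0 or not 0 <= successes <= trials:
        raise ValueError("require 0 <= successes <= trials and trials > 0")
    p = successes / trials
    denom = 1.0 + z * z / trials
    centre = (p + z * z / (2.0 * trials)) / denom
    half = z * math.sqrt(p * (1.0 - p) / trials + z * z / (4.0 * trials * trials)) / denom
    return centre - half, centre + half
```

The interval narrows as the trial count grows, which is why comparisons between navigation methods are more convincing when each is run for hundreds of trials rather than a handful.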

Evaluations within simulated environments demonstrate that ARMS consistently surpasses the performance of established navigation methods when tasked with both avoiding obstacles and reaching designated goals. Specifically, ARMS achieved a significantly improved success rate in complex scenarios when contrasted with Dynamic Window Approach (DWA), a one-step Quadratic Programming (QP) baseline, and a Proximal Policy Optimization (PPO)-only follower. This enhanced performance indicates ARMS’s capacity to effectively balance safety (maintaining collision-free trajectories) with efficiency, enabling quicker and more reliable navigation in dynamic and potentially crowded spaces. The results suggest a substantial step forward in robotic navigation, particularly for applications requiring close proximity to humans and demanding reliable goal achievement.

Evaluations demonstrate that the implemented adaptive switching strategy markedly improves a robot’s capacity to handle unpredictable conditions and changing environments, thereby fostering more natural and effective human-robot interaction. This approach allows the robot to dynamically select the most appropriate control method, resulting in increased robustness during collaborative tasks. Crucially, this adaptive system achieves a 33% reduction in computational latency when contrasted with traditional multi-step Model Predictive Control (MPC) baselines, enabling quicker response times and smoother, more fluid movements during real-time interaction. The enhanced efficiency not only improves performance but also lowers the processing demands, making the framework suitable for deployment on robots with limited computational resources.

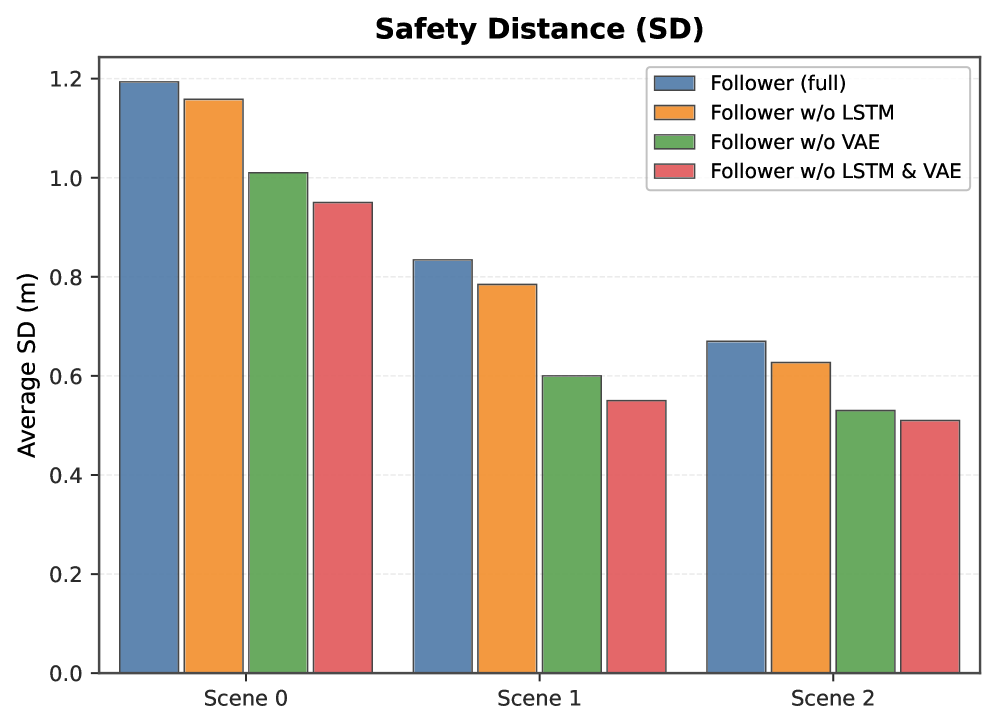

![Ablation studies in a navigation scenario demonstrate that the switcher architecture's success relies on both effective feature selection and fusion, as indicated by the 95% confidence intervals derived from 300 trials.](https://arxiv.org/html/2601.16686v1/x4.png)

Beyond Prediction: Charting a Course for Truly Collaborative Machines

ARMS is poised for advancement through the integration of increasingly nuanced human intention prediction models. Current systems often rely on simplified assumptions about a person’s goals, limiting a robot’s ability to anticipate needs and react proactively. Future iterations of ARMS will leverage techniques from machine learning, including recurrent neural networks and Bayesian inference, to build more accurate predictive models, factoring in subtle cues like gaze direction, body posture, and even physiological signals. This enhanced capability will allow the robot to not only understand what a person is doing, but also why, enabling a smoother, more intuitive collaborative experience and minimizing the risk of unintended interactions. By moving beyond reactive responses to proactive assistance, ARMS aims to become a truly intelligent partner in complex, shared workspaces.

The ARMS switching strategy will be strengthened through the incorporation of a dedicated ‘Risk Feature’ assessment module. This enhancement moves beyond simply reacting to immediate hazards; the system will proactively evaluate potential risks based on environmental observations and predicted human behavior. By quantifying factors such as proximity, the velocity of nearby humans, and the potential for collisions, ARMS can anticipate dangerous scenarios before they unfold. This allows for a more nuanced and preemptive transition between the learned policy and the MPC layer, or even an anticipatory adjustment of the robot’s trajectory. The intended result is an improvement in both operational safety and the system’s ability to maintain consistent performance in dynamic and unpredictable human-robot interaction scenarios, fostering greater trust and reliability in collaborative robotics.

To rigorously evaluate the advancements offered by ARMS, future research will benchmark its performance against a computationally efficient baseline: One-Step Model Predictive Control (MPC). This approach, which optimizes control actions for only the immediate next step, provides a valuable point of comparison due to its lower computational demands. By contrasting ARMS’s more complex, anticipatory strategies with the simplicity of One-Step MPC, researchers can precisely quantify the trade-offs between computational cost and improvements in safety, efficiency, and overall collaborative performance. This comparative analysis will not only validate the benefits of ARMS’s adaptive switching but also illuminate the minimum computational requirements for achieving robust and safe human-robot interaction, guiding the development of future collaborative robotics systems.
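To see why a one-step formulation is cheap, consider the simplest case: find the command closest to a reference command subject to a single linear safety constraint. That quadratic program has a closed-form solution, sketched below. The single half-space constraint `a · u <= b` and the name `one_step_filter` are assumptions made for illustration; the baseline's actual cost and constraints are not specified in this article.

```python
from typing import Sequence, Tuple


def one_step_filter(u_ref: Sequence[float], a: Sequence[float], b: float) -> Tuple[float, ...]:
    """Solve min ||u - u_ref||^2 subject to a . u <= b in closed form.

    If u_ref already satisfies the constraint it is returned unchanged;
    otherwise it is projected onto the constraint boundary.
    """
    if len(u_ref) != len(a):
        raise ValueError("u_ref and a must have the same dimension")
    norm_sq = sum(ai * ai for ai in a)
    if norm_sq == 0.0:
        raise ValueError("constraint normal a must be non-zero")
    violation = sum(ai * ui for ai, ui in zip(a, u_ref)) - b
    if violation <= 0.0:
        return tuple(u_ref)               # reference command is already safe
    scale = violation / norm_sq
    return tuple(ui - scale * ai for ui, ai in zip(u_ref, a))
```

A multi-step MPC must instead optimize a whole sequence of commands against a predicted trajectory at every control cycle, which is where the extra computation comes from.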

ARMS signifies an advancement in the field of human-robot collaboration, moving beyond traditional automation towards genuinely cooperative machines. This framework prioritizes not simply task completion, but the seamless and secure integration of robots into spaces designed for human activity. By continuously monitoring human behavior and proactively adapting its operational parameters, ARMS aims to anticipate and accommodate human intentions, thereby minimizing potential conflicts and maximizing efficiency. The system’s design is fundamentally rooted in the principle of shared situational awareness, allowing robots to function not as isolated agents, but as intuitive and responsive partners within complex, human-centric environments, a critical step towards realizing the long-held vision of robots working alongside people as trusted collaborators.

The pursuit of robust cooperative navigation, as demonstrated by the ARMS framework, echoes a fundamental tenet of understanding any complex system: its limits are revealed through rigorous testing. Andrey Kolmogorov observed, “The shortest way to learn is through immediate application.” This sentiment perfectly encapsulates the approach taken within the research; the adaptive switcher and safety filtering aren’t merely theoretical constructs, but components designed for real-world application and iterative refinement. By combining reinforcement learning’s exploratory power with model predictive control’s precision, the system actively ‘tests’ the boundaries of safe operation, learning through immediate feedback in dynamic, cluttered environments. The resulting hybrid control doesn’t simply predict behavior; it actively probes the code of reality, seeking vulnerabilities and strengthening resilience.

What’s Next?

The presented framework, while demonstrating a functional synthesis of learning and prediction, ultimately reveals the inherent brittleness of any system attempting to model a genuinely unpredictable partner – in this case, a human. The ‘safe’ navigation isn’t a solution, but a temporary deferral of inevitable edge cases. A bug, one might assert, is the system confessing its design sins – the assumptions embedded within the predictive model, the limitations of the reinforcement learning reward function, and the unavoidable gap between simulation and the chaotic reality of shared space. Future work isn’t about refining the switcher, but about acknowledging its fundamental inadequacy.

The true challenge lies not in predicting human behavior, but in accepting its inherent randomness. Current approaches treat deviation from expectation as an error to be mitigated. A more robust system would view such deviations as information, adapting not by correcting the human, but by refining its understanding of human unpredictability. This requires a shift from control to co-evolution – a framework where the robot learns with the human, not from a pre-defined model of the human.

Ultimately, the pursuit of ‘safe’ collaboration is a misdirection. The goal shouldn’t be to eliminate risk, but to create a system capable of gracefully handling – even benefiting from – the unexpected. The next iteration shouldn’t ask ‘how do we prevent collisions?’ but ‘how do we learn from them?’

Original article: https://arxiv.org/pdf/2601.16686.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-26 18:10