Author: Denis Avetisyan

Researchers have developed a new framework and dataset to improve how robots interpret ambiguous commands during assistive tasks, paving the way for more natural and effective human-robot collaboration.

This work introduces a multimodal dataset collected via a Wizard-of-Oz study, designed to enhance dialogue systems for assistive robots and resolve ambiguity in mobile manipulation scenarios.

Despite advances in assistive robotics, natural and intuitive control remains a significant challenge, particularly when users rely on dialogue to guide complex manipulation tasks. This is addressed in ‘A Multimodal Data Collection Framework for Dialogue-Driven Assistive Robotics to Clarify Ambiguities: A Wizard-of-Oz Pilot Study’, which introduces a novel framework and pilot dataset designed to capture the nuances of human-robot interaction during ambiguous dialogue-driven control of wheelchair-mounted robotic arms. The framework, validated through a Wizard-of-Oz study recording synchronized RGB-D video, audio, IMU data, and robot state, demonstrates its ability to elicit natural user behavior and capture diverse ambiguity types. Will this richer dataset enable the development of more robust and user-friendly ambiguity-aware assistive control systems for individuals with motor limitations?

Bridging the Gap: Towards Intuitive Robotic Assistance

Individuals with spinal cord injury often encounter substantial challenges performing everyday tasks, significantly impacting their independence and quality of life. Simple actions that are typically effortless – such as preparing a meal, dressing, or maintaining personal hygiene – can become complex and require considerable effort or assistance. These barriers extend beyond the physical limitations; they frequently encompass secondary complications like pressure sores, muscle atrophy, and psychological distress. The loss of motor function and sensory feedback disrupts the seamless coordination required for Activities of Daily Living, creating a cascade of difficulties that necessitate reliance on caregivers or specialized equipment. Consequently, research into technologies – particularly assistive robotics – aims to restore a degree of autonomy, enabling individuals to perform these essential tasks with greater ease and dignity, and fostering a sense of self-reliance despite their physical constraints.

For individuals with spinal cord injury, regaining independence often hinges on the efficacy of assistive robotic devices. However, conventional control systems, which rely on joysticks, buttons, or complex programmed sequences, present significant challenges. These methods frequently demand fine motor control and impose a cognitive load that many users find difficult, if not impossible, to manage, leading to frustration and limited real-world use. The difficulty of translating intended actions into robotic movements through these interfaces creates a disconnect that hinders the integration of assistance into daily life and undercuts the technology's potential benefits. This cumbersome interaction diminishes user agency and underscores the need for more natural and intuitive control paradigms.

The promise of assistive robotics – devices that genuinely enhance independence for individuals with limited mobility – hinges on the creation of interfaces that feel natural and responsive. Current control schemes often require specialized training or precise, deliberate commands, creating a barrier to seamless interaction. However, a truly intuitive interface transcends these limitations by allowing users to direct robotic assistance using familiar methods, such as gestures, voice commands, or even inferred intent. This shift demands advancements in areas like machine learning and sensor technology, enabling robots to not just respond to instructions, but to anticipate needs and adapt to changing circumstances. Successfully bridging this gap between human intention and robotic action is not simply a matter of technological refinement; it’s about fostering a sense of agency and control, ultimately empowering individuals to live more fulfilling and autonomous lives.

Achieving seamless assistance through robotics necessitates a shift beyond precise, pre-programmed commands; instead, systems must adeptly decipher the inherent ambiguity of everyday language. Humans rarely issue instructions with robotic precision, often relying on context, implication, and nuanced phrasing. Consequently, research focuses on developing algorithms capable of interpreting incomplete or vague requests – for example, understanding “bring me the water” without specifying which water or how it should be delivered. This demands advancements in natural language processing, allowing robots to not only parse words but also infer intent, resolve ambiguity through contextual awareness, and even anticipate user needs – ultimately creating a more fluid and intuitive interaction that feels less like controlling a machine and more like collaborating with a helpful partner.

Dialogue-Based Control: A Pathway to Natural Interaction

Dialogue-based interaction presents a viable control method for complex robotic systems, specifically offering advantages in usability for devices like wheelchair-mounted robotic arms. Traditional control schemes, such as joysticks or direct manipulation, can require significant training and fine motor skills. Conversely, dialogue-based systems utilize natural language input, allowing users to issue commands verbally. This approach lowers the barrier to entry for individuals with limited mobility or dexterity, and potentially enables more intuitive and efficient operation of the robotic arm for tasks including object manipulation, environmental interaction, and assistance with daily living activities. The feasibility of this approach is increasingly supported by advancements in speech recognition, natural language understanding, and robotic control architectures.

Dialogue-based control of robotic systems utilizes Natural Language Processing (NLP) and Large Language Models (LLMs) to convert human speech into actionable commands. The process typically involves speech recognition to transcribe spoken language into text, followed by NLP techniques such as semantic parsing and intent recognition to determine the user’s desired action. LLMs, pre-trained on vast datasets of text and code, are then employed to map the identified intent to specific robot control parameters or sequences. This translation allows users to interact with the robot using intuitive, conversational language, eliminating the need for specialized programming or direct teleoperation interfaces. The system effectively bridges the semantic gap between human commands and robotic actuators, enabling more natural and efficient human-robot interaction.
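
To make the step after speech recognition concrete, the sketch below maps a transcript to a coarse intent and target. It is an assumption-laden stand-in, not the paper's pipeline: the keyword table, the `Command` structure, and `parse_command` are hypothetical, and a real system would use an LLM or trained intent classifier where this sketch uses keyword rules.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical intent vocabulary for illustration; a deployed system would
# replace these keyword rules with an LLM or a trained intent classifier.
INTENT_KEYWORDS = {
    "pick": ("grab", "pick", "take", "get"),
    "place": ("put", "place", "drop", "set"),
    "move": ("move", "go", "bring"),
}


@dataclass
class Command:
    intent: Optional[str]  # e.g. "pick"; None if no keyword matched
    target: Optional[str]  # word after the first "the", if any
    raw_text: str          # original transcript, kept for logging


def parse_command(transcript: str) -> Command:
    """Map a speech-recognition transcript to a coarse (intent, target) command."""
    words = re.findall(r"[a-z]+", transcript.lower())
    # First intent (in dictionary order) whose keywords appear in the utterance.
    intent = next(
        (name for name, verbs in INTENT_KEYWORDS.items()
         if any(w in verbs for w in words)),
        None,
    )
    # Naive target extraction: the word right after the first "the".
    target = None
    if "the" in words:
        i = words.index("the")
        if i + 1 < len(words):
            target = words[i + 1]
    return Command(intent=intent, target=target, raw_text=transcript)


print(parse_command("Bring me the water"))
```

Note that "Bring me the water" yields a target of `water` but says nothing about which water or how to deliver it, which is exactly the gap the disambiguation step has to fill.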

Robust performance in dialogue-based robotic control is challenged by inherent ambiguities in natural language. Spatial ambiguity arises when commands lack specific location details; for example, “move the arm there” requires contextual understanding of “there”. Referential ambiguity occurs when pronouns or vague references are used, as in “put it down”, which requires the system to identify the correct referent object. Intent ambiguity involves difficulty in discerning the user’s ultimate goal from a given command; a request to “grab that” could indicate a desire to manipulate, inspect, or simply acknowledge the object. Effectively addressing these ambiguities through contextual reasoning and disambiguation techniques is crucial for reliable robotic operation.
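
A crude first pass at spotting the three ambiguity types above is cue-word matching. The cue lists and the `ambiguity_types` function are hypothetical illustrations, not the paper's annotation scheme; in practice such rules could only flag candidates for a learned or LLM-based model to confirm.

```python
import re

# Hypothetical cue lists, chosen only to mirror the examples in the text.
SPATIAL_CUES = {"there", "here", "somewhere"}
REFERENTIAL_CUES = {"it", "that", "this", "them", "one"}
VAGUE_VERBS = {"grab", "handle"}  # verbs whose goal (use, inspect, ...) is unclear


def ambiguity_types(utterance: str) -> set:
    """Return the set of ambiguity types whose cue words appear in the utterance."""
    words = set(re.findall(r"[a-z]+", utterance.lower()))
    found = set()
    if words & SPATIAL_CUES:
        found.add("spatial")
    if words & REFERENTIAL_CUES:
        found.add("referential")
    if words & VAGUE_VERBS:
        found.add("intent")
    return found


for u in ["move the arm there", "put it down", "grab that", "pick up the red cup"]:
    print(u, "->", sorted(ambiguity_types(u)))
```

A single utterance can carry more than one type: "grab that" is flagged as both referential and intent-ambiguous, which matches the way the text discusses it.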

Disambiguation within a dialogue-based control system is crucial for reliable operation, necessitating the resolution of uncertainties present in natural language input. The system must employ techniques to identify the correct interpretation of user commands when multiple possibilities exist; this includes resolving references to objects or locations – known as referential ambiguity – and determining the user’s intended goal despite potentially imprecise phrasing – intent ambiguity. Successful disambiguation relies on contextual understanding, incorporating prior interactions, environmental data, and knowledge of the robotic system’s capabilities to constrain the solution space and select the most probable action, thereby minimizing execution errors and ensuring the robot responds as intended.
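
One simple way to combine contextual signals for referent resolution is a weighted score per candidate object, with a fallback to a clarifying question when the top two candidates are too close to call. The signals (dialogue recency, distance from a pointing or gaze ray, reachability), the equal weights, and the 0.1 margin are all assumptions chosen for illustration, not values from the study.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    name: str
    mentioned_turns_ago: Optional[int]  # None if never mentioned in the dialogue
    distance_to_pointing_m: float       # distance from the user's pointing/gaze ray
    reachable: bool                     # inside the arm's workspace?


def score(c: Candidate) -> float:
    if not c.reachable:
        return 0.0  # the arm cannot act on it, so rule it out
    recency = 0.0 if c.mentioned_turns_ago is None else 1.0 / (1 + c.mentioned_turns_ago)
    proximity = 1.0 / (1.0 + c.distance_to_pointing_m)
    return 0.5 * recency + 0.5 * proximity  # equal weights (assumed)


def resolve(candidates: List[Candidate], margin: float = 0.1) -> Optional[str]:
    """Return the most probable referent, or None to trigger a clarifying question."""
    ranked = sorted(candidates, key=score, reverse=True)
    if not ranked or score(ranked[0]) == 0.0:
        return None  # nothing plausible to act on
    if len(ranked) > 1 and score(ranked[0]) - score(ranked[1]) < margin:
        return None  # top two too close to call: ask the user
    return ranked[0].name
```

Returning `None` instead of guessing is the design choice that matters here: when context cannot separate the candidates, asking "which one?" is cheaper than a wrong grasp.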

Validating Intuitive Control: A Rigorous Evaluation

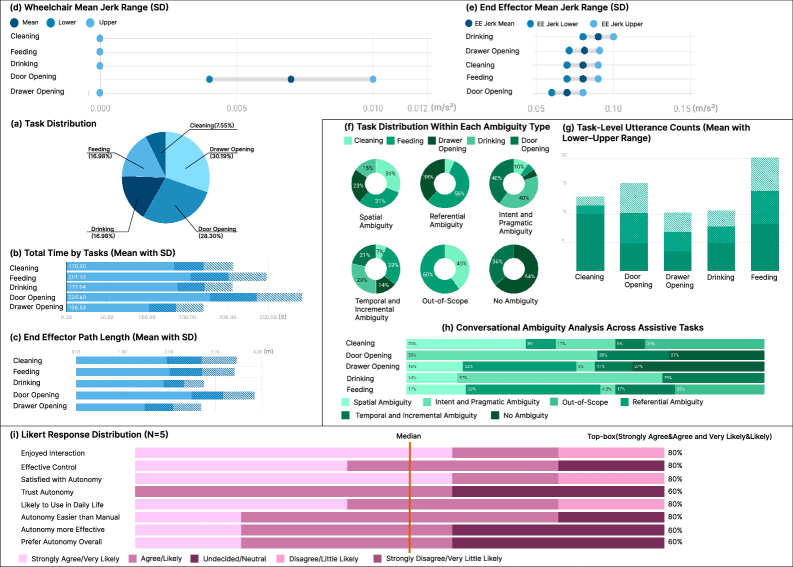

A Wizard-of-Oz protocol was utilized to collect data regarding user interaction with the robotic system and to identify areas for improvement in the dialogue system’s functionality. This involved simulating a fully autonomous system while a human operator surreptitiously controlled the robotic arm in response to user commands. Data collection consisted of 53 trials performed with a total of 5 participants, generating a multimodal dataset encompassing user utterances, corresponding robot actions, and task completion metrics. This approach allowed for the observation of natural user behavior and the identification of potential issues prior to full system automation.
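
Because the dataset combines streams recorded at different rates, each sample must be matched to its neighbors in time. The paper's synchronization method is not described here; the sketch below assumes all streams carry timestamps on a shared clock (for example, ROS 2 message header stamps) and pairs each reference timestamp with the nearest sample of another stream, rejecting matches beyond a hypothetical 50 ms tolerance.

```python
import bisect
from typing import List, Optional


def align_nearest(ref_times: List[float], stream_times: List[float],
                  max_gap: float = 0.05) -> List[Optional[int]]:
    """For each reference timestamp (s), return the index of the nearest sample
    in the sorted list stream_times, or None if it is farther than max_gap."""
    out: List[Optional[int]] = []
    for t in ref_times:
        i = bisect.bisect_left(stream_times, t)
        best = None
        # The nearest sample is either just before or at/after the insertion point.
        for j in (i - 1, i):
            if 0 <= j < len(stream_times):
                if best is None or abs(stream_times[j] - t) < abs(stream_times[best] - t):
                    best = j
        if best is not None and abs(stream_times[best] - t) <= max_gap:
            out.append(best)
        else:
            out.append(None)
    return out
```

For instance, `ref_times` could hold RGB-D frame times and `stream_times` the IMU sample times; the returned indices then select one IMU reading per video frame.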

The Wizard-of-Oz protocol presented users with what appeared to be an autonomous system. User commands were received as usual, but instead of an autonomous controller, a human operator remotely controlled the robotic arm in real time so that it responded as if acting independently. Because the operator stands in for the autonomy stack, this approach captures natural user behavior and exposes usability issues without being limited by the current capabilities of autonomous control algorithms. The collected data, pairing user input with the corresponding robot actions, was then used to refine the system’s dialogue management and control strategies.
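
A Wizard-of-Oz session is essentially a logging exercise: each user utterance is paired with what the hidden operator made the arm do. The record layout below (`WozTurn`, `WozTrial`, `summarize`) is a hypothetical sketch of such a log, not the dataset's actual schema, and the example turns are invented for illustration.

```python
import time
from dataclasses import dataclass, field
from typing import List


@dataclass
class WozTurn:
    user_utterance: str
    wizard_action: str          # what the hidden operator made the arm do
    clarification_asked: bool   # whether the "robot" asked a follow-up question
    timestamp: float = field(default_factory=time.time)


@dataclass
class WozTrial:
    participant_id: str
    turns: List[WozTurn] = field(default_factory=list)
    task_completed: bool = False  # binary completion flag, set at trial end

    def log_turn(self, utterance: str, action: str, clarification_asked: bool = False) -> None:
        self.turns.append(WozTurn(utterance, action, clarification_asked))


def summarize(trials: List[WozTrial]) -> dict:
    """Aggregate completion rate and clarification count over a set of trials."""
    n = len(trials)
    completed = sum(t.task_completed for t in trials)
    clarifications = sum(turn.clarification_asked for t in trials for turn in t.turns)
    return {
        "trials": n,
        "completion_rate": completed / n if n else 0.0,
        "clarifications": clarifications,
    }


# Hypothetical example: one completed trial with one clarification, one empty trial.
trial = WozTrial("P1")
trial.log_turn("grab that", "ask: which object?", clarification_asked=True)
trial.log_turn("the red cup", "grasp red cup")
trial.task_completed = True
print(summarize([trial, WozTrial("P2")]))
```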

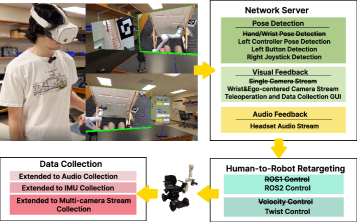

The robotic system’s architecture leveraged Robot Operating System 2 (ROS 2) for inter-process communication and device control, enabling modularity and scalability. ROS 2 facilitated the transmission of user commands and sensor data between software components. To translate high-level user-defined poses into actionable motor commands, the system implemented Inverse Kinematics (IK) solvers. These solvers calculated the required joint angles of the robotic arm to achieve the desired end-effector position and orientation, effectively bridging the gap between task-level goals and low-level motor control. The IK implementation allowed the system to navigate the workspace and manipulate objects based on user input, while respecting the arm’s kinematic constraints.
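
The paper does not specify which IK solver was used, and the Kinova Gen3 has seven joints, which usually calls for a numerical solver. As a minimal illustration of what any IK solver does, the sketch below solves the closed-form case of a planar two-link arm and returns `None` for targets outside the reachable workspace, the simplest form of respecting kinematic constraints. The link lengths and function names are hypothetical.

```python
import math
from typing import Optional, Tuple


def two_link_ik(x: float, y: float, l1: float, l2: float,
                positive_elbow: bool = True) -> Optional[Tuple[float, float]]:
    """Closed-form IK for a planar 2-link arm.
    Returns joint angles (theta1, theta2) in radians, or None if unreachable."""
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)  # law of cosines
    if c2 < -1.0 or c2 > 1.0:
        return None  # target outside the reachable annulus
    s2 = math.sqrt(1.0 - c2 * c2)
    if not positive_elbow:
        s2 = -s2  # choose the other elbow configuration
    theta2 = math.atan2(s2, c2)
    theta1 = math.atan2(y, x) - math.atan2(l2 * s2, l1 + l2 * c2)
    return theta1, theta2


def forward(theta1: float, theta2: float, l1: float, l2: float) -> Tuple[float, float]:
    """Forward kinematics, used here to check the IK solution."""
    return (l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2),
            l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2))
```

Most targets have two solutions (elbow on either side); a real solver for a redundant 7-DOF arm faces infinitely many and must pick one using extra criteria such as joint limits or distance from the current pose.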

The evaluation dataset comprised user speech captured as utterances, the robotic arm actions executed in response, and binary task completion flags. Quantitative analysis of robot motion showed a maximum wheelchair jerk below 0.3 m/s³, satisfying established comfort thresholds, and an end-effector jerk of approximately 0.1 m/s³, indicating consistently smooth trajectories. These motion metrics were computed across all 53 trials with 5 participants; they characterize smoothness and comfort, which is one input to assessing user acceptance of the control scheme.
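
Jerk is the third time derivative of position. Given uniformly sampled positions, it can be estimated with a third-order forward finite difference, as sketched below for a single axis. This is an assumed method: the paper's exact computation (filtering, axes, sampling rate) is not given here, and because repeated differentiation amplifies sensor noise, real logs would typically be smoothed first.

```python
from typing import List


def jerk_series(positions: List[float], dt: float) -> List[float]:
    """Third forward difference of 1-D positions (m) sampled every dt seconds.
    Returns jerk estimates in m/s^3."""
    if len(positions) < 4:
        return []
    return [
        (positions[i + 3] - 3 * positions[i + 2] + 3 * positions[i + 1] - positions[i]) / dt**3
        for i in range(len(positions) - 3)
    ]


def max_abs_jerk(positions: List[float], dt: float) -> float:
    """Peak jerk magnitude over the trajectory (0.0 if too few samples)."""
    j = jerk_series(positions, dt)
    return max(abs(v) for v in j) if j else 0.0
```

As a sanity check, a trajectory with constant jerk J, x(t) = J t³ / 6, gives exactly J at every step, since the third difference of a cubic is exact; a constant-velocity trajectory gives (numerically) zero.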

Real-World Impact and Future Directions

Dialogue-based control represents a paradigm shift in assistive robotics, offering a more intuitive and accessible interface than traditional methods. Rather than relying on complex joystick maneuvers or pre-programmed sequences, users direct robotic assistance through natural language instructions. The expected gain in usability comes from users being able to tell the robot what to do instead of showing it through physical controls. The benefit extends beyond ease of use: dialogue-based systems can adapt to changing environments and user needs, improving the effectiveness of robotic assistance in real-world scenarios. This is particularly impactful for individuals with limited mobility, as it reduces the cognitive load associated with operating assistive devices and promotes greater independence.

A key component of this research involved the creation of a dedicated platform for testing dialogue-based robotic control: the integration of a Kinova Gen3 robotic arm with a WHILL Model CR2 power wheelchair. This pairing represents a functional and realistic testbed, allowing researchers to evaluate the system’s performance in a scenario mirroring the needs of individuals with limited mobility. The WHILL CR2 provides a stable, independently powered base, while the Kinova Gen3 arm offers versatile manipulation capability; together they enable the assessment of complex task execution guided by natural language input. This physical setup supports rigorous evaluation of the system’s usability and effectiveness, moving beyond simulation to address the practical challenges of real-world application and paving the way for assistive technologies that integrate into daily life.

The research demonstrates a novel application of Virtual Reality (VR) teleoperation, significantly augmented by the developed dialogue-based control system. This approach allows individuals to practice and hone their robotic arm manipulation skills within a completely safe, yet remarkably immersive, virtual environment. Users can repeatedly attempt complex tasks – such as grasping objects or navigating simulated obstacles – without risk of physical harm or damage to equipment. This repeated practice, guided by the system’s conversational interface, facilitates the development of muscle memory and intuitive control strategies, ultimately improving performance when operating the robotic arm in the real world. The VR environment serves not merely as a training ground, but as a dynamic platform for refining control techniques and building user confidence before deployment in everyday scenarios.

Evaluations of the dialogue-based robotic control system revealed a high degree of user satisfaction: 80% of participants selected the most positive option on the Likert-scale questionnaires, a metric known as the “top-box” percentage. With only five participants in this pilot, the result is an early indication rather than a definitive finding, but it suggests the conversational interface offers an enjoyable and intuitive experience for users of the assistive robotic arm. Ongoing development is directed toward broadening the system’s capabilities to accommodate more intricate tasks and a wider range of user needs, with the aim of a versatile and accessible assistive technology for diverse populations.
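
The top-box percentage is simply the share of responses in the most positive category. The sketch below assumes a 5-point scale by default (the scale length is not stated here), and the example responses are hypothetical, chosen only to show how four top ratings out of five yield 80%.

```python
from typing import Sequence


def top_box_percentage(responses: Sequence[int], scale_max: int = 5) -> float:
    """Percentage of Likert responses equal to the most positive option."""
    if not responses:
        return 0.0
    return 100.0 * sum(1 for r in responses if r == scale_max) / len(responses)


# Hypothetical responses from five participants on a 5-point item.
print(top_box_percentage([5, 5, 5, 5, 4]))
```

With samples this small, each participant moves the metric by 20 percentage points, which is why a top-box figure from a five-person pilot is best read as a signal for further study.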

The presented framework prioritizes a holistic understanding of human-robot interaction, much like designing a well-functioning city. Data collection isn’t merely about recording commands; it’s about capturing the nuances of dialogue that clarify ambiguities, which is essential for assistive robotics serving individuals with motor limitations. This approach echoes Bertrand Russell’s sentiment: “The point of education is to teach people to think, not to memorize facts.” The system doesn’t aim to simply react to commands, but to understand intent, requiring a flexible infrastructure capable of evolving without a complete overhaul, mirroring a city’s ability to adapt its infrastructure without rebuilding entire blocks. The collected multimodal dataset, therefore, facilitates a deeper, more adaptable intelligence in robotic assistance.

What’s Next?

The pursuit of truly intuitive assistive robotics invariably circles back to the fundamental problem of representation. This work, while providing a valuable multimodal dataset, merely clarifies the shape of the unknown. A framework for collecting ambiguous interactions is, after all, a framework for finding more ambiguity. The system, if it looks clever, is probably fragile. The real challenge lies not in building a robot that responds to precisely defined commands, but one that gracefully navigates the inevitable imprecision of human intention.

Future iterations should resist the temptation to prematurely optimize for specific tasks. A robot adept at fetching a glass of water is still a failure if it cannot handle the unscripted request for ‘something to read’ or the subtly expressed desire for companionship. The architecture of assistance is, fundamentally, the art of choosing what to sacrifice – speed for robustness, precision for adaptability.

The field will likely shift towards methods that prioritize lifelong learning and compositional generalization. A robot that understands ‘bring me the red book’ should, without retraining, be able to infer the meaning of ‘put the blue one back.’ This requires moving beyond feature engineering and embracing representations that capture the underlying structure of human communication. Simplicity, predictably, will prove more resilient than sophistication.

Original article: https://arxiv.org/pdf/2601.16870.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-26 13:13