Author: Denis Avetisyan

A new approach combines the power of large language models with rapid, context-aware analysis to better detect and mitigate toxic behavior in live video chats.

This paper introduces ToxiTwitch, a hybrid moderation agent that leverages emote context and LLM embeddings for improved accuracy and reduced latency in toxicity detection on platforms like Twitch.

Despite the increasing sophistication of content moderation techniques, effectively policing toxic behavior in fast-paced, high-volume online environments remains a significant challenge. The paper, “ToxiTwitch: Toward Emote-Aware Hybrid Moderation for Live Streaming Platforms,” is an exploratory study focused on improving toxicity detection on Twitch by incorporating emote context. Its approach, ToxiTwitch, uses large language models to generate embeddings of both text and emotes and combines them with traditional machine learning classifiers, achieving up to 80% accuracy, a 13% improvement over baseline BERT models. Can this hybrid approach pave the way for more nuanced and scalable solutions to online toxicity, and what further challenges remain in understanding multimodal communication within live streaming platforms?

The Rising Tide of Toxicity: A System Under Strain

The vibrant communities cultivated on live-streaming platforms like Twitch are facing a growing challenge from toxic interactions. While designed to connect individuals through shared interests, these platforms often become breeding grounds for harassment, hate speech, and aggressive behavior. This isn’t simply a matter of isolated incidents; research indicates a significant and escalating prevalence of toxicity within Twitch chat, impacting both streamers and viewers alike. The very features that enable real-time interaction – the speed of chat, the anonymity afforded by usernames, and the culture of rapid-fire commentary – ironically contribute to the problem. This pervasive negativity can stifle genuine connection, drive away valuable community members, and ultimately undermine the positive experiences the platform aims to provide, necessitating innovative approaches to moderation and community management.

Current automated systems for identifying harmful language often falter when applied to the fast-moving environment of live-streaming platforms. These tools, typically trained on static datasets of text and comments, struggle with the sheer velocity of chat on platforms like Twitch – thousands of messages appearing every minute. More critically, they frequently misinterpret context, sarcasm, or emerging slang specific to online communities. The subtlety of indirect aggression, or the use of seemingly benign phrases with malicious intent, often evades detection. Consequently, a significant proportion of genuinely toxic interactions slip through the filters, while harmless messages are incorrectly flagged, creating a frustrating experience for both viewers and content creators. This inability to adapt to the dynamic and nuanced communication styles inherent in real-time chat represents a major hurdle in maintaining safe and positive online spaces.

Twitch chat presents a unique linguistic landscape that confounds conventional toxicity detection. Beyond standard text-based abuse, the platform thrives on a rapidly evolving lexicon of slang, in-jokes, and, crucially, emotes – pictorial representations used in place of, or alongside, text. These emotes, often layered with multiple meanings and cultural references, function as a complex visual language that traditional natural language processing models struggle to interpret. A seemingly innocuous image can carry veiled insults or participate in coordinated harassment campaigns, while text-based toxicity can be disguised within emote-heavy streams. Consequently, existing algorithms, trained on standard datasets, frequently misclassify benign content as harmful, or fail to recognize subtle forms of abuse embedded within this visual and textual interplay, necessitating the development of specialized models capable of understanding Twitch’s distinct communicative style.

The sustained health of online communities, particularly on platforms like Twitch, hinges decisively on effective moderation practices. Beyond simply enforcing rules, robust moderation cultivates an environment where positive interactions are not only permitted but actively encouraged, fostering a sense of belonging and psychological safety for all participants. This proactive approach moves beyond reactive punishment of toxic behavior to emphasize the amplification of constructive dialogue and the celebration of positive contributions. A well-moderated space demonstrably increases user engagement, retention, and overall well-being, transforming a potentially hostile environment into a thriving ecosystem where individuals feel empowered to connect, create, and share without fear of harassment or negativity. Consequently, investment in sophisticated moderation tools and dedicated moderation teams represents not merely a cost of doing business, but a fundamental investment in the long-term viability and success of the platform itself.

Leveraging Language Models: A Shift in Detection Paradigms

Large Language Models (LLMs) demonstrate enhanced toxicity detection capabilities due to their inherent ability to process and understand contextual information within text. Traditional toxicity detection methods often rely on keyword spotting or rule-based systems, which struggle with nuanced language, sarcasm, or evolving slang. LLMs, trained on massive datasets, learn complex relationships between words and phrases, enabling them to identify toxic behavior even when it is not explicitly stated. This contextual understanding extends to recognizing implicit threats, coded language, and subtle forms of harassment that would be missed by simpler approaches. Furthermore, LLMs can analyze the entire conversational context, considering the relationship between users and the overall topic of discussion, to more accurately assess the intent and impact of a message.

Direct application of Large Language Models (LLMs) to the high-volume, real-time stream of Twitch chat data presents significant computational challenges. LLMs, while powerful, are resource-intensive, requiring substantial GPU memory and processing power for inference. The latency associated with processing each chat message through a full LLM can exceed acceptable thresholds for real-time moderation, potentially delaying the flagging of toxic content. Furthermore, the sheer scale of Twitch – with thousands of concurrent streams and millions of daily messages – necessitates careful optimization to manage infrastructure costs and prevent service disruptions. Considerations include model quantization, distributed inference, and efficient batching strategies to achieve a balance between detection accuracy and operational feasibility.
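
As a rough illustration of the batching idea mentioned above, the following minimal sketch groups a stream of chat messages into fixed-size batches so that a model can score many messages per call. The function name `micro_batches` and the batch size of 32 are assumptions made for this example, not details from the paper.

```python
from typing import Iterable, Iterator, List


def micro_batches(messages: Iterable[str], max_batch: int = 32) -> Iterator[List[str]]:
    """Group a stream of chat messages into lists of at most max_batch items."""
    batch: List[str] = []
    for msg in messages:
        batch.append(msg)
        if len(batch) == max_batch:
            yield batch
            batch = []
    if batch:  # flush the final, possibly partial, batch
        yield batch


# Simulated chat stream of 70 messages
stream = [f"message {i}" for i in range(70)]
sizes = [len(b) for b in micro_batches(stream, max_batch=32)]
print(sizes)  # [32, 32, 6]
```

A live system would likely also flush a partial batch after a short timeout, so that messages in a quiet channel are not held back waiting for the batch to fill.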

Zero-shot toxicity classification leverages the inherent contextual understanding of Large Language Models (LLMs) to identify toxic expressions without requiring task-specific training data. This is achieved through carefully designed prompts – known as prompt engineering – which frame the toxicity detection task as a natural language inference problem. By formulating prompts that instruct the LLM to assess whether a given text contains toxic language, the model can generalize to previously unseen toxic expressions and variations. The effectiveness of zero-shot classification is directly correlated with the quality and specificity of the prompt, requiring iterative refinement to optimize performance and minimize false positives or negatives. This approach contrasts with traditional supervised learning methods that necessitate labeled datasets for each specific type of toxicity.
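
To make the prompting idea concrete, here is a minimal sketch of how a zero-shot prompt might be assembled and how a model's free-text answer might be mapped to a label. The prompt wording, the label names `TOXIC` and `NOT_TOXIC`, and both function names are assumptions for illustration; the paper's actual prompts are not reproduced here, and the call to the model itself is deliberately left out.

```python
def build_zero_shot_prompt(message: str) -> str:
    """Frame toxicity detection as a one-word question for an LLM."""
    return (
        "You are a chat moderator for a live-streaming platform.\n"
        "Decide whether the following chat message is toxic.\n"
        "Answer with exactly one word: TOXIC or NOT_TOXIC.\n\n"
        f"Message: {message}\n"
        "Answer:"
    )


def parse_label(completion: str) -> bool:
    """Return True only if the completion's first word is TOXIC.

    Empty or unexpected completions are treated as not toxic.
    """
    words = completion.strip().upper().split()
    return bool(words) and words[0].strip(".,!") == "TOXIC"


print(build_zero_shot_prompt("gg ez"))
print(parse_label(" toxic."))    # True
print(parse_label("NOT_TOXIC"))  # False
```

Constraining the answer to a single word keeps parsing simple, which matters when the prompt is refined iteratively and the outputs need to be compared across versions.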

Combining Large Language Model (LLM) embeddings with lightweight classifiers addresses the computational demands of real-time toxicity detection. LLMs generate dense vector representations – embeddings – that capture semantic meaning from text. These embeddings, rather than the raw text, are then used as input features for simpler, faster classifiers such as logistic regression or support vector machines. This approach reduces inference time and resource consumption compared to directly utilizing the LLM for classification, while still leveraging the LLM’s ability to understand nuanced language and generalize to unseen toxic expressions. The lightweight classifier learns to map these LLM-generated embeddings to toxicity labels, offering a scalable solution for high-volume data streams like those found on Twitch.
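
The division of labor described above can be sketched in a few lines. In this example the embeddings are tiny hand-written vectors standing in for LLM output, and the lightweight classifier is a plain logistic regression trained with stochastic gradient descent. All values and function names are assumptions for illustration, not the paper's data or implementation.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_logreg(
    X: Sequence[Sequence[float]], y: Sequence[int], lr: float = 0.5, epochs: int = 200
) -> Tuple[List[float], float]:
    """Fit logistic regression weights with per-sample gradient descent."""
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
            err = p - yi  # gradient of the log loss with respect to the logit
            w = [wj - lr * err * xj for wj, xj in zip(w, xi)]
            b -= lr * err
    return w, b


def predict_proba(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Probability that an embedding belongs to the toxic class."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Toy 3-dimensional "embeddings" (stand-ins for real LLM vectors); 1 = toxic
X = [[0.9, 0.1, 0.8], [0.8, 0.2, 0.9], [0.1, 0.9, 0.2], [0.2, 0.8, 0.1]]
y = [1, 1, 0, 0]

w, b = train_logreg(X, y)
print(predict_proba(w, b, [0.85, 0.15, 0.9]) > 0.5)  # toxic-like vector
print(predict_proba(w, b, [0.15, 0.85, 0.1]) > 0.5)  # benign-like vector
```

At inference time only the embedding lookup and one dot product are needed per message, which is where the latency savings over full LLM classification would come from.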

Introducing ToxiTwitch: A Hybrid Architecture for Enhanced Moderation

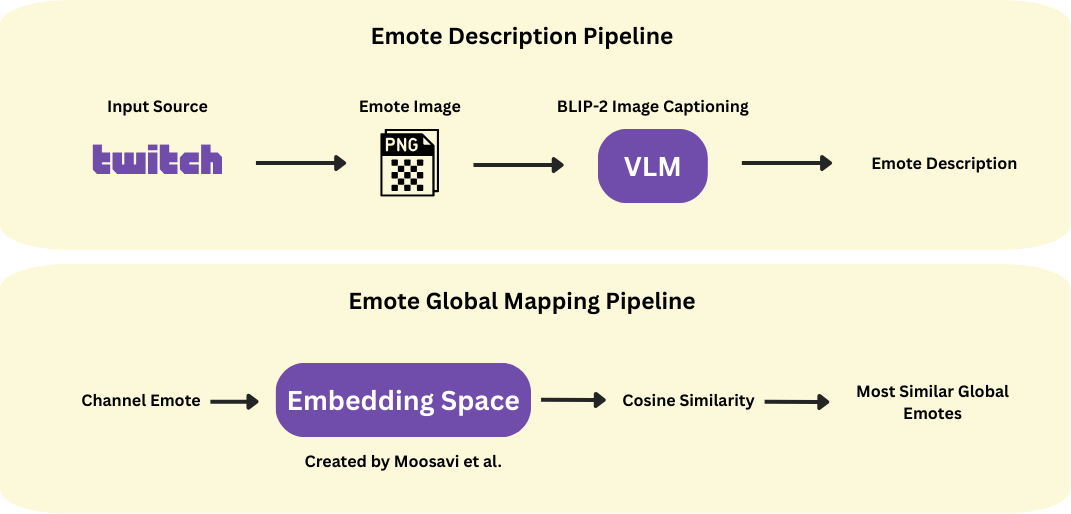

ToxiTwitch utilizes a hybrid architecture by combining Large Language Model (LLM)-generated contextual embeddings with traditional machine learning classifiers. Specifically, textual input is processed by an LLM to create numerical vector representations capturing semantic meaning. These embeddings are then fed as features into classifiers such as Random Forest and Support Vector Machine (SVM) for toxicity detection. This approach leverages the LLM’s ability to understand language nuance while benefiting from the efficiency and interpretability of established classification algorithms. The resulting model aims to improve upon the performance of end-to-end LLM solutions and other baseline toxicity detectors.

Emote Embedding within ToxiTwitch addresses the limitations of text-only toxicity detection by converting visual emote data into numerical vector representations. This is achieved through a process of feature extraction from emote images, generating a fixed-length vector that captures the visual characteristics of each emote. These vectors are then incorporated alongside text embeddings as input features for the classification models, allowing the system to analyze the contextual meaning conveyed by both textual content and visual cues. The resulting numerical representation enables quantitative analysis of emotes, effectively translating visual information into a format compatible with machine learning algorithms and enhancing the model’s understanding of communication context.

ToxiTwitch enhances contextual understanding by integrating textual data with emote embeddings, which translate visual cues into numerical vectors. This approach moves beyond solely analyzing text, recognizing that emotes frequently contribute significant semantic meaning in online communication. By representing both modalities as numerical data, ToxiTwitch facilitates a more comprehensive assessment of message intent and toxicity, capturing nuances often missed by text-only models. The model then utilizes these combined embeddings to provide a richer, more accurate interpretation of the communication context, leading to improved performance in identifying harmful content.
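
One simple way to combine the two modalities is to average the vectors of the emotes found in a message and append that average to the text embedding. The sketch below assumes this mean-pooling-plus-concatenation scheme, a hypothetical `EMOTE_VECTORS` lookup table, and made-up vector values. The paper may combine the features differently.

```python
from typing import Dict, List, Sequence

EMOTE_DIM = 3

# Hypothetical lookup table: emote name -> precomputed embedding vector
EMOTE_VECTORS: Dict[str, List[float]] = {
    "Kappa": [0.25, 0.75, 0.0],
    "LUL": [0.75, 0.25, 0.5],
}


def mean_emote_vector(tokens: Sequence[str]) -> List[float]:
    """Average the vectors of all known emotes; zeros if none are present."""
    vecs = [EMOTE_VECTORS[t] for t in tokens if t in EMOTE_VECTORS]
    if not vecs:
        return [0.0] * EMOTE_DIM
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def combine_features(text_vec: Sequence[float], tokens: Sequence[str]) -> List[float]:
    """Concatenate a text embedding with the pooled emote embedding."""
    return list(text_vec) + mean_emote_vector(tokens)


# text_vec stands in for an LLM text embedding of the message
features = combine_features([1.0, 0.0], "nice play Kappa LUL".split())
print(features)  # [1.0, 0.0, 0.5, 0.5, 0.25]
```

Using a zero vector for messages without emotes keeps the feature length fixed, so the same classifier can score both emote-heavy and text-only messages.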

Performance validation of ToxiTwitch demonstrated a peak F1-score of 0.79 with an inference time of 60 milliseconds per message. This performance was established through comparative analysis against baseline models including Detoxify, HateSonar, and DistilBERT-ToxiGEN. HateSonar achieved an approximate F1-score of 0.68 in the same testing environment, indicating a measurable improvement with the ToxiTwitch architecture. These results were obtained through rigorous testing procedures designed to evaluate both accuracy and processing speed.
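
For readers less familiar with the metric, the F1-score is the harmonic mean of precision and recall, which can be computed directly from counts of true positives, false positives, and false negatives. The counts in this short sketch are illustrative only and are not taken from the paper's evaluation.

```python
from typing import Tuple


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Compute precision, recall, and F1 from confusion-matrix counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0  # equals 2PR / (P + R)
    return precision, recall, f1


# Illustrative counts only, not results from the paper
p, r, f1 = precision_recall_f1(tp=80, fp=20, fn=20)
print(f"precision={p:.2f} recall={r:.2f} f1={f1:.2f}")
# precision=0.80 recall=0.80 f1=0.80
```

Because F1 penalizes both missed toxic messages and wrongly flagged benign ones, it is a more informative summary than accuracy when toxic messages are a small share of the chat.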

Beyond Detection: Deepening Contextual Understanding for Proactive Moderation

ToxiTwitch’s effectiveness relies heavily on its ability to decipher the distinct linguistic landscape of the Twitch platform, a space where text is often interwoven with a vibrant tapestry of emotes. These aren’t merely graphical substitutes for words; channel-specific and global emotes function as a complex, rapidly evolving shorthand, conveying nuance, intent, and emotional context often lost in traditional text analysis. The system doesn’t just identify potentially toxic words, but learns to interpret the meaning embedded within these visual cues, understanding that a specific emote can completely alter the sentiment of a message. Successfully capturing this unique communication style is paramount, as a failure to recognize emote-driven meaning would lead to both false positives – incorrectly flagging harmless banter – and false negatives, allowing genuine toxicity to slip through moderation filters.

To truly grasp the nuances of online communication on platforms like Twitch, the system employs a technique called Emote-Enabled Prompting. This method goes beyond simply identifying toxic words; it actively enriches the prompts fed to the Large Language Model (LLM) with contextual information derived from emotes. By incorporating the meaning and common usage of these visual cues – which often carry significant emotional weight and platform-specific slang – the LLM develops a more comprehensive understanding of the message’s intent. This allows for a more accurate assessment of toxicity, accounting for sarcasm, inside jokes, and the subtle emotional undercurrents frequently expressed through emotes, ultimately leading to more effective and contextually appropriate moderation decisions.
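
A minimal sketch of what such prompt enrichment could look like follows. The `EMOTE_GLOSSARY` table, its descriptions, the prompt wording, and the function name are all assumptions for illustration; the paper's actual prompt template is not reproduced here.

```python
from typing import Dict

# Hypothetical glossary of emote meanings; a real one would be curated per platform
EMOTE_GLOSSARY: Dict[str, str] = {
    "Kappa": "signals sarcasm or trolling",
    "LUL": "signals laughter",
}


def build_emote_prompt(message: str) -> str:
    """Build a toxicity prompt that explains each known emote in the message."""
    seen = dict.fromkeys(message.split())  # de-duplicate while keeping order
    notes = [f"- {tok}: {EMOTE_GLOSSARY[tok]}" for tok in seen if tok in EMOTE_GLOSSARY]
    context = "\n".join(notes) if notes else "- (no known emotes)"
    return (
        "Decide whether the chat message below is toxic.\n"
        "Emotes used in the message and their usual meaning:\n"
        f"{context}\n\n"
        f"Message: {message}\n"
        "Answer with TOXIC or NOT_TOXIC:"
    )


prompt = build_emote_prompt("nice one Kappa Kappa")
print(prompt)
```

Listing each emote once, with a short description, gives the model the context it needs without inflating the prompt for emote-spam messages.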

The foundation of ToxiTwitch’s ability to discern nuanced toxicity within the Twitch ecosystem relies heavily on meticulously curated, human-annotated data. A dedicated team of trained annotators carefully labeled a substantial corpus of Twitch chat logs, identifying instances of toxic behavior while also accounting for the platform’s unique communication style – including the proper interpretation of frequently used emotes and slang. This process wasn’t simply about flagging offensive language; annotators provided granular labels detailing the type of toxicity, its target, and the context surrounding the message. The resulting high-quality labeled dataset served as the crucial training ground for the language model, enabling it to learn the subtle indicators of harmful interactions and ultimately achieve a more sophisticated understanding of online toxicity beyond simple keyword detection. This human-in-the-loop approach proved essential in establishing a robust and reliable system for content moderation.

The ToxiTwitch model, leveraging a Random Forest algorithm applied to both Llama text data and Emote-Gram Matrix (EGM) representations, achieved an accuracy of 0.86 and a recall of 0.78 in identifying harmful content. This performance extends beyond simply flagging overtly toxic phrases; the model demonstrates a nuanced comprehension of context, recognizing how emotes and channel-specific language contribute to the overall meaning and potentially alter the intent behind a message. Consequently, moderation strategies can move beyond blunt censorship towards more sophisticated interventions, addressing harmful behavior while preserving constructive dialogue and the unique culture of individual Twitch channels. The ability to discern subtle cues enables a more precise and effective approach to maintaining a positive and inclusive online environment.

The Future of Real-Time Moderation: Expanding Horizons Beyond Detection

ToxiTwitch marks a considerable advancement in the ongoing challenge of maintaining positive online interactions, specifically within live-streaming platforms like Twitch. This novel system utilizes machine learning to detect toxic comments in real-time, going beyond simple keyword filtering to analyze contextual cues and nuanced language. Unlike previous moderation techniques, ToxiTwitch aims to identify subtle forms of harassment that often slip through traditional filters, thereby creating a more proactive defense against online abuse. The system’s architecture allows for rapid assessment of chat messages, enabling moderators to quickly address problematic behavior and foster a more inclusive environment for both streamers and viewers. Early trials suggest a significant improvement in the speed and accuracy of identifying and flagging toxic content, representing a crucial step towards scalable, effective, and automated moderation solutions for the ever-growing world of online broadcasting.

Current automated moderation systems often struggle with nuanced online communication, frequently missing subtle cues indicating toxicity or misinterpreting sarcasm as genuine hostility. Future development of models like ToxiTwitch prioritizes enhancing this contextual understanding. Researchers are exploring advanced natural language processing techniques, including improved sentiment analysis and the incorporation of pragmatic cues, to better detect veiled threats, ironic statements used to harass, and other forms of indirect aggression. This involves training the model on increasingly complex datasets that reflect the multifaceted nature of online discourse, ultimately aiming for a system that can differentiate between playful banter and genuinely harmful communication with greater accuracy and minimize false positives – a crucial step toward fostering healthier online interactions.

The current iteration of ToxiTwitch demonstrates promising results within the English-language Twitch environment, but its long-term efficacy hinges on scalability beyond these initial parameters. Expanding the model’s linguistic reach is a primary focus, requiring substantial datasets in diverse languages to accurately identify and flag toxic content that varies significantly across cultural contexts. Furthermore, adapting the model to function seamlessly across multiple online platforms – including those with different content formats and community guidelines – presents a considerable technical challenge. Successfully achieving this broader compatibility will not only amplify the model’s protective reach, safeguarding a far greater number of online users, but also establish a versatile framework for fostering safer digital spaces universally.

The development of tools like ToxiTwitch points towards a broader ambition: fostering digital spaces where participation isn’t curtailed by harassment or abuse. This pursuit recognizes that online communities thrive when individuals feel safe expressing themselves and engaging with others, regardless of background or identity. Creating truly inclusive environments requires proactively addressing toxic behavior, not simply reacting to it after harm has occurred. The ultimate aim extends beyond mere content filtering; it envisions a paradigm shift where preventative measures and community support systems work in concert to cultivate respectful interactions and empower all users to contribute positively, thereby unlocking the full potential of online connection and collaboration.

ToxiTwitch demonstrates a commitment to holistic system design, mirroring the belief that structure dictates behavior. The paper’s hybrid approach, which integrates Large Language Models with lightweight machine learning, isn’t merely about achieving higher accuracy in toxicity detection, but about crafting a system where each component informs the other. This echoes a core tenet of robust engineering: understanding how individual parts contribute to emergent behavior is crucial. As Linus Torvalds famously stated, “Talk is cheap. Show me the code.” ToxiTwitch doesn’t just theorize about contextual reasoning; it implements it, showcasing how emote awareness can refine toxicity detection and reduce latency, providing a practical demonstration of elegant system architecture.

Beyond the Twitch: Charting a Course for Adaptive Moderation

The pursuit of automated content moderation, as demonstrated by ToxiTwitch, perpetually encounters the inherent messiness of natural communication. The system’s reliance on embedding spaces and lightweight models represents a pragmatic concession – a recognition that comprehensive understanding is an asymptotic goal. Future work must address the fragility of these embeddings when confronted with novel slang, evolving emote meanings, and the deliberate obfuscation employed by those seeking to bypass detection. The architecture, while efficient, still treats context as a relatively static feature; a truly resilient system will need to model the dynamics of conversation – the shifts in meaning that occur over time.

A critical, often overlooked, aspect is the inherent subjectivity of ‘toxicity’ itself. Definitions vary across communities, and what is considered acceptable banter in one space may be deeply offensive in another. The field risks ossifying biases into algorithmic rules if it fails to incorporate mechanisms for community-specific adaptation and nuanced judgment. The challenge lies not simply in detecting harmful content, but in understanding why it is considered harmful within a given context.
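
One modest form of community-specific adaptation is to keep the classifier shared but let each channel set its own flagging threshold on the toxicity score. The sketch below assumes hypothetical channel names and threshold values and is not something the paper describes.

```python
from typing import Dict

DEFAULT_THRESHOLD = 0.5

# Hypothetical per-channel settings chosen by each community's moderators
CHANNEL_THRESHOLDS: Dict[str, float] = {
    "casual_banter_channel": 0.8,    # tolerates rougher humor
    "family_friendly_channel": 0.3,  # flags more aggressively
}


def should_flag(channel: str, toxicity_score: float) -> bool:
    """Flag a message if its score reaches the channel's threshold."""
    threshold = CHANNEL_THRESHOLDS.get(channel, DEFAULT_THRESHOLD)
    return toxicity_score >= threshold


print(should_flag("casual_banter_channel", 0.6))    # False
print(should_flag("family_friendly_channel", 0.6))  # True
```

A threshold only shifts how strict a channel is; it does not capture why something is harmful in a given community, which is the harder problem raised above.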

Ultimately, the success of these systems will be measured not by their precision and recall, but by their ability to foster genuinely healthy online communities. This requires a shift in focus – from policing content to cultivating environments where constructive dialogue can flourish. The most elegant solution may not be a more powerful algorithm, but a more thoughtfully designed social structure.

Original article: https://arxiv.org/pdf/2601.15605.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-26 06:23