Author: Denis Avetisyan

Researchers are leveraging subtle skin deformations on the back of the hand to dramatically improve the accuracy of hand tracking in first-person views.

DeltaDorsal utilizes dorsal skin features to overcome self-occlusion challenges and enhance hand pose estimation in egocentric vision systems.

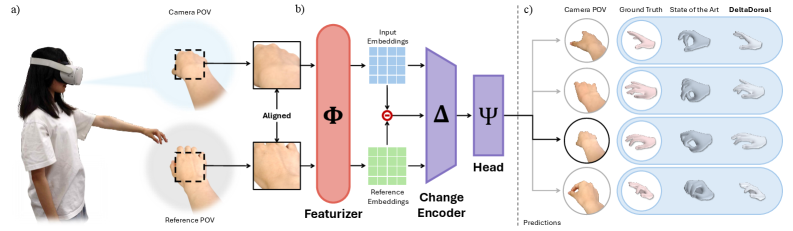

Accurate hand pose estimation from egocentric views remains a challenge despite the growing prevalence of XR devices, largely due to frequent self-occlusions. The paper ‘DeltaDorsal: Enhancing Hand Pose Estimation with Dorsal Features in Egocentric Views’ introduces an approach that leverages subtle deformations in the dorsal skin to improve pose accuracy. By employing a dual-stream delta encoder that contrasts dynamic and relaxed hand states, the authors report an 18% reduction in Mean Per Joint Angle Error (MPJAE) in heavily occluded scenarios, outperforming methods reliant on full hand geometry and larger models. Could this focus on localized dorsal features unlock more robust and efficient hand tracking for nuanced interaction paradigms beyond simple gesture recognition?

The Algorithmic Imperative: Defining Accurate Hand Pose Estimation

The ability to accurately track the three-dimensional position and orientation of the human hand is becoming increasingly vital across a spectrum of technologies. Beyond simply recognizing gestures, precise hand pose estimation unlocks immersive experiences in virtual and augmented reality, allowing for natural and intuitive interaction with digital environments. This capability extends to advancements in human-computer interaction, enabling control schemes that move beyond traditional interfaces like keyboards and mice. Furthermore, applications range from remote manipulation of robotic systems – envisioning surgeons controlling instruments from a distance – to sophisticated sign language recognition and the creation of more realistic and responsive animated characters. Consequently, robust and reliable hand pose estimation is no longer a niche research area, but a foundational component for the next generation of interactive technologies.

The reliance of conventional hand pose estimation techniques on direct visual input presents significant challenges in practical applications. These methods frequently encounter difficulties when hands partially obscure themselves – a phenomenon known as self-occlusion – or when ambient lighting fluctuates. The algorithms struggle to accurately interpret the depth and position of fingers hidden from the camera’s view, leading to inaccurate pose estimations. Similarly, poor or inconsistent lighting conditions can create shadows and reflections that mislead the visual processing algorithms, further diminishing their performance. Consequently, the robustness of these traditional approaches is often compromised in real-world scenarios where unconstrained hand movements and dynamic lighting are commonplace, necessitating the exploration of alternative sensing modalities and robust algorithms.

Conventional motion capture systems, while offering precise hand tracking, present significant limitations in practical application. These systems typically rely on external sensors, specialized equipment, and controlled laboratory settings, rendering them impractical for dynamic, real-world scenarios. The need for calibration, the susceptibility to signal obstruction, and the tethered nature of the hardware impede natural movement and restrict the user to a confined space. Furthermore, the post-processing demands of raw motion capture data introduce delays, making it challenging to achieve the low-latency interaction essential for applications such as virtual reality and teleoperation. Consequently, researchers are actively pursuing markerless, vision-based alternatives that offer greater flexibility and the potential for truly unconstrained hand pose estimation.

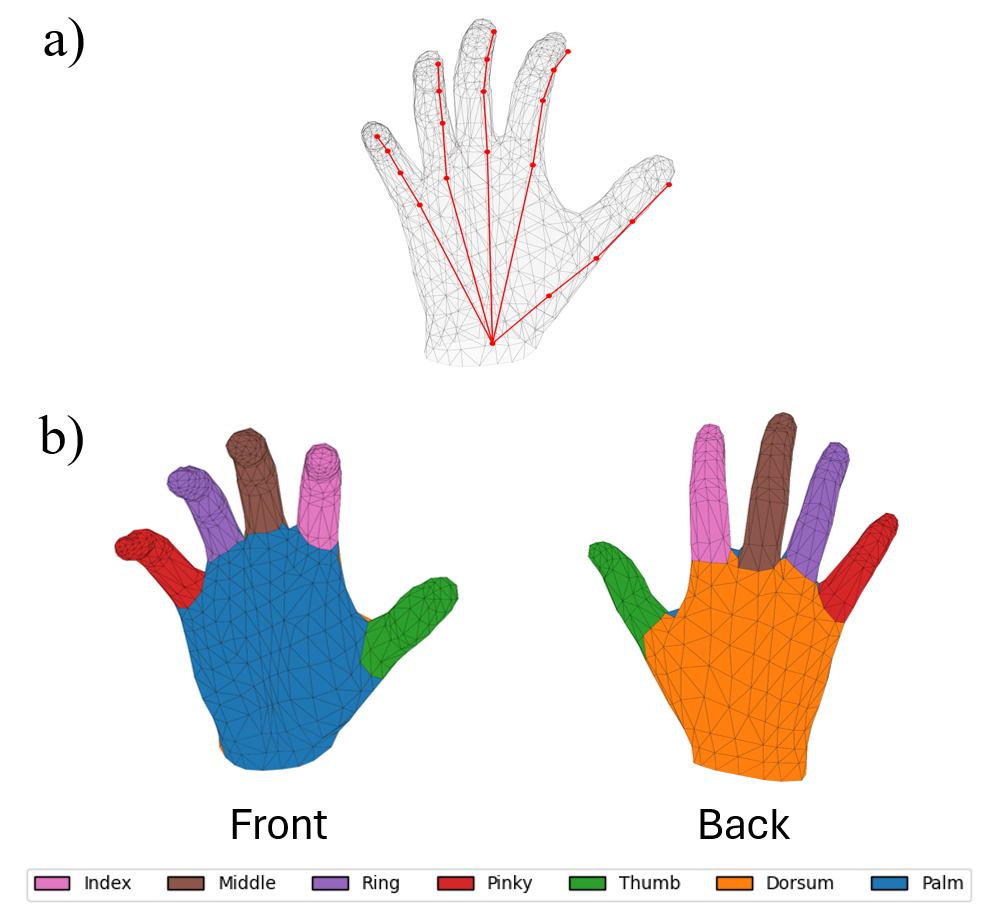

Biomechanical Certainty: Leveraging Dorsal Skin Deformation

DeltaDorsal is a system for 3D hand pose estimation that uses deformation of the skin on the dorsum, or back, of the hand as its primary signal. Rather than depending on a clear view of the full hand geometry, it analyzes how the dorsal skin stretches and wrinkles during hand movements to infer the underlying joint angles and overall hand configuration. The system is designed for an egocentric perspective, meaning data is captured as if viewed from the user’s own eyes, and relies on learned feature extraction to translate dorsal skin deformation into quantifiable pose data.

DeltaDorsal acquires its data from the user’s own viewpoint, using egocentric RGB imagery of the dorsal surface of the hand captured from a wearable device. The captured data undergoes feature extraction, including dimensionality reduction and filtering, to isolate the deformation patterns that carry biomechanical information. These extracted features represent the hand’s configuration and are then used as input to a regression model for 3D hand pose estimation. Combining an egocentric view with this feature extraction lets the system operate even when the fingers themselves are partly hidden or the visual information is noisy.

DeltaDorsal exhibits improved performance in challenging conditions because it relies on biomechanical cues derived from dorsal skin deformation. Unlike hand pose estimators that depend on seeing the fingers directly, and are therefore prone to errors under self-occlusion, DeltaDorsal’s input remains available when the fingers are hidden. Quantitative evaluation supports this: the system achieves a Mean Per Joint Angle Error (MPJAE) of 6.41°, where MPJAE is the average angular difference between estimated and ground truth joint angles. This result suggests an advantage in scenarios where visual information about the fingers is limited or unreliable.
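To make the metric concrete, here is a minimal sketch of how a mean per-joint angle error could be computed. It assumes joint angles are stored as scalar values in degrees, one column per joint; the function name `mpjae_degrees` and this array layout are illustrative assumptions, and the paper's exact formulation (for example, how multi-axis joint rotations are compared) may differ.

```python
import numpy as np

def mpjae_degrees(pred_angles, true_angles):
    """Mean per-joint angle error in degrees (illustrative definition).

    Both inputs have shape (num_frames, num_joints) and hold angles in degrees.
    """
    pred = np.asarray(pred_angles, dtype=float)
    true = np.asarray(true_angles, dtype=float)
    if pred.shape != true.shape:
        raise ValueError("prediction and ground truth must have the same shape")
    # Wrap differences into [-180, 180) so that 359 deg vs 1 deg counts as 2 deg.
    diff = (pred - true + 180.0) % 360.0 - 180.0
    # Average the absolute error over every frame and every joint.
    return float(np.mean(np.abs(diff)))

# Two frames, two joints: absolute errors are 2, 3, 0 and 10 degrees.
predicted = [[10.0, 20.0], [30.0, 40.0]]
ground_truth = [[12.0, 17.0], [30.0, 50.0]]
print(mpjae_degrees(predicted, ground_truth))  # 3.75
```

Read this way, a reported MPJAE of 6.41° means that, averaged over all joints and frames, an estimated joint angle is off by about six and a half degrees.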

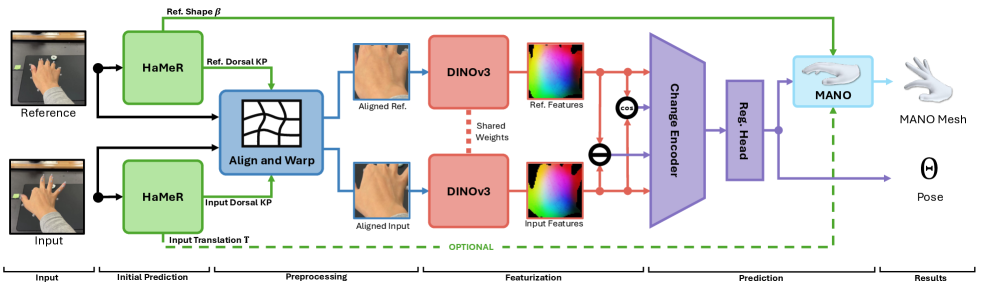

Algorithmic Precision: Deconstructing the System Architecture

The DeltaDorsal system utilizes DINOv3, a self-supervised vision transformer, to generate robust feature embeddings from egocentric RGB imagery. DINOv3 is employed due to its demonstrated capacity for learning effective representations without requiring labeled training data, which is particularly advantageous in dynamic environments with varying lighting and viewpoints. The resulting feature vectors capture essential visual information relevant to hand geometry and are subsequently used as input for downstream processing stages, specifically the quantification of dorsal skin deformations. This approach prioritizes computational efficiency and adaptability by leveraging a pre-trained model rather than training a feature extractor from scratch.

Following feature extraction via DINOv3, the system analyzes the resulting data to detect and measure minute changes in the dorsal skin surface. This quantification relies on identifying patterns within the extracted features that correspond to specific skin deformations, such as wrinkles or stretches. These deformations are then mapped to corresponding changes in joint angles, providing a direct link between visual data and skeletal pose. The magnitude and location of these subtle deformations are key inputs for the subsequent 3D hand pose estimation process, allowing the system to infer hand configuration even with limited visibility.
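The idea of contrasting a moving hand against a relaxed reference and then mapping the difference to joint angles can be sketched in a few lines. This is a toy illustration under stated assumptions, not the authors' architecture: the feature vectors are stand-ins for embeddings from a pretrained backbone, the ridge-regularized linear head replaces whatever learned regressor the paper uses, and every function name here is hypothetical.

```python
import numpy as np

def delta_features(dynamic_feats, relaxed_feats):
    """Combine dynamic-frame features with their change from a relaxed reference.

    dynamic_feats: (num_frames, dim) features of the moving hand.
    relaxed_feats: (1, dim) or (num_frames, dim) features of the relaxed hand.
    """
    dynamic = np.asarray(dynamic_feats, dtype=float)
    relaxed = np.asarray(relaxed_feats, dtype=float)
    delta = dynamic - relaxed  # what changed relative to the relaxed state
    return np.concatenate([dynamic, delta], axis=-1)

def _with_bias(features):
    """Append a constant column so the linear head can learn an offset."""
    return np.hstack([features, np.ones((features.shape[0], 1))])

def fit_linear_head(features, joint_angles, ridge=1e-3):
    """Ridge-regularized least squares from features to joint angles."""
    X = _with_bias(np.asarray(features, dtype=float))
    A = X.T @ X + ridge * np.eye(X.shape[1])
    return np.linalg.solve(A, X.T @ np.asarray(joint_angles, dtype=float))

def predict_angles(features, weights):
    """Apply a fitted linear head to a batch of feature rows."""
    return _with_bias(np.asarray(features, dtype=float)) @ weights

# Synthetic demo: joint angles depend only on the change from the relaxed state.
rng = np.random.default_rng(0)
relaxed = rng.normal(size=(1, 8))       # stand-in relaxed-hand embedding
change = rng.normal(size=(50, 8))       # stand-in skin-deformation signal
dynamic = relaxed + change              # stand-in dynamic-hand embeddings
angles = change @ rng.normal(size=(8, 3))  # three synthetic joint angles

feats = delta_features(dynamic, relaxed)
weights = fit_linear_head(feats, angles)
print(np.abs(predict_angles(feats, weights) - angles).max())
```

The design point the sketch tries to capture is that subtracting a relaxed reference removes appearance that is constant for a given hand, leaving the deformation signal for the regressor to work with.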

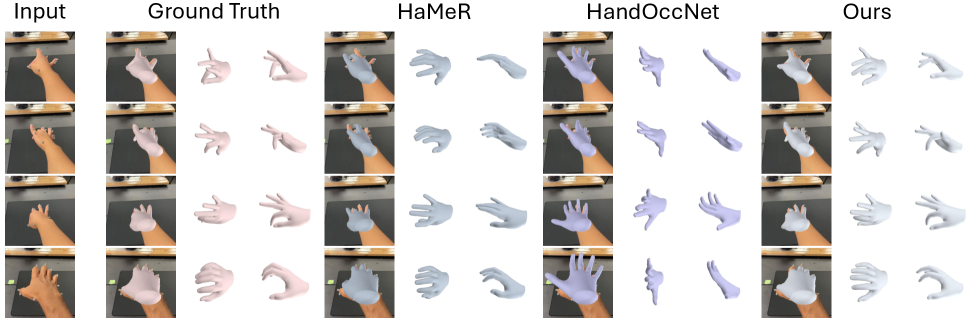

The presented 3D hand pose estimation system achieves accuracy comparable to current state-of-the-art methods while relying exclusively on features derived from the dorsal surface of the hand. Quantitative evaluation shows a reduction of 4.59° in Mean Per Joint Angle Error (MPJAE) in scenarios involving self-occlusion, indicating improved robustness when portions of the hand are obscured from view. This performance is achieved without data from the palmar side and without explicit depth information, which reduces the amount of input the system depends on.

Expanding the Boundaries of Interaction: A New Paradigm

DeltaDorsal distinguishes itself through its ability to interpret hand pose even when visual data is incomplete, opening doors to applications previously constrained by the need for clear, unobstructed views. This resilience stems from predicting hand configurations from subtle dorsal cues and learned patterns rather than from complete visual information. Consequently, DeltaDorsal is less hampered by partial occlusions, a factor that often plagues traditional hand-tracking systems. The implications are considerable, extending from more intuitive virtual and augmented reality experiences to the development of responsive and accessible interfaces for individuals with limited mobility, and to interaction with devices in challenging environments.

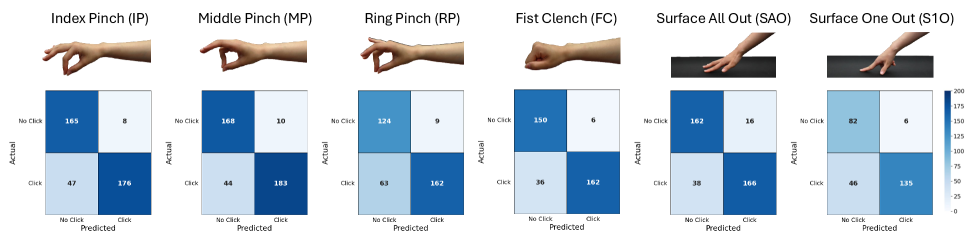

The DeltaDorsal system also supports a new form of user interaction through the detection of isometric clicks, which register selections without any visible hand movement. This capability moves beyond traditional click mechanics, offering a more subtle and potentially more efficient means of control. The system identifies a user’s intent to ‘click’ from nuanced changes in the back of the hand, achieving an accuracy of 0.85. This is particularly useful in scenarios that demand precision or where physical movement is limited, opening doors for more intuitive interfaces in virtual and augmented reality, as well as assistive technologies designed to empower individuals with motor impairments.

Surface click detection, achieved with an accuracy of 0.84, represents a considerable advancement in human-computer interaction. This capability allows users to register selections and commands simply by making contact with a surface, even without traditional button presses or discernible movement. The implications extend significantly into the realms of virtual and augmented reality, where intuitive and precise input methods are crucial for immersive experiences. Furthermore, this technology holds considerable promise for assistive applications, offering individuals with motor impairments an alternative means of interacting with digital systems, potentially restoring a degree of independence and control previously unattainable. By removing the need for fine motor skills, surface click detection broadens accessibility and opens new avenues for inclusive design.

The pursuit of robust hand pose estimation, as demonstrated by DeltaDorsal, echoes a fundamental principle of algorithmic design. The system’s innovative use of dorsal skin features to address self-occlusion isn’t merely a practical improvement; it represents a commitment to deriving solutions from inherent geometric properties. As Andrew Ng aptly stated, “Machine learning is about building systems that can learn from data.” DeltaDorsal exemplifies this by extracting meaningful information from subtle deformations (data often overlooked) to achieve a more accurate and reliable understanding of hand kinematics, prioritizing provable accuracy over heuristic approximations.

What Remains Constant?

The presented work addresses the persistent challenge of hand pose estimation, specifically mitigating self-occlusion through the incorporation of dorsal skin features. However, let N approach infinity – what remains invariant? The current approach, while demonstrating improvement, still relies on learned correlations within a finite dataset. The fundamental problem isn’t merely one of feature extraction, but of establishing a truly geometric understanding of the hand – a provable solution, rather than a statistically probable one. Future work must move beyond the purely data-driven and embrace a more rigorous, mathematically grounded framework.

A critical limitation lies in the assumption of a relatively static environment. The system’s performance will inevitably degrade when faced with complex lighting conditions, deformable objects interacting with the hand, or significant variations in skin texture. To achieve genuine robustness, the field requires algorithms that are invariant to these perturbations – algorithms that can deduce pose from first principles, independent of superficial appearances. Consider the implications of truly generalizable solutions for robotic manipulation, surgical assistance, or even the creation of entirely synthetic, yet anatomically plausible, digital hands.

The incorporation of dorsal features is a step in the right direction, but it merely shifts the complexity, rather than eliminating it. The true elegance, the ultimate solution, will not be found in increasingly sophisticated feature engineering, but in a complete, provable model of hand kinematics and dynamics. Until then, the pursuit remains a fascinating, yet fundamentally incomplete, exercise in applied approximation.

Original article: https://arxiv.org/pdf/2601.15516.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-26 02:51