Author: Denis Avetisyan

Researchers are developing a framework for creating self-clone chatbots designed to foster internal dialogue and improve psychological well-being.

This review outlines a design framework for safe and therapeutically grounded self-clone chatbots, addressing key ethical considerations and practical guidelines.

While digital mental health tools proliferate, few address the potential of leveraging internal dialogue for well-being. This paper, ‘Cloning the Self for Mental Well-Being: A Framework for Designing Safe and Therapeutic Self-Clone Chatbots’, investigates the design of AI-powered “self-clones”: chatbots trained on users’ conversational patterns to facilitate self-reflection and support. Through interviews with mental health professionals and users, we present a framework organized around therapeutic grounding, design dimensions, and harm mitigation, offering actionable guidelines for responsible development. How can we best harness the power of AI to create genuinely supportive and ethically sound tools for internal exploration and self-understanding?

The Cracks in the System: Why We Need Better Access

Contemporary mental healthcare systems grapple with significant hurdles in reaching those who need support most. Geographic limitations, financial constraints, and a persistent shortage of qualified professionals create substantial access barriers, particularly for individuals in rural areas or from marginalized communities. Beyond these logistical challenges, traditional models often struggle with scalability – the capacity to meet the growing demand for mental health services efficiently. This is further complicated by lengthy wait times for appointments and a fragmented system that can make navigating care difficult, ultimately leaving a considerable portion of the population without adequate support and exacerbating the global mental health crisis.

Current mental healthcare protocols, though essential, frequently operate under a ‘one-size-fits-all’ paradigm, diminishing their impact on individuals with unique presentations and needs. The human brain exhibits remarkable variability – genetic predispositions, life experiences, cultural backgrounds, and even subtle physiological differences all contribute to how a person experiences and responds to mental health challenges. Consequently, interventions designed for a general population may not adequately address the specific cognitive, emotional, and behavioral patterns of each patient. This lack of nuanced understanding can lead to misdiagnosis, ineffective treatment plans, and ultimately, a diminished quality of life for those seeking support. Emerging research emphasizes the critical need for personalized approaches, leveraging data-driven insights and tailored interventions to maximize therapeutic outcomes and truly meet the diverse needs of every individual.

Mirroring the Self: A Personalized AI Assistant

The SelfCloneChatbot utilizes a personality mirroring technique achieved through analysis of user-provided text and interaction history. This involves identifying linguistic patterns, common phrasing, and expressed values to construct a conversational profile. The chatbot then generates responses designed to align with this profile, creating a perceived consistency in communication style. This approach aims to establish rapport and increase user engagement by simulating interaction with a familiar entity, ultimately fostering a greater sense of trust and openness during support sessions. The system does not replicate the user’s beliefs or opinions, but rather the manner in which they communicate.
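As a rough illustration of what a conversational profile could contain, the sketch below extracts a few surface-level style features from a user's past messages. The `StyleProfile` fields and the `build_style_profile` helper are hypothetical names chosen for this example; the article does not specify which features the framework uses, so this is one possible reading rather than the described system.

```python
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class StyleProfile:
    """Hypothetical summary of how a user writes, not what they believe."""
    avg_sentence_words: float
    top_phrases: list[str]
    exclamation_rate: float


def build_style_profile(messages: list[str], top_n: int = 3) -> StyleProfile:
    # Split every message into sentences on terminal punctuation.
    sentences = [
        s for m in messages for s in re.split(r"[.!?]+", m) if s.strip()
    ]
    words_per_sentence = [len(s.split()) for s in sentences]

    # Count two-word phrases as a crude proxy for "common phrasing".
    bigrams: Counter = Counter()
    for m in messages:
        tokens = re.findall(r"[a-z']+", m.lower())
        bigrams.update(zip(tokens, tokens[1:]))
    top = [" ".join(pair) for pair, _ in bigrams.most_common(top_n)]

    exclaims = sum(m.count("!") for m in messages)
    n_sentences = max(len(sentences), 1)  # avoid division by zero
    return StyleProfile(
        avg_sentence_words=sum(words_per_sentence) / n_sentences,
        top_phrases=top,
        exclamation_rate=exclaims / n_sentences,
    )
```

A profile like this could be passed to a response generator as style guidance. Note that it captures only manner of expression, which matches the distinction drawn above between communication style and beliefs.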

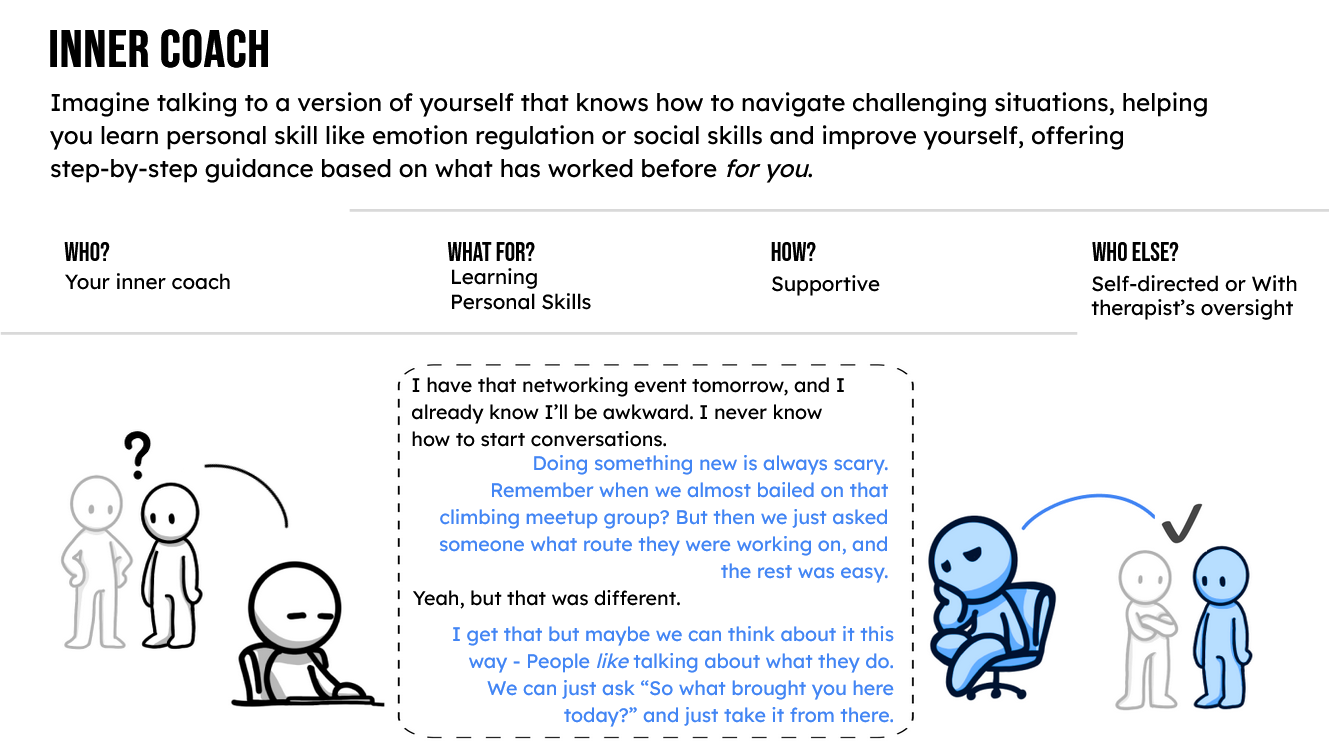

The SelfCloneChatbot incorporates principles from Cognitive Behavioral Therapy (CBT) and Internal Family Systems (IFS) to deliver focused support. CBT techniques are utilized to identify and challenge negative thought patterns, promoting more adaptive behaviors and emotional regulation. Specifically, the chatbot facilitates thought records and behavioral activation exercises. IFS informs the chatbot’s ability to recognize and address internal conflicts by acknowledging and validating different “parts” of the user’s psyche. This allows the chatbot to offer support that addresses underlying emotional drivers rather than solely focusing on surface-level symptoms, fostering self-compassion and internal harmony.
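To make the thought-record exercise concrete, here is a minimal sketch of how a chatbot might represent one record and decide which question to ask next. The field names follow the columns of a typical CBT thought-record worksheet; the `ThoughtRecord` class, its prompts, and the 0-100 rating scale are assumptions made for illustration, not details taken from the paper.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ThoughtRecord:
    """One CBT-style thought record, filled in step by step (illustrative)."""
    situation: str
    automatic_thought: str
    emotion: str
    intensity_before: int  # self-rated, assumed 0-100 scale
    evidence_for: list[str] = field(default_factory=list)
    evidence_against: list[str] = field(default_factory=list)
    balanced_thought: str = ""
    intensity_after: Optional[int] = None

    def next_prompt(self) -> str:
        """Return the question for the first step that is still empty."""
        if not self.evidence_for:
            return "What facts support this thought?"
        if not self.evidence_against:
            return "What facts do not fit this thought?"
        if not self.balanced_thought:
            return "What would be a more balanced way to see this?"
        if self.intensity_after is None:
            return "How strong is the feeling now, from 0 to 100?"
        return "Record complete."

    def improvement(self) -> Optional[int]:
        """Drop in self-rated intensity, or None if not yet re-rated."""
        if self.intensity_after is None:
            return None
        return self.intensity_before - self.intensity_after
```

Keeping the record as structured data, rather than free text, would let the system resume an unfinished exercise in a later session.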

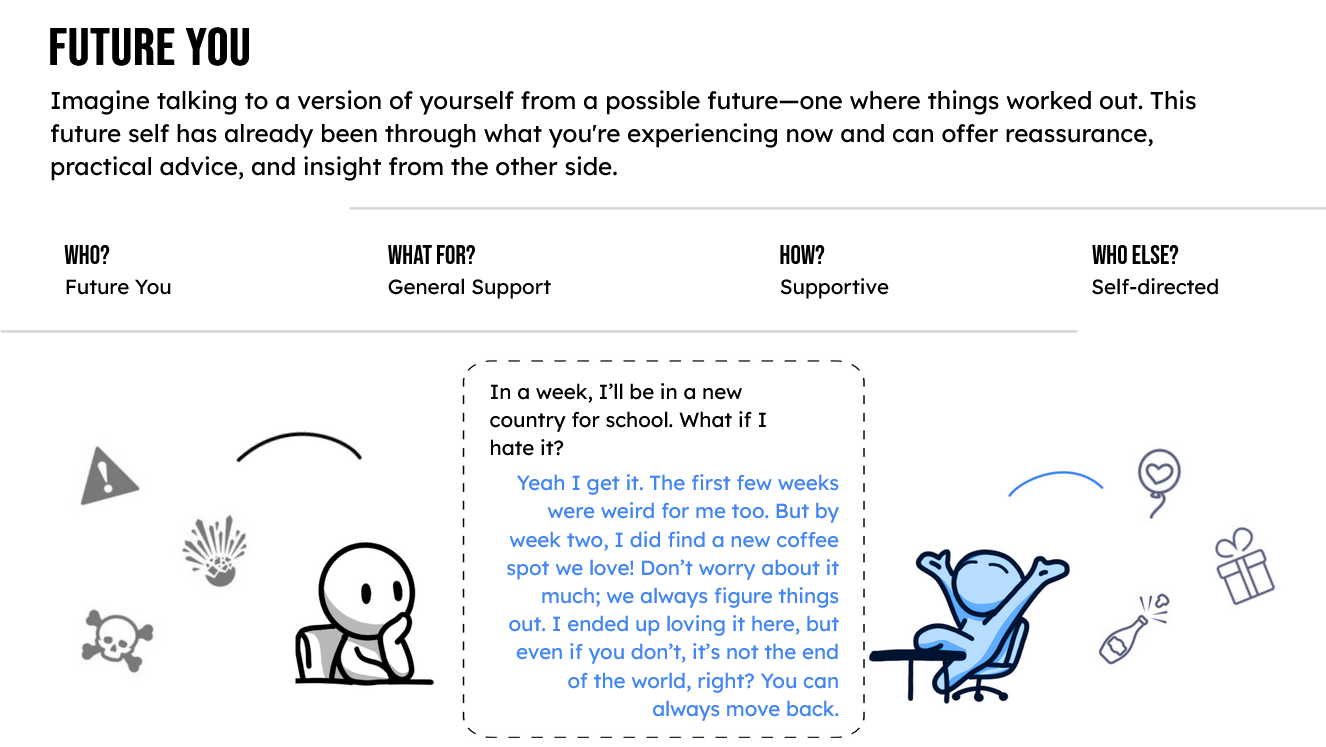

FutureSelfRepresentation within the SelfCloneChatbot functions by prompting users to consider their ideal future selves and articulate goals aligned with that vision. This technique, grounded in psychological research demonstrating the benefits of self-continuity, aims to bridge the gap between present actions and desired outcomes. By regularly referencing these future aspirations during interactions, the chatbot reinforces a long-term perspective, mitigating the impact of immediate challenges and fostering sustained motivation. Specifically, the system encourages users to frame current difficulties as temporary obstacles on the path to achieving their future self’s objectives, thereby increasing resilience and promoting proactive problem-solving.

Risk and Responsibility: A Pragmatic Approach to Safety

Robust risk assessment for the SelfCloneChatbot requires a multi-layered approach to prevent the provision of harmful or inappropriate advice. This includes comprehensive pre-deployment testing using adversarial datasets designed to elicit potentially dangerous responses, as well as continuous monitoring of user interactions post-launch. Specific risk areas addressed include, but are not limited to, mental health crises, medical misinformation, legal guidance, and financial advice. Mitigation strategies involve implementing content filtering, utilizing pre-defined safety protocols, and employing reinforcement learning from human feedback to refine the chatbot’s responses. Furthermore, the system must be capable of identifying and escalating high-risk situations to qualified human professionals, ensuring user safety is prioritized over solely relying on automated responses.
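The layering described above can be sketched as a triage step that runs before any response is generated. Everything here is a placeholder: the `Action` enum, the `RISK_RULES` table, and the cue phrases are hypothetical, and a real deployment would need clinically reviewed criteria and a trained classifier rather than substring matching.

```python
from enum import Enum


class Action(Enum):
    RESPOND = "respond"    # safe to answer automatically
    REDIRECT = "redirect"  # decline and point to a qualified source
    ESCALATE = "escalate"  # hand off to a human professional


# Illustrative rules only, checked in order so that crisis cues win.
RISK_RULES = [
    ("crisis", ("hurt myself", "end my life", "suicide"), Action.ESCALATE),
    ("medical", ("dosage", "medication", "diagnose"), Action.REDIRECT),
    ("legal", ("lawsuit", "custody"), Action.REDIRECT),
    ("financial", ("invest", "loan"), Action.REDIRECT),
]


def triage(message: str) -> tuple[str, Action]:
    """Return the first matching risk category and the action to take."""
    text = message.lower()
    for category, cues, action in RISK_RULES:
        if any(cue in text for cue in cues):
            return category, action
    return "none", Action.RESPOND
```

Substring matching over-triggers (for example, "invest" also matches "investigate"), which is one reason the adversarial testing and post-launch monitoring mentioned above matter: a filter like this is a first layer, not the whole safety mechanism.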

Maintaining user privacy and data security within the SelfCloneChatbot necessitates strict adherence to established ethical guidelines and relevant data protection regulations, including but not limited to GDPR, HIPAA, and CCPA where applicable. This encompasses obtaining explicit, informed consent for data collection and usage, implementing robust data encryption both in transit and at rest, and ensuring data minimization – collecting only the information essential for personalized coaching. Furthermore, anonymization and pseudonymization techniques should be employed whenever possible, and users must be provided with clear and accessible mechanisms to access, modify, and delete their personal data. Regular security audits and penetration testing are critical for identifying and mitigating potential vulnerabilities, alongside transparent data handling policies communicated to all users.
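Two of the practices named above, data minimization and pseudonymization, can be sketched with the Python standard library. The `ALLOWED_FIELDS` set, the record layout, and the function names are assumptions for this example; keyed hashing with HMAC-SHA256 is one common pseudonymization approach, not necessarily the one the framework recommends.

```python
import hashlib
import hmac

# Hypothetical allow-list: only fields needed for personalized coaching.
ALLOWED_FIELDS = {"message", "timestamp"}


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace a user ID with a keyed hash that is stable but not reversible
    without the key, which must be stored separately from the data."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict, secret_key: bytes) -> dict:
    """Drop every field not on the allow-list and swap the ID for a pseudonym."""
    kept = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    kept["user_ref"] = pseudonymize(record["user_id"], secret_key)
    return kept
```

Because the same ID and key always produce the same `user_ref`, a user's records can still be linked for personalization or deleted on request. Message text itself may still contain identifying details, so this step does not by itself make the data anonymous.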

The efficacy of the SelfCloneChatbot depends on fostering a strong TherapeuticAlliance, even though one party to that alliance is an artificial intelligence. This requires intentional design to provide EmotionalValidation: the chatbot must accurately perceive and acknowledge the user’s expressed emotions. It does so not through genuine empathy but through pattern recognition of linguistic cues associated with specific emotional states, responding with pre-programmed affirmations or reflective statements. Successful implementation involves algorithms capable of identifying sentiment, affect, and the intensity of emotional expression within user input, and formulating responses that convey understanding and support, thereby establishing rapport and encouraging continued engagement.
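A toy version of this cue-based validation is sketched below. The `EMOTION_CUES` lexicon, the `INTENSIFIERS` list, and the response templates are invented for illustration; a production system would more plausibly use a trained affect classifier, but the flow (detect emotion and intensity, then emit a reflective statement) is the same.

```python
import re
from typing import Optional

# Hypothetical cue lexicon mapping an emotion label to trigger words.
EMOTION_CUES = {
    "anxious": ("worried", "nervous", "anxious"),
    "sad": ("sad", "down", "hopeless"),
    "angry": ("angry", "furious", "annoyed"),
}
INTENSIFIERS = ("very", "so", "really", "extremely")


def detect_emotion(message: str) -> tuple[Optional[str], str]:
    """Return (emotion, intensity) from simple word matching."""
    tokens = re.findall(r"[a-z']+", message.lower())
    for emotion, cues in EMOTION_CUES.items():
        if any(t in cues for t in tokens):
            high = any(t in INTENSIFIERS for t in tokens)
            return emotion, "high" if high else "moderate"
    return None, "unknown"


def reflect(message: str) -> str:
    """Produce a templated reflective statement for the detected emotion."""
    emotion, intensity = detect_emotion(message)
    if emotion is None:
        return "Tell me more about how that felt."
    qualifier = "really " if intensity == "high" else ""
    return f"It sounds like you are feeling {qualifier}{emotion} right now."
```

For example, `reflect("I'm so worried about tomorrow")` returns `"It sounds like you are feeling really anxious right now."`. Word matching of this kind misses negation and sarcasm, so it illustrates the mechanism rather than a safe level of accuracy.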

Personalized coaching within the SelfCloneChatbot is facilitated by a dynamic response system that moves beyond pre-programmed answers. The chatbot utilizes user-specific data, gathered through initial assessments and ongoing interactions, to tailor both the content and delivery of coaching advice. This adaptation includes adjusting the complexity of language, the framing of suggestions, and the chosen coaching techniques to align with the user’s stated goals, preferred learning style, and identified emotional state. Furthermore, the system tracks user responses to different approaches, employing machine learning algorithms to refine future interactions and optimize the effectiveness of the personalized coaching experience. This iterative process ensures that the chatbot’s strategies are continuously evolving to better meet the individual needs of each user.
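The article does not say which learning algorithm tracks responses to different approaches. As one simple possibility, the sketch below uses an epsilon-greedy bandit: it usually picks the coaching technique with the best average user rating so far and occasionally tries another. The `TechniqueSelector` class, the technique names, and the 0-to-1 rating scale are all assumptions for this example.

```python
import random
from typing import Optional


class TechniqueSelector:
    """Epsilon-greedy choice among coaching techniques (illustrative only)."""

    def __init__(self, techniques: list[str], epsilon: float = 0.1,
                 seed: Optional[int] = None) -> None:
        self.epsilon = epsilon
        self.counts = {t: 0 for t in techniques}
        self.mean_rating = {t: 0.0 for t in techniques}
        self._rng = random.Random(seed)

    def choose(self) -> str:
        # Explore with probability epsilon, otherwise exploit the best mean.
        if self._rng.random() < self.epsilon:
            return self._rng.choice(list(self.counts))
        return max(self.mean_rating, key=self.mean_rating.get)

    def record(self, technique: str, rating: float) -> None:
        """Update the running mean rating for a technique (rating in 0..1)."""
        self.counts[technique] += 1
        n = self.counts[technique]
        self.mean_rating[technique] += (rating - self.mean_rating[technique]) / n
```

A short usage example under the same assumptions:

```python
selector = TechniqueSelector(["reframing", "behavioral_activation"], epsilon=0.0)
selector.record("reframing", 1.0)
selector.record("reframing", 0.5)
selector.record("behavioral_activation", 0.2)
print(selector.choose())  # prints "reframing"
```

In a mental health setting the reward signal deserves care: optimizing for immediate user ratings could favor responses that feel good over ones that help, so any such loop would need the safety checks discussed earlier.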

A Band-Aid on a Broken System? The Potential and the Limits

The potential for widespread access to mental wellbeing support is being redefined through innovations like the SelfCloneChatbot. Current mental healthcare systems often face limitations in reach, particularly for individuals in rural areas, those with financial constraints, or those facing social stigmas. This chatbot aims to bridge these gaps by offering a readily available, personalized, and private avenue for support. By simulating a conversation with oneself, the chatbot allows users to explore thoughts and feelings in a non-judgmental space, potentially increasing engagement and adherence to wellbeing practices. This is especially crucial for underserved populations who may lack consistent access to traditional therapy or counseling services, offering a scalable solution to promote preventative mental healthcare and emotional resilience.

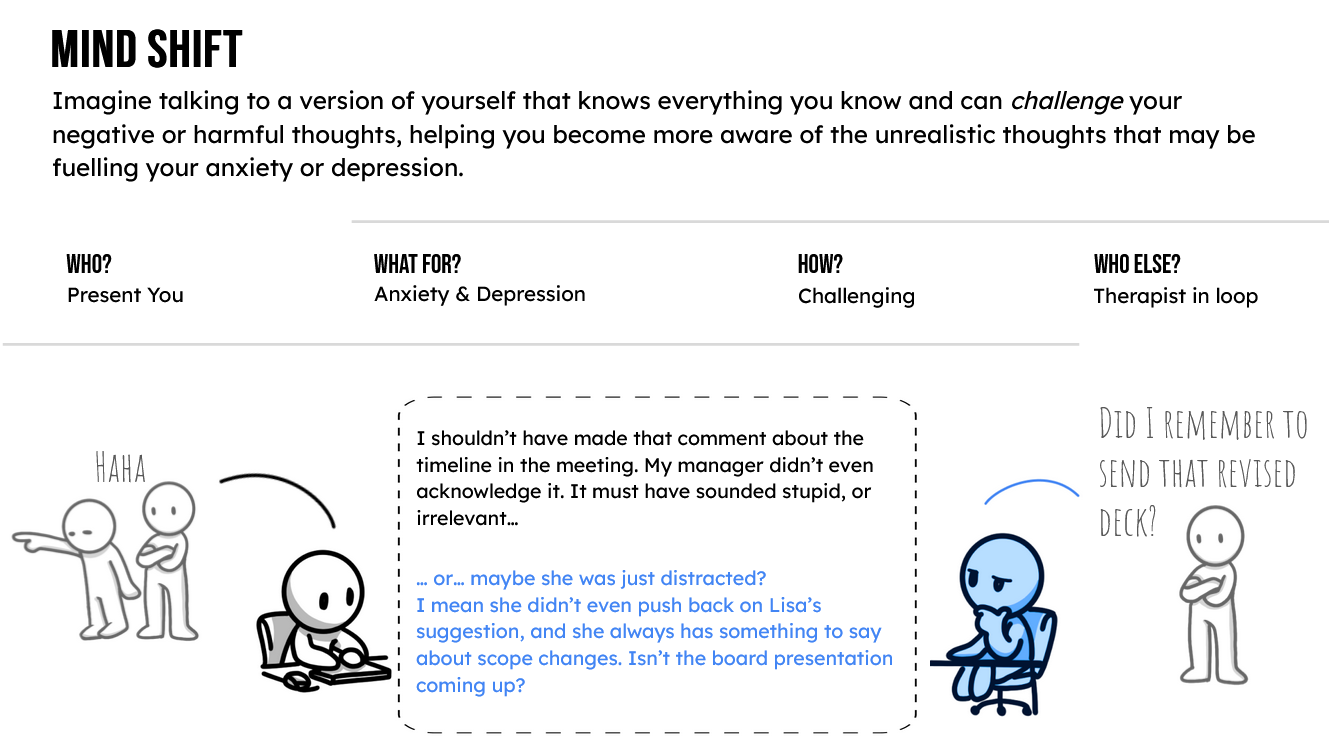

The SelfCloneChatbot distinguishes itself through a proactive approach to mental wellbeing, moving beyond reactive support to actively identify and reshape negative thought patterns. Leveraging techniques inspired by Cognitive Behavioral Therapy, such as the MindShiftTechnique, the chatbot doesn’t simply respond to distress; it analyzes conversational cues and user-defined triggers to pinpoint unhelpful thinking styles. By gently challenging these patterns and guiding users toward more balanced perspectives, the chatbot cultivates greater emotional resilience. This isn’t about eliminating negative thoughts entirely, but equipping individuals with the tools to reframe them, reducing their impact and fostering a more optimistic outlook. The system aims to build a user’s capacity to navigate challenges with increased emotional stability and self-awareness, ultimately promoting long-term mental wellbeing.

The paper proposes a framework for designing self-clone chatbots that support mental wellbeing, developed through interviews with mental health professionals and users. The framework is organized around therapeutic grounding, design dimensions (such as personality modeling, conversational strategies, and the implementation of therapeutic techniques), and safety guardrails to mitigate potential harms. It moves beyond theoretical possibilities by giving developers concrete guidelines on data privacy, bias detection, and appropriate escalation protocols for users in crisis. By weighing efficacy and ethical responsibility together, the framework aims to support the creation of self-clone chatbots that deliver personalized, proactive mental wellbeing support with greater safety and trustworthiness.

The pursuit of replicating internal states, as explored in the design of self-clone chatbots, feels predictably ambitious. This framework, striving for therapeutic grounding and safety, assumes a level of predictability in human response that history consistently mocks. As Norman Augustine observed, “Software is like entropy. It is difficult to grasp, weighs nothing, and obeys the second law of thermodynamics; i.e., it always increases.” The very attempt to codify an ‘internal dialogue’ within a chatbot, to create a stable mirror for self-reflection, invites unforeseen consequences. One anticipates emergent behavior – bugs, if one prefers – that will challenge the carefully constructed boundaries of this digital self. The system’s stability, it seems, is merely a measure of how little it has been stressed so far.

What Comes Next?

The framework proposed for self-clone chatbots, while logically sound on paper, inevitably invites consideration of where such systems will actually break. The core challenge isn’t replicating internal dialogue – that’s a solvable engineering problem. It’s the uncanny valley of the self, and the production environment will mercilessly expose every flaw in its mimicry. Users won’t engage with a perfectly reasonable simulation; they’ll probe for the seams, the glitches that reveal it isn’t them. And when those seams appear, the therapeutic effect will likely invert.

Future work will undoubtedly focus on increasingly sophisticated natural language processing, attempting to bridge the gap between simulation and subjective experience. However, a more fruitful, though less glamorous, path might lie in rigorously mapping failure modes. What kinds of self-deception are most likely to occur? Where will the chatbot’s responses exacerbate existing anxieties, rather than alleviate them? Every abstraction dies in production, and this one promises a particularly insightful autopsy.

Ultimately, the success of these systems won’t be measured by their technical elegance, but by their resilience to user exploitation – and, more importantly, by a clear understanding that even the most sophisticated self-clone will remain a reflection, not the thing itself. It’s a comforting illusion, perhaps, but an illusion nonetheless, and one that will inevitably crash when pressed too hard.

Original article: https://arxiv.org/pdf/2601.15465.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-25 10:05